color version - PET: Python Entre Todos - Python Argentina

(presidential advisors for example). In this case the value of the information would be 10,000, and since we are the ones who define which values count as “much” and which as “little”, we take this value to be “large” (much). We can consider this correct, since any medium that can influence important people should have a high value.

Keeping the same values but changing our function to AUDIENCE + IMPACT, the value of the information would be 1010, which, by the same reasoning, remains high.

Now, if we replace the audience with a large number, 1000, and keep the impact, the original function gives a value of 1 million and the second one gives 2000.

If we consider that we are now likely to impact 1000 presidential advisors, 2000 is still a small value, since we are in the presence of a medium likely to generate a global impact. This shows that multiplication represents the variation of the parameters much better.

• The value vanishes in the absence of audience or impact: when the audience or the impact is 0 (zero), that is, no one sees the medium or no one pays attention to it, the value of the information is also 0 (zero).

This is not trivial, because it suggests that information is worthless if nobody sees it or nobody pays attention to it.
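The arithmetic above can be checked with a short sketch. The audience and impact figures (10 and 1000, then 1000 and 1000) are inferred from the totals quoted in the text, and the function names are illustrative only; they are not part of any library.

```python
# Illustrative only: the names below are assumptions, not Infopython's API.
# Inputs are inferred from the text: 10 * 1000 = 10,000 and 10 + 1000 = 1010.

def value_product(audience, impact):
    """Value of information as AUDIENCE * IMPACT."""
    return audience * impact


def value_sum(audience, impact):
    """The alternative considered in the text: AUDIENCE + IMPACT."""
    return audience + impact


cases = {
    "small audience": (10, 1000),
    "large audience": (1000, 1000),
    "no audience": (0, 1000),
}

# Store (product, sum) for each case so both functions can be compared.
results = {}
for label, (audience, impact) in cases.items():
    results[label] = (
        value_product(audience, impact),
        value_sum(audience, impact),
    )
    print(label, results[label])
```

With no audience the product is 0 while the sum is still 1000, so only the product satisfies the property that the value vanishes when nobody sees the medium.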

Infopython<br />

There is a wide variety of public services that extract statistics and data on new media (web, Twitter, etc.), including:

• Klout (http://klout.com/)<br />

• Compete (http://www.compete.com/)<br />

• Alexa (http://www.alexa.com/)<br />

These are just a few examples. Infopython focuses on providing a simple API for valuing media of any type through agenda-setting (other theories may be implemented in the future), using the aforementioned services.

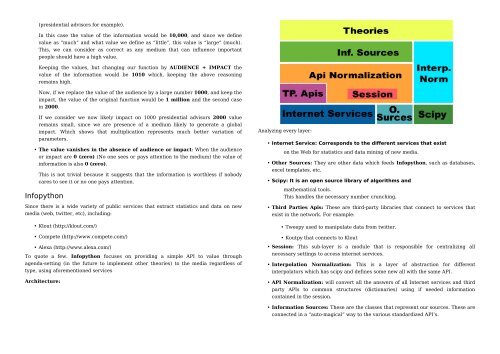

Architecture:

Analyzing each layer:

• Internet Service: the different services available on the web for statistics and data mining of new media.

• Other Sources: other data that feed Infopython, such as databases, Excel templates, etc.

• SciPy: an open source library of algorithms and mathematical tools. It handles the necessary number crunching.

• Third Party APIs: third-party libraries that connect to services available on the network. For example:

• Tweepy, used to manipulate data from Twitter.

• Koutpy, which connects to Klout.

• Session: this sub-layer is a module responsible for centralizing all the settings needed to access internet services.

• Interpolation Normalization: an abstraction layer over the different interpolators that SciPy provides. It also defines some new ones, all with the same API.

• API Normalization: converts the responses from all internet services and third-party APIs into common structures (dictionaries), using information contained in the session if needed.

• Information Sources: the classes that represent our sources. They are connected in an “auto-magical” way to the various normalized APIs.
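As a rough illustration of how the Session and API Normalization layers could fit together, here is a minimal sketch. Every name in it (`Session`, `normalizer`, `normalize`, the `max_score` setting) and the raw response shapes are assumptions made for this example; none of them is taken from Infopython's actual code.

```python
# Hypothetical sketch: these names are NOT Infopython's real API.

class Session:
    """Centralizes the settings needed to access each internet service."""

    def __init__(self):
        self._settings = {}

    def configure(self, service, **options):
        self._settings.setdefault(service, {}).update(options)

    def settings_for(self, service):
        # Return a copy so normalizers cannot mutate the session.
        return dict(self._settings.get(service, {}))


# Registry mapping a service name to its normalizer function.
NORMALIZERS = {}


def normalizer(service):
    """Decorator that registers a normalizer for one service."""
    def register(func):
        NORMALIZERS[service] = func
        return func
    return register


@normalizer("twitter")
def normalize_twitter(raw, settings):
    # The raw response shape is invented for illustration.
    return {
        "service": "twitter",
        "name": raw["screen_name"],
        "audience": raw["followers_count"],
    }


@normalizer("klout")
def normalize_klout(raw, settings):
    # "max_score" is a made-up session setting used to rescale the score.
    max_score = settings.get("max_score", 100)
    return {
        "service": "klout",
        "name": raw["user"],
        "impact": raw["score"] / max_score,
    }


def normalize(service, raw, session):
    """Convert a raw service response into a common dictionary."""
    return NORMALIZERS[service](raw, session.settings_for(service))


session = Session()
session.configure("klout", max_score=100)

tw = normalize("twitter", {"screen_name": "example", "followers_count": 1000}, session)
kl = normalize("klout", {"user": "example", "score": 50}, session)
print(tw)
print(kl)
```

An information source class could then look up normalizers by name in the registry, which is one possible way to get the kind of automatic wiring the last bullet describes.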