1FfUrl0

1FfUrl0

1FfUrl0

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

Regression – Recommendations<br />

Setting hyperparameters in a smart way<br />

In the preceding example, we set the penalty parameter to 1. We could just as well<br />

have set it to 2 (or half, or 200, or 20 million). Naturally, the results vary each time.<br />

If we pick an overly large value, we get underfitting. In extreme case, the learning<br />

system will just return every coefficient equal to zero. If we pick a value that is too<br />

small, we overfit and are very close to OLS, which generalizes poorly.<br />

How do we choose a good value? This is a general problem in machine learning:<br />

setting parameters for our learning methods. A generic solution is to use crossvalidation.<br />

We pick a set of possible values, and then use cross-validation to choose<br />

which one is best. This performs more computation (ten times more if we use 10<br />

folds), but is always applicable and unbiased.<br />

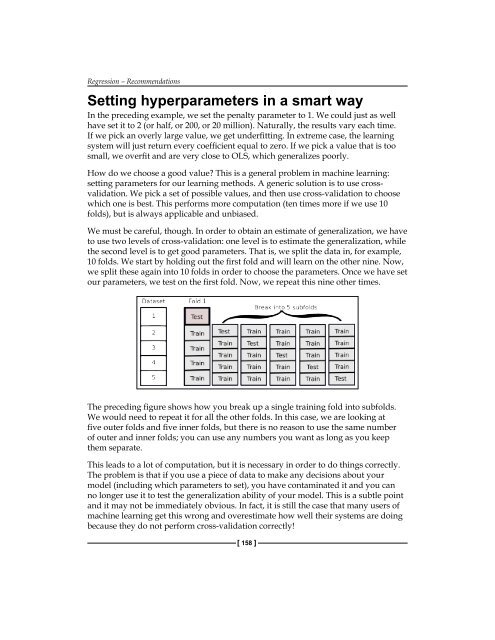

We must be careful, though. In order to obtain an estimate of generalization, we have<br />

to use two levels of cross-validation: one level is to estimate the generalization, while<br />

the second level is to get good parameters. That is, we split the data in, for example,<br />

10 folds. We start by holding out the first fold and will learn on the other nine. Now,<br />

we split these again into 10 folds in order to choose the parameters. Once we have set<br />

our parameters, we test on the first fold. Now, we repeat this nine other times.<br />

The preceding figure shows how you break up a single training fold into subfolds.<br />

We would need to repeat it for all the other folds. In this case, we are looking at<br />

five outer folds and five inner folds, but there is no reason to use the same number<br />

of outer and inner folds; you can use any numbers you want as long as you keep<br />

them separate.<br />

This leads to a lot of computation, but it is necessary in order to do things correctly.<br />

The problem is that if you use a piece of data to make any decisions about your<br />

model (including which parameters to set), you have contaminated it and you can<br />

no longer use it to test the generalization ability of your model. This is a subtle point<br />

and it may not be immediately obvious. In fact, it is still the case that many users of<br />

machine learning get this wrong and overestimate how well their systems are doing<br />

because they do not perform cross-validation correctly!<br />

[ 158 ]