Planning under Uncertainty in Dynamic Domains - Carnegie Mellon ...

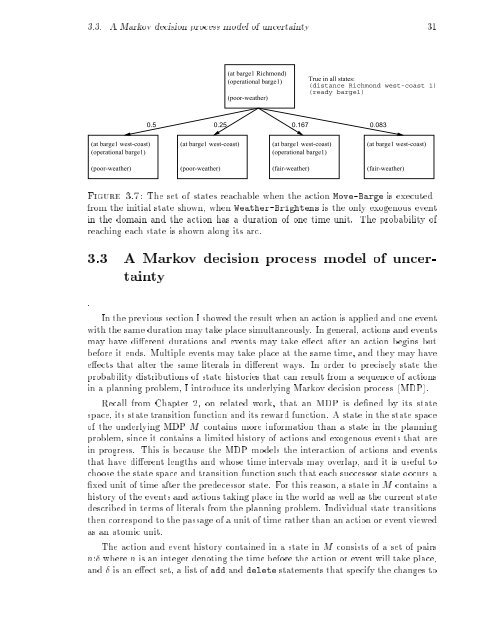

Figure 3.7: The set of states reachable when the action Move-Barge is executed from the initial state shown, when Weather-Brightens is the only exogenous event in the domain and the action has a duration of one time unit. The probability of reaching each state is shown along its arc. (Initial state: (at barge1 Richmond), (operational barge1), (poor-weather). True in all states: (distance Richmond west-coast 1), (ready barge1). The four reachable states each contain (at barge1 west-coast) and are reached with probabilities 0.5, 0.25, 0.167 and 0.083.)

3.3 A Markov decision process model of uncertainty

In the previous section I showed the result of applying an action when a single event with the same duration may take place simultaneously. In general, actions and events may have different durations, and an event may take effect after an action begins but before it ends. Multiple events may take place at the same time, and their effects may alter the same literals in different ways. To state precisely the probability distributions over state histories that can result from a sequence of actions in a planning problem, I introduce the problem's underlying Markov decision process (MDP).

Recall from Chapter 2, on related work, that an MDP is defined by its state space, its state transition function and its reward function.
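
The three components just listed can be made concrete with a small sketch. The Python below is an illustration under stated assumptions, not the representation used in this thesis: a state is assumed to be a frozenset of ground literals, the transition function is assumed to return a dictionary from successor states to probabilities, and the names `MDP`, `history_distribution`, `expected_final_reward`, the action `wait` and the one-literal weather domain with its 0.25 brightening probability are all hypothetical. It shows how a transition function determines the probability distribution over state histories produced by a sequence of actions.

```python
from dataclasses import dataclass
from typing import Callable, Dict, FrozenSet, List, Tuple

# Assumed encoding: a state is a set of ground literals written as strings.
State = FrozenSet[str]
Action = str
History = Tuple[State, ...]


@dataclass
class MDP:
    """Hypothetical container for the three components of an MDP."""
    states: List[State]
    # transition(s, a) maps each successor state to its probability.
    transition: Callable[[State, Action], Dict[State, float]]
    reward: Callable[[State], float]


def history_distribution(mdp: MDP, start: State,
                         actions: List[Action]) -> Dict[History, float]:
    """Probability of each state history produced by executing `actions` from `start`."""
    dist: Dict[History, float] = {(start,): 1.0}
    for a in actions:
        extended: Dict[History, float] = {}
        for hist, p in dist.items():
            # Extend every history by each possible successor of its last state.
            for succ, q in mdp.transition(hist[-1], a).items():
                extended[hist + (succ,)] = p * q
        dist = extended
    return dist


def expected_final_reward(mdp: MDP, dist: Dict[History, float]) -> float:
    """Expected reward of the final state, taken over all histories."""
    return sum(p * mdp.reward(h[-1]) for h, p in dist.items())


# Hypothetical one-literal domain: poor weather brightens with probability 0.25 per step.
POOR: State = frozenset({"poor-weather"})
FAIR: State = frozenset({"fair-weather"})


def weather_transition(s: State, a: Action) -> Dict[State, float]:
    return {POOR: 0.75, FAIR: 0.25} if s == POOR else {FAIR: 1.0}


toy = MDP(states=[POOR, FAIR], transition=weather_transition,
          reward=lambda s: 1.0 if s == FAIR else 0.0)
dist = history_distribution(toy, POOR, ["wait", "wait"])
print(len(dist))                         # 3 distinct histories
print(expected_final_reward(toy, dist))  # 0.4375
```

In this toy run, two steps from (poor-weather) yield three histories with probabilities 0.5625, 0.1875 and 0.25. Each transition in the sketch stands for one whole action; handling actions and events of differing, overlapping durations requires a richer state, as the following paragraphs explain.
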
A state in the state space of the underlying MDP M contains more information than a state in the planning problem, since it also contains a limited history of the actions and exogenous events that are in progress. This is because M models the interaction of actions and events that have different durations and whose time intervals may overlap, and it is useful to choose the state space and transition function so that each successor state occurs a fixed unit of time after its predecessor. A state in M therefore contains a history of the events and actions taking place in the world, as well as the current state described in terms of literals from the planning problem. Individual state transitions then correspond to the passage of one unit of time, rather than to an action or event viewed as an atomic unit.

The action and event history contained in a state in M consists of a set of pairs, each written as n: followed by an effect set, where n is an integer denoting the time before the action or event will take place, and the effect set is a list of add and delete statements that specify the changes to