to uphold our prior confidence that the novice could not have played deliberately, we would still be compelled to admit that the connection between his moves was advisable and appropriate. A similar remark is applicable to the hypothesis on the regularity of the phenomena of nature. As much as we would like to believe in miracles, we will inevitably have to admit the regularities in order to explain the data available. However, it is impossible to dissuade anyone from believing in the existence of wonders taking place beyond the domain of precise observation; and the laws, until now considered as indisputable, will perhaps turn out to be freaks of chance.

40. (§4.5). For example, before the theory of logarithms was discovered, that it had been ln 2.

41. (§4.5). That is, F(z) = z.

42. (§4.5). {The author apparently had in mind the Lexian theory of dispersion. See the description of the appropriate work of Markov and Chuprov in Sheynin (1996, §14).}

References

Bernstein, S.N. (1927), (Theory of Probability). Fourth ed.: M., 1946.
Bohlmann, G. (ca. 1905), Technique de l’assurance sur la vie. Enc. Sci. Math., t. 1, vol. 4. German text: Lebensversicherungsmathematik. Enc. Math. Wiss., Bd. 1, Tl. 2, pp. 852 – 917.
Boole, G. (1854), On the conditions by which the solution of questions in the theory of probabilities are limited. Studies in Logic and Probability. London, 1952, pp. 280 – 288.
Borel, E. (1914), Le hasard. Paris. Several subsequent editions.
Hochkirchen, T. (1999), Die Axiomatisierung der Wahrscheinlichkeitsrechnung. Göttingen.
Ibragimov, I.A. (2000). Translation: On Bernstein’s work in probability. Transl. Amer. Math. Soc., ser. 2, vol. 205, 2002, pp. 83 – 104.
Kolmogorov, A.N. (1948, in Russian), E.E. Slutsky. An obituary. Math. Scientist, vol. 27, 2002, pp. 67 – 74.
Kolmogorov, A.N., Sarmanov, O.V.
(1960), Bernstein’s works on the theory of probability.Teoria Veroiatnostei i Ee Primenenia, vol. 5, pp. 215 – 221. That periodical is beingtranslated as Theory of <strong>Probability</strong> <strong>and</strong> Its Applications.Laplace, P.S. (1812), Théorie analytique des probabilités. Oeuvr. Compl., t. 7. Paris, 1886.Laplace, P.S. (1814), Essai philosophique sur les probabilités. English translation:Philosophical Essay on Probabilities. New York, 1995.Markov, A.A. (1912), Wahrscheinlichkeitsrechnung. Leipzig – Berlin. This is a translation ofthe Russian edition of 1908. Other Russian editions: 1900, 1913 (to which Bernsteinreferred) <strong>and</strong> 1924.Schröder, E. (1890 – 1895), Vorlesungen über der Algebra der Logik, Bde 1 – 3. Leipzig.<strong>Sheynin</strong>, O. (1996), Chuprov. Göttingen.Slutsky, E.E. (1922), On the logical foundation of the calculus of probability. Translated inthis collection.--- (1925), On the law of large numbers. Vestnik Statistiki, No. 7/9, pp. 1 – 55. (R)4. S.N. Bernstein. On the Fisherian “Confidence” ProbabilitiesBernstein, S.N. (Coll. Works), vol. 4. N.p., 1964, pp. 386 – 3931. This paper aims at making public <strong>and</strong> at somewhat extending my main remarksformulated after the reports of Romanovsky <strong>and</strong> Kolmogorov at the conference onmathematical statistics in November 1940 1 . So as better to ascertain the principles of thematter, I shall try to write as elementary as possible <strong>and</strong> will consider a case in which the

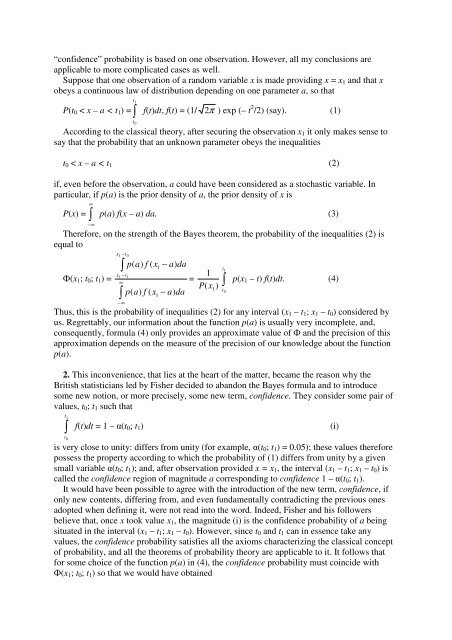

“confidence” probability is based on one observation. However, all my conclusions areapplicable to more complicated cases as well.Suppose that one observation of a r<strong>and</strong>om variable x is made providing x = x 1 <strong>and</strong> that xobeys a continuous law of distribution depending on one parameter a, so thatP(t 0 < x – a < t 1 ) = 1 t0tf(t)dt, f(t) = (1/2 π ) exp (– t 2 /2) (say). (1)According to the classical theory, after securing the observation x 1 it only makes sense tosay that the probability that an unknown parameter obeys the inequalitiest 0 < x – a < t 1 (2)if, even before the observation, a could have been considered as a stochastic variable. Inparticular, if p(a) is the prior density of a, the prior density of x is∞P(x) = p(a) f(x – a) da. (3)−∞Therefore, on the strength of the Bayes theorem, the probability of the inequalities (2) isequal to(x 1 ; t 0 ; t 1 ) =x −t1x −t∞−∞0p(a)f ( x − a)da1 1=p(a)f ( x − a)da111P(x 1) 1 t0tp(x 1 – t) f(t)dt. (4)Thus, this is the probability of inequalities (2) for any interval (x 1 – t 1 ; x 1 – t 0 ) considered byus. Regrettably, our information about the function p(a) is usually very incomplete, <strong>and</strong>,consequently, formula (4) only provides an approximate value of <strong>and</strong> the precision of thisapproximation depends on the measure of the precision of our knowledge about the functionp(a).2. This inconvenience, that lies at the heart of the matter, became the reason why theBritish statisticians led by Fisher decided to ab<strong>and</strong>on the Bayes formula <strong>and</strong> to introducesome new notion, or more precisely, some new term, confidence. 
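
The dependence of formula (4) on the prior density can be illustrated numerically. The following is only a minimal sketch under stated assumptions: the normal prior, the helper names (`simpson`, `normal_prior`, `posterior_probability`), the observed value $x_1 = 2$, the limits $t_0 = -1.96$, $t_1 = 1.96$ and the truncation of the infinite integral are all chosen here for illustration and do not come from the paper.

```python
import math


def f(t):
    """Standard normal density, the example law f(t) in formula (1)."""
    return math.exp(-t * t / 2.0) / math.sqrt(2.0 * math.pi)


def simpson(g, lo, hi, n=2000):
    """Composite Simpson rule for the integral of g over [lo, hi]; n must be even."""
    h = (hi - lo) / n
    total = g(lo) + g(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * g(lo + k * h)
    return total * h / 3.0


def normal_prior(mean, sd):
    """Hypothetical prior density p(a): normal with the given mean and sd."""
    def p(a):
        z = (a - mean) / sd
        return math.exp(-z * z / 2.0) / (sd * math.sqrt(2.0 * math.pi))
    return p


def posterior_probability(x1, t0, t1, p, cutoff=12.0):
    """Right-hand side of formula (4): probability that t0 < x1 - a < t1.

    The infinite range of the denominator P(x1) is truncated to
    [-cutoff, cutoff] in t, where f(t) is negligibly small (an assumption
    that suits the normal f used here).
    """
    integrand = lambda t: p(x1 - t) * f(t)
    return simpson(integrand, t0, t1) / simpson(integrand, -cutoff, cutoff)


# Illustrative values only: one observation x1 and a symmetric pair (t0, t1).
x1, t0, t1 = 2.0, -1.96, 1.96
confidence = simpson(f, t0, t1)  # integral of f over (t0, t1); does not involve p(a)
for sd in (1.0, 10.0, 100.0):
    post = posterior_probability(x1, t0, t1, normal_prior(0.0, sd))
    print(f"prior sd = {sd:6.1f}: posterior = {post:.4f}, integral of f = {confidence:.4f}")
```

Under these assumptions, a narrow prior gives a value of (4) that differs noticeably from the integral of $f$ over $(t_0, t_1)$, whereas a very diffuse prior brings the two close together.
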
They consider some pair of values $t_0$, $t_1$ such that

$$\int_{t_0}^{t_1} f(t)\,dt = 1 - \varepsilon(t_0; t_1) \tag{i}$$

is very close to unity (for example, $\varepsilon(t_0; t_1) = 0.05$); these values therefore possess the property that the probability of (1) differs from unity by a given small quantity $\varepsilon(t_0; t_1)$; and, after observation has provided $x = x_1$, the interval $(x_1 - t_1;\ x_1 - t_0)$ is called the confidence region of the magnitude $a$ corresponding to confidence $1 - \varepsilon(t_0; t_1)$.

It would have been possible to agree with the introduction of the new term, confidence, if only new contents, differing from, and even fundamentally contradicting, those adopted when defining it were not read into the word. Indeed, Fisher and his followers believe that, once $x$ took the value $x_1$, the magnitude (i) is the confidence probability of $a$ being situated in the interval $(x_1 - t_1;\ x_1 - t_0)$. However, since $t_0$ and $t_1$ can in essence take any values, the confidence probability satisfies all the axioms characterizing the classical concept of probability, and all the theorems of probability theory are applicable to it. It follows that for some choice of the function $p(a)$ in (4), the confidence probability must coincide with $\Phi(x_1; t_0; t_1)$ so that we would have obtained
