Course Notes - Department of Mathematics and Statistics

Course Notes - Department of Mathematics and Statistics

Course Notes - Department of Mathematics and Statistics

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

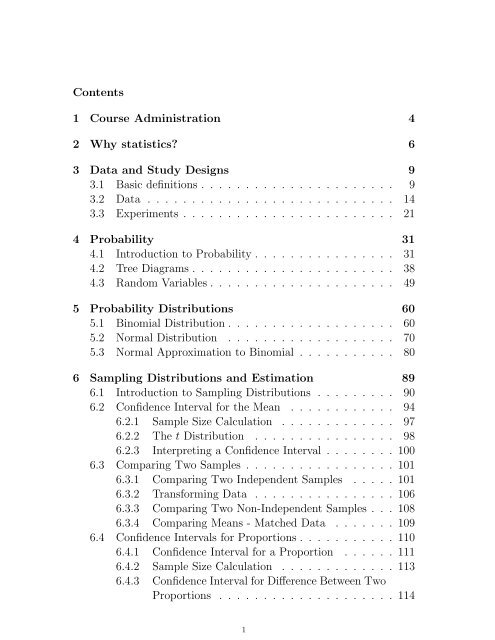

Contents1 <strong>Course</strong> Administration 42 Why statistics? 63 Data <strong>and</strong> Study Designs 93.1 Basic definitions . . . . . . . . . . . . . . . . . . . . . . 93.2 Data . . . . . . . . . . . . . . . . . . . . . . . . . . . . 143.3 Experiments . . . . . . . . . . . . . . . . . . . . . . . . 214 Probability 314.1 Introduction to Probability . . . . . . . . . . . . . . . . 314.2 Tree Diagrams . . . . . . . . . . . . . . . . . . . . . . . 384.3 R<strong>and</strong>om Variables . . . . . . . . . . . . . . . . . . . . . 495 Probability Distributions 605.1 Binomial Distribution . . . . . . . . . . . . . . . . . . . 605.2 Normal Distribution . . . . . . . . . . . . . . . . . . . 705.3 Normal Approximation to Binomial . . . . . . . . . . . 806 Sampling Distributions <strong>and</strong> Estimation 896.1 Introduction to Sampling Distributions . . . . . . . . . 906.2 Confidence Interval for the Mean . . . . . . . . . . . . 946.2.1 Sample Size Calculation . . . . . . . . . . . . . 976.2.2 The t Distribution . . . . . . . . . . . . . . . . 986.2.3 Interpreting a Confidence Interval . . . . . . . . 1006.3 Comparing Two Samples . . . . . . . . . . . . . . . . . 1016.3.1 Comparing Two Independent Samples . . . . . 1016.3.2 Transforming Data . . . . . . . . . . . . . . . . 1066.3.3 Comparing Two Non-Independent Samples . . . 1086.3.4 Comparing Means - Matched Data . . . . . . . 1096.4 Confidence Intervals for Proportions . . . . . . . . . . . 1106.4.1 Confidence Interval for a Proportion . . . . . . 1116.4.2 Sample Size Calculation . . . . . . . . . . . . . 1136.4.3 Confidence Interval for Difference Between TwoProportions . . . . . . . . . . . . . . . . . . . . 1141

7 Hypothesis Testing 1197.1 Hypothesis Test for Mean . . . . . . . . . . . . . . . . 1217.2 Hypothesis Test For Proportion . . . . . . . . . . . . . 1227.3 Hypothesis Test Difference Two Means . . . . . . . . . 1237.4 Hypothesis Test Difference Two Proportions . . . . . . 1277.5 Interpreting the p-value . . . . . . . . . . . . . . . . . 1297.6 Significance <strong>and</strong> Conclusiveness . . . . . . . . . . . . . 1307.7 Power . . . . . . . . . . . . . . . . . . . . . . . . . . . 1338 Contingency Tables 1398.1 Introduction to Contingency Tables . . . . . . . . . . . 1408.2 Relative Risk (RR) . . . . . . . . . . . . . . . . . . . . 1438.3 Attributable Risk (AR) . . . . . . . . . . . . . . . . . . 1448.4 Odds Ratio (OR) . . . . . . . . . . . . . . . . . . . . . 1448.5 Confidence Intervals for Risk Measures. . . . . . . . . . 1468.6 Chi Square Test for Contingency Tables. . . . . . . . . 1538.6.1 Simpson’s Paradox . . . . . . . . . . . . . . . . 1588.6.2 Test for Trend . . . . . . . . . . . . . . . . . . . 1609 ANOVA 1669.1 One Factor ANOVA . . . . . . . . . . . . . . . . . . . 1679.1.1 The ANOVA Model . . . . . . . . . . . . . . . . 1699.1.2 Partitioning the Sum <strong>of</strong> Squares . . . . . . . . . 1699.1.3 F Distribution . . . . . . . . . . . . . . . . . . . 1719.1.4 Computational Formulae . . . . . . . . . . . . . 1719.2 Post ANOVA Analysis . . . . . . . . . . . . . . . . . . 1749.2.1 CI for the Mean . . . . . . . . . . . . . . . . . . 1769.2.2 CI for the Difference Between Two Means . . . 1779.2.3 Multiple Comparisons . . . . . . . . . . . . . . 1789.3 ANOVA Assumptions . . . . . . . . . . . . . . . . . . . 1789.4 Two factor ANOVA . . . . . . . . . . . . . . . . . . . . 1799.4.1 Block Designs . . . . . . . . . . . . . . . . . . . 1839.5 Two Factor Factorial Experiments . . . . . . . . . . . . 1869.5.1 Interpreting the Interaction Effect . . . . . . . . 1912

10 Regression 20210.1 Introduction to Regression . . . . . . . . . . . . . . . . 20310.2 Checking fit <strong>of</strong> Regression . . . . . . . . . . . . . . . . 21210.3 Confidence Intervals <strong>and</strong> Regression . . . . . . . . . . . 21810.4 Correlation . . . . . . . . . . . . . . . . . . . . . . . . 22310.5 Multiple regression . . . . . . . . . . . . . . . . . . . . 23010.6 Are all the variables required? . . . . . . . . . . . . . . 23810.7 Analysis <strong>of</strong> Covariance . . . . . . . . . . . . . . . . . . 24510.8 Logistic Regression . . . . . . . . . . . . . . . . . . . . 253A Tools for assignments 267B Summary <strong>of</strong> Formulae 2713

1 <strong>Course</strong> AdministrationAdministration• Lectures: Monday, Tuesday, Wednesday, Thursday ∗ at 8am or10am• Tutorials will be cafeteria style, students are free to attend atany scheduled tutorial time. Tutorials are held in the North CAL(computer laboratory, opposite entrance to the Science Library inthe Science III building)..• Tutorials will be held 9am to 3pm Tuesday through Thursday4

Resource Page<strong>Department</strong> <strong>of</strong> <strong>Mathematics</strong> <strong>and</strong> <strong>Statistics</strong> (http://www.maths.otago.ac.nz)• Download course information• Access <strong>and</strong> submit assignments <strong>and</strong> mastery tests (assignmentsavailable from 9am Mondays)• Book slots for mastery tests• View assignment/test results• Questionnaire - please fill out (voluntary)Assessment• Internal assessment will count for 1/3 <strong>of</strong> your final mark withplussage (your internal assessment counts only if it helps i.e. wewill take the better <strong>of</strong> your final mark or 1/3 internal + 2/3 final).• Internal component is made up <strong>of</strong> two parts:- 1/3 from assignments, 8 in total (due Friday 9am in semesterweeks 2-4, 6-7 <strong>and</strong> 9-11)- 2/3 from short mastery tests, 3 in total (in semester weeks 5,8 <strong>and</strong> 12)Assignments/Tests• All on-line• Assignments can be submitted from anywhere in the world• You will need access to R- Free s<strong>of</strong>tware which you can download5

- CAL labs- Halls <strong>of</strong> Residence• Tests are only administered in North CALPolicy on collaboration• Tests <strong>and</strong> assignment questions are individualised• There may be some questions requiring written answers• On assignments (but not tests) we are happy for students to discussanswers. However,• We insist that you answer questions in your own words.- Our s<strong>of</strong>tware checks students answers against the entire class2 Why statistics?Why do we study statistics?• <strong>Statistics</strong> refers to both data <strong>and</strong> to the theory <strong>and</strong> logic supportingthe toolkit <strong>of</strong> techniques we use to study data.• The main reason students study ‘<strong>Statistics</strong>’ is because they areforced to...• We are curious <strong>and</strong> we collect data in order to underst<strong>and</strong> betterthe world around us- the persuasive power <strong>of</strong> numbers• Published numbers have a power they <strong>of</strong>ten don’t deserve.- if a statistic seems unbelievable, don’t believe it• Research <strong>and</strong> management (policy).6

3 Data <strong>and</strong> Study Designs3.1 Basic definitionsDefinitions• Each question has a numerical answer (counts, probabilities, proportions,...).• Need a toolkit for numerical description <strong>and</strong> for answering numericalquestions.• Inference – formal name given to learning from data using statisticaltools.• We need some agreed terminology.Population• Population Complete set <strong>of</strong> entities or elements or units or subjectsthat we wish to describe or make inference about. Real orhypothetical.Well-defined- The collection <strong>of</strong> words in poems by W. B. Yeats.- All the rimu trees in Tongariro National Park.Not well-defined- The population <strong>of</strong> New Zeal<strong>and</strong>. Right now? Past? Future?- Banks Peninsula Hector’s dolphins. Which dolphins shouldwe include?- Target population in a drug trial. All alive or who will ever beborn? Over a certain age? Only those people who can affordthe drug?Which population have we studied? Which are we interested in?9

Sample• A Sample is a subset <strong>of</strong> a population.A ‘census’ is a complete enumeration/sample <strong>of</strong> a population.Rare.Samples need to be ‘representative’. Only guaranteed by r<strong>and</strong>omselection.What if r<strong>and</strong>om sampling is impossible?- We have to assume that our sample is representative.- Not testable <strong>and</strong> dangerous. Faith in our procedure.10

Parameter• Parameter: Fixed number that characterises a population.- The number <strong>of</strong> people alive in NZ at midnight on 1/1/2000.- The average height <strong>of</strong> all 2013 STAT110 students.Parameters are either known or unknown, hypothetical, or real.Much <strong>of</strong> statistics is about obtaining intelligent guesses for parameters.Usually represented by a Greek letter: α, β, γ, δ, . . ..Hypothetical?• Does the parameter really exists?WomenMenTime140 160 180 200●●Asymptote = 2hr 14min 51sec●●● ●● ●●●●●●●● ● ●● ● ●● ●●●Time130 140 150 160 170●●●●●Asymptote = 1hr 58min 48sec●●●●●●● ●●●● ●●●● ● ●●●●●●●●●1970 1980 1990 20001920 1960 2000YearYearR<strong>and</strong>om variable• R<strong>and</strong>om variable Mathematically precise definition not reallyhelpful.11

2h 15:25 (2003)2h 03:38 (2011)- An unknown quantity that varies in an unpredictable way.- Once observed we refer to a realised value.12

TypesDiscrete - can put in one-to-one correspondence with the countingnumbers.Continuous - can be expressed on a continuous scale in whichevery value is possible.Categorical - restricted to one <strong>of</strong> a set <strong>of</strong> categories. For example‘Heads’ or ‘Tails’.R<strong>and</strong>om variables notationNotation Represented by upper case Roman lettersLower case Roman letter represents the observed value.Pr(X = x) means ‘the probability that the r<strong>and</strong>om variableX takes the value x.e.g., Pr(X = 1.2).R<strong>and</strong>om variables described by probability distributions.Observed values <strong>of</strong> r<strong>and</strong>om variables are data.Statistic• A statistic is a numerical summary <strong>of</strong> data.• An estimate is a special kind <strong>of</strong> statistic used as an intelligentguess for a parameter.- Usually denote an estimate by a circumflex: ˆµ is an estimate<strong>of</strong> µ.- Remember ˆµ is statistic <strong>and</strong> an estimate, µ is a parameter.Model• A model is a mathematical description <strong>of</strong> the data generatingmechanism.- Expressed in terms <strong>of</strong> parameters <strong>and</strong> r<strong>and</strong>om variables.13

• Think <strong>of</strong> it as a metaphor - if we repeatedly toss a coin we findthe sequence <strong>of</strong> ‘heads’ <strong>and</strong> ‘tails’ behaves in the same way as asequence <strong>of</strong> independent samples from a Bernoulli distribution.- Outcomes <strong>of</strong> coin tosses are data.- A Bernoulli distribution exists in theory only.3.2 DataData <strong>and</strong> inference• Proper inference depends on how our data were collected.• “In 20 tosses <strong>of</strong> a coin I obtained 20 heads”• But I tossed it 45 times.EarthquakesSouthern end <strong>of</strong> the alpine fault. Source: Te Ara - the Encyclopedia <strong>of</strong> New Zeal<strong>and</strong>• Ruptured 1230, 1460, 1615 <strong>and</strong> 171714

- How do geologists know?- Sediment disturbances, radiocarbon dating <strong>of</strong> disturbed material,tree rings.• Intervals: 230, 155, 102, ≥ 293 years.- Overdue?Earthquake II• Observational – obtained by recording natural events.- Could the measurement have favoured the observed values?• Synthetic – reconstructed from other data.- Not the data we wish we had.- Reconstruction introduces error (1717 accurate to nearest year;before 1717 accurate to ± 50 years)• Last interval is censored – it is at least 293 years.• Need to take into account when expressing uncertainties associatedwith any predictions.15

Major sources <strong>of</strong> data• Sample surveys - study subjects selected through r<strong>and</strong>om sampling.- Probability samples <strong>and</strong> non-probability samples- Do not trust results from non-probability samples (judgmentsampling, snowball sampling, quota sampling, convenience sampling)- Examples: Questionnaires, quadrat sampling etc• Experiments - deliberate manipulation <strong>of</strong> variables to see response.- Replication, r<strong>and</strong>omisation <strong>and</strong> control.- Sometimes one (or more) element is missing - quasi-experiments.• Observational studies - descriptive or quasi-experimental- If descriptive, probability sampling should be used for reliableinference.- If experimental, absence <strong>of</strong> r<strong>and</strong>omisation implies weaker inference.Sample surveys• Inefficient (usually) to try <strong>and</strong> sample whole population- Usually impossible - even with a ‘census’• Which items do we include?- Need to guard against introducing bias.• Even with r<strong>and</strong>om sampling have to be watch out for nonresponsebias.16

Source: skyscrapercity.comSampling frame• Sampling frame - List <strong>of</strong> items in a population from which asample is drawn.• Rarely coincides with the entire population <strong>of</strong> interest.- Telephone numbers- Electoral roll- lists <strong>of</strong> licence holders• Often don’t exist- List <strong>of</strong> all Hector’s dolphins in New Zeal<strong>and</strong>?- List <strong>of</strong> all potential buyers <strong>of</strong> a new drug• Even without a list we can ensure an unbiased sample if everyindividual has the same chance <strong>of</strong> being drawn.Example 1: Gamebird surveys• Gamebird hunting licence required – natural sampling frame.• Questionnaires used to estimate gamebird harvest in the 1980s.• Mailout plus one reminder then a telephone sample <strong>of</strong> r<strong>and</strong>omlyselected nonrespondents.17

Gamebird survey results4 May 25 May 15 Jun 29 JunEstimate SE Estimate SE Estimate SE Estimate SEMallards harvestedR 6.17 0.58 4.36 0.67 2.80 0.51 2.38 0.41N 5.23 0.75 4.02 0.62 1.74 0.44 1.24 0.34Hours huntedR 9.67 0.57 8.18 1.16 5.43 0.76 4.91 0.65N 6.85 0.59 5.66 0.81 3.16 0.61 1.71 0.34Harvest by respondents (R) <strong>and</strong> non-respondents (N) to gamebird hunting questionnaire.Example 2: 1936 Presidential election• Literary Digest predicted 2:1 victory to L<strong>and</strong>on (R) over Roosevelt(D).• Election: L<strong>and</strong>on 2 states Roosevelt 46!• Mailout to more than 10 million – 2 million responded• Frame: readers <strong>of</strong> Literary Digest, telephone numbers, registeredcar owners.• George Gallup used a quota sample <strong>of</strong> 50,000 people to (a) predicta win for Roosevelt <strong>and</strong> (b) used a sample <strong>of</strong> 3000 from theLiterary Digest frame to predict that Literary Digest would mispredict.Probability sampling• We want our frame to match the population <strong>of</strong> interest <strong>and</strong> a wayto draw a representative sample.• Probability sampling is the only way to ensure representativeness• Simple r<strong>and</strong>om sample For a finite population <strong>of</strong> size N drawa sample <strong>of</strong> size n such that each possible sample has the sameprobability.- Lotto - each draw <strong>of</strong> 6 balls has the same probability18

- 1 in 3.8 million - 3.8 million distinct sequences, all equallylikely.- Sampling without replacementSimple r<strong>and</strong>om sample• Easy to analyse• let y 1 , . . . , y n denote the observed valuesEstimate population mean:∑ ni=1ˆµ = ȳ =y i.n‘add the values up <strong>and</strong> divide by their number’.Estimate population total:ˆT = N nn∑y i = Nȳ.‘take the sample mean <strong>and</strong> multiply by the population size.’i=1Stratified sampling• Much <strong>of</strong> statistical design theory is about controlling variation.• More variable data means less precise inference (signal vs noise).• Stratified sampling useful when the population comprises differenttypes <strong>of</strong> similar individuals.- A stratum is a population sub-division <strong>of</strong> similar units• Take a simple r<strong>and</strong>om sample from within each stratum.- More precise for the same expenditure.- Might be interested in the results by stratum.• Can take different sized samples from different strata.- Device for reducing the overall variability.• Formulae for estimates more complicated.- Consult an expert.19

Replication <strong>and</strong> r<strong>and</strong>omisation• Replication Responses vary among subjects. Replication allowsus to separate out treatment effects from chance effects.• R<strong>and</strong>omisation Ensures that effects <strong>of</strong> unmeasured factors areequalised across the treatment groups.Control• Provides context for evaluating the effect <strong>of</strong> interest.• Often a placebo - determine existence <strong>of</strong> an effect• Sometimes a st<strong>and</strong>ard treatment – differences relative to the st<strong>and</strong>ard.- Effect <strong>of</strong> surgical sterilization on possum populations - shamsurgery.- Effect <strong>of</strong> lactate buffers on 800m time to exhaustion at 20kmph- salt tablets.- Growth <strong>and</strong> survival <strong>of</strong> starved paua larvae - no starvation.22

Important designs• Completely r<strong>and</strong>omised designExperimental units allocated to treatment groups r<strong>and</strong>omly.- No restriction on the allocations apart from the numbers ineach group.- Each possible allocation has the same probability <strong>of</strong> beingselected.• R<strong>and</strong>omised block designR<strong>and</strong>om allocation <strong>of</strong> treatments within blocks <strong>of</strong> similar subjects.- Reduces unexplained error by removing between-block variationBlock what you can, r<strong>and</strong>omize what you cannot.Repeated measures• Individuals can act as their own blocks• Each individual receives each treatment, usually in a differentorder- Effect <strong>of</strong> lactate buffers on time to exhaustion at 20kmh- Each subject received each <strong>of</strong> the 4 treatments in r<strong>and</strong>omorder.• Paired t-test special case <strong>of</strong> analysis when just two treatments(eg, treatment <strong>and</strong> control)- Need special care where the order <strong>of</strong> treatment matters (e.g.,learning)23

Polio vaccine trial• Acute viral disease spread by person-to-person contactPolio cases (deaths in red) in NZCases0 200 400 600 800 1000 12001920 1940 1960 1980 2000YearPolio vaccine trial• Mass vaccination <strong>of</strong> children in NZ in 1961 <strong>and</strong> 1962• Followed US trials involving 1.8M children- Trial needed because <strong>of</strong> earlier failures- Massive size needed because <strong>of</strong> low prevalence• Controversy over the design – parental consent required <strong>and</strong> soonly studying volunteers.Polio vaccine trial II• Two designs usedA. Observed control - 1st <strong>and</strong> 3rd graders acted as controls <strong>and</strong>2nd graders with parental consent vaccinated.24

B. R<strong>and</strong>omised control - <strong>of</strong> all children who had parental consent,half were r<strong>and</strong>omly allocated to the control group <strong>and</strong> theother half vaccinated.- The control group were “vaccinated” with a saline solution- Children, Drs, researchers didn’t know which child was inwhich group until after the experiment.Polio vaccine trial IIIDesign Trt group n Cases Rate (per 100,000)Observed Control Vaccinated 221998 56 25.2Observed Control Controls 725173 391 53.9R<strong>and</strong>omised Control Vaccinated 200745 57 28.4R<strong>and</strong>omised Control Placebo 201229 142 70.6• Observed control design biased against the vaccine.- Confounding <strong>of</strong> vaccine effect <strong>and</strong> ‘parental consent’ effect.- Children from poorer families had a natural level <strong>of</strong> immunity<strong>and</strong> less likely to receive consent for involvement in the trial.Chance effect or real?• Just by chance things almost always differ. Could this be chance?• Suppose we have two unfair coins, a ‘Control’ coin <strong>and</strong> a ‘Vaccine’coin• We toss the control coin 201,229 times <strong>and</strong> the other one 200,645times.• If they had the same chance <strong>of</strong> coming up heads what is theprobability <strong>of</strong> observing the outcome 142 heads for the controlcoin <strong>and</strong> 57 for the vaccine coin, or a more extreme one?- More than one billion to one against- Coins are not the same.25

Confounding• Between 1972 <strong>and</strong> 1974 1/6 <strong>of</strong> women on electoral roll <strong>of</strong> Whickhamsurveyed.• Followed up 20 years laterSmokerYes No TotalDead 139 230 369Alive 443 502 945582 732 1314Smokers Death rate = 139/582 or 23.9%.Non-smokers Death rate = 230/732 or 31.41%.• <strong>Statistics</strong> don’t lie!Simpson’s paradoxAgeSmoking status 18-24 25-34 35-44 45-54 54-64 65-74 75+Smoker 3.6 2.4 12.8 20.8 44.3 80.6 100Non-smoker 1.6 3.2 5.8 15.4 33.1 78.3 100Proportion dead by the time <strong>of</strong> the follow up survey.• Death rate is higher for smokers in every age group except one!• Few <strong>of</strong> the older women (65+) were smokers at the time <strong>of</strong> theoriginal survey but most had died by the time <strong>of</strong> follow-up.• Smokers had already disappeared from these age groups at thetime <strong>of</strong> the initial survey.Dealing with confounders• In experiments we r<strong>and</strong>omize to equalize all other effects acrossour treatment groups.• In observational studies we must measure potential confounders- Always a risk that we have missed confounders26

- Reason why observational studies provide a weaker form <strong>of</strong>evidence.• Confounding can be usefulDeliberate confounding• STAT110 questionnaire - last question• We deliberately confounded response with coin toss to provideanonymity• Out <strong>of</strong> 267 responses, 108 tails <strong>and</strong> 159 heads- We expect ∼ 133.5 tails- 133.5-108 = 25.5 is our guess for the number <strong>of</strong> missing ’tails’reported as ’heads’ because <strong>of</strong> drug use.• 25.5/133.5 = 0.191 - guess that approx. 19.1% <strong>of</strong> the class havetried drugs (22.6% last year)..• Good model for the confounding (coin toss) - can undo the confounding.Cohort Studies• Whickham smoking study is a cohort study- longitudinal- prospective - outcome <strong>of</strong> interest becomes manifest over time- observational - alternative to experiment when these are impossible• Famous local example: Dunedin Multidisciplinary Health <strong>and</strong> Developmentstudy- Cohort study <strong>of</strong> 1,037 people born in Dunedin in 1972/1973- Follow up: 3,5,7,9,11,13,15,18,21,26, <strong>and</strong> 32; 38 underway.• Cohort studies may also look backward in time (retrospective)27

Case-Control Study• Retrospective• Doll-Hill study <strong>of</strong> British cancer patients (1948-1952).- Cases = lung cancer- Controls = other forms <strong>of</strong> cancer, matched to the cases by age<strong>and</strong> sex.Cases ControlsSmokers 1350 1296Nonsmokers 7 61Total 1357 1357• Nonsmokers 7/1357 = 0.51% <strong>of</strong> the male cases but 61/1357 =4.5% <strong>of</strong> the male controls• Implicates smoking as a factorTrout Cod• Case-control studies commonly associated with epidemiology• Can be applied usefully in other settings• Habitat selection <strong>of</strong> trout cod on the Murray River- Cases = locations where radio-tagged trout cod were found- Controls = r<strong>and</strong>omly selected locations28

Things that go wrong• Even the best studies strike problems• Missing data- Survey nonresponse- Recording error- Treatments may fail- Study plots may be destroyed (demonic intrusion)Why are the data missing?• Should always ask why the data are missing• If the ‘missingness mechanism’ is related to the response <strong>of</strong> interestthen may cause bias- e.g., Non-response bias- ‘Non-ignorable’ missingness requires specialised help• Censoring is a special case- Right-censoring - true value is larger than the recorded value- Left-censoring - true value is smaller than the recorded value- Interval-censored - true value lies between 2 known values• In survival studies longer-lived individuals more likely to be rightcensored29

Good <strong>and</strong> Bad studies• Reliable samples <strong>and</strong> surveys will:1. Employ formal probability-based sampling when selecting individualsto sample.2. Employ well-constructed sampling frames, <strong>and</strong> these shouldinclude the entire population <strong>of</strong> interest or nearly so.• Reliable experiments will3. R<strong>and</strong>omly allocate treatments to subjects to avoid confounding<strong>and</strong> employ a control.4. Use blocking to remove unwanted sources <strong>of</strong> variation.5. Select study units that represent the population <strong>of</strong> interest.This should be done using r<strong>and</strong>om selection.Compromise• Compromise is <strong>of</strong>ten unavoidable- R<strong>and</strong>omisation <strong>of</strong> treatments may be impossible ethically- It may be impossible to sample individuals r<strong>and</strong>omly.• Don’t substitute ‘difficult’ for impossible- A smaller number <strong>of</strong> well-designed studies is better than awhole lot <strong>of</strong> cheap ones.• Watch out for designs that cheat.30

4 ProbabilityFred’s DayFred awoke one morning <strong>and</strong> headed <strong>of</strong>f to the doctor. In the waitingroom for the doctor the 23 waiting paitents were asked their birthdays.Fred could not help but overhear that two <strong>of</strong> the patients were born onthe same day, it was not his birthday though. During his appointmentFred got tested for a rare disease (1 in 10000 people suffer this disease),the test returns a positive result in 95% <strong>of</strong> cases <strong>of</strong> people who actuallyhave the disease, <strong>and</strong> 6% <strong>of</strong> cases when people don’t have the disease.Fred’s doctor informed him that he had returned a positive result.Should Fred be worried about returning a positive test result? Whatis the likelihood he has the disease?What is the probability <strong>of</strong> 2 out <strong>of</strong> 23 people in a room sharing abirthday? What are the odds that they share a specific birthday?In the next section we will learn some skills that will help us answerthese questions.4.1 Introduction to ProbabilityI have data now what?• Now we have learnt about collecting data, we want to know whatwe can do with itFirst <strong>of</strong> all: What is Probability?• There is no consistent definition <strong>of</strong> probability• Statisticians can be split into two main groups who have differingviews on probability.• Frequentists consider probability to be the relative frequency ‘inthe long run’ <strong>of</strong> outcomes.31

• Bayesians consider probability to be a way to represent an individual’sdegree <strong>of</strong> belief in a statement given the evidence.• Consider these statements.• We can quantify these probabilities.What is the probability I win lotto tonight?What is the probability I roll a 6.• Based on personal <strong>and</strong> subjective beliefWhat is the probability I pass Stat110?What is probability I will do an OE after graduating?Some definitions• Experiment = process by which observations/measurements areobtained e.g. tossing a fair die• Event = outcome <strong>of</strong> experiment e.g. getting a 6• Sample space = set <strong>of</strong> all possible outcomes e.g. 1, 2, 3, 4, 5, 6Conditions for a valid probability1. Each probability is between 0 <strong>and</strong> 12. The sum <strong>of</strong> the probabilities over all possible simple events is 1.In other words, the total probability for all possible outcomes <strong>of</strong>a r<strong>and</strong>om circumstance is equal to 1. (As long as these events aremutually exclusive)What does probability mean?• If the event A cannot happen then Pr(A) = 0• If the event A is certain to happen then Pr(A) = 1• Let’s say we have a mouse trap.• The event that the mouse we trap is a male <strong>and</strong> pregnant has aprobability Pr(A) = 0 <strong>of</strong> occuring because this is impossible32

• The event that the mouse we trap is either male or female hasa probability Pr(A) = 1 <strong>of</strong> occuring because it will definitely beone or the other.Calculating Probabilities• Probability <strong>of</strong> an event A isPr(A) =no. <strong>of</strong> experiments resulting in Alarge no. <strong>of</strong> repetitions= n AN• We don’t always need to conduct the experiments as we can makesensible assumptions i.e. die or coin is fair (prob <strong>of</strong> each outcome= 1/6 or 1/2 respectively)Some more things to knowComplementary Events• Two events are complementary if all outcomes are either <strong>of</strong> thetwo events e.g head or tail on fair coin• Ā is called the complement <strong>of</strong> A.Pr(A) + Pr(Ā) = 1The RulesAddition RulePr(A or B) = Pr(A ∪ B) = Pr(A) + Pr(B) − Pr(A ∩ B)Multiplication RulePr(A <strong>and</strong> B) = Pr(A ∩ B) = Pr(A) Pr(B|A)Addition rule - special caseMutually Exclusive Events• There is no intersection between the two events. In lay termsevents are said to be mutually exclusive if they cannot occur togetheri.e. getting heads <strong>and</strong> tails33

• In this case the addition rule simplifies toPr(A or B) = Pr(A ∪ B) = Pr(A) + Pr(B)• Because Pr(A ∩ B) cannot occurMultiplication rule - special caseIndependent Events• When the occurence <strong>of</strong> one event does not effect the outcome <strong>of</strong>another event. i.e. getting 3 heads in a row• In this case the multiplication rule simplifies toPr(A <strong>and</strong> B) = Pr(A ∩ B) = Pr(A) Pr(B)• Because Pr(B|A) = Pr(B), since B no longer relys on A happening.Blood donor example• The probability <strong>of</strong> being in each <strong>of</strong> the 4 blood groups (Dunedindonor centre)Blood Type ProbabilityA 0.38B 0.11AB 0.04O 0.47Blood donor example - Addition Rule• What is the probability that a person is either A or B?Pr(A or B) = Pr(A) + Pr(B)= 0.38 + 0.11= 0.4934

Blood donor example - Multiplication RuleWhat is the probability that 3 r<strong>and</strong>omly selected people have bloodgroup O?Pr(O) × Pr(O) × Pr(O) = 0.47 3= 0.104(under the assumption independence)Hospital Patients• A survey <strong>of</strong> hospital patients shows that the probability a patienthas high blood pressure given he/she is diabetic is 0.85. If 10%<strong>of</strong> the patients are diabetic <strong>and</strong> 25% have high blood pressure:• Find the probability a patient has both diabetes <strong>and</strong> high bloodpressure.• Are the conditions <strong>of</strong> diabetes <strong>and</strong> high blood pressure independent?Hospital Patients - Relevant information• Let A be the event ‘A patient has high blood pressure’• Let B be the event ‘A patient is diabetic”• Pr(A|B) = 0.85• Pr(B) = 0.10• Pr(A) = 0.25Hospital Patients - Question 1• Find the probability a patient has both diabetes <strong>and</strong> high bloodpressure.Pr(A ∩ B) = Pr(A | B) × Pr(B)= 0.85 × 0.10= 0.08535

Hospital Patients - Question 2• Are the conditions <strong>of</strong> diabetes <strong>and</strong> high blood pressure independent?• Remember when discussing the special case <strong>of</strong> the multiplicationrule we said if A <strong>and</strong> B are independent then:Pr(A | B) = P r(A)• We can use this to test for independence.Pr(A | B) = 0.85Pr(A) = 0.25Pr(A) ≠ Pr(A | B)• ∴ A <strong>and</strong> B are not independentCalculating Conditional probabilities• Conditional Events• Two events are conditional if the probability <strong>of</strong> one event changesdepending on the outcome <strong>of</strong> another event e.g EXAMPLEPr(A ∩ B) = Pr(A) Pr(B | A)Pr(A ∩ B) ÷ Pr(A) = Pr(A) Pr(B | A) ÷ Pr(A)P r(A ∩ B)Pr(B | A) =Pr(A)Pr(B | A) =P r(A ∩ B)Pr(A)• Important not to interpret conditional results as unconditional• What is the probability <strong>of</strong> buying ice cream?• Hot day = High, Cold day = Low36

Fair Die Example• A fair die is thrown. A is the event ’a number greater than 3 isthrown’ <strong>and</strong> B is the event ’an even number is thrown’• Find Pr(A ∪ B) <strong>and</strong> Pr(A ∩ B)Pr(A) =3/6 = 1/2 Pr(B) =3/6 = 1/2Fair Die Example - Visualisation• A fair die is thrown. A is the event ‘a number greater than 3 isthrown’ <strong>and</strong> B is the event ‘an even number is thrown’524613Pr(A ∪ B) = (2 4 5 6)Pr(A ∩ B) = (4 6)Fair Die Example• Find Pr(A ∩ B)• Use multiplication rule, because events are not independent :• We can find the conditional probability <strong>of</strong> Pr(B | A)Pr(A ∩ B)Pr(B | A) =Pr(A)P r(B | A) = 1/31/2P r(B | A) = 2/337

• Or we can calculate P r(A ∩ B)Pr(A ∩ B) = Pr(A) Pr(B | A)Pr(A ∩ B) = 1/2 × 2/3Pr(A ∩ B) = 1/3• The decision on which to calculate depends on the informationwe have.• Find P r(A ∪ B)• Use addition rule, because events are not mutually exclusivePr(A ∪ B) = Pr(A) + Pr(B) − Pr(A ∩ B)Pr(A ∪ B) = 1/2 + 1/2 − 1/3Pr(A ∪ B) = 2/34.2 Tree DiagramsTree Diagrams• Useful for helping calculate the probability <strong>of</strong> a combined event• The stages <strong>of</strong> the combined event can be independent or dependent• A dependent stage means the probability at each branch is conditionalon earlier outcomes.• Tree diagrams show all possible outcomes38

Tree diagram rules• Add Vertically.• Multiply across.• They give a good way to visualise sample space restrictions resultingfrom conditioning <strong>and</strong> help to calculate joint <strong>and</strong> conditionalprobabilitiesBasic Tree DiagramsBA¯BBĀ¯B• In this simple example each <strong>of</strong> the levels <strong>of</strong> the tree diagram havetwo possibilities.• Note A + Ā = 1 <strong>and</strong> B + ¯B = 1. Since if A does not occur Āmust, similarly if B does not occur ¯B must.• Also note if the events A <strong>and</strong> B are not independant than theprobability B occurs after A is not necessarily the same as theprobability B occurs after Ā39

H<strong>and</strong>edness vs Gender - Combined Probabilities0.5696F0.89140.1086R 0.5077L 0.06190.4304M0.8897R 0.38290.1102L 0.0474H<strong>and</strong>edness Summary• What is the probability <strong>of</strong> each <strong>of</strong> being Left h<strong>and</strong>ed?Pr(L) = Pr(L ∩ F ) + Pr(L ∩ M)Pr(L) = 0.0619 + 0.0474Pr(L) = 0.1093• What is the probability <strong>of</strong> each <strong>of</strong> being right h<strong>and</strong>ed?Pr(R) = Pr(R ∩ F ) + Pr(R ∩ M)Pr(R) = 0.5077 + 0.3829Pr(R) = 0.8906Independent Stages• Stephens Isl<strong>and</strong> is an uninhabited isl<strong>and</strong> in Cook Strait wheretuatara are being re-established. For some years three locationshave been visited on the isl<strong>and</strong> <strong>and</strong> tuatara have been found ata location with probability 0.4. At any visit X represents thenumber <strong>of</strong> locations out <strong>of</strong> three at which tuatara are observed.X can take values 0, 1, 2, or 3. Find the probabilities that 0, 1,2, or 3 locations have tuatara on a visit. T is the event ‘locationhas tuatara’ <strong>and</strong> N is the complementary event ‘location has notuatara’40

Tree Diagrams - Independent Stages0.60.4TN0.40.60.40.6TNTN0.40.60.40.60.40.60.40.6T X = 3N X = 2T X = 2N X = 1T X = 2N X = 1T X = 1N X = 0Independent Stages• Find the probability <strong>of</strong> seeing tuatara at two <strong>of</strong> the three sites:P r(X = 2) = P r(TTN, TNT, NTT)= 0.4 × 0.4 × 0.6 +0.4 × 0.6 × 0.4 +0.6 × 0.4 × 0.4= 0.096 + 0.096 + 0.096= 0.288• Find the probability <strong>of</strong> seeing tuatara at one <strong>of</strong> the three sites• Take advantage <strong>of</strong> the fact that all possibilities add to 1P r(X = 2) = 0.288P r(X = 0) = 0.6 × 0.6 × 0.6 = 0.216P r(X = 3) = 0.4 × 0.4 × 0.4 = 0.06441

P r(X = 1) = 1 − 0.288 − 0.216 − 0.064= 0.43242

Dependent Stages• Andrew, John <strong>and</strong> Mark play a game <strong>of</strong> chicken. There are sixsimilar cars, two <strong>of</strong> which have had the brakes disabled. Eachperson chooses a car at r<strong>and</strong>om, drives at high speed towards ariver, <strong>and</strong> brakes in time to stop. The boys decide to proceed inalphabetical order• Find Pr(each will lose) <strong>and</strong> Pr(no loser) where the game stopswhen the first boy drives into the river.2/6Andrew LosesJohn Loses4/63/52/52/4Mark Loses2/4No loserPr(Andrew loses) = 2/6= 1/3Pr(John loses) = 4/6 × 2/5= 4/15Pr(Mark loses) = 4/6 × 3/5 × 2/4= 3/15Pr(no loser) = 4/6 × 3/5 × 2/4= 1/5DefinitionsSensitivity = Pr(B| A) The probability that a person with thedisease returns a positive result.Specificity = Pr(¯B| Ā) The probability that a person withoutthe disease returns a negative result.43

Definitions IIPositive Predictive Value = Pr(A|B) The proportion <strong>of</strong> patientswith positive test results who are correctly diagnosed.Negative Predictive Value = Pr(Ā|¯B) The proportion <strong>of</strong> patientswith negative test results who are correctly diagnosed.Screening Programmes• A patient with certain symptoms consuled her doctor to be checkedfor a cancer, <strong>and</strong> she undergoes a biopsy.• With this test there is a probability <strong>of</strong> 0.90 that a woman withthe cancer shows a positive biopsy, <strong>and</strong> a probability <strong>of</strong> only 0.001that a healthy woman incorrectly shows a positive biopsy.• Historical information also suggests that the prevalence <strong>of</strong> thiscancer in the population is 1 in 10000.• Find the probability that a woman has the cancer given the biopsysays she does (i.e. does the biopsy diagnose true patient status?).• Let A be the event ‘woman has the cancer’ <strong>and</strong> B be the event‘biopsy is positive’.• Pr(A) = 1/10000 = 0.0001 (disease prevalence)• Pr(B| A) = 0.90 (conditional probability)• Pr(B| Ā)= 0.001 44

0.90BBiopsy +ve (true positive)0.00009A0.00010.10¯BBiopsy -ve (false negative)0.000010.99990.001BBiopsy +ve (false positive)0.00100Ā0.999¯BBiopsy -ve (true negative)0.99890Probability that the test is +ve = 0.00009 + 0.00100 = 0.00109Positive Predictive value• Find the positive predictive value Pr(A|B). To calculate this weuse the conditional probability formulaP r(A ∩ B)P r(A| B) =P r(B)P r(Have disease <strong>and</strong> test positive)P r(A| B) =P r(test positive)P r(True positive)P r(A| B) =P r(Total positive)P r(A| B) = 0.000090.00109P r(A| B) = 0.083• Only 8.3% <strong>of</strong> those women identified as having the disease actuallydo.45

Negative Predictive value• Find the positive predictive value Pr(A|B). To calculate this weuse the conditional probability formulaP r(Ā| ¯B) P r(Ā ∩ ¯B)=P r(¯B)P r(Ā| ¯B) P r(Don’t have disease <strong>and</strong> test negative)=P r(test negative)P r(Ā| ¯B) P r(True negative)=P r(Total negative)P r(Ā| ¯B) = 0.998900.99891P r(Ā| ¯B) = 0.9999• 99.99% <strong>of</strong> those women identified as not having the disease actuallydo.Classification table• Sometimes the information is presented in a different manner.• Hooker Sealions• Closure <strong>of</strong> the squid fishery in the sub Antartic isl<strong>and</strong>s due toHooker sea lion bycatch is a costly issue for fishing companies<strong>and</strong> much research is carried out on this. The following tableclassifies a sample <strong>of</strong> 219 vessels according to vessel nation <strong>and</strong>bycatch status over nine years.NZ Russia TotalNo by-catch 90 100 190Bycatch 6 23 29Total 96 123 219• Estimate the probability that a sampled vessel is Russian.• Given that the sampled vessel had by-catch what is the probabilitythat it is Russian.• Let B be By-catch, <strong>and</strong> ¯B be no-bycatch46

Calculating Probabilities• Estimate the probability that a sampled vessel is Russian.• The estimated probability a sampled vessel is Russian is 123219 =0.562Tree Diagram23123B23219R123219100123¯B10021996219696B6219NZ9096¯B90219Calculating Conditional Probabilities• Given that the sampled vessel had by-catch what is the probabilitythat it is Russian.• First calculate the total probability <strong>of</strong> a by-catchPr(B) = 23219 + 6219Pr(B) = 29219• Now use the conditional probabilityPr(R ∩ B)Pr(R | B) =Pr(B)Pr(R | B) =2321929219Pr(R | B) = 2329Pr(R | B) = 0.79347

Sensitive Survey Questions• Sometimes we are interested in sensitive survey questions.• For example the drug question in the questionaire.• The reason the question was phrased like this is it protects theindividual respondents.• If you got “heads” OR if you have ever smoked marijuana or usedany other illicit drug select “head”, otherwise select “tails”.Tree DiagramH0.50.51 - θTTθH (drugs)Sensitive Survey Question• So we can use this information to calculate probability <strong>of</strong> Druguse.P r(H) = P r(H) + P r(H(Drugs))P r(H) = 0.5 + (0.5 × (θ))• From the survey we got Pr(H) = 187305 = 0.61310.6131 = 0.5 + (0.5 × (θ))0.6131 − 0.5 = 0.5 + (0.5 × (θ)) − 0.50.1131 × 2 = (0.5 × (θ)) × 20.2262 = θ48

4.3 R<strong>and</strong>om VariablesR<strong>and</strong>om Variables• A r<strong>and</strong>om variable has values which depend on the outcome <strong>of</strong> ar<strong>and</strong>om experiment.• R<strong>and</strong>om variables are labelled with a capital letter.• They can be discrete or continuousR<strong>and</strong>om Variables - Discrete Example• Consider the Tuatara example• The three previous locations are visited on 50 occasions <strong>and</strong> thenumber <strong>of</strong> locations with tuatara found are recorded each time.fX = x i f i i nPr(X = x i )0 8 0.16 0.2161 22 0.44 0.4322 15 0.30 0.2883 5 0.10 0.064Total 50 1.00 1.000• X is the r<strong>and</strong>om variable• X is discrete• The 50 responses are summarised as relative frequencies.• If many trials are carried out then the relative frequencies <strong>of</strong> eachx i ’s stabilise to give the probabilities for each outcome.• This set <strong>of</strong> probabilities forms the probability distribution49

Probability DistributionNote that for a probability distribution:• The sum <strong>of</strong> all the probabilities Pr(X = x i ) adds to one (same asfor relative frequency distribution).4∑Pr(X = x i ) = 1i=1• All probabilites are between 0 <strong>and</strong> 1.Describing Probability Distributions• Just as for a data set, we can describe a probability distributionby finding the mean (to describe the centre) <strong>and</strong> by finding thevariance or st<strong>and</strong>ard deviation (to describe the variability).If X is the probability distribution then:• µ X is the mean <strong>of</strong> X, <strong>and</strong>• σ 2 Xis the variance <strong>of</strong> XCalculating the Mean• For a sample <strong>of</strong> n values from the distribution, each x i occurs f itimes <strong>and</strong> there k possible values <strong>of</strong> i.• The sample mean is:¯x ==k∑x i f ii=1nk∑ f ix ini=1µ x =k∑x i Pr (X = x i )i=150

Calculating the Variance• The sample variance is:σ 2 x =k∑(x i − µ x ) 2 Pr (X = x i )i=1Finding the mean• Consider the Tuatara exampleX = x i Pr(X = x i ) x i Pr(X = x i )0 0.216 01 0.432 0.4322 0.288 0.5763 0.064 0.192Total 1.000 1.2• The mean number tuatara we see on each visit is 1.2Finding the variance• Consider the Tuatara exampleX = x i Pr(X = x i ) (x i − µ X ) 2 (x i − µ X ) 2 Pr(X = x i )0 0.216 (0 − 1.2) 2 = 1.44 0.3111 0.432 (1 − 1.2) 2 = 0.04 0.0172 0.288 (2 − 1.2) 2 = 0.64 0.1843 0.064 (3 − 1.2) 2 = 3.24 0.207Total 1.000 5.36 0.72• Variance is 0.72• St<strong>and</strong>ard deviation is just the square root <strong>of</strong> the variance, hencethe s.d. = √ 0.72 = 0.85Contagious disease• A person infected with a disease can pass it on to others• Let the r<strong>and</strong>om variable X be the number <strong>of</strong> others infected bythis person.This time instead <strong>of</strong> setting up the table, lets just use the formula:51

X = x i Pr(X = x i )0 0.101 0.252 0.403 0.204 0.05Meanµ X = 0(0.10) + 1(0.25) + 2(0.40) + 3(0.20) + 4(0.05) = 1.85Varianceσ 2 X = (0 − 1.85)2 × 0.10 + (1 − 1.85) 2 × 0.25 + (2 − 1.85) 2 ×0.40 + (3 − 1.85) 2 × 0.20 + (4 − 1.85) 2 × 0.05 = 1.0275St<strong>and</strong>ard Deviationσ X =√σ 2 X = √ 1.0275 = 1.0137Combining R<strong>and</strong>om Variables• Often we are interested in the mean <strong>and</strong> the variance <strong>of</strong> a rescaledr<strong>and</strong>om variable, or in the mean <strong>and</strong> variance <strong>of</strong> sums (or differences)<strong>of</strong> r<strong>and</strong>om variables.• We will look at some properties <strong>of</strong> r<strong>and</strong>om variables (both discrete<strong>and</strong> continuous)Modifying R<strong>and</strong>om Variables• If X is an independent r<strong>and</strong>om variable <strong>and</strong> a <strong>and</strong> b are constants.• Consider a new r<strong>and</strong>om variable Y, where:Y = a + bX• The mean <strong>of</strong> Y can be calculated by:µ Y = a + bµ X• The variance <strong>of</strong> Y can be calculated by:σY 2 = b2 σX252

Examples• Consider a data set XX = 3, 4, 5, 6, 7• So µ X = 5 <strong>and</strong> σX 2 = 2.5• Now consider Y = 2 × XX = 6, 8, 10, 12, 14• In this case a = 0 <strong>and</strong> b = 2 soµ Y = a + bµ X = 0 + 2 × 5 = 10σY 2 = b2 σX 2 = 22 × 2.5 = 10• So µ X = 5 <strong>and</strong> σX 2 = 2.5• Now consider Y = 4 × X + 3X = 15, 19, 23, 27, 31• In this case a = 3 <strong>and</strong> b = 4 soµ Y = a + bµ X = 3 + 4 × 5 = 23σY 2 = b2 σX 2 = 42 × 2.5 = 40Temperature Problem• Temperatures can be recorded in degrees Fahrenheit. Suppose ar<strong>and</strong>om variable F measures January temperature ( ◦ F) in Dunedin.• Daily maximum summer temperatures have a mean <strong>of</strong> 70 ◦ F witha st<strong>and</strong>ard deviation <strong>of</strong> 5 ◦ F.• Use the conversion formula C = 5 9(F-32) to find the mean <strong>and</strong>st<strong>and</strong>ard deviation for the temperature in degrees Celsius.53

Rearranging the FormulaC = 5 (F − 32)9= 5 ( ) 59 F − 9 × 32= 5 9 F − 1609= − 1609 + 5 9 FCalculating the mean• So to find µ C = µ a+bF , a = − 1609<strong>and</strong> b = 5 9µ C = a + bµ F= − 1609 + ( 59 × 70 )= 21.1 ◦ CCalculating the st<strong>and</strong>ard deviation• So to find σ 2 C = σ2 a+bF , a = −160 9<strong>and</strong> b = 5 9σC 2 = b2 σF2( ) 2 5= × 5 29= 7.716 ◦ C• The St<strong>and</strong>ard deviation is just the square root <strong>of</strong> the variance√7.716 = 2.78 ◦ C54

Combining 2 R<strong>and</strong>om Variables• If X <strong>and</strong> Y are an independent r<strong>and</strong>om variables <strong>and</strong> a <strong>and</strong> b areconstants.• Consider a new r<strong>and</strong>om variable Z, where:Z = aX + bY• The mean <strong>of</strong> Z can be calculated by:µ Z = aµ X + bµ Y• The variance <strong>of</strong> Z can be calculated by:σ 2 Z = a2 σ 2 X + b2 σ 2 YThings to look out for:• a <strong>and</strong> b are 1• Then the new r<strong>and</strong>om variable Z is:Z = X + Y• The mean <strong>of</strong> Z can be calculated by:µ Z = µ X + µ Y• The variance <strong>of</strong> Z can be calculated by:σZ 2 = σ2 X + σ2 Y• a <strong>and</strong> b are -1• Then the new r<strong>and</strong>om variable Z is:Z = −X + −YZ = −X − Y• The mean <strong>of</strong> Z can be calculated by:µ Z = −1 × µ X + −1 × µ X55

• The variance <strong>of</strong> Z can be calculated by:σ 2 Z = (−1)2 × σ 2 X + (−1)2 × σ 2 Yσ2 Z = 1 × σ2 X + 1 × σ2 Y56

Fred’s DayShould Fred be worried about returning a positive test result? Whatis the likelihood he has the disease?Remember that the prevalence <strong>of</strong> his disease is 1 in 10000 <strong>and</strong> theprobability that the diagnostic test returns a positive result is 95% whenpeople have the disease, <strong>and</strong> 6% <strong>of</strong> cases when people don’t have thedisease. We learned during this section that conditional probabilitiesare not necessarily reversible, so the probability that one has a diseasegiven they have a positive test result (what we currently have) is notthe same as the probability <strong>of</strong> having a positive test result given onehas the disease (what we wish to know). The first step would be tosummarize our information using a tree diagram. (For this diagram T= Test Positive, <strong>and</strong> D = Has disease)From this tree diagram it is easy to see the total probability <strong>of</strong> gettinga positive test is 0.0599 (False positive) + 0.000095 (True Postive) =0.059995. Therefore as we have learned it is just a matter <strong>of</strong> dividingthe probability <strong>of</strong> a true positive by the probability <strong>of</strong> getting a positivetest, to find the probability <strong>of</strong> Fred having the disease given he has apositive test result.57

Pr(D ∩ T)Pr(D| T) =Pr(T)P r(D| T) = 0.0000950.0599P r(D | T) = 0.00158So from this result Fred should not be very worried at all, as thereis only a 0.2% chance <strong>of</strong> him actually having the disease given apositive test result. Note this very different to the 95% chance <strong>of</strong>returning a positive test given you have the disease. Having a betterunderst<strong>and</strong>ing <strong>of</strong> conditional probability can help Fred sleep a lot easier.Now to the second problem <strong>of</strong> the day, at first Fred thought it wasamazing two people out <strong>of</strong> twenty people shared a birthday, but afterremembering his <strong>Statistics</strong> class he decided to work out the probability<strong>of</strong> this occuring. If he could work out the probability that no one <strong>of</strong>the 23 shared a birthday he could work out, the probability 2 or morepeople did, by subtracting that answer from 1. (For simplicity sake wewill ignore leap years).To calculate the probability <strong>of</strong> people not sharing the same birthday,we assume each <strong>of</strong> the 23 people are an independant event so we cancombine them using the simplified multiplication rule. The probabilitythat person 1 does not share a birthday is equal to 365365since there isno one for him to clash with, the probability that person 2 does notshare a birthday is equal to 364365, since the birthday <strong>of</strong> the first personis no excluded from the possible birthdays, the probability that person3 does not share birthday becomes 363365<strong>and</strong> so on <strong>and</strong> so forth. Sothe probability that no one shares a birthday in the 23 people equals365365 × 365 364 × 363365 . . . × 343365 = 0.4927.58

Now using the complementary event rule we can substract thisprobability from 1, meaning there is a 50.73% chance that at least two<strong>of</strong> the people share birthday so this seemingly remarkable coincidenceoccurs more <strong>of</strong>ten than not.The probability that someone in the room had the same birthday asFred is slightly different, since the probability <strong>of</strong> each <strong>of</strong> the otherpeople not sharing Fred’s birthday would be 364365, so the probability noone shares Fred’s birthday is (365 364)22= 0.9414. Using complimentaryevents this tells us the probability someone shares Fred’s Birthday is1 − 0.9414 = 0.0586. This is quite rare event, so would have beensurprising.An underst<strong>and</strong>ing <strong>of</strong> what we are actually asking, <strong>and</strong> a basic underst<strong>and</strong>ing<strong>of</strong> probability can make seemingly impossible conincidencesactually appear quite reasonable.59

5 Probability DistributionsFred’s Rugby TeamFred is a avid rugby player, <strong>and</strong> has started coaching using some innovativetechniques to improve pace. Out <strong>of</strong> Fred’s team <strong>of</strong> 15, 5 havedeveloped leg injuries. Fred wondered if maybe his coaching had somethingto do with this, so he went onto Google, <strong>and</strong> found a studythat suggested that 15% <strong>of</strong> rugby players develop leg injuries. Is thereenough evidence to suggest Fred’s coaching techniques are causing moreinjuries than normal?Fred also wanted to know if his new training techinique were working.He had read that 40 metre sprint times for players are normallydistributed with a mean <strong>of</strong> 7 seconds <strong>and</strong> a st<strong>and</strong>ard deviation <strong>of</strong> 0.6seconds. What times would his players need to record to be in thetop 10% <strong>of</strong> players? What is the probability a player could run the 40metres in 6.1 seconds?5.1 Binomial Distribution• Arises when investigating proportions e.g.population with diabetes.proportion <strong>of</strong> adult• Each individual has or does not have diabetes (binary outcome).• Let Y be the r<strong>and</strong>om variable for an individual outcome <strong>of</strong> aperson in the population.• There are two outcomes: Y = 1 <strong>and</strong> Y = 0 - generally speakingthis refers to Success <strong>and</strong> Failure respectively.• The parameter π represents the unknown proportion <strong>of</strong> 1’s occuring.Probability DistributionThe probability distribution <strong>of</strong> Y is:Y = y i Pr(Y=y i )1 (Success) π0 (Failure) 1 − π60

Mean <strong>of</strong> Binary DistributionThe mean is colliquially defined as what is the average outcome <strong>of</strong>an event.Mean = µ Y = 1 × π + 0 × (1 − π) = πVariance <strong>of</strong> Binary DistributionVariance(σY 2 ) = (1 − π)2 × π + (0 − π) 2 × (1 − π)= π (1 − π) 2 + π 2 (1 − π)= π (1 − π) (1 − π + π)= π (1 − π)Distribution <strong>of</strong> the Binomial distribution• Suppose we take a sample <strong>of</strong> size n from the underlying population<strong>and</strong> look at the distribution <strong>of</strong> the number <strong>of</strong> successes.• Total number <strong>of</strong> successes :X = Y 1 + Y 2 + Y 3 + . . . + Y n• Where all the Y i ’s are independent <strong>of</strong> each other.• This is the Binomial distribution.Mean <strong>and</strong> Variance <strong>of</strong> the Binomial distributionCombining r<strong>and</strong>om variables gives the mean <strong>of</strong> X as:The variance <strong>of</strong> X is given as:µ X = π Y1 + π Y2 + π Y3 + . . . + π Ynσ 2 X = σ2 Y 1+ σ 2 Y 2+ σ 2 Y 3+ . . . + σ 2 Y n61

Since all the Y’s come from the same population then:π Y1 = π Y2 = π Y3 = . . . = π Yn = πσ 2 Y 1= σ 2 Y 2= σ 2 Y 3= . . . = σ 2 Y n= σ 2Hence the mean <strong>of</strong> X is given as:µ X = π Y1 + π Y2 + π Y3 + . . . + π Yn= π + π + π + . . . + π= nπAnd the variance <strong>of</strong> X is:σ 2 X = σ2 Y 1+ σ 2 Y 2+ σ 2 Y 3+ . . . + σ 2 Y n= σ 2 + σ 2 + σ 2 + . . . + σ 2= n × σ 2= nπ (1 − π)YOU NEED TO KNOW• Mean <strong>of</strong> the Binomial, Xnπ• Variance <strong>of</strong> the Binomial, Xnπ (1 − π)What is p?Sometimes the value <strong>of</strong> the parameter π is not known <strong>and</strong> we needto have an estimator for this.• Use p where p = X n• X is the number <strong>of</strong> successes.• n is the number <strong>of</strong> trials.The formulae simplify:62

• Mean number <strong>of</strong> successes• Variance <strong>of</strong> number <strong>of</strong> successesnpnp (1 − p)THREE CONDITIONS1. Outcome is binary.2. We have n independent trials.3. Probability <strong>of</strong> success π must stay constant.<strong>Notes</strong>• Outcome is binary.There may be more than two possible outcomes, as long as theoutcomes can be combined into two subsets.One subset is success, the other is failure. e.g. ‘Rolling a 4’is a “success” - Any other number is a “failure” or ‘Having Blueeyes’ is a “success” - Any other eye color is a “failure”<strong>Notes</strong> About the Conditions• Probability <strong>of</strong> success π must stay constant.Sampling without replacement from a small population doesnot produce a binomial r<strong>and</strong>om variable.For example, suppose a class consists <strong>of</strong> 10 boys <strong>and</strong> 10 girls.Five are r<strong>and</strong>omly selected to be in a play <strong>and</strong> X = the number<strong>of</strong> girls selected.This is not binomially distributed because each time an individualis removed from the sample the probability that a girl isselected changes.63

PROBABILITY OF X SUCCESSESThe probability <strong>of</strong> x successses where x takes the values 0 to n isgiven by:( nP r (X = x) = πx)x (1 − π) n−xWhere ( nx) is the binomial coefficient( n n!=x)x! (n − x)!Consider the TuataraStephens Isl<strong>and</strong> is an uninhabited isl<strong>and</strong> in Cook Strait where tuataraare being re-established. For some years three locations havebeen visited on the isl<strong>and</strong> <strong>and</strong> tuatara have been found at a locationwith probability 0.4. At any visit X represents the number <strong>of</strong> locationsout <strong>of</strong> three at which tuatara are observed (X can take values0, 1, 2, or 3). Find the probabilities that 0, 1, 2 or 3 locations havetuatara on a visit. T is the event ‘location has tuatara’ <strong>and</strong> N is thecomplementary event ‘location has no tuatara’.Calculating Probabilities• Find the probability <strong>of</strong> seeing Tuatara at two <strong>of</strong> the three sites.P r(X = 2) = P r(T T N, T NT, NT T )= 0.096 + 0.096 + 0.096= 0.288• This is a binomial example with n = 3, π = 0.4.• In this case we are interested in the probability <strong>of</strong> two successes.64

Using the Formulan = 3, x = 2, π =0.4P r (X = x) =P r (X = 2) =( nπx)x (1 − π) n−x( 30.42)2 (1 − 0.4) 3−2P r (X = 2) = 0.2880This is <strong>of</strong> course a very simple example, for more complicated examplesinstead <strong>of</strong> using the formula all the time we can use tables ors<strong>of</strong>tware to find probabilities.Family make-up exampleA report suggests that 75% <strong>of</strong> Maori children under 18 live withboth parents. A r<strong>and</strong>om sample <strong>of</strong> 20 Maori children is selected, <strong>and</strong>X is the binomial r<strong>and</strong>om variable for the number <strong>of</strong> these 20 who livewith both parents.1. Define the parameters <strong>of</strong> the distribution <strong>of</strong> X2. Find Pr(X = 15)3. Find the probability that 11 or fewer live with both parents i.e.Pr(X ≤ 11).4. A r<strong>and</strong>om sample <strong>of</strong> 20 NZ Caucasian children had only 11 livingwith both parents. Does this result provide any evidence to supportthe claim that 75% <strong>of</strong> NZ Caucasian children live with bothparents?Family make-up example solutions1. X is binomial with n = 20 <strong>and</strong> π = 0.7565

2.( nP r (X = x) = πk)x (1 − π) n−x( ) 20P r (X = 15) = 0.75 15 (1 − 0.75) 20−151520!=15! (20 − 15)! 0.7515 (1 − 0.75) 5= 0.2023Or just use RcmdrDistributions > Discrete Distributions > BinomialDistribution > Binomial Probabilities3. P r (X ≤ 11) = P r (X = 0, 1, 2, . . . , 11) can be replaced by P r (Y ≥ 9) =P r (Y = 20, 19, 18, . . . , 9) . Just use RcmdrDistributions > Discrete Distributions > BinomialDistribution > Binomial tail Probabilities = 0.04104. If π = 0.75 is assumed for Caucasian families, Pr(X ≤ 11) is verysmall (0.0410 is less than 0.05). This gives us evidence that theprobability is less than 75% for NZ Caucasian children. We rejectthe claim that π = 0.75 for Caucasian families can conclude fewerlive with both parents. (because 11 is in the direction <strong>of</strong> fewerrather than more)Note that instead if 12 out <strong>of</strong> 20 <strong>of</strong> the NZ Caucasian childrenwere living with both parents there would now be no evidence fromour data to suppose the situation is any different among Caucasianfamilies. Pr(X ≤ 12) = 0.1010 which is not small.Wait, What?• Where do the conclusions come from?• The p-value, the probability that an event will occur given a setn <strong>and</strong> π.66

• A probability less than 0.05 is (by convention) taken to imply anevent is rare or unlikely to occur• A probability above 0.05 <strong>of</strong>ten means an event is not unusual.Cancer drug exampleThe st<strong>and</strong>ard drug for treating a cancer is claimed to halve thetumour size in 30% <strong>of</strong> all patients treated. Suppose X is the binomialr<strong>and</strong>om variable for the number <strong>of</strong> patients in a sample <strong>of</strong> seven whohave their tumour size halved.1. List the conditions which must be met if X is binomial2. Write down the probability that three <strong>of</strong> the patients have theirtumour size halved3. Find the probability that three or more <strong>of</strong> the patients have theirtumour size halved.4. In a pilot study in Auckl<strong>and</strong>, three out <strong>of</strong> seven patients given anew drug had their tumour size halved. What conclusion if anycan be drawn about the new drug? Explain how you reach yourconclusionCancer drug example solutions1. List the conditions which must be met if X is binomial• Patients need to be independent, so we can’t select people fromthe same family or with any other common factor.• Two outcomes only - Cancer halved, or not halved.• Constant probability <strong>of</strong> tumour size halved over all the patients.- Can’t have some medical breakthrough in middle <strong>of</strong> trial.2. Write down the probability that three <strong>of</strong> the patients have theirtumour size halved = 0.2269.3. Find the probability that three or more <strong>of</strong> the patients have theirtumour size halved = 0.2269 + 0.0972 + 0.0250 + 0.0036 + 0.0002.67

Use R comm<strong>and</strong>er or R excel4. In a pilot study in Auckl<strong>and</strong>, three out <strong>of</strong> seven patients given anew drug had their tumour size halved. What conclusion if anycan be drawn about the new drug? Explain how you reach yourconclusion• There is no reason to suppose the new drug is any different to thest<strong>and</strong>ard.• Probability <strong>of</strong> three or more is 0.3529 which is large meaning theresult with the new drug is consistent with the 30% before.Cancer drug example solutions note• The reason that 0.3529 is means that there is no difference is NOTbecause 0.3529 is close to 0.3.• The reason is this number is greater than 0.05.Endangered bird egg exampleA scientist has established over a long period <strong>of</strong> time that only 30%<strong>of</strong> the eggs laid by an endangered bird species result in the successfulrearing <strong>of</strong> a chick1. A sample <strong>of</strong> 10 <strong>of</strong> these eggs is monitored. Find the probabilitythat at least half <strong>of</strong> the 10 eggs result in the successful rearing <strong>of</strong>a chick2. A second sample <strong>of</strong> 20 eggs is monitored. Find the probabilitythat at least half <strong>of</strong> the 20 eggs result in the successful rearing <strong>of</strong>a chick3. Two breeding programmes for this endangered bird on two seperate<strong>of</strong>f-shore isl<strong>and</strong>s were investigated. On Isl<strong>and</strong> A, 5 out <strong>of</strong> the10 eggs, <strong>and</strong> on Isl<strong>and</strong> B, 10 out <strong>of</strong> 20 eggs resulted in the successfulrearing <strong>of</strong> chicks. Comment on the success or otherwise <strong>of</strong>the two breeding programmes in light <strong>of</strong> your answers in (1) <strong>and</strong>(2)68

Endangered bird egg example solutions1. A sample <strong>of</strong> 10 <strong>of</strong> these eggs is monitored. Find the probabilitythat at least half <strong>of</strong> the 10 eggs result in the successful rearing <strong>of</strong>a chick2. A second sample <strong>of</strong> 20 eggs is monitored. Find the probabilitythat at least half <strong>of</strong> the 20 eggs result in the successful rearing <strong>of</strong>a chickUse R comm<strong>and</strong>er3. Two breeding programmes for this endangered bird on two seperate<strong>of</strong>f-shore isl<strong>and</strong>s were investigated. On Isl<strong>and</strong> A, 5 out <strong>of</strong> the10 eggs, <strong>and</strong> on Isl<strong>and</strong> B, 10 out <strong>of</strong> 20 eggs resulted in the successfulrearing <strong>of</strong> chicks. Comment on the success or otherwise <strong>of</strong>the two breeding programmes in light <strong>of</strong> your answers in (1) <strong>and</strong>(2)Isl<strong>and</strong> B has a higher rate <strong>of</strong> success than 30% there is no evidencethat Isl<strong>and</strong> A has a higher success rate.69

5.2 Normal DistributionDISTRIBUTION SHAPE• Demonstration - Family <strong>of</strong> Binomial Distributions.• The shape <strong>of</strong> the binomial distribution becomes more symmetricalfor larger n <strong>and</strong> for π closer to 0.5.NORMAL PROBABLILITY DISTRIBUTION• The ‘bell-shaped’ curve (a.k.a. the normal distribution) allows usto calculate probabilities associated with observed sample resultswhen we are dealing with(a) Continuous, <strong>and</strong>(b) sample means.PROBABLILITY DISTRIBUTIONCompare a relative frequency histogram with a probability distribution.• Demonstration: Grass intake per bite by cows• Relative frequency histogram represents a sample (smaller number<strong>of</strong> individuals).• Probability density function represents a population (large number<strong>of</strong> individuals).• The probability P r(a < X < b) is found using the area under thecurve between X = a <strong>and</strong> X = b.70

RELATIVE FREQUENCY HISTOGRAMNormal Fit to Height Datasample mean = 172.5cm, sample st<strong>and</strong>ard deviation = 10.6 (1dp)AREAS UNDER THE CURVE71

Normal Distribution <strong>Notes</strong>• The graph is symmetrical about µ (centre).• The two parameters, µ <strong>and</strong> σ, completely define the normal distribution.We sayX ∼ N(µ, σ 2 ).• Demonstration: Shape <strong>of</strong> normal distributions• Increasing µ moves the curve but does not change its shape.• Increasing σ spreads the curve more widely about X = µ but doesnot alter the centre.AREAS UNDER THE CURVE• Probabilities are equivalent to areas under the normal distributioncurve.• Total area under the curve is equal to 1 (0.5 either side <strong>of</strong> mean).• The probability P r(a < X < b) is found using the area under thecurve between X = a <strong>and</strong> X = b.• Areas under the curve can be found by integrating the equationfor the normal curve.EQUATION OF THE NORMAL CURVE• For the general normal distribution:f(X) = 1 √2πσe − 1 2( X−µσ) 2 .• The parameters µ <strong>and</strong> σ are estimated by the sample mean, x<strong>and</strong> sample st<strong>and</strong>ard deviation, s.• This equation simplifies nicely for the st<strong>and</strong>ard normal distribution(µ = 0 <strong>and</strong> σ = 1):f(Z) = 1 √2πe − 1 2 Z2 .72

Finding Areas Under the Curve• In reality we don’t have to integrate this expression.• Happily we can use R-comm<strong>and</strong>er (or tables).AREAS UNDER THE STANDARD NORMAL CURVE USING R-COMMANDER• Areas under this curve are found by choosing Distributions >Continuous distributions > Normal distribution > Normal probabilities.• Enter variable value(s), mu (mean), sigma (st<strong>and</strong>ard deviation).• For the st<strong>and</strong>ard normal leave the entries 0 for mu <strong>and</strong> 1 for sigma.• Choose the tail (upper/lower).• Can shortcut using the script window: use pnorm(value, lower.tail =T RUE/F ALSE).SOME EXAMPLESAlways draw a diagram to identify the area you want. pnorm(z)in Rcmdr gives the cumulative probability under the STANDARDnormal curve up to Z = z.• Find P r(0 < Z < 1.64)pnorm(1.64) - 0.5 = 0.94945 - 0.5 = 0.449573

• Find P r(Z > 1.64)pnorm(1.64,lower.tail=FALSE) = 0.0505• Find P r(1 < Z < 1.64)pnorm(1.64) - pnorm(1) = 0.94945 - 0.8413 = 0.108274

• Find P r(−1 < Z < 1.64)pnorm(1.64) - pnorm(-1) = 0.7908• Find P r(−1 < Z < 1)pnorm(1)-pnorm(-1) = 0.682775

• Find P r(−2 < Z < 2)pnorm(2)-pnorm(-2) = 0.9545qnorm(prob) in R gives the value <strong>of</strong> Z for a given cumulative probability.• Find the value Z above which 25% <strong>of</strong> the area lies.qnorm(0.75) which gives Z=0.674576

INVERSE PROBLEMS USING R-COMMANDER• Normal quantiles are found by choosing Distributions > Continuousdistributions > Normal distribution > Normal quantiles.• Enter probability, mu (mean), sigma (st<strong>and</strong>ard deviation).• For the st<strong>and</strong>ard normal enter 0 for mu <strong>and</strong> 1 for sigma.• Choose the tail (upper/lower).• Can shortcut using the script window: useqnorm(probability, mean, sd, lower.tail = T RUE/F ALSE).THE GENERAL NORMAL DISTRIBUTION• This is the non-st<strong>and</strong>ard normal distribution.• This distribution has non-zero mean µ X <strong>and</strong> variance σ 2 X .• We say X ∼ N(µ X , σ 2 X ).• Recall the equation for the curve:f(X) = 1 √2πσe − 1 2( X−µσ) 2 .Calculating Probabilities• Probabilities are equivalent to areas under the normal distributioncurve.• The probability P r(a < X < b) is found using the area under thecurve between X = a <strong>and</strong> X = b.P r(a < X < b) = P r( a − µσ= P r( a − µσ< X − µσ< Z < b − µσ )< b − µσ )• The value <strong>of</strong> Z can be thought <strong>of</strong> as the number <strong>of</strong> st<strong>and</strong>arddeviations X is away from the mean.77

AREAS UNDER THE CURVE USING R-COMMANDER• Areas under this curve are found by choosing Distributions >Continuous distributions > Normal distribution > Normal probabilities.• Enter variable value(s), mu (mean), sigma (st<strong>and</strong>ard deviation).• Choose the tail (upper/lower).• Can shortcut using the script window: use pnorm(value, mean, sd, lower.tail =T RUE/F ALSE)Calculating Probabilities• Demonstration: Probabilities from z-scores (apple weights)• Demonstration: Finding other normal probabilities, have a playwith this one yourself78

CALCULATING PROBABILITIESAssume that heights <strong>of</strong> students enrolled in 100 level university papershave a normal distribution with mean µ X = 170cm <strong>and</strong> st<strong>and</strong>arddeviation σ X = 10.Find the proportion <strong>of</strong> students with a height between 180-190cm.pnorm(190,170,10) - pnorm(180,170,10) = 0.1359Find the percentage <strong>of</strong> students taller than 185cm.pnorm(185,170,10,lower.tail=FALSE) which gives 6.68% (2dp)79

Find the height which is exceeded by 10% <strong>of</strong> students.qnorm(0.9,170,10)= 182.82 cm (2dp)5.3 Normal Approximation to BinomialNORMAL APPROXIMATION TO BINOMIAL• Recall that the binomial distribution shape is close to symmetricalfor large n <strong>and</strong> π close to 0.5.• If a large sample is selected from a population <strong>of</strong> binary values,the probabilities <strong>of</strong> the observed outcomes can be found using thenormal distributionN(µ X , σ 2 X )where µ X = nπ <strong>and</strong> σ 2 X= nπ(1 − π).80

CONTINUITY CORRECTION• Normal probability function overlays the binomial histogram.• The area <strong>of</strong> one bar in the histogram represents the binomialprobability <strong>of</strong> obtaining x successes.• This is equal to the area under the normal curve between x − 1 2<strong>and</strong> x + 1 2 .Demonstration• Normal approximation example – left h<strong>and</strong>ednessLimitations• This approximation is good only if n is large <strong>and</strong> π is not close to0 or 1. This ensures symmetry.• Also good only if nπ ± 3 √ nπ(1 − π) gives two values between 0<strong>and</strong> n. Approximately 99% <strong>of</strong> the possible values should lie withinthese limits indicating a near symmetrical distribution.– can use this as a test.81

WHEN IS THE USE OF A NORMAL APPROXIMATION APPROPRIATE?A variety <strong>of</strong> studies suggest that 11% <strong>of</strong> the world population isleft-h<strong>and</strong>ed (for interest, 34 out <strong>of</strong> 304, or 11.2% <strong>of</strong> STAT110 studentswho gave answers for the questionnaire are left-h<strong>and</strong>ed). Consider thesituation where the sample size is two.Sample size = 2Here the mean is n × π = 2 × 0.11 = 0.22 <strong>and</strong> the st<strong>and</strong>arddeviation is √ n × π × (1 − π) = √ 2 × 0.11 × 0.89 = 0.445. nπ ±3 √ nπ(1 − π) = 0.22 ± 3 × 0.445 = -1.11 <strong>and</strong> 1.55. These values arenot between 0 <strong>and</strong> n = 2.• For this example n is small <strong>and</strong> π is is far from 0.5. The distributionis not symmetrical.• nπ ± 3 √ nπ(1 − π) does not give two values between 0 <strong>and</strong> n.Unappropriate to use the normal approximation to the binomial inthis situation.82

Sample size = 10Consider the situation where the sample size is increased to 10.Here the mean is n × π = 10 × 0.11 = 1.1 <strong>and</strong> the st<strong>and</strong>arddeviation is √ n × π × (1 − π) = √ 10 × 0.11 × 0.89 = 0.99. nπ ±3 √ nπ(1 − π) = 1.1 ± 3 × 0.99 = -1.87 <strong>and</strong> 4.07. These values arenot between 0 <strong>and</strong> n = 10, therefore not appropriate to use normalapproximation to the binomial here (distribution is skewed).Sample size = 100Consider the situation where the sample size is increased to 100.83

Here the mean is n × π = 100 × 0.11 = 11 <strong>and</strong> the st<strong>and</strong>arddeviation is √ n × π × (1 − π) = √ 100 × 0.11 × 0.89 = 3.13. nπ ±3 √ nπ(1 − π) = 11 ± 3 × 3.13 = 1.61 <strong>and</strong> 20.39. These values arebetween 0 <strong>and</strong> n = 100, therefore appropriate to use normal approximationto the binomial here.EXAMPLESuppose that a r<strong>and</strong>om sample <strong>of</strong> 500 students in another 100 levelclass has 70 left h<strong>and</strong>ed people. Assuming that the proportion <strong>of</strong> lefth<strong>and</strong>edpeople in both papers is 11%, find the probability that 70 ormore from a sample <strong>of</strong> 500 students are left-h<strong>and</strong>ed. What conclusionwould you draw about the proportion <strong>of</strong> left-h<strong>and</strong>ed students in thedifferent papers? Justify your answer.Find the probability that 70 or more students are left h<strong>and</strong>ed. π =0.11 n = 500 Find P r(X ≥ 70)Mean = nπ = 500×0.11 = 55 St<strong>and</strong>ard deviation = √ 500 × 0.11 × 0.89 =6.996(3dp)P r(X ≥ 69.5) = 0.0191184

ConclusionWhat conclusion would you draw about the proportion <strong>of</strong> lefth<strong>and</strong>edstudents in the different papers? We observed 70 left-h<strong>and</strong>edstudents in the different paper. This is a rare event (the probability<strong>of</strong> 70 or more students being left-h<strong>and</strong>ed is less than 0.05). We canconclude that the proportion <strong>of</strong> left-h<strong>and</strong>ed students differs in the twopapers (i.e. π is greater than 11% in the different paper).FURTHER EXAMPLEIt is claimed cancer tumour size is halved in 30% <strong>of</strong> all patientsusing the current treatment. A new drug was used on 70 patientswith the cancer.(a) Suppose Y is the binomial r<strong>and</strong>om variable for the number <strong>of</strong>patients who have their tumour size halved. Write down the mean<strong>and</strong> st<strong>and</strong>ard deviation <strong>of</strong> Y . µ Y = nπ = 70 × 0.3 = 21 σ Y =√ √ nπ(1 − π) = 21 × 0.7 = 3.83(b) In a study, thirty out <strong>of</strong> seventy patients administered the st<strong>and</strong>arddrug experience a halving <strong>of</strong> their tumours. Find the probabilitythat 30 or more out <strong>of</strong> 70 have their tumours halved. Pr(Y≥ 29.5)=pnorm(29.5,21,3.83,lower.tail=FALSE) = 0.0132(c) In a study 30 out <strong>of</strong> 70 patients in Auckl<strong>and</strong> administered thisnew drug had their tumour size halved. What conclusion canbe drawn about the new drug? There is evidence that the new85

drug is more effective than the st<strong>and</strong>ard because the probability<strong>of</strong> 30 or more successes is less than 0.05. This indicates that theobserved 30 (or more) is not likely to occur unless the new drughas a beneficial effect.Fred’s Rugby TeamIs there enough evidence to suggest Fred’s coaching techniques are causingmore injuries than normal?Can this be dealt with using the binomial distribution? Does it satisfythe 3 assumptions?1. Independence ? We will assume that if one player injures their legthis has no effect on other players injurying theirleg.2. Binary outcome ? We can define the outcome as binary. Leg Injured/LegUninjured3. Constant probability <strong>of</strong> success? We will assume that the probability<strong>of</strong> injury is the same for everyone.So this problem can be tackled using a binomial distribution with n =15 <strong>and</strong> π = 0.15. We wish to know what is the probability that 5 ormore players are injured given these conditions. Using RCmdr we findthis probability is 0.0617. Since this probability is greater than 5%, thisis NOT a rare event, therefore there is no evidence Fred’s techniquesare causing more leg injuries than normal.What times would Fred’s players need running to be in the top 10% <strong>of</strong>players?We can solve all these problems fairly easily using a computer, but itdoes help to draw some pictures to get an idea <strong>of</strong> what is going on. Thereason we draw these pictures, is that when we get our solution fromthe calculator we can decide if it is reasonable.86

10%5.5 6.0 6.5 7.0 7.5 8.0 8.5XWe know that the upper half <strong>of</strong> the curve is 50%, <strong>and</strong> half <strong>of</strong> this areawill be 25%, if we halve one more time than the area will be be 12.5%.We would estimate that our value should be about 8. Using the qnormfunction in R, qnorm(p = 0.9, mean = 7, sd = 0.6, lower.tail=TRUE)we find the value is 7.7689, which fits with our guesstimate.7.76895.5 6.0 6.5 7.0 7.5 8.0 8.5X87

What is the probability a player could run the 40 metres in 6.1 seconds??5.5 6.0 6.5 7.0 7.5 8.0 8.5XAgain we will start by drawing a picture. From this picture we can seethat the probability should be quite low. It will definately be lower than0.5, <strong>and</strong> just visually we would imagine it will around 25% Using thepnorm function in R, pnorm(x = 6.1, mean = 7, sd = 0.7 ,lower.tail =TRUE), we find that the p-value is 0.0668. Since this value is above 0.05there is no evidence that Fred’s players are different than the averageplayer <strong>of</strong> similar age.0.06685.5 6.0 6.5 7.0 7.5 8.0 8.5X88