Synthesis document, Antoine CHAMBAZ, Habilitation à Diriger ...


UNIVERSITÉ PARIS DESCARTES

Synthesis document
presented by
Antoine CHAMBAZ
for the degree of
Habilitation à Diriger des Recherches
Specialty: Applied Mathematics

Estimation and testing of the order of laws, of the importance of variables, and of causal parameters; biomedical applications

defended on 13 December 2011 before a jury composed of:

Stéphane Boucheron, Professor (Université Paris Diderot), referee
Fabienne Comte, Professor (Université Paris Descartes)
Randal Douc, Professor (Telecom SudParis), referee
Elisabeth Gassiat, Professor (Université Paris Sud)
Marc Lavielle, Research Director (INRIA)
Stéphane Robin, Research Director (INRA), president
Jean-Christophe Thalabard, Professor (Université Paris Descartes)
Aad van der Vaart, Professor (Vrije Universiteit Amsterdam), referee

Felix qui potuit rerum cognoscere causas!
Virgil, Georgica

In relating what follows I must confess to a certain chronological vagueness. The events themselves I can see in sharp focus, and I want to think they happened that same evening, and there are good reasons to suppose they did. In a narrative sense they present a nice neat package, effect dutifully tripping along at the heels of cause. Perhaps it is the attraction of such simplicity that makes me suspicious, that along with the conviction that real life seldom works this way.
R. Russo, The risk pool

Acknowledgements

First of all, I wish to thank Elisabeth Gassiat and Marc Lavielle, who introduced me to the profession of researcher. You opened up unsuspected horizons for me and remain, quite simply, role models.

I am very grateful to Stéphane Boucheron, Randal Douc and Aad van der Vaart for agreeing to review my synthesis document for the habilitation à diriger les recherches. It is an honor to earn the habilitation on the strength of reports by researchers as talented as you!

I am very honored that Fabienne Comte, Jean-Christophe Thalabard and Stéphane Robin agreed to sit on my defense jury. Stéphane, you are to me a model of statistical versatility. Jean-Christophe, I delight in and learn from each of our many conversations on statistics, medicine and causality. Fabienne, brilliant and far too modest, I want to thank you here for your unfailing human and scientific generosity. Your support over all these years, and particularly during the preparation of this habilitation, was decisive.

Besides Fabienne, I warmly thank my kind colleagues Valentine Genon-Catalot and Catherine Huber (as well as the late Minh-Thu Hoang) for their scientific expertise, which I called upon many times, and for the trust they showed by entrusting me with the vacant epidemiology courses, although I was then a complete novice.

In a way, I owe to these courses the awakening of a keen curiosity for applications of statistics to the biomedical sciences and for causality.
And so too, one thing leading to another, I owe to them my scientific relationship with Mark van der Laan, which has been and remains extremely enriching. Mark, I admire the unity, depth and power of your statistical vision. Being around you, exchanging and building with you are a tremendous privilege.

I would also like to say what a pleasure it is to work with my close colleague Adeline Samson and with Christophe Denis on the preparation of Christophe's doctorate; he has a bright future ahead of him.

I am happy to be able to thank most of my close collaborators not yet mentioned, whether our projects are completed or not, for all they have brought me: Isabelle Bonan, Jean Bouyer, Cristina Butucea, Michel Chavance, Dominique Choudat, Eric Denion, Isabelle Drouet, Daniel Eugène, Aurélien Garivier, Susan Gruber, Annamaria Guolo, Erwin Idoux, Christophe Magnani, Laurence Meyer, Lee Moore, Christian Néri, Pierre Neuvial, Grégory Nuel, Jean-Claude Pairon, Sherri Rose, Michael Rosenblum, Judith Rousseau, Aurore Schmitt, Wilson Toussile, Pascale Tubert-Bitter, Cristiano Sammy Varin, Pierre-Paul Vidal. Thanks also to Gilles Blanchard, Ivan Gentil and Catherine Matias, friends from the very beginning and accomplished researchers, with whom I have already had the chance to collaborate, or not... yet!

The MAP5 laboratory and the UFR de Mathématiques et Informatique are a very fulfilling working environment.
I warmly thank: Sylvain Durand, for steering my habilitation application with a sure hand, and Hermine Biermé, for the advice given along the way; Bernard Ycart, Christine Graffigne and Annie Raoult, enterprising successive directors of the laboratory; the invaluable Marie-Hélène Gbaguidi, administrator of the laboratory; the ingenious Vincent Delos, Azedine Mani, Maïk Mercuri, Laurent Moineau and Thierry Raedersdorff; all the members of MAP5 and of the UFR, with a pointed nod to my statistician colleagues past and present, Avner, Chantal, Elodie, Flora, Hector, Jean-Claude, Jérôme, Marie-Luce, Olivier, Rachid, Yves, and a special, fond mention for Servane Gey and Pierre Calka (still MAP5, at heart at least).

Thanks, in passing, to Norv and Kate Brasch, Re-Cheng and Jonathan Jaffe, Elissa and Alan Kittner, our American friends!

I dedicate this work to my four loves: Julie, Lou, Fausto and Claire.

TABLE DES MATIÈRES 7Table <strong>de</strong>s matièresIntroduction 11 Estimation et test <strong>de</strong> l’ordre d’une loi 31.1 Consistance (cas indépendant) . . . . . . . . . . . . . . . . . . . . . . . . . . . 31.1.1 Estimation par maximum <strong>de</strong> vraisemblance pénalisé . . . . . . . . . . . . 41.1.2 Longueur <strong>de</strong> co<strong>de</strong>, maximum <strong>de</strong> vraisemblance et estimation par longueur<strong>de</strong> co<strong>de</strong> pénalisée . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61.2 Vitesses <strong>de</strong> convergence (cas indépendant) . . . . . . . . . . . . . . . . . . . . . 81.2.1 Erreurs d’estimation et erreurs <strong>de</strong> test <strong>de</strong> l’ordre d’une loi . . . . . . . . . 81.2.2 Estimation par maximum <strong>de</strong> vraisemblance pénalisé . . . . . . . . . . . . 91.2.3 Estimation bayésienne . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101.3 Consistance (cas dépendant) . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121.3.1 Estimation <strong>de</strong> l’ordre d’un champ <strong>de</strong> ruptures par minimum <strong>de</strong> contrastepénalisé . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 121.3.2 Estimation <strong>de</strong> l’ordre d’une chaîne <strong>de</strong> Markov cachée par maximum <strong>de</strong>vraisemblance et longueur <strong>de</strong> co<strong>de</strong> pénalisés . . . . . . . . . . . . . . . . 151.3.3 Estimation <strong>de</strong> l’ordre d’une chaîne <strong>de</strong> Markov à régime markovien par maximum<strong>de</strong> vraisemblance et longueur <strong>de</strong> co<strong>de</strong> pénalisés . . . . . . . . . . . 161.4 Application à l’étu<strong>de</strong> du maintien postural (1/2) . . . . . . . . . . . . . . . . . . 201.4.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201.4.2 Description succincte <strong>de</strong>s données . . . . . . . . 
. . . . . . . . . . . . . 211.4.3 Un modèle <strong>de</strong> maintien postural . . . . . . . . . . . . . . . . . . . . . . 222 Estimation <strong>de</strong> l’importance <strong>de</strong> variables (cadre observationnel) 252.1 Amiante et cancer du poumon en France . . . . . . . . . . . . . . . . . . . . . . 262.1.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 262.1.2 Description succincte <strong>de</strong>s données . . . . . . . . . . . . . . . . . . . . . 272.1.3 Un modèle ad hoc <strong>de</strong> régression seuillée . . . . . . . . . . . . . . . . . . 282.1.4 Estimation par maximum <strong>de</strong> vraisemblance pondérée . . . . . . . . . . . 292.1.5 Nombre moyen d’années <strong>de</strong> vie perdues . . . . . . . . . . . . . . . . . . 312.2 A propos <strong>de</strong> l’estimation par minimisation <strong>de</strong> perte ciblée . . . . . . . . . . . . . 312.2.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 312.2.2 Le principe TMLE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 332.2.3 TMLE <strong>de</strong> l’excès <strong>de</strong> risque . . . . . . . . . . . . . . . . . . . . . . . . . 362.3 Probabilité <strong>de</strong> succès d’un programme <strong>de</strong> FIV en France . . . . . . . . . . . . . 372.3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

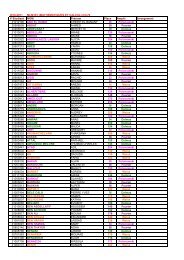

2.3.2 Description succincte <strong>de</strong>s données . . . . . . . . . . . . . . . . . . . . . 382.3.3 TMLE <strong>de</strong> la probabilité <strong>de</strong> succès d’un programme <strong>de</strong> FIV en France . . . 392.4 Mesure non-paramétrique <strong>de</strong> l’importance d’une variable . . . . . . . . . . . . . 412.4.1 Une mesure inédite . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 412.4.2 TMLE <strong>de</strong> la mesure non-paramétrique <strong>de</strong> l’importance d’une variable . . 422.4.3 Etu<strong>de</strong> <strong>de</strong> simulations inspirée <strong>de</strong>s données TCGA . . . . . . . . . . . . . 432.5 Application à l’étu<strong>de</strong> du maintien postural (2/2) . . . . . . . . . . . . . . . . . . 462.5.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 462.5.2 Classification selon le maintien postural . . . . . . . . . . . . . . . . . . 463 Estimation et test <strong>de</strong> l’importance <strong>de</strong> variables (cadre expérimental) 513.1 Ciblage <strong>de</strong>s analyses cliniques réponses-adaptatives . . . . . . . . . . . . . . . . 523.1.1 Ciblage du schéma optimal . . . . . . . . . . . . . . . . . . . . . . . . . 523.1.2 Etu<strong>de</strong> asymptotique <strong>de</strong> l’estimateur du maximum <strong>de</strong> vraisemblance . . . 543.1.3 Etu<strong>de</strong> asymptotique <strong>de</strong> la procédure <strong>de</strong> test groupes-séquentielle . . . . . 563.2 Ciblage <strong>de</strong>s analyses cliniques réponses-adaptatives et ajustées aux covariables . . 583.2.1 Formalisme statistique et i<strong>de</strong>ntification du schéma optimal . . . . . . . . 583.2.2 Modèle <strong>de</strong> travail, stratégie d’adaptation et initialisation <strong>de</strong> l’estimation . 593.2.3 Construction du TMLE . . . . . . . . . . . . . . . . . . . . . . . . . . . 613.2.4 Etu<strong>de</strong> asymptotique du TMLE . . . . . . . . . . . . . . . . . . 
. . . . . 623.2.5 Etu<strong>de</strong> asymptotique <strong>de</strong> la procédure <strong>de</strong> test groupes-séquentielle fondéesur le TMLE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63Perspectives 65Liste <strong>de</strong>s publications 69Bibliographie 70Curriculum Vitæ 79Articles 87Référence [A1] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99Référence [A2] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118Référence [A3] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131Référence [A4] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 138Référence [A5] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 146Référence [A6] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 160Référence [A7] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 176Référence [A8] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 193Référence [A9] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 201Référence [A10] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 213Référence [A11] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 221Référence [A12] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234Référence [A13] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 250Référence [A14] . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 271

Chapter 1

Estimation and testing of the order of a law

A first part of my work concerns the estimation and testing of the order of a law, whose statistical framework is as follows.

Consider the model $\mathcal{M} = \cup_{K \in \mathcal{K}} \mathcal{M}_K \subset \mathcal{M}_{\mathrm{NP}}$ (nonparametric model), where the collection $\mathcal{K}$ of indices is equipped with an order relation $\preceq$ for which the family of models $\{\mathcal{M}_K : K \in \mathcal{K}\}$ is possibly nested (i.e., possibly such that $K \preceq K'$ implies $\mathcal{M}_K \subset \mathcal{M}_{K'}$). For every $P \in \mathcal{M}_{\mathrm{NP}}$, the order $\Psi(P)$ of $P$ (relative to $\mathcal{M}$) is the index

$$\Psi(P) = \min\{K \in \mathcal{K} : P \in \mathcal{M}_K\}$$

(with the convention $\Psi(P) = \infty$ if $P \notin \mathcal{M}$). Given realizations obtained under $P$, it is a parameter of $P$ that one may wish to estimate, or to test: given $K_0 \in \mathcal{K}$, do we have "$\Psi(P) \preceq K_0$" or "$\Psi(P) \succ K_0$"?

The emblematic, historical examples are the estimation of the order of a time series, of a Markov chain (on a finite alphabet) and of a location mixture. I have not worked on the estimation of the order of a time series, but I owe much to the pioneering results obtained in [3, 40, 42], the first I read, which influenced other articles that directly inspired me and that I will cite later. Nor have I worked on the estimation of the order of a Markov chain; however, this problem is intimately linked to that of estimating the order of a hidden Markov chain (HMM, for "hidden Markov model"), to which I have devoted myself.
Finally, the case of location mixtures is one of the key examples considered in my articles.

Since my doctoral work [A1-A2], I have studied the asymptotic behavior of various estimators of the order of a law in terms of consistency and rates of convergence [A1-A6], from independent [A2-A3,A5] or dependent [A1,A4-A6] data, following frequentist [A1-A2,A4-A6], Bayesian [A3] and information-theoretic [A4-A6] approaches. I have illustrated my results with various simulation studies [A4-A5], and I have put order estimation methods to use in a biomedical application to the study of postural maintenance in humans [A5-A6]. Details follow.

1.1 Consistency of estimators of the order of a law from independent data [A2,A5]

In this section I gather a set of results obtained either alone [A2] or in collaboration with Aurélien Garivier (Laboratoire Traitement et Communication de l'Information, CNRS and Télécom ParisTech) and Elisabeth Gassiat (Laboratoire de Mathématiques, Université Paris Sud 11) [A5]. The driving principle of [A2] is to express the characteristic under-estimation and over-estimation events in terms of events concerning the empirical measure, so as to take advantage of the theory of empirical processes. Article [A5], for its part, relies on an approach inspired by information theory.

1.1.1 Estimation by penalized maximum likelihood

The results concern collections $\{\mathcal{M}_K : K \in \mathcal{K}\}$ of a parametric nature: each $\mathcal{M}_K$ is of the form $\mathcal{M}_K = \{P_\theta = p_\theta\, d\mu : \theta \in \Theta_K\}$, the collection of metric spaces $\{(\Theta_K, d_K) : K \in \mathcal{K}\}$ being nested ($K \le K'$ implies $\Theta_K \subset \Theta_{K'}$). Here one may assume without loss of generality that $\mathcal{K} \subset \mathbb{N}^*$; prior knowledge of an upper bound $K_{\max}$ on $\mathcal{K}$, when available, can greatly simplify the study of consistency. The following two examples fit into this framework.

Example. Location mixture. Let $C \subset \mathbb{R}$ be a compact set, $S_K = \{\pi \in \mathbb{R}_+^K : \sum_{k=1}^K \pi_k = 1\}$ the $K$-simplex and $\{\phi_m : m \in C\}$ a set of densities such that each $\phi_m\, d\mu$ has mean $m$ (hence the term "location"). For every $K \ge 1$, $\Theta_K = C^K \times S_K$ and $\mathcal{M}_K = \{p_\theta\, d\mu : \theta \in \Theta_K\}$ where $p_\theta = \sum_{k=1}^K \pi_k \phi_{m_k}$ (hence the term "mixture", $p_\theta\, d\mu$ being the marginal law of $X$ under the joint law of $(Z, X)$ such that $Z = m_k$ with probability $\pi_k$ and, given $Z$, $X$ has conditional density $\phi_Z$).
We shall be particularly interested in the cases where $\phi_m(\cdot) = \varphi((\cdot - m)/\sigma)$ is the density (with respect to the Lebesgue measure) of the law $N(m, \sigma^2)$ ($\sigma^2$ known) and where $\phi_m$ is the density (with respect to the counting measure) of the law Poisson($m$).

Example. Change-points (independent case). Let $C \subset \mathbb{R}$ be a compact set and $(\mathcal{X}, \mathcal{B}, p\, d\lambda)$ a probability space with $\mathcal{X} \subset \mathbb{R}^D$ ($D \ge 2$) an open set and $\lambda$ the Lebesgue measure. According to [56], there exists a metric $d$ on the set $CP_b$ of countable Caccioppoli partitions of $\mathcal{X}$ whose "perimeters" are bounded above by $b > 0$ such that $(CP_b, d)$ is a compact metric space. One can easily mark a partition $\tau = \{\tau_j\}_{j \ge 1} \in CP_b$, i.e. associate $m_j \in C$ with each $\tau_j$, and extend the metric $d$ so that the set of marked partitions of $CP_b$ equipped with this extended metric is a compact space. For every $K \ge 1$, we denote by $(\Theta_K, d)$ the compact metric space of marked partitions of $CP_b$ such that $\int_{\tau_j} p\, d\lambda = 0$ except for at most $K$ of the indices $j \ge 1$, and $\mathcal{M}_K = \{p_\theta\, d\mu\, d\lambda : \theta \in \Theta_K\}$ where $p_\theta(x, y)\, d\lambda(x)\, d\mu(y) = \varphi\big((y - \sum_{j \ge 1} m_j \mathbf{1}\{x \in \tau_j\})/\sigma\big)\, p(x)\, dx\, dy$ ($\varphi$ is the density of the law $N(0, 1)$).

The first example corresponds to the situation where one observes a certain trait in a heterogeneous population. It is delicate because the loss of identifiability when the order of the mixture is over-estimated entails in particular the singularity of the Fisher information matrix, which makes the use of Taylor expansions subtle, to say the least (we shall come back to this).
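The location mixture example can be made concrete with a short sketch. The Python code below is an illustration added under stated assumptions, not code from [A2] or [A5]: the function names, the parameter values and the use of the properly normalized $N(m, \sigma^2)$ density are choices made here. It samples $X$ through the latent label $Z$ described in the example and evaluates the log-likelihood $\sum_i \log p_\theta(O_i)$.

```python
import numpy as np


def sample_location_mixture(n, means, weights, sigma=1.0, rng=None):
    """Draw n observations from a Gaussian location mixture.

    Z = means[k] with probability weights[k]; given Z, X ~ N(Z, sigma^2).
    (Hypothetical helper, written for illustration only.)
    """
    rng = np.random.default_rng(rng)
    means = np.asarray(means, dtype=float)
    weights = np.asarray(weights, dtype=float)
    if means.ndim != 1 or means.shape != weights.shape:
        raise ValueError("means and weights must be 1-d arrays of equal length")
    if not np.isclose(weights.sum(), 1.0):
        raise ValueError("weights must sum to 1")
    z = rng.choice(means, size=n, p=weights)      # latent locations
    return z + sigma * rng.standard_normal(n)     # observed X


def log_likelihood(obs, means, weights, sigma=1.0):
    """Log-likelihood of theta = (means, weights) for the Gaussian mixture."""
    obs = np.asarray(obs, dtype=float)[:, None]           # shape (n, 1)
    means = np.asarray(means, dtype=float)[None, :]       # shape (1, K)
    weights = np.asarray(weights, dtype=float)            # shape (K,)
    dens = np.exp(-0.5 * ((obs - means) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))
    return float(np.sum(np.log(dens @ weights)))          # sum_i log p_theta(O_i)
```

The loss of identifiability mentioned above is visible directly: the parameter with means (1, 1) and weights (0.3, 0.7) in $\Theta_2$ and the parameter with mean 1 and weight 1 in $\Theta_1$ define the same law, so `log_likelihood` returns the same value for both on any sample.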
The second example (new in the literature devoted to identifying the order of a law) is inspired by the theme of variational image segmentation (except that, instead of observing the whole image, one reads it only at randomly drawn points). Note that in the second example the sets $\Theta_K$ are not finite-dimensional.

Let then $O_1, \dots, O_n$ be $n$ independent copies of $O \sim P_0 = p_0\, d\mu \in \mathcal{M}_{\mathrm{NP}}$, together with a collection $\{\mathcal{M}_K : K \in \mathcal{K}\}$ as described above. We denote by $P_n$ the associated empirical measure. We rely on the log-likelihood function $l_n(\theta) = \sum_{i=1}^n \log p_\theta(O_i)$ (all $\theta \in \cup_{K \in \mathcal{K}} \Theta_K$) to build our first estimator of the order of $P_0$ (called global in [A2], where a local version is also studied):

$$K_n^{\mathrm{ML}} = \min \arg\max_{K \in \mathcal{K}} \Big\{ \sup_{\theta \in \Theta_K} l_n(\theta) - \mathrm{pen}(n, K) \Big\}, \qquad (1.1)$$

where the indispensable penalty term pen must satisfy at least the following conditions: $\mathrm{pen}(n, \cdot)$ is increasing for all $n \ge 1$ and, for all $K \in \mathcal{K}$, $\lim_n \mathrm{pen}(n, K) = \infty$ and $\lim_n n^{-1} \mathrm{pen}(n, K) = 0$.

Case of a misspecified model.

Let KL denote the Kullback-Leibler divergence (defined by $\mathrm{KL}(P|Q) = P \log \frac{dP}{dQ}$ if $P \ll Q$, $\mathrm{KL}(P|Q) = \infty$ otherwise; for $\mathcal{M}$ a set of laws, we write $\mathrm{KL}(P|\mathcal{M}) = \inf_{Q \in \mathcal{M}} \mathrm{KL}(P|Q)$ and $\mathrm{KL}(\mathcal{M}|Q) = \inf_{P \in \mathcal{M}} \mathrm{KL}(P|Q)$).

It is easily seen that when the model is misspecified (i.e. when $P_0 \notin \mathcal{M}$), consistency (which takes a degenerate form since $\Psi(P_0) = \infty$) follows from the law of large numbers for $P_n$.

Specifically, under very general conditions essentially meant to guarantee that the classes $\mathcal{G}_K = \{\log p_\theta - \log p_0 : \theta \in \Theta_K\}$ of log-likelihood ratios are $P_0$-Glivenko-Cantelli, if for all $K$ [...]

[...] greater "regularity" of the log-likelihoods. Concretely, by substituting, via "peeling", the bound (valid for all $K' > K \ge \Psi(P_0)$)

$$\left( \sup_{\theta \in \Theta_{K'}} \left| (P_n - P_0)\, \frac{\log p_\theta - \log p_0}{\mathrm{KL}(P_0|P_\theta)^{1/2}} \right| \right)^2 \ge \sup_{\theta \in \Theta_{K'}} P_n \log p_\theta - \sup_{\theta \in \Theta_K} P_n \log p_\theta \equiv \Delta_n(K, K')$$

for the more obvious bound $\sup_{\theta \in \Theta_{K'}} |(P_n - P_0)(\log p_\theta - \log p_0)| \ge \Delta_n(K, K')$ (cf. Proposition A.1 in [A2]), one can replace (1.2) by

$$\limsup_n \frac{\log\log n}{\mathrm{pen}(n, K)} = 0, \qquad (1.3)$$

if the classes $\left\{ \frac{\log p_\theta - \log p_0}{\mathrm{KL}(P_0|P_\theta)^{1/2}} : \theta \in \Theta_K,\ \mathrm{KL}(P_0|P_\theta) > 0 \right\}$ of renormalized log-likelihoods (rather than the $\mathcal{G}_K$) are $P_0$-Donsker (cf. Theorem 4 in [A2]). This result applies to the location mixture example (Gaussian case).

1.1.2 Code length, maximum likelihood and estimation by penalized code length

The question of estimating the order of a model is also highly relevant in information theory, where it is tied to questions of optimality of coding procedures.

Let $\mathcal{A}$ be a finite alphabet and $\mathcal{A}^n$ the set of words of $n$ letters over $\mathcal{A}$. It turns out that one can associate, in a generic way, a coding of $\mathcal{A}^n$ with any probability law on $\mathcal{A}^n$ in such a way that the code length of any word is proportional to (minus) the logarithm of the probability of that word (hence the term "coding probability"; this is a consequence of the Kraft-McMillan theorem, cf. [18]).
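The correspondence between probability laws and codes can be illustrated with a minimal sketch. The Python code below uses the standard Shannon code lengths $\lceil -\log_2 p(w) \rceil$, which is an assumption chosen here for illustration (the text only states that such a correspondence exists); the function names and the toy law are hypothetical. It checks the Kraft inequality $\sum_w 2^{-\ell(w)} \le 1$, which by the Kraft-McMillan theorem guarantees that a uniquely decodable binary code with these lengths exists.

```python
import math


def code_lengths(probabilities):
    """Shannon code lengths ceil(-log2 p(w)) for each word w with p(w) > 0."""
    return {
        word: math.ceil(-math.log2(p))
        for word, p in probabilities.items()
        if p > 0
    }


def kraft_sum(lengths):
    """Left-hand side of the Kraft inequality: sum over words of 2^(-length)."""
    return sum(2.0 ** (-length) for length in lengths.values())


# Toy law on the words of length 2 over the alphabet {a, b} (illustrative values).
law = {"aa": 0.5, "ab": 0.25, "ba": 0.125, "bb": 0.125}
lengths = code_lengths(law)
```

For a dyadic law such as the toy one above, the lengths equal $-\log_2 p(w)$ exactly and the Kraft sum is 1; for a general law the rounding makes the sum at most 1.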
By exploiting inequalities comparing the lengths of two codes built on two coding probabilities (one written as the suitably renormalized maximum likelihood, the other as a "Krichevsky-Trofimov mixture"), Finesso [26] and Liu and Narayan [58] proved the consistency of two estimators of the order of a law (one of which is a penalized maximum likelihood estimator) under the assumption that an a priori bound on the order of the law is known, when this law is a Markov chain (its order is then its memory parameter) or an HMM (its order is then the cardinality of the state space of the hidden Markov chain) with emissions in a finite alphabet. By exploiting such inequalities more finely, Gassiat and Boucheron [32] managed to dispense with the need for an a priori bound in the HMM case. In their wake, we considered in [A5] the case of an HMM with Gaussian or Poisson emissions (hence an infinite alphabet) and its degenerate form (memory of the Markov chain equal to zero), which coincides with our location mixture example.

Let us thus place ourselves in the framework of our location mixture example.

Let $K \in \mathcal{K} = \mathbb{N}^*$. Denote by $\delta_K$ the density of the Dirichlet law with parameter $(\frac{1}{2}, \dots, \frac{1}{2})$ on $S_K$ and by $\gamma_\tau$ that of the law Gamma($\tau, \frac{1}{2}$). Let $\nu_K$ be the law on $\Theta_K = C^K \times S_K$ such that $d\nu_K((m_1, \dots, m_K), \pi) = \prod_{k=1}^K \varphi(m_k/\tau)\, dm_k \times \delta_K(\pi)\, d\pi$ (Gaussian case) or $d\nu_K((m_1, \dots, m_K), \pi) = \prod_{k=1}^K \gamma_\tau(m_k)\, dm_k \times \delta_K(\pi)\, d\pi$ (Poisson case).
We associate with $\nu_K$ the code length statistic

$$lc_n(K) = -\log \int_{\Theta_K} \prod_{i=1}^n p_\theta(O_i)\, d\nu_K(\theta), \qquad (1.4)$$

so called because the argument of the logarithm can be seen as a certain coding probability (of "Krichevsky-Trofimov mixture" type), evaluated at $(O_1, \dots, O_n)$. The choice of $\nu_K$ is pragmatic: it makes it possible to compute the exact value of $lc_n(K)$! Since the emission alphabet is not finite, it is not possible to devise a coding probability based on the maximum likelihood (because it cannot be renormalized properly), and the statistic $-\sup_{\theta \in \Theta_K} l_n(\theta)$ cannot be interpreted as a code length statistic (up to an additive constant) evaluated at $(O_1, \dots, O_n)$. One can nevertheless still compare $lc_n(K)$ and $-\sup_{\theta \in \Theta_K} l_n(\theta)$, with bounds of the form (cf. Theorem 2 in [A5])

$$0 \le lc_n(K) - \Big( -\sup_{\theta \in \Theta_K} l_n(\theta) \Big) \le \frac{1}{2} \dim(\Theta_K) \log n + K R_n + r_{Kn} \qquad (1.5)$$

where $R_n$ is a random term ($R_n = \max_{i \le n} O_i^2 / 2\tau^2$ in the Gaussian case and $R_n = \tau \max_{i \le n} O_i$ in the Poisson case) and $r_{Kn}$ is a deterministic term (known explicitly), negligible compared with the two preceding terms.

Two features set (1.5) apart from its counterparts in [26, 58, 32], the price to pay for the infiniteness of the emission alphabet: first, the absence of a strictly positive lower bound (due in particular to the fact that the maximum likelihood has not been renormalized into a coding law); second, the appearance of the random term $R_n$ in the upper bound. One does, however, recover the first term, of BIC type, with the peculiarity that it is the dominant term in the Poisson case ($R_n = O_P(\log n / (\log\log n)^{1/2})$, cf. Lemma 4 in [A5]) but not in the Gaussian case ($R_n = O_P(\log n)$, cf. Lemma 3 in [A5]).

Finally, consider the second estimator of the order of $P_0$, based on the code length statistic $lc_n(K)$:

$$K_n^{lc} = \min \arg\min_{K \in \mathcal{K}} \{ lc_n(K) + \mathrm{pen}(n, K) \} \qquad (1.6)$$

for a penalty term pen to be calibrated. The estimator $K_n^{lc}$ is of MDL type (for "minimum description length", the model selection principle introduced by Rissanen [66]).

One manages to prove that, even without knowing an a priori bound on $\Psi(P_0)$, asymptotically $K_n^{\mathrm{ML}} = K_n^{lc} = \Psi(P_0)$ $P_0$-almost surely as soon as

$$\mathrm{pen}(n, K) = \frac{1}{2} \sum_{k=1}^K (\alpha + \dim(\Theta_k)) \log n + S_{Kn} + s_{Kn}$$

for any $\alpha > 2$, the terms $S_{Kn}$ and $s_{Kn}$ being devoted to controlling, respectively, $R_n$ in (1.5) ($S_{Kn}$ is thus $O(\log n)$ in the Gaussian case and $O(\log n / (\log\log n)^{1/2})$ in the Poisson case) and $r_{Kn}$ (cf. Theorems 5 and 6 in [A5]).

The proof relies essentially on the law of large numbers for $P_n$ (which shows that $\Psi(P_0)$ is not under-estimated asymptotically) and on (1.5) combined with a change of probability and the Borel-Cantelli lemma (to show that $\Psi(P_0)$ is not over-estimated asymptotically). The penalty, a heavy one, is reminiscent of that of the BIC criterion, but in a cumulative form (with, in addition, the control term $S_{Kn}$). It is legitimate to ask whether penalization is really necessary in the definition of $K_n^{lc}$: after all, $K_n^{lc}$ also falls under the Bayesian paradigm, and should as such benefit from the self-penalization effect enjoyed by Bayesian estimators [46].
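The selection rule (1.1) with the cumulative BIC-type penalty can be sketched as follows. This Python code is a simplified illustration under explicit assumptions, not the procedure of [A5]: the control terms $S_{Kn}$ and $s_{Kn}$ are omitted, the search runs over a finite range $1, \dots, K_{\max}$ fixed by the length of the input, the dimension $\dim(\Theta_k) = 2k - 1$ (k means plus k - 1 free weights) is assumed for the location mixture, and the maximized log-likelihoods are taken as given inputs. All names are hypothetical.

```python
import math


def cumulative_bic_penalty(n, K, alpha=2.5, dim=lambda k: 2 * k - 1):
    """(1/2) * sum_{k<=K} (alpha + dim(Theta_k)) * log n.

    Simplification: the control terms S_Kn and s_Kn of the text are left out.
    dim(k) = 2k - 1 is an assumed dimension for the location mixture.
    """
    return 0.5 * sum(alpha + dim(k) for k in range(1, K + 1)) * math.log(n)


def select_order(max_loglik, n, alpha=2.5):
    """Penalized maximum likelihood order, "min arg max" over K = 1..K_max.

    max_loglik[K - 1] is assumed to hold sup over Theta_K of l_n(theta).
    """
    criterion = [
        loglik - cumulative_bic_penalty(n, K, alpha)
        for K, loglik in enumerate(max_loglik, start=1)
    ]
    # list.index returns the first maximizer, i.e. the smallest K ("min arg max").
    return 1 + criterion.index(max(criterion))


# Illustrative values only: a large gain from K = 1 to K = 2, tiny gains afterwards.
example_logliks = [-2100.0, -1900.0, -1899.0, -1898.5]
example_order = select_order(example_logliks, n=1000)
```

With these illustrative inputs the gain in log-likelihood beyond $K = 2$ is smaller than the increase in penalty, so `example_order` is 2. The same function applies to (1.6) after replacing `loglik` by $-lc_n(K)$.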
For example, we mentioned earlier how the BIC criterion follows from a Laplace expansion of the integrated likelihood: here this would read $lc_n(K) = -\sup_{\theta \in \Theta_K} l_n(\theta) + \frac{1}{2} \dim(\Theta_K) \log n + O_P(1)$ if the model were "regular" (in the sense of [77], which it is not!). In the same vein, I showed with Judith Rousseau (cf. [A3] and Section 1.2.3) that $\Psi(P_0)$ can be estimated efficiently in the location mixture setting (Gaussian case) by relying on comparisons of marginal likelihoods without penalizing them. There are, however, examples where an unpenalized version of $K_n^{lc}$ is inconsistent whereas $K_n^{\mathrm{ML}}$ is consistent [19].

Remark. The derivation by Aurélien Garivier, Elisabeth Gassiat and myself of the consistency result for $K_n^{\mathrm{ML}}$ in the mixture model in the absence of an a priori bound on $\Psi(P_0)$ was a nice achievement, despite the certainly suboptimal form of the associated penalty. We know, since the tour de force recently accomplished by Gassiat and Van Handel [33], that a penalty of the form $\mathrm{pen}(n, K) = K\omega(n)$ with $\log\log n = o(\omega(n))$ (we recover our condition (1.3)) suffices to guarantee the consistency of $K_n^{\mathrm{ML}}$ (in the Gaussian case) in the absence of an a priori bound on $\Psi(P_0)$. Moreover, this form is optimal in the sense that the choice $\mathrm{pen}(n, K) = \delta K \log\log n$ for $\delta > 0$ small enough leads to $K_n^{\mathrm{ML}} \ne \Psi(P_0)$ infinitely often, $P_0$-almost surely (cf. [33, Proposition 4.4]; in addition, their result extends to the case where the set $C$ is not compact, cf. [33, Proposition 4.5]).

1.2 Rates of convergence of estimators of the order of a law from independent data [A2,A3]

In this section I gather a set of results obtained either alone [A2] or in collaboration with Judith Rousseau (Laboratoire CEREMADE, Université Paris Dauphine) [A3].
Recall that the driving principle of [A2] is to express the events that characterize under-estimation and over-estimation in terms of events concerning the empirical measure, so as to take advantage of empirical process theory. The article [A3], for its part, rests on a Bayesian approach and on Bayesian nonparametric techniques.

1.2.1 Estimation errors and testing errors for the order of a law

Let $K_{\max}$ be an a priori bound such that $\Psi(P_0) \in \mathcal{K} = \{1,\ldots,K_{\max}\}$, and let $K_0 \in \mathcal{K}\setminus\{K_{\max}\}$. We wish to test "$\Psi(P_0) \le K_0$" (null hypothesis) against "$\Psi(P_0) > K_0$", or equivalently "$P_0 \in \mathcal{M}_{K_0}$" against "$P_0 \notin \mathcal{M}_{K_0}$". If $K_n$ is an estimator of $\Psi(P_0)$ built from $P_n$, then it is natural to reject the null hypothesis when $K_n > K_0$. Denoting by $\alpha_n$ and $\beta_n$ the type I and type II errors of such a test, one readily sees that $\alpha_n \le P_0(K_n > K_0)$ and $\beta_n \le P_0(K_n < [\ldots])$. This raises the following questions:
– Do there exist $e_u > 0$ and $e_o > 0$ such that $\limsup_n n^{-1}\log P_0(K_n < [\ldots]) \le -e_u$ and $\limsup_n n^{-1}\log P_0(K_n > K_0) \le -e_o$?
– If so, can $e_u$ and $e_o$ be arbitrarily large?
– If not, what happens at a sub-exponential rate?

We present in Sections 1.2.2 and 1.2.3 a set of answers to these questions, drawn from [A2] and [A3].

The study of procedures for testing the order of a law in terms of rates of convergence has attracted less work than the study of consistency properties. Without claiming to be exhaustive, one may cite (chronologically) [41, exponential models], [25, Markov model with finite state space], [20, 21, mixture model and ARMA processes], [36, models characterized by the existence of a sufficient statistic], [49, regular models], [32, HMM with emissions in a finite alphabet] and [12, autoregressive process].

Note that [A2] and [32, 12] adopt a similar approach to treat the problem in different settings. They contain in particular three partial answers to the first two questions above, all three obtained as corollaries of Stein's lemma [4, Theorem 2.1]. The answers were, curiously, new in the i.i.d. setting of [A2] (cf. Lemma 3 and Theorem 6 in [A2]): whatever the estimator $K_n$ of $\Psi(P_0)$, if $\limsup_n P_0(K_n < \Psi(P_0)) < 1$ and $\limsup_n P_0(K_n > \Psi(P_0)) < 1$, then
\[ \lim_n \frac{1}{n}\log P_0(K_n > \Psi(P_0)) = 0, \tag{1.7} \]
\[ \liminf_n \frac{1}{n}\log P_0(K_n < \Psi(P_0)) \ge -\mathrm{KL}(\mathcal{M}_{\Psi(P_0)-1} \mid P_0). \tag{1.8} \]
Thus, if $K_n$ has under-estimation and over-estimation probabilities of $\Psi(P_0)$ that stay away from 0 and 1, then (1.7) shows that the over-estimation rate cannot be exponential in $n$, whereas (1.8) shows that the under-estimation rate can be exponential in $n$ and, moreover, identifies an optimal exponent (the right-hand side of (1.8)).

1.2.2 Estimation by penalized maximum likelihood

Under-estimation rate.

The most striking result of [A2] concerns the under-estimation rate of $K_n^{\mathrm{ML}}$ (a comparable result for the local version of $K_n^{\mathrm{ML}}$ is also obtained).
It follows from the observation that the under-estimation event pertains to the large deviations of $P_n$.

More specifically, under a set of weak conditions, including the assumption that the log-likelihoods admit one (rather than every) exponential moment with respect to $P_0$ as well as an assumption of the "existence of finite sieves" type (cf. Theorem 7, condition (ii) in [A2]), there exists a constant $c > 0$ such that
\[ \limsup_n \frac{1}{n}\log P_0(K_n^{\mathrm{ML}} < \Psi(P_0)) \le -c, \tag{1.9} \]
which shows that the under-estimation probability does decay exponentially in $n$ (cf. Theorem 7 in [A2]). This result applies to the location mixture example (Gaussian case) and also to the change-point example.

This theorem benefited from a happy coincidence: just as I was tackling the study of the large deviations of $P_n$, a result due to Léonard and Najim [55] appeared, which turned out to be literally ad hoc for concluding (cf. the justification provided in Remark 2 of [A2])!

Unsurprisingly, the constant $c$ is expressed as the infimum of the rate function of the large deviation principle over a certain set. Under a new set of assumptions, the most constraining of which requires the models $\mathcal{M}_K$ to be exponential (our location mixture and change-point examples are therefore excluded), it proved possible to show that $c = \mathrm{KL}(\mathcal{M}_{\Psi(P_0)-1} \mid P_0)$, that is, that the under-estimation rate of $K_n^{\mathrm{ML}}$ is exponential in $n$ and optimal (in view of (1.8); cf. Theorem 8 in [A2]).
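To make the link between (1.8) and (1.9) explicit, here is a short derivation sketch of why the constant $c$ can never exceed the Stein exponent. It assumes (our reading) that (1.8) is a lim inf bound and that $K_n^{\mathrm{ML}}$ satisfies the hypotheses under which (1.8) holds; the shorthand $p_n$ is ours.

```latex
% p_n: shorthand (not in the original) for the under-estimation probability
\begin{align*}
p_n &:= P_0\bigl(K_n^{\mathrm{ML}} < \Psi(P_0)\bigr), \\
-\mathrm{KL}\bigl(\mathcal{M}_{\Psi(P_0)-1} \mid P_0\bigr)
  &\le \liminf_n \tfrac{1}{n}\log p_n && \text{by (1.8)} \\
  &\le \limsup_n \tfrac{1}{n}\log p_n \le -c && \text{by (1.9)},
\end{align*}
so that $0 < c \le \mathrm{KL}\bigl(\mathcal{M}_{\Psi(P_0)-1} \mid P_0\bigr)$.
```

Under these assumptions, the case $c = \mathrm{KL}(\mathcal{M}_{\Psi(P_0)-1} \mid P_0)$ forces the lim inf and lim sup to coincide, so $\frac{1}{n}\log p_n$ then converges to $-\mathrm{KL}(\mathcal{M}_{\Psi(P_0)-1} \mid P_0)$.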

The proof of this optimality result is geometric in nature, in the sense of the KL divergence, with one peculiarity: the KL projections involved are called reversed because they are taken with respect to the second argument of KL rather than the first (the most classical case). One therefore cannot, a priori, invoke the kind of "Pythagorean inequality" enjoyed by classical KL projections. Fortunately, reversed KL projections also satisfy a Pythagorean inequality for exponential models, as proved in the spirit of [16] (cf. Lemma 5 in [A2]).

Over-estimation rate.

Whereas the under-estimation event pertains to the large deviations of $P_n$, the over-estimation event is intrinsically linked to the moderate deviations of $P_n$.

Specifically, provided that the class $\mathcal{G}_{K_{\max}}$ is $P_0$-Donsker and has an envelope function admitting an exponential moment with respect to $P_0$, if for instance $\mathrm{pen}(n,K) = D(K)\omega(n)$ with $D$ increasing and $\omega(n) = n^{1-\delta}$ (for $\delta \in\, ]0,1/2[$), then
\[ \limsup_n \frac{1}{n^{1-2\delta}}\log P_0(K_n^{\mathrm{ML}} > \Psi(P_0)) < 0 \]
[…] provided that the class of renormalized log-likelihoods
\[ \left\{ \frac{\log p_\theta - \log p_0}{\mathrm{KL}(P_0 \mid P_\theta)^{1/2}} : \theta \in \Theta_{K_{\max}},\ \mathrm{KL}(P_0 \mid P_\theta) > 0 \right\} \]
(rather than $\mathcal{G}_{K_{\max}}$) is $P_0$-Donsker and has an envelope function admitting an exponential moment with respect to $P_0$.
This is rather constraining, and this second result does not apply to the location mixture example.

1.2.3 Bayesian estimation

The Bayesian literature devoted to estimation in mixture models, and hence in particular to the selection of the number of components (i.e. of the order of the law), is vast. By contrast, when Judith Rousseau and I undertook [A3] there was, to our knowledge, no work specifically dedicated to obtaining frequentist rates of convergence for Bayesian estimators of the order of a law in a non-regular setting. Admittedly, Ishwaran et al. [45] study a Bayesian estimator of the mixing distribution (the law $\sum_{k=1}^{K} \pi_k \mathrm{Dirac}_{m_k}$ in the notation of our location mixture example) when the number of components is unknown (and bounded a priori), but estimating the order of the law from the estimator of the mixing distribution would undoubtedly be sub-optimal, since the latter converges at the modest rate $n^{-1/4}$.

So let $\Pi$ be a prior on $\cup_{k=1}^{K_{\max}} \Theta_k$ (the $\Theta_k$ being parametric) satisfying $d\Pi(\theta) = \pi(k)\pi_k(\theta)\,d\theta$ (for all $\theta \in \Theta_k$ and $k \le K_{\max}$). We denote by $\Pi(k \mid P_n)$ the resulting posterior on $\mathcal{K}$, from which we build our Bayesian estimator of $\Psi(P_0)$:
\[ K_n^{B} = \min\{k \le K_{\max} : \Pi(k \mid P_n) \ge \Pi(k+1 \mid P_n)\}. \]
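The selection rule above can be sketched in a few lines, assuming the log prior weights $\log\pi(k)$ and the log integrated likelihoods $\log\int e^{l_n(\theta)}\pi_k(\theta)\,d\theta$ have already been computed by some other means. The function names are hypothetical, and treating $\Pi(K_{\max}+1 \mid P_n)$ as 0 is our convention, not one stated in the text.

```python
import math

def posterior_over_orders(log_prior, log_marginal):
    """Return [Pi(1|P_n), ..., Pi(K_max|P_n)] from log pi(k) and the log
    integrated likelihood of each model k (Bayes' rule on the order)."""
    scores = [lp + lm for lp, lm in zip(log_prior, log_marginal)]
    top = max(scores)  # subtract the max before exponentiating, for stability
    weights = [math.exp(s - top) for s in scores]
    total = sum(weights)
    return [w / total for w in weights]

def local_bayes_order(posterior):
    """Smallest k (1-based) such that Pi(k|P_n) >= Pi(k+1|P_n).
    Assumption: Pi(K_max + 1 | P_n) is taken to be 0."""
    if not posterior:
        raise ValueError("posterior must be non-empty")
    padded = list(posterior) + [0.0]
    for k in range(len(posterior)):
        if padded[k] >= padded[k + 1]:
            return k + 1
    return len(posterior)  # not reached: the last comparison always holds

# Usage with made-up log marginal likelihoods and a uniform prior on {1,..,4}
post = posterior_over_orders([math.log(0.25)] * 4, [-10.0, -8.0, -8.5, -7.9])
k_hat = local_bayes_order(post)
```

Because the rule stops at the first local maximum of the posterior, it can return a smaller order than the posterior mode, as in this made-up example where the mode is at $k = 4$.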

This estimator is said to be local; we also study a global version in [A3]. As discussed earlier, $K_n^{B}$ enjoys a form of self-penalization, so there is no need to introduce a counterpart to the penalties used in the definitions of $K_n^{\mathrm{ML}}$ and $K_n^{\mathrm{lc}}$.

Under-estimation rate.

By adopting a Bayesian nonparametric technique in the spirit of the seminal article [34], one obtains under classical assumptions that there exist two constants $c, c' > 0$ (known explicitly) such that, for all $n \ge 1$,
\[ \frac{1}{n}\log P_0(K_n^{B} < \Psi(P_0)) \le -c + \frac{\log c'}{n}, \tag{1.11} \]
which shows that the under-estimation probability of $K_n^{B}$ does decay exponentially in $n$ (cf. Theorem 1 in [A3]). This result applies in particular to the location mixture example (Gaussian case, even with the possibility of attaching a different variance to each state of the mixture, cf. Corollary 1 in [A3]) as well as to a temporal version of the change-point example (where $\mathcal{X}$ would equal $\mathbb{R}$ and the partitions would be determined by a finite number of change points).
It should be noted that the constant $c$ in (1.11) is smaller than the optimal constant derived from Stein's lemma, which appears on the right-hand side of (1.8).

The set of assumptions underlying (1.11) requires in particular that the functions $(\log p_\theta - \log p_0)$ admit one (rather than every) exponential moment with respect to $P_0$, at least for $\theta$ in a $\delta$-neighborhood $S_\delta^{k}$ of $\theta_k^{0}$, where $p_{\theta_k^{0}}$ is the density of the (reversed) KL projection of $P_0$ onto $\mathcal{M}_k$, and that these neighborhoods $S_\delta^{k}$ be charged by the prior $\pi_k$. The proof rests on the construction of tests of neighborhoods (cf. Proposition B.1 in [A3]) and on a chaining argument, both inspired by [34].

Over-estimation rate.

Still in the Bayesian nonparametric spirit, one obtains under another set of assumptions that there exist three constants $c_1, c_2, c_3 > 0$ (whose nature we discuss below) such that, for all $n \ge 3$,
\[ \frac{1}{\log n}\log P_0(K_n^{B} > \Psi(P_0)) \le -c_1 + c_2\frac{\log\log n}{\log n} + \frac{c_3}{\log n} \tag{1.12} \]
(cf. Theorem 3 in [A3]). This result should be compared with (1.10).

Among the assumptions required to obtain (1.12) is a condition on how the prior mass of the $\delta_n$-neighborhoods $S_{\delta_n}^{\Psi(P_0)+1}$ decays as $\delta_n \to 0$ in $\Theta_{\Psi(P_0)+1}$. This condition involves a notion of effective dimension $D_1(\Psi(P_0)+1)$ of $\Theta_{\Psi(P_0)+1}$ relative to $\Theta_{\Psi(P_0)}$.
It may differ from the vector-space dimension, with for instance $D_1(\Psi(P_0)+1) = \dim(\Theta_{\Psi(P_0)+1}) - 1 = \dim(\Theta_{\Psi(P_0)}) + 1$ for the location mixture model (Gaussian case) and $D_1(\Psi(P_0)+1) = \dim(\Theta_{\Psi(P_0)+1}) + \Psi(P_0) - 2 = \dim(\Theta_{\Psi(P_0)}) + \Psi(P_0)$ for the change-point model. A second condition replaces the requirement that a Laplace expansion of the integrated likelihood exist. It introduces another notion of effective dimension $D_2(\Psi(P_0))$ of $\Theta_{\Psi(P_0)}$, which may coincide with the vector-space dimension (as for the location mixture model, Gaussian case) or not (as for the change-point model). The constants $c_2$ and $c_3$ depend explicitly (and almost exclusively) on the dimensions $D_1(\Psi(P_0)+1)$ and $D_2(\Psi(P_0))$. Finally, the last assumption really worth mentioning is expressed in terms of a control of the entropy of rings of the form $S_{2(j+1)\delta_n}^{\Psi(P_0)+1} \setminus S_{2j\delta_n}^{\Psi(P_0)+1}$.

The result (1.12) applies in particular to the location mixture example (Gaussian case, again with the possibility of attaching a different variance to each state of the mixture) as well as to the temporal version of the change-point example mentioned earlier. In the location mixture example, one thus obtains $P_0(K_n^{B} > \Psi(P_0)) = O((\log n)^{3\Psi(P_0)}/n^{1/2})$ (cf. Theorem 4 in [A3]). Checking the assumptions is delicate. It involves in particular the study of the geometric structure of the mixture, which we tackled using the concept of locally conic parametrization initially introduced and exploited in [21].

1.3 Consistency of estimators of the order of a law from dependent data [A1,A4-A6]

I gather in this section a set of results obtained either alone [A1], or in collaboration with Catherine Matias (Laboratoire Statistique et Génome, CNRS et Université d'Evry Val d'Essonne) [A4], Aurélien Garivier (Laboratoire Traitement et Communication de l'Information, CNRS et Télécom ParisTech) and Elisabeth Gassiat (Laboratoire de Mathématiques, Université Paris Sud 11) [A5].
The articles [A5,A4] rest on an approach inspired by information theory. The article [A1] implements an ad hoc approach pertaining to M-estimation.

1.3.1 Estimation of the order of a change-point field by penalized minimum contrast

Detection of change points in a random field.

The change-point detection problem covers a broad spectrum of topics [7, 13, 15] unified by a common framework: the observation of a random process whose law is globally heterogeneous but locally homogeneous. The most studied case is undoubtedly that where the random process is a time series (or several time series observed simultaneously), with very diverse biomedical applications including, for example, the search for regions of DNA copy number gain or loss (cf. Section 2.4) or the study of postural maintenance (cf. Section 1.4). In [A1] (my first published work), the random process of interest is a random field indexed by $\mathbb{R}^D$ with values in $\mathbb{R}^q$, which is only partially observed, at randomly drawn points. The article [A1] nevertheless rests on an adaptation of the techniques developed by Lavielle [51] and Lavielle and Moulines [52] to detect change points in a continuously monitored time series ($D = q = 1$).

The statistical framework of [A1] can be summarized succinctly as follows.

Let $(Y_x)_{x\in\mathcal{X}}$ be a field of (possibly dependent) random variables.
This field is observed pointwise at $(X_i)_{i\ge 1}$, a sequence of i.i.d. random variables drawn independently of the field, whence $(Y_i \equiv Y_{X_i})_{i\ge 1}$. For every $K \in \mathcal{K} = \{1,\ldots,K_{\max}\}$, let $\mathcal{M}'_K = \{P_\theta : \theta\in\Theta_K\}$ be a set of candidate laws for the sequence of observations $(O_i \equiv (X_i, Y_i))_{i\ge 1}$ such that:
– the common marginal law of the $X_i$ under $P_\theta$ depends neither on $\theta$ nor on $K$;
– each $\theta = \{(\tau_k, \vartheta_k)\}_{k\le K} \in \Theta_K$ consists of a partition $\tau = \{\tau_k\}_{k\le K}$ (of cardinality $K$) of $\mathcal{X}$ marked by a family $\{\vartheta_k\}_{k\le K}$ of pairwise distinct finite-dimensional parameters;
– under $P_\theta$, the conditional law of $Y_i$ given $X_i$ depends on $\vartheta_k$ if and only if (iff) $X_i \in \tau_k$.

Let $\mathcal{M}_K = \cup_{k\le K}\mathcal{M}'_k$ (the family of models $\{\mathcal{M}_K : K \le K_{\max}\}$ is thus nested). Assuming that the true law $P_0$ of $(O_i)_{i\ge 1}$ satisfies $P_0 \in \mathcal{M} = \cup_{K\le K_{\max}}\mathcal{M}_K$ (in other words, that the model $\mathcal{M}$ is well specified), the statistical objective is to estimate the order of the law $P_0$ and the marked partition $\theta^0 = \{(\tau_k^0, \vartheta_k^0)\}_{k\le\Psi(P_0)} \in \Theta_{\Psi(P_0)}$ such that $P_0 = P_{\theta^0}$.

To this end, we assume that an ad hoc contrast function $(\vartheta,\vartheta') \mapsto w(\vartheta,\vartheta')$ is available, such that:
– $w(\vartheta,\vartheta') \ge 0$ with equality iff $\vartheta = \vartheta'$;
– there exist $\psi_1$, $\psi_2$ (continuously differentiable) and $\xi$ (guaranteeing that $\xi(Y_{X_1})$ and each $\xi(Y_x)$ admit a first-order moment) such that the decomposition $w(\vartheta_k^0, \vartheta) = \psi_1(\vartheta) + \psi_2(\vartheta)^\top E(\xi(Y_x) \mid X = x)$ is valid for every $k \le \Psi(P_0)$, $x \in \tau_k^0$ and parameter $\vartheta$.

Obviously, the contrast function depends on the nature of the changes of interest: for example changes in mean (cf. the example below), or in mean and variance (a case also considered in [A1]).

Example. Change points (dependent case). Let $C \subset \mathbb{R}$ be a compact set and $(\mathcal{X}, \mathcal{B}, P_X)$ a probability space with $\mathcal{X} \subset \mathbb{R}^D$ ($D \ge 2$). Let $\mathcal{F}_0 \subset \mathcal{B}$ be a fixed set and $\mathcal{F}$ the set of all finite unions (and of all intersections) of elements (of pairs of elements) of $\mathcal{F}_0$. We denote by $\mathcal{P}\mathcal{F}_0$ the set of finite partitions $\tau = \{\tau_j\}_{j\in J}$ of $\mathcal{X}$ such that each $\tau_j$ is a finite union of elements $\tau_{jl} \in \mathcal{F}_0$ that are pairwise disjoint and such that $\min_l P_X(\tau_{jl}) \ge \delta > 0$. In particular, the partitions consist of at most $K_{\max}$ elements. We assume that $\delta$ (or a lower bound on $\delta$) is known. One can easily mark a partition $\tau = \{\tau_j\}_{j\in J} \in \mathcal{P}\mathcal{F}_0$, i.e. associate $m_j \in C$ with each $\tau_j$.
For every $K \ge 1$, we denote by $(\Theta_K, d)$ the space, endowed with a pseudo-distance $d$, of marked partitions of $\mathcal{P}\mathcal{F}_0$ such that (a) $\mathrm{card}(J) = K$, (b) the marks $m_j$ are pairwise distinct. Finally, there exists a strictly stationary field $(Y'_x)_{x\in\mathcal{X}}$ of centered real random variables with common variance such that, for every $K \ge 1$, $\mathcal{M}'_K = \{P_\theta : \theta\in\Theta_K\}$ is the set of laws of $O_1 = (X_1,Y_1), \ldots, O_n = (X_n,Y_n), \ldots$ such that, under $P_\theta$, $X_1,\ldots,X_n,\ldots$ are i.i.d. with common law $P_X$ and independent of $(Y'_x)_{x\in\mathcal{X}}$ and, for every $i \ge 1$,
\[ Y_i = \sum_{j=1}^{K} m_j \mathbf{1}\{X_i \in \tau_j\} + Y'_{X_i}. \]
The pseudo-distance $d$ is characterized in two steps: for $\Theta_K \ni \theta = \{(\tau_j, m_j) : j \le K\}$ and $\Theta_{K'} \ni \theta' = \{(\tau'_{j'}, m'_{j'}) : j' \le K'\}$, $d(\theta,\theta') = d_1(\theta,\theta') + d_2(\theta,\theta')$, the first term (resp. second term) corresponding to the comparison of the partitions (resp. marks):
\[ d_1(\theta,\theta') = \max_{j'\le K'}\ \min_{\kappa\subset\{1,\ldots,K\}} P_X\Bigl(\bigl(\cup_{j\in\kappa}\tau_j\bigr) \triangle \tau'_{j'}\Bigr), \qquad d_2(\theta,\theta') = \max_{j'\le K'}\ \max_{j\in\kappa_{j'}} \|m_j - m'_{j'}\|_2, \]
where $A \triangle B$ is the symmetric difference between $A$ and $B$, and $\kappa_{j'}$ denotes the smallest subset of $\{1,\ldots,K\}$ achieving the minimum for each $j' \le K'$ in the definition of $d_1(\theta,\theta')$. This directly generalizes the natural pseudo-distance in the case $D = 1$.

The ad hoc contrast function is characterized here by the functions $\psi_1$, $\psi_2$ and $\xi$ such that $\psi_1(m) = m^2$, $\psi_2(m) = -2m$, $\xi(y) = y$.
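As a minimal sketch of what these choices of $\psi_1$, $\psi_2$ and $\xi$ amount to, the code below sums $\psi_1(m_k) + \psi_2(m_k)\,\xi(Y_i)$ over the observations falling in each cell of a given partition. For a fixed partition this sum is quadratic in each mark, hence minimized by the within-cell mean of the $Y_i$. The encoding of the partition as a list `cells` of cell labels (one per observation) and the function names are illustrative assumptions.

```python
from collections import defaultdict

def psi1(m):
    return m * m

def psi2(m):
    return -2.0 * m

def xi(y):
    return y

def empirical_contrast(cells, ys, marks):
    """Sum over observations of psi1(m_k) + psi2(m_k) * xi(Y_i),
    where k = cells[i] is the label of the cell containing X_i."""
    return sum(psi1(marks[k]) + psi2(marks[k]) * xi(y)
               for k, y in zip(cells, ys))

def best_marks(cells, ys):
    """Marks minimizing the contrast for a fixed partition:
    the mean of xi(Y_i) within each cell."""
    sums, counts = defaultdict(float), defaultdict(int)
    for k, y in zip(cells, ys):
        sums[k] += xi(y)
        counts[k] += 1
    return {k: sums[k] / counts[k] for k in sums}

# Toy data: two cells with clearly different levels
cells = [0, 0, 1, 1]
ys = [1.0, 3.0, 10.0, 12.0]
marks = best_marks(cells, ys)
value = empirical_contrast(cells, ys, marks)
```

With these functions the contrast equals, up to a term that does not depend on the marks, the residual sum of squares of a piecewise-constant fit, which is why the cell means are the minimizers.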

Estimation of the order of the random field by penalized minimum contrast.

The statistical estimation procedure implemented in [A1] pertains to M-estimation: for a penalty of the form $\mathrm{pen}(n,K) = K\omega(n)$ to be calibrated, the estimator of $(K^0, \{(\tau_j^0, \vartheta_j^0)\}_{j\le K^0})$ is defined by
\[ (K_n^w, \theta_n^w) = \arg\min_{K\le K_{\max},\ \theta\in\Theta_K} \left\{ \sum_{i=1}^{n}\sum_{k=1}^{K}\bigl(\psi_1(m_k) + \psi_2(m_k)^\top\xi(Y_i)\bigr)\mathbf{1}\{X_i\in\tau_k\} + \mathrm{pen}(n,K) \right\} \]
(implicitly above, the index $\theta$ is written $\theta = \{(\tau_k, m_k) : k \le K\}$).

The study of the consistency and rate of convergence of $\theta_n^w$ when $\Psi(P_0)$ is assumed known (cf. Theorems 5.2 and 5.4 in [A1]) makes it possible to determine how to calibrate $\omega(n)$ so as to extend the results to the case where $\Psi(P_0)$ is also estimated (cf. Theorem 6.1 in [A1]). One thus obtains the (weak) consistency of $(K_n^w, \theta_n^w)$ under a set of assumptions that are satisfied in the change-point example. More specifically, in the change-point example,
\[ \lim_n P_0\bigl(K_n^w = \Psi(P_0) \text{ and } d(\theta_n^w, \theta^0) \le \varepsilon\bigr) = 1 \]
for every $\varepsilon > 0$ as soon as $n^{h/2} = o(\omega(n))$ for a constant $h \in\, ]1,2[$ introduced in the assumptions which, interestingly, does not depend on the dependence structure of the underlying field. In view of the consistency results already presented in Section 1.1, the penalization (polynomial in $n$) is very strong.
We shall see in Sections 1.3.2 and 1.3.3 that it is also strong in view of the consistency results I obtained in a Markovian dependence setting. Perhaps this is the price to pay for imposing so few conditions on the form of dependence at play in the underlying field.

The proofs developed in [A1] rest essentially on a quantification of the concentration of $P_{n,X}$ (the empirical measure of the $X_i$) around $P_{0,X}$ (its true counterpart) on the one hand, and on the control of maximal fluctuations of the form $\sup_{G\in\mathcal{G}}\|\Sigma_n(G)\|_\infty$ for $\Sigma_n(B) = \sum_{i=1}^{n}(\xi(Y_i) - E(\xi(Y_i) \mid X_i))\mathbf{1}\{X_i\in B\}$ (all $B\in\mathcal{B}$) on the other hand. The quantification of the concentration of $P_{n,X}$ follows easily from classical arguments (symmetrization, Hoeffding's inequality) when one assumes that the partitions are made of elements belonging to a Vapnik-Červonenkis (VC) class of finite VC dimension (cf. Proposition 3.3 in [A1]). As for the control of the maximal fluctuations, the point is to guarantee that there exist $C_1 > 0$ and $h \in\, ]1,2[$ such that, for every $\varepsilon > 0$ and $B\in\mathcal{B}$,
\[ P\Bigl(\sup_{F\in\mathcal{F}}\|\Sigma_n(F\cap B)\|_\infty \ge \varepsilon \Bigm| X_1,\ldots,X_n\Bigr) \le \frac{C_1}{\varepsilon^2}\Bigl(\sum_{i=1}^{n}\mathbf{1}\{X_i\in B\}\Bigr)^{h} \tag{1.13} \]
$P_{0,X}$-almost surely. Is this condition reasonable? When the function $\xi$ is real-valued (which is the case in the change-point example; the norms $\|\cdot\|_\infty$ are then replaced by absolute values), (1.13) is satisfied as soon as there exist $C_2 > 0$ and $h \in [1,2)$ such that, for every $p > 2$ and $B\in\mathcal{B}$,
\[ E\bigl(|\Sigma_n(B)|^p \bigm| X_1,\ldots,X_n\bigr) \le C_2^{p}\,p^{p/2}\Bigl(\sum_{i=1}^{n}\mathbf{1}\{X_i\in B\}\Bigr)^{hp/2} \tag{1.14} \]
$P_{0,X}$-almost surely.
The inequality (1.14), of relaxed "Marcinkiewicz-Zygmund" type (because of the power $h$ in the upper bound), and the proof that it implies (1.13) (cf. Proposition 7.3 in [A1]), are largely inspired by the work carried out by Dedecker in [24]. Thanks to (1.14), one can exhibit concrete examples where (1.13) is satisfied. Let for instance $\mathcal{X} = \mathbb{Z}^D$ be a regular lattice and $(Z_x = \xi(Y_x) - E(\xi(Y_x)))_{x\in\mathcal{X}}$ be centered, bounded and strictly stationary: if $(Z_x)_{x\in\mathcal{X}}$ is $m$-dependent (roughly, if for every $x\in\mathcal{X}$, $Z_x$ is independent of any family made of finitely many $Z_{x'}$ at distance at least $m$ from $x$ on $\mathcal{X}$) then (1.13) is satisfied (cf. Proposition 7.5 in [A1], as well as Propositions 7.4 and 7.6 for other examples).

1.3.2 Estimation of the order of a hidden Markov chain by penalized maximum likelihood and code length

I presented in Section 1.1.2 only part of the results obtained in [A5] in collaboration with Aurélien Garivier and Elisabeth Gassiat, focusing on those relating to the estimation of the order of a location mixture from independent data. We also carried out (more or less in parallel) the study of the problem of estimating the order of a law when the latter is an HMM with Gaussian or Poisson emissions (cf. [14] for the reference monograph on hidden Markov models):

Example. Location mixture by HMM. Let us take up the objects $C$, $S_K$, $\varphi_m$ of the location mixture example (independent case) as presented in Section 1.1.1. Let $\pi_0 \in S_K$ be arbitrarily fixed.
For any $K \ge 1$, set $\Theta_K = C^K \times \prod_{k=1}^{K} S_K$ and $\mathcal{M}_K = \{P_\theta : \theta\in\Theta_K\}$: here $P_\theta$, for $\theta = (m_1,\ldots,m_K,\pi_1,\ldots,\pi_K) \in \Theta_K$, is the law of the sequence $(O_i)_{i\ge 1}$ with values in $\mathbb{R}$ (Gaussian case) or $\mathbb{N}$ (Poisson case) obtained by projection from the joint law $\mathbb{P}_\theta$ of the sequences $((O_i)_{i\ge 1}, (Z_i)_{i\ge 0})$ characterized by the following decomposition of the likelihood under $\mathbb{P}_\theta$ at time $n$:
\[ \mathbb{P}_\theta(Z_0, Z_1, O_1, \ldots, Z_n, O_n) = \pi_{0,Z_0} \times \prod_{i=0}^{n-1}\pi_{Z_i,Z_{i+1}} \times \prod_{i=1}^{n}\varphi_{m_{Z_i}}(O_i) \]
(thus: $(Z_i)_{i\ge 0}$ is a Markov chain whose initial law and transitions are parametrized by $\pi_0,\pi_1,\ldots,\pi_K$; $(O_1,\ldots,O_n)$ are conditionally independent given $(Z_0,\ldots,Z_n)$; given $(Z_0,\ldots,Z_n)$, $O_i$ has conditional density $\varphi_{m_{Z_i}}$).

The example presented above does belong to the category of mixture models insofar as the marginal law of each observation $O_i$ is a mixture as described in the location mixture example of Section 1.1.1.
It differs from the independent mixture model in that the state of the mixture at a given time $i$ (namely $Z_i$) depends on the sequence of previous states (namely $Z_0,\ldots,Z_{i-1}$, in the sense of a Markov chain).

Let us therefore place ourselves in the setting of the HMM location mixture example and take up the notation of Section 1.1.2: $\mathcal{K} = \mathbb{N}^*$, $\delta_K$ is the density of the Dirichlet law with parameter $(\frac12,\ldots,\frac12)$, $\gamma_\tau$ is the density of the Gamma law with parameter $(\tau, \frac12)$.

We are interested in the consistency of the maximum likelihood estimator $K_n^{\mathrm{ML}}$ of the order $\Psi(P_0)$ of the law $P_0$, built from the observation of $(O_1,\ldots,O_n) \sim P_0 \in \mathcal{M} = \cup_{K\in\mathcal{K}}\mathcal{M}_K$. The estimator $K_n^{\mathrm{ML}}$ is still written as in (1.1), with a penalty term to be calibrated and a log-likelihood $l_n(\theta)$ under $\theta = (m_1,\ldots,m_K,\pi_1,\ldots,\pi_K) \in \Theta_K$ which satisfies
\[ l_n(\theta) = \log \sum_{z_0,\ldots,z_n\in\{1,\ldots,K\}} \pi_{0,z_0} \times \prod_{i=0}^{n-1}\pi_{z_i,z_{i+1}} \times \prod_{i=1}^{n}\varphi_{m_{z_i}}(O_i). \]
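The sum over hidden paths in $l_n(\theta)$ has $K^{n+1}$ terms, so in practice it is evaluated recursively. The sketch below compares the brute-force sum with the standard forward recursion, under two assumptions of ours: the transition product runs over the $n$ transitions from $Z_0$ to $Z_n$, and, for the Poisson case, $\varphi_m$ is the Poisson probability mass function with mean $m$. Function names, states indexed from 0, and the numerical values are illustrative only.

```python
import math
from itertools import product

def poisson_pmf(m, o):
    """Assumed emission density phi_m: Poisson with mean m."""
    return math.exp(-m) * m ** o / math.factorial(o)

def log_lik_brute(obs, means, pi0, trans):
    """l_n(theta) as the explicit sum over all paths (z_0, ..., z_n)."""
    K, n = len(means), len(obs)
    total = 0.0
    for z in product(range(K), repeat=n + 1):
        p = pi0[z[0]]
        for i in range(n):
            # transition z_i -> z_{i+1}, then emission of O_{i+1} from z_{i+1}
            p *= trans[z[i]][z[i + 1]] * poisson_pmf(means[z[i + 1]], obs[i])
        total += p
    return math.log(total)

def log_lik_forward(obs, means, pi0, trans):
    """Same quantity via the forward recursion, in O(n K^2) operations."""
    K = len(means)
    alpha = list(pi0)  # law of Z_0
    loglik = 0.0
    for o in obs:
        alpha = [sum(alpha[j] * trans[j][k] for j in range(K))
                 * poisson_pmf(means[k], o) for k in range(K)]
        scale = sum(alpha)
        loglik += math.log(scale)            # accumulate log of normalizers
        alpha = [a / scale for a in alpha]   # renormalize to avoid underflow
    return loglik

obs = [0, 3, 1, 4]
means = [1.0, 3.5]
pi0 = [0.5, 0.5]
trans = [[0.9, 0.1], [0.2, 0.8]]
brute = log_lik_brute(obs, means, pi0, trans)
forward = log_lik_forward(obs, means, pi0, trans)
```

The two values agree up to rounding; only the forward version remains usable once $n$ grows beyond a handful of observations.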

The principle underlying [A5] is, as already seen and discussed, the introduction for every $K \ge 1$ of a code length statistic associated with a prior $\nu_K$ on $\Theta_K$:
\[ \mathrm{lc}_n(K) = -\log\int_{\Theta_K} e^{l_n(\theta)}\,d\nu_K(\theta) \tag{1.15} \]
(the definition is very similar to (1.4)), and its comparison with the maximum log-likelihood statistic. Specifically, for every $K \ge 1$ let $\nu_K$ be the law on $\Theta_K$ characterized by $d\nu_K(m_1,\ldots,m_K,\pi_1,\ldots,\pi_K) = \prod_{k=1}^{K}\phi(m_k/\tau)\,dm_k \times \prod_{k=1}^{K}\delta_K(\pi_k)\,d\pi_k$ (in the Gaussian case) or $d\nu_K(m_1,\ldots,m_K,\pi_1,\ldots,\pi_K) = \prod_{k=1}^{K}\gamma_\tau(m_k)\,dm_k \times \prod_{k=1}^{K}\delta_K(\pi_k)\,d\pi_k$ (in the Poisson case). This choice (a pragmatic one, since it allows the exact computation of $\mathrm{lc}_n(K)$) induces a bracketing of the difference between the code length and maximum log-likelihood statistics that takes exactly the form (1.5) (cf. Theorem 1 in [A5]; the deterministic term $r_{Kn}$ does not have the same form as in the independent case of the location mixture model).
The code length statistic can also be used to estimate the order $\Psi(P_0)$ of the law $P_0$: we thus introduce the estimator $K_n^{\mathrm{lc}}$ as in (1.6), for a penalty term to be calibrated.

Finally, we prove that even if no a priori bound on $\Psi(P_0)$ is known, asymptotically $K_n^{\mathrm{ML}} = K_n^{\mathrm{lc}} = \Psi(P_0)$ $P_0$-almost surely as soon as
\[ \mathrm{pen}(n,K) = \frac12\sum_{k=1}^{K}\bigl(\alpha + \dim(\Theta_k)\bigr)\log n + S_{Kn} + s_{Kn} \]
for any $\alpha > 2$, the terms $S_{Kn}$ and $s_{Kn}$ being dedicated to the respective controls of $R_n$ in (1.5) ($S_{Kn}$ is thus $O(\log n)$ in the Gaussian case and $O(\log n/(\log\log n)^{1/2})$ in the Poisson case) and of $r_{Kn}$ (cf. Theorems 5 and 6 in [A5]).

A simulation study (which we do not summarize here; cf. Section 4 of [A5]) illustrates the body of theoretical results obtained in [A5].

Remark. On the over-estimation rate (HMM example). In the course of the proof one obtains the following control of the over-estimation rate associated with $K_n = K_n^{\mathrm{ML}}$ or $K_n = K_n^{\mathrm{lc}}$: there exist two constants $c_1, c_2 > 0$ such that, for $n$ large enough,
\[ \frac{1}{\log n}\log P_0(K_n > \Psi(P_0)) \le -\min\Bigl(\frac{\alpha}{2}, c_1\Bigr) + \frac{c_2}{\log n} \tag{1.16} \]
with $c_1 = \frac32$ in the Gaussian case and $c_1 = 2$ in the Poisson case ($c_2$ also varies with the case), where $\alpha$ is the parameter appearing in the definition of the penalty.
These inequalities are comparable to (1.10) and (1.12).

1.3.3 Estimation of the order of a Markov chain with Markov regime by penalized maximum likelihood and code length

I also worked, with Catherine Matias [A4], on the question of estimating the order of a Markov chain with Markov regime. The study of this example was motivated by applied considerations.

Biological motivations.

Biological sequences (e.g. DNA or protein sequences) are now produced routinely (thanks to the progress and widespread availability of sequencing techniques) and exploited in a variety of biological analyses. Hidden Markov models have played a prominent role in modeling and analyzing the composition and evolution of these sequences, viewed as sequences of random variables with values in a finite alphabet (the alphabet of the four nucleotides adenine, thymine, cytosine and guanine for DNA sequences; the alphabet of the twenty-two proteinogenic amino acids for protein sequences). Thus, hmm modeling of heterogeneous sequences (see the location mixture example of Section 1.3.2, with emissions drawn from a law on the alphabet of interest rather than on $\mathbb{R}$ or $\mathbb{N}$) has been fairly widely used. In this framework, the hidden states are interpreted as regimes (e.g., for DNA sequencing, coding region or non-coding region). Since the sojourn time in a given regime follows a geometric law, such a modeling is all the more appropriate when the sequence is known to exhibit a succession of homogeneous zones.
One should keep in mind, however, that the variables of the observed sequence are then i.i.d. conditionally on the regimes: this constraint imposed by hmm modeling is deemed too restrictive in some cases (e.g., for DNA sequencing, it is well known that the coding regions of DNA are organized in codons, i.e. successions of three nucleotides; in that case, a simple solution could consist in replacing the alphabet of the four nucleotides by that of all nucleotide triplets).

For this reason in particular, the community has turned to Markov chains with Markov regime (mcmr). Also known as Markov-switching autoregressive models, they were originally introduced in econometrics [37]. In this class of models (defined below), the hidden states still form a Markov chain, but conditionally on them the observations form a second Markov chain (whose transitions depend on the regime). Thus, Nicolas et al. [62] study the heterogeneity of the single chromosome of Bacillus subtilis by means of a mcmr chosen according to essentially biological criteria, in order to detect atypical segments of approximate length 25kb (1kb corresponds to 1,000 nucleotides) along the chromosome, whose total length is 4,200kb. [A4] arose from the wish to develop a statistical model selection argument to back up these biological criteria of model choice.

Notion of order.

Let us first define a mcmr rigorously (the notation $x_i^j$ stands for any sequence $x_i,\dots,x_j$; $\mathcal{S}_K$ still denotes the $K$-simplex):

Example. Markov chain with Markov regime (mcmr). Let $\mathcal{A}$ be an alphabet of finite (and known) cardinality $r$. For any $(K,M)\in\mathbb{N}^*\times\mathbb{N}$, set $\Theta_{K,M} = \prod_{k=1}^{K} \mathcal{S}_K \times \prod_{k=1}^{K r^M} \mathcal{S}_r$ and $\mathcal{M}_{K,M} = \{P_\theta : \theta\in\Theta_{K,M}\}$: here, $P_\theta$ for $\theta = (\pi_1,\dots,\pi_K,(\pi_{z o_1^M})_{z\le K,\, o_1^M\in\mathcal{A}^M})$ is the law of the sequence $(O_i)_{i\ge 1}$ with values in $\mathcal{A}$ obtained by projection of the joint law $\mathbb{P}_\theta$ of the sequences $((O_i)_{i\ge 1}, (Z_i)_{i\ge 1})$, which is characterized by the following decomposition of the likelihood under $\mathbb{P}_\theta$ at time $n$ (the usual notational conventions apply if $n\le M$ or $M = 0$):
\[
\mathbb{P}_\theta(Z_1,O_1,\dots,Z_n,O_n) = \mu_\theta(Z_1,O_1^M) \times \prod_{i=1}^{n-1} \pi_{Z_i,Z_{i+1}} \times \prod_{i=M+1}^{n} \pi_{Z_i O_{i-M}^{i-1},\,O_i}
\]

where $\mu_\theta$ is the initial law on $\{1,\dots,K\}\times\mathcal{A}^M$ which makes $\mathbb{P}_\theta$ a stationary law (thus: $(Z_i)_{i\ge 1}$ is a Markov chain (of memory one) on $\{1,\dots,K\}$ whose transitions are parametrized by $\pi_1,\dots,\pi_K$; conditionally on $(Z_i)_{i\ge 1}$, $(O_i)_{i\ge 1}$ is a Markov chain on $\mathcal{A}$ of memory $M$, the conditional law of $O_j$ given $((Z_i)_{i\ge 1}, O_1^{j-1})$ being characterized for all $j>M$ by $\pi_{Z_j O_{j-M}^{j-1}}$; both chains are initialized so that $\mathbb{P}_\theta$ is stationary).

Note that the log-likelihood $l_n(\theta)$ under $\theta = (\pi_1,\dots,\pi_K,(\pi_{z o_1^M})_{z\le K,\, o_1^M\in\mathcal{A}^M}) \in \Theta_{K,M}$ reads
\[
l_n(\theta) = \log \sum_{z_1,\dots,z_n\in\{1,\dots,K\}} \mu_\theta(z_1,O_1^M) \times \prod_{i=1}^{n-1} \pi_{z_i,z_{i+1}} \times \prod_{i=M+1}^{n} \pi_{z_i O_{i-M}^{i-1},\,O_i} .
\]

Unlike the other examples introduced so far, the example of Markov chains with Markov regime does not come with a natural nested family of models (which justifies a posteriori the use of the expression "possibly nested" in the introduction of this chapter): admittedly $\mathcal{M}_{K,M}\subset\mathcal{M}_{K,M+1}$ and $\mathcal{M}_{K,M}\subset\mathcal{M}_{K+1,M}$ for all $(K,M)\in\mathbb{N}^*\times\mathbb{N}$, but no order relation on $\mathbb{N}^*\times\mathbb{N}$ makes the family $\{\mathcal{M}_{K,M} : (K,M)\in\mathbb{N}^*\times\mathbb{N}\}$ a nested family. Moreover, given $P_0\in\mathcal{M} = \cup_{(K,M)\in\mathbb{N}^*\times\mathbb{N}}\mathcal{M}_{K,M}$, there is in general no unique pair $(K_0,M_0)$ such that $P_0\in\mathcal{M}_{K_0,M_0}$ and $P_0$ belongs to none of the $\mathcal{M}_{K,M}\subsetneq\mathcal{M}_{K_0,M_0}$ (one says that $P_0$ "admits several minimal representations"). The very definition of a notion of order for the law $P_0$ is thereby jeopardized...
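The sum defining $l_n(\theta)$ runs over $K^n$ hidden paths, but it can be evaluated in $O(nK^2)$ operations by a forward recursion. The block below is a minimal sketch of that standard technique, not the implementation used in [A4]. All names (`dim_theta`, `context_index`, `mcmr_loglik`) are hypothetical, states and letters are coded from 0, and the vector `mu_z`, standing for $z \mapsto \mu_\theta(z, O_1^M)$, is assumed to be supplied by the caller: computing the stationary initial law is not attempted. The parameter count in `dim_theta` assumes that $\mathcal{S}_K$ is the set of probability vectors of length $K$, hence has $K-1$ free coordinates.

```python
import numpy as np


def dim_theta(K, M, r):
    """Free parameters of Theta_{K,M}: K rows in S_K plus K * r**M rows in S_r."""
    return K * (K - 1) + K * r**M * (r - 1)


def context_index(obs, i, M, r):
    """Base-r index of the context obs[i-M], ..., obs[i-1] (0 when M == 0)."""
    idx = 0
    for o in obs[i - M:i]:
        idx = idx * r + int(o)
    return idx


def mcmr_loglik(obs, Q, E, mu_z, M):
    """Scaled forward recursion for the log-likelihood l_n(theta).

    obs  : letters coded 0..r-1 (length n)
    Q    : (K, K) hidden transition matrix, row k playing the role of pi_k
    E    : (K, r**M, r) array, E[z, c, o] = P(O_i = o | Z_i = z, context c)
    mu_z : (K,) vector z -> mu_theta(z, O_1^M), assumed given by the caller
    M    : memory of the observed chain
    """
    obs = np.asarray(obs)
    Q, E = np.asarray(Q, float), np.asarray(E, float)
    r = E.shape[2]
    alpha = np.asarray(mu_z, float).copy()
    loglik = 0.0
    for i in range(len(obs)):          # 0-based index i is time i + 1
        if i > 0:
            alpha = alpha @ Q          # hidden transition Z_i -> Z_{i+1}
        if i >= M:                     # emission terms start at time M + 1
            alpha = alpha * E[:, context_index(obs, i, M, r), obs[i]]
        scale = alpha.sum()            # rescale to avoid numerical underflow
        loglik += np.log(scale)
        alpha = alpha / scale
    return loglik


# Illustration on arbitrary parameters (r = 4 letters, as for DNA).
rng = np.random.default_rng(0)
K, M, r = 2, 1, 4
Q = rng.dirichlet(np.ones(K), size=K)
E = rng.dirichlet(np.ones(r), size=(K, r**M))
mu_z = np.full(K, 1.0 / (K * r**M))    # placeholder, NOT the stationary law
obs = rng.integers(0, r, size=200)
print(dim_theta(K, M, r), mcmr_loglik(obs, Q, E, mu_z, M))
```

Rescaling `alpha` at each step and accumulating the logarithms of the scaling factors keeps the recursion numerically stable on long sequences.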
The problem of identifying the order of an ARMA($p,q$) process may at first sight seem to suffer from the same lack of a well-defined order, since its structural parameter $(p,q)$ is also bivariate. However, every ARMA($p,q$) process admits a unique minimal representation ARMA($p_0,q_0$), so that the pair $(p_0,q_0)$ naturally plays the role of order of this law (consistency properties of a whole range of procedures for estimating this order have already been obtained [39, 65, 21]).

We propose to circumvent the problem in the spirit of the mdl principle (already mentioned in Section 1.1.2): if $P_0\in\mathcal{M}$ admits several minimal representations, then the order of the law $P_0$ is defined as the index of the minimal representation associated with the model of smallest dimension. Specifically, we define for every $P_0\in\mathcal{M}$:
\[
\Psi(P_0) = \min\{(K,M)\in(\mathbb{N}^*\times\mathbb{N},\preceq) : P_0\in\mathcal{M}_{K,M}\},
\]
the order relation $\preceq$ being such that $(K_1,M_1)\prec(K_2,M_2)$ iff $\dim(\Theta_{K_1,M_1})$

which is of penalized code length type for the code length
\[
\mathrm{lc}_n(K,M) = -\log \int_{\Theta_{K,M}} e^{l_n(\theta)}\, d\nu_{K,M}(\theta)
\]
associated with the prior laws $\nu_{K,M}$ on $\Theta_{K,M}$ (for all $(K,M)\in\mathbb{N}^*\times\mathbb{N}$) such that
\[
d\nu_{K,M}\bigl(\pi_1,\dots,\pi_K,(\pi_{z o_1^M})_{z\le K,\, o_1^M\in\mathcal{A}^M}\bigr) = \prod_{k=1}^{K} \delta_K(\pi_k)\, d\pi_k \times \prod_{z\le K,\, o_1^M\in\mathcal{A}^M} \delta_r(\pi_{z o_1^M})\, d\pi_{z o_1^M}
\]
(recall that $\delta_K$ is the density of the Dirichlet law with parameter $(\tfrac12,\dots,\tfrac12)$ on the $K$-simplex).

This choice is of interest because it again allows one to compare the maximum log-likelihood and code length statistics, through a set of inequalities of the form
\[
0 \le \mathrm{lc}_n(K,M) - \Bigl(-\sup_{\theta\in\Theta_{K,M}} l_n(\theta)\Bigr) \le \frac{1}{2}\dim(\Theta_{K,M}) \log n + r_{KMn}
\]
for some remainder term $r_{KMn}$ (see Lemma 3.4 in [A4] and (1.5)).

Finally, we prove that, even when no a priori bound on $\Psi(P_0)$ is known, asymptotically $K_n^{\mathrm{ML}} = K_n^{\mathrm{lc}} = \Psi(P_0)$ $P_0$-almost surely as soon as
\[
\mathrm{pen}(n,K,M) = \frac{1}{2} \sum_{(k,m)\preceq(K,M)} \bigl(\alpha f(k,m) + \dim(\Theta_{k,m})\bigr) \log n + s_{KMn}
\]
for any $\alpha>2$, the function $f : (\mathbb{N}^*\times\mathbb{N},\preceq)\to\mathbb{N}$ being only required to be strictly increasing, and the term $s_{KMn}$ being devoted to the control of the remainder terms $r_{KMn}$ appearing above (see Theorem 3.1 in [A4]).

Remark. On the overestimation rate (mcmr example).
The proof yields the following control of the overestimation rate associated with $K_n = K_n^{\mathrm{ML}}$ or $K_n = K_n^{\mathrm{lc}}$: there exists a constant $c>0$ such that, for $n$ large enough,
\[
\frac{1}{\log n} \log P_0\bigl(K_n > \Psi(P_0)\bigr) \le -\frac{\alpha}{2} + \frac{c}{\log n} .
\]
This inequality is comparable to (1.10), (1.12) and (1.16).

Simulation study.

A simulation study (whose design is inspired by [62]) illustrates the results just presented; only a brief summary is given here (see Section 4 of [A4] for details). The various maxima of the log-likelihood are approximated by means of the EM algorithm. The study suggests that the asymptotic regime of $K_n^{\mathrm{ML}}$ is reached for sequence lengths of at least 50,000. Substituting a genuine bic-type penalty (rather than the cumulated bic-type penalty chosen above) seems to guarantee that the asymptotic regime is reached for chain lengths of at least 25,000 (the results are, however, very poor for a chain length of 15,000).

To conclude, Nicolas et al. [62] choose (on the basis of essentially biological considerations) a mcmr of order $(K,M) = (3,2)$; we obtain $K_n^{\mathrm{ML}} = (3,0)$ (a mcmr of order $(3,0)$ is in fact a hmm); substituting a genuine bic-type penalty leads to an estimated order equal to $(2,1)$: it is hard to form an opinion!
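To put the three competing orders in perspective, one can count the free parameters of each model from the definition of $\Theta_{K,M}$. The sketch below assumes that $\mathcal{S}_K$ is the set of probability vectors of length $K$ (so $K-1$ free coordinates), takes $r = 4$ for the DNA alphabet, and uses the plain bic-type penalty $\tfrac12\dim(\Theta_{K,M})\log n$. The sequence length `n` is set to the chromosome length quoted above purely for illustration; the function names are hypothetical and nothing here reproduces the computations of [A4].

```python
import math


def dim_theta(K, M, r):
    """Free parameters of Theta_{K,M}: K rows in S_K plus K * r**M rows in S_r."""
    return K * (K - 1) + K * r**M * (r - 1)


def bic_penalty(K, M, r, n):
    """Plain (non-cumulated) bic-type penalty, on the log-likelihood scale."""
    return 0.5 * dim_theta(K, M, r) * math.log(n)


r = 4            # nucleotides A, T, C, G
n = 4_200_000    # assumed sequence length (4,200kb), for illustration only
for K, M in [(3, 2), (3, 0), (2, 1)]:
    print((K, M), dim_theta(K, M, r), round(bic_penalty(K, M, r, n), 1))
# dimensions: (3, 2) -> 150, (3, 0) -> 15, (2, 1) -> 26
```

Under this count, the order (3, 2) retained on biological grounds involves 150 free parameters, against 15 and 26 for the two statistically selected orders, which gives a sense of how differently the criteria weigh model complexity.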