An Introduction to Genetic Algorithms - Boente

Chapter 2: Genetic Algorithms in Problem Solving

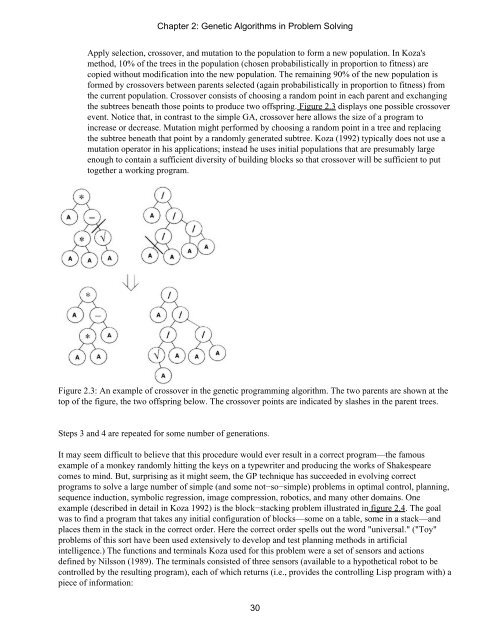

Apply selection, crossover, and mutation to the population to form a new population. In Koza's method, 10% of the trees in the population (chosen probabilistically in proportion to fitness) are copied without modification into the new population. The remaining 90% of the new population is formed by crossovers between parents selected (again probabilistically in proportion to fitness) from the current population. Crossover consists of choosing a random point in each parent and exchanging the subtrees beneath those points to produce two offspring. Figure 2.3 displays one possible crossover event. Notice that, in contrast to the simple GA, crossover here allows the size of a program to increase or decrease. Mutation might be performed by choosing a random point in a tree and replacing the subtree beneath that point with a randomly generated subtree. Koza (1992) typically does not use a mutation operator in his applications; instead he uses initial populations that are presumably large enough to contain a sufficient diversity of building blocks so that crossover will be sufficient to put together a working program.
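The subtree crossover and mutation operators described above can be sketched in a few lines of Python. This is only an illustrative sketch, not Koza's implementation: it assumes a program tree is stored as a nested list `[function, arg1, arg2, ...]` with strings as terminals, and it assumes that "a random point" means a node chosen uniformly at random. All function names here (`subtree_paths`, `replace_subtree`, `crossover`, `random_tree`, `mutate`) are hypothetical.

```python
import copy
import random

def subtree_paths(tree, path=()):
    """Return the path (tuple of child indices) to every node in the tree."""
    paths = [path]
    if isinstance(tree, list):
        # tree[0] is the function name; its arguments start at index 1.
        for i, child in enumerate(tree[1:], start=1):
            paths.extend(subtree_paths(child, path + (i,)))
    return paths

def get_subtree(tree, path):
    """Follow a path of child indices down to the node it names."""
    for i in path:
        tree = tree[i]
    return tree

def replace_subtree(tree, path, new_subtree):
    """Return a copy of tree with the node at path replaced by new_subtree."""
    if not path:  # the chosen point is the root itself
        return copy.deepcopy(new_subtree)
    result = copy.deepcopy(tree)
    node = result
    for i in path[:-1]:
        node = node[i]
    node[path[-1]] = copy.deepcopy(new_subtree)
    return result

def crossover(parent_a, parent_b, rng=random):
    """Pick one point in each parent and exchange the subtrees beneath them."""
    path_a = rng.choice(subtree_paths(parent_a))
    path_b = rng.choice(subtree_paths(parent_b))
    sub_a = get_subtree(parent_a, path_a)
    sub_b = get_subtree(parent_b, path_b)
    return (replace_subtree(parent_a, path_a, sub_b),
            replace_subtree(parent_b, path_b, sub_a))

def random_tree(functions, terminals, max_depth, rng=random):
    """Grow a random tree; `functions` maps a function name to its arity.
    The 0.3 chance of stopping early at a terminal is an arbitrary choice."""
    if max_depth == 0 or rng.random() < 0.3:
        return rng.choice(terminals)
    name = rng.choice(sorted(functions))
    return [name] + [random_tree(functions, terminals, max_depth - 1, rng)
                     for _ in range(functions[name])]

def mutate(tree, functions, terminals, max_depth=3, rng=random):
    """Replace the subtree beneath a random point by a random subtree."""
    path = rng.choice(subtree_paths(tree))
    return replace_subtree(
        tree, path, random_tree(functions, terminals, max_depth, rng))
```

Because the two exchanged subtrees may differ in size, each offspring can be larger or smaller than its parent, while the total number of nodes across the two offspring equals the total across the two parents. The parents themselves are left unmodified.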

Figure 2.3: An example of crossover in the genetic programming algorithm. The two parents are shown at the top of the figure, the two offspring below. The crossover points are indicated by slashes in the parent trees.

Steps 3 and 4 are repeated for some number of generations.<br />
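The generational loop can be sketched as follows. This is a minimal sketch under stated assumptions, not Koza's code: it assumes that the repeated steps are fitness evaluation followed by the formation of a new population, that fitness values are non-negative with larger meaning better, and that at least one individual has nonzero fitness. The names `next_generation` and `evolve`, and the `crossover` and `fitness` callables passed in, are hypothetical; `crossover` is expected to return two new offspring without modifying its arguments.

```python
import random

def next_generation(population, fitnesses, crossover,
                    copy_fraction=0.10, rng=random):
    """Form a new population of the same size as the current one.

    A fraction (10% by default) is copied unchanged; the rest is produced
    by crossover. Both the copied individuals and the parents are drawn
    with probability proportional to fitness, with replacement.
    """
    size = len(population)
    n_copied = round(copy_fraction * size)
    # Copied individuals are the same objects as in the old population,
    # which is safe as long as later operators never modify them in place.
    new_population = rng.choices(population, weights=fitnesses, k=n_copied)
    while len(new_population) < size:
        parent_a, parent_b = rng.choices(population, weights=fitnesses, k=2)
        new_population.extend(crossover(parent_a, parent_b))
    # Each crossover adds two offspring, so trim a possible extra one.
    return new_population[:size]

def evolve(population, fitness, crossover, generations, rng=random):
    """Repeat fitness evaluation and population replacement."""
    for _ in range(generations):
        fitnesses = [fitness(program) for program in population]
        population = next_generation(population, fitnesses, crossover, rng=rng)
    return population
```

No mutation step is included here, in line with Koza's typical practice noted earlier; a mutation operator could be applied to the offspring inside the `while` loop if desired.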

It may seem difficult to believe that this procedure would ever result in a correct program; the famous example of a monkey randomly hitting the keys on a typewriter and producing the works of Shakespeare comes to mind. But, surprising as it might seem, the GP technique has succeeded in evolving correct programs to solve a large number of simple (and some not-so-simple) problems in optimal control, planning, sequence induction, symbolic regression, image compression, robotics, and many other domains. One example (described in detail in Koza 1992) is the block-stacking problem illustrated in figure 2.4. The goal was to find a program that takes any initial configuration of blocks (some on a table, some in a stack) and places them in the stack in the correct order. Here the correct order spells out the word "universal." ("Toy" problems of this sort have been used extensively to develop and test planning methods in artificial intelligence.) The functions and terminals Koza used for this problem were a set of sensors and actions defined by Nilsson (1989). The terminals consisted of three sensors (available to a hypothetical robot to be controlled by the resulting program), each of which returns (i.e., provides the controlling Lisp program with) a piece of information:
