
Advances in Adaptive Data Analysis
Vol. 1, No. 4 (2009) 623–641
© World Scientific Publishing Company

IRIS RECOGNITION BASED ON HILBERT–HUANG TRANSFORM

ZHIJING YANG∗, ZHIHUA YANG† and LIHUA YANG∗,‡

∗School of Mathematics and Computing Science
Sun Yat-sen University, Guangzhou 510275, China

†Information Science School
GuangDong University of Business Studies
Guangzhou 510320, China

‡mcsylh@mail.sysu.edu.cn

As a reliable approach to human identification, iris recognition has received increasing attention in recent years. This paper proposes a new analysis method for iris recognition based on the Hilbert–Huang transform (HHT). We first divide a normalized iris image into several subregions. Then the main frequency center information of each subregion, obtained with the HHT, is used to form the feature vector. The proposed method has desirable properties such as translation invariance, scale invariance, rotation invariance, illumination invariance and robustness to high-frequency noise. Moreover, experimental results on the CASIA iris database, the largest publicly available iris image data set, show that the performance of the proposed method is encouraging and comparable to that of the best iris recognition algorithms in the current literature.

Keywords: Iris recognition; empirical mode decomposition (EMD); Hilbert–Huang transform (HHT); main frequency center.

1. Introduction

With increasing demand for automated personal identification, biometric authentication has received extensive attention over the last decade. Biometrics uses physiological or behavioral characteristics, such as fingerprints, face, iris, retina and palmprints, to identify each individual accurately. 13,29 Among these biometric techniques, iris recognition has proven to be one of the most accurate means of personal identification. 4,6,14,22,23

The human iris, a thin circular diaphragm lying between the cornea and the lens, has an intricate structure and provides many minute characteristics such as furrows, freckles, crypts and coronas. 2 These visible characteristics, generally called the texture of the iris, are unique to each subject. 6,7,14,24 The iris patterns of the two eyes of an individual, or of identical twins, are completely independent and uncorrelated. Additionally, the iris is highly stable over a person's lifetime and lends itself to noninvasive identification, since it is an externally visible internal organ. All these desirable properties make iris recognition suitable for highly reliable personal identification.

Over the last decade, many researchers have worked on iris recognition and made great progress. According to their feature extraction schemes, existing iris recognition methods can be roughly divided into four major categories: phase-based methods, 6,7,23 zero-crossing representation-based methods, 4,22 texture analysis-based methods 14,24 and intensity variation analysis methods. 15,16

A phase-based method is essentially a process of phase demodulation. Daugman 6,7 used multiscale Gabor filters to demodulate the phase structure of the iris texture, and then quantized the filter outputs into a 2048-bit iriscode describing the iris. Tisse et al. 23 encoded the instantaneous phase and emergent frequency of the analytic image (two-dimensional Hilbert transform) as iris features.

The zero-crossings of the wavelet transform provide meaningful information about image structure. Boles and Boashash 4 calculated the zero-crossing representation of the one-dimensional wavelet transform, at various resolution levels, of a virtual circle on an iris image to characterize the iris texture.

Among texture analysis methods, Wildes et al. 24 represented the iris texture with a Laplacian pyramid constructed at four different resolution levels, and Tan et al. 14 proposed a well-known method that captures both global and local details of an iris with Gabor filters at different scales and orientations. As an intensity variation analysis method, Tan et al. constructed a set of one-dimensional intensity signals that retain the most important local variations of the original two-dimensional iris image, and then used the Gaussian–Hermite moments of these signals as distinguishing features. 16

Fourier and wavelet descriptors have been used as powerful tools for feature extraction, which is a crucial processing step in pattern recognition. However, the main drawback of these methods is that their basis functions are fixed and do not necessarily match the varying nature of signals. The Hilbert–Huang transform (HHT), developed by Huang et al., is a newer analysis method for nonlinear and nonstationary data. 11 Through empirical mode decomposition (EMD), it adaptively decomposes any complicated data set into a finite number of intrinsic mode functions (IMFs), which become the bases representing the data. Through the Hilbert transform, the IMFs yield instantaneous frequencies as functions of time. The final result is a time–frequency–energy distribution, designated the Hilbert spectrum, which gives sharp identification of salient information. The HHT therefore offers not only high decomposition efficiency but also sharp localization in both frequency and time. Recently, the HHT has received growing attention in terms of both interpretation 9,18,21 and applications, which have spread to ocean science, 10 biomedicine, 12,20 speech signal processing, 25 image processing, 3 pattern recognition 19,26,28 and other fields. EMD has also been used for iris recognition as a low-pass filter in Ref. 5.

Since a random iris pattern can be seen as a texture, many well-developed texture<br />

analysis methods have been adapted to recognize the iris. 14,24 An iris consists<br />

of some basic elements which are similar to each other and interlaced each other,

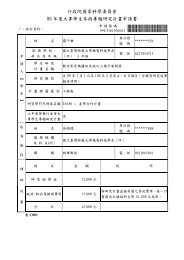

Iris image preprocessing<br />

Iris image Localization<br />

Normalization<br />

Iris Recognition Based on Hilbert–Huang Transform 625<br />

Feature<br />

extraction Classification<br />

HHT LDA<br />

Fig. 1. Diagram of the proposed method.<br />

Output<br />

i.e. an iris image is generally periodical to some extent. Therefore the approximate<br />

period is an effective feature for the iris recognition. By employing the main frequency<br />

center presented in our previous works 26,27 of the Hilbert marginal spectrum<br />

as an approximation for the period of an iris image, a new iris recognition method<br />

based on HHT is proposed in this paper. Unlike directly using the residue of the<br />

EMD decomposed iris image for recognition in Ref. 5, the proposed method utilizes<br />

the main frequency center information as the feature vector which is particularly<br />

rotation invariant. In comparison with the existing iris recognition methods, the<br />

proposed algorithm has an excellent percentage of correct classification, and possesses<br />

very nice properties, such as translation invariance, scale invariance, rotation<br />

invariance, illumination invariance and robustness to high frequency noise. Figure 1<br />

illustrates the main steps of our method.<br />

The remainder of this paper is organized as follows. Brief descriptions of image<br />

preprocessing are provided in Sec. 2. A new feature extraction method and matching<br />

are given in Sec. 3. Experimental results and discussions are reported in Sec. 4.<br />

Finally, conclusions of this paper are summarized in Sec. 5.<br />

2. Iris Image Preprocessing

An iris image contains not only the iris but also irrelevant parts (e.g. eyelids and pupil). A change in the camera-to-eye distance may also result in variations in the size of the same iris. Therefore, before feature extraction, an iris image must be preprocessed to localize and normalize the iris. Since a full description of the preprocessing method is beyond the scope of this paper, it is introduced only briefly as follows.

The iris is the annular region between the pupil (inner boundary) and the sclera (outer boundary). Both boundaries of a typical iris can be approximated by circles, and the localization step detects these two boundaries. Since the localization method proposed in Ref. 14 is very effective, we adopt it here; its main steps are as follows. Since the pupil is generally darker than its surroundings and its boundary is a distinct edge feature, the boundary can be found by edge detection (the Canny operator in our experiments). A Hough transform is then used to find the center and radius of the pupil. Finally, the outer boundary is detected by edge detection and a Hough transform again, within a region determined by the center of the pupil. A localized image is shown in Fig. 2(b).

Irises from different people may be captured at different sizes and, even for the same eye, the iris size may change owing to illumination variations and other factors. It is necessary to compensate for this iris deformation to achieve more accurate recognition results. Here, we unwrap the iris ring counterclockwise to a rectangular block of fixed size (64 × 512 in our experiments). 6,14 That is, the original iris in a Cartesian coordinate system is projected into a doubly dimensionless pseudopolar coordinate system. The normalization not only reduces, to a certain extent, the distortion caused by pupil movement but also simplifies subsequent processing. A normalized image is shown in Fig. 2(c).

Fig. 2. Iris image preprocessing: (a) original image; (b) localized image; (c) normalized image.
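The unwrapping step can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the implementation used in the experiments: the pupil and iris boundaries are assumed to be already-detected circles given as hypothetical `(cx, cy, r)` tuples, each normalized row interpolates linearly between the two boundaries (Daugman's rubber-sheet model), and pixels are sampled by nearest neighbour. The function name `unwrap_iris` is illustrative.

```python
import numpy as np


def unwrap_iris(image, pupil, iris, height=64, width=512):
    """Rubber-sheet unwrapping of the iris ring into a height x width block.

    pupil, iris: hypothetical (cx, cy, r) circles from the localization step,
    in pixel coordinates (x = column, y = row).
    Row 0 lies on the pupil boundary; columns sweep counterclockwise.
    """
    img = np.asarray(image, dtype=float)
    theta = 2 * np.pi * np.arange(width) / width      # angular samples
    rho = np.arange(height) / height                  # normalized radius in [0, 1)

    # Boundary points for every angle. Image rows grow downward, so
    # subtracting sin(theta) sweeps counterclockwise as seen on screen.
    px = pupil[0] + pupil[2] * np.cos(theta)
    py = pupil[1] - pupil[2] * np.sin(theta)
    ix = iris[0] + iris[2] * np.cos(theta)
    iy = iris[1] - iris[2] * np.sin(theta)

    # Linear interpolation between inner and outer boundary (pseudopolar grid).
    xs = (1 - rho)[:, None] * px + rho[:, None] * ix
    ys = (1 - rho)[:, None] * py + rho[:, None] * iy

    # Nearest-neighbour sampling, clipped to the image bounds.
    cols = np.clip(np.rint(xs).astype(int), 0, img.shape[1] - 1)
    rows = np.clip(np.rint(ys).astype(int), 0, img.shape[0] - 1)
    return img[rows, cols]
```

With concentric circles centred in a square image, rotating the image by 90° clockwise (`np.rot90(img, k=-1)`) should give a normalized block that agrees almost everywhere with the original one circularly shifted by 512/4 = 128 columns to the left (`np.roll(..., -128, axis=1)`), which is the property used in Sec. 3.3.1.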

3. Feature Extraction and Matching

3.1. The Hilbert–Huang Transform

The Hilbert–Huang transform (HHT), proposed by Huang et al., 11 is an important method for signal processing. It consists of two parts: empirical mode decomposition (EMD) and the Hilbert spectrum. With EMD, any complicated data set can be decomposed into a finite and often small number of intrinsic mode functions (IMFs). An IMF is defined as a function satisfying the following two conditions: (1) it has exactly one zero-crossing between any two consecutive local extrema; (2) it has zero local mean.

By the EMD algorithm, any signal $x(t)$ can be decomposed into a finite number of IMFs $c_j(t)$ $(j = 1, 2, \ldots, n)$ and a residue $r(t)$, where $n$ is the number of IMFs, i.e.

$$x(t) = \sum_{j=1}^{n} c_j(t) + r(t). \tag{1}$$
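A minimal EMD sketch in Python with NumPy makes Eq. (1) concrete. It is not the authors' implementation: to stay dependency-free it draws the upper and lower envelopes by linear interpolation (Huang et al. use cubic splines), ignores end effects, and stops sifting with an SD-type criterion whose threshold `sd_tol`, like the caps `max_iter` and `max_imfs`, is an illustrative assumption. All helper names are hypothetical.

```python
import numpy as np


def local_extrema(h):
    """Indices of strict interior local maxima and minima."""
    d = np.diff(h)
    left = np.concatenate(([0.0], d))    # h[i] - h[i-1]
    right = np.concatenate((d, [0.0]))   # h[i+1] - h[i]
    maxima = np.where((left > 0) & (right < 0))[0]
    minima = np.where((left < 0) & (right > 0))[0]
    return maxima, minima


def envelope(t, h, idx):
    """Envelope through the extrema. Simplification: piecewise-linear
    interpolation (np.interp holds end values) instead of cubic splines."""
    return np.interp(t, idx, h[idx])


def sift(x, max_iter=50, sd_tol=0.2):
    """Extract one IMF by repeatedly subtracting the envelope mean."""
    t = np.arange(len(x))
    h = x.copy()
    for _ in range(max_iter):
        maxima, minima = local_extrema(h)
        if len(maxima) < 2 or len(minima) < 2:
            break
        mean = 0.5 * (envelope(t, h, maxima) + envelope(t, h, minima))
        h_new = h - mean
        # SD-type stopping rule: relative size of the last correction.
        sd = np.sum((h - h_new) ** 2) / (np.sum(h ** 2) + 1e-12)
        h = h_new
        if sd < sd_tol:
            break
    return h


def emd(x, max_imfs=10):
    """Decompose x into IMFs c_1, ..., c_n and a residue r, as in Eq. (1)."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        maxima, minima = local_extrema(residue)
        if len(maxima) + len(minima) < 3:   # residue is (nearly) monotone
            break
        imf = sift(residue)
        imfs.append(imf)
        residue = residue - imf
    return imfs, residue
```

Whatever stopping choices are made, the residue is what remains after subtracting each IMF, so Eq. (1) holds exactly: `sum(imfs) + r` reproduces `x` up to rounding.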

Having obtained the IMFs by EMD, we can apply the Hilbert transform to each IMF $c_j(t)$ to produce its analytic signal $z_j(t) = c_j(t) + iH[c_j(t)] = a_j(t)e^{i\theta_j(t)}$, whose instantaneous frequency is $f_j(t) = \frac{1}{2\pi}\frac{d\theta_j(t)}{dt}$. Therefore, $x(t)$ can also be expressed as

$$x(t) = \mathrm{Re}\sum_{j=1}^{n} a_j(t)e^{i\theta_j(t)} + r(t). \tag{2}$$

Equation (2) enables us to represent the amplitude and the instantaneous frequency as functions of time in a three-dimensional plot, in which the amplitude is contoured on the time–frequency plane. This time–frequency distribution of amplitude is designated the Hilbert spectrum, denoted by $H(f,t)$, which gives a time–frequency–amplitude distribution of the signal $x(t)$. The HHT provides sharp localization in both the frequency and time domains, so it is very effective for analyzing nonlinear and nonstationary data.
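For a sampled IMF, the analytic signal, amplitude $a_j(t)$ and instantaneous frequency $f_j(t)$ can be sketched with NumPy's FFT. This is a minimal sketch assuming unit sample spacing and the usual discrete FFT-based Hilbert transform (which treats the signal as periodic); frequencies come out in cycles per sample, so they lie in [0, 0.5] for well-behaved IMFs. Function names are hypothetical.

```python
import numpy as np


def analytic_signal(c):
    """z = c + iH[c] via the FFT: keep DC (and Nyquist), double the positive
    frequencies and zero the negative ones."""
    c = np.asarray(c, dtype=float)
    n = len(c)
    gain = np.zeros(n)
    gain[0] = 1.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
        gain[1:n // 2] = 2.0
    else:
        gain[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(np.fft.fft(c) * gain)


def amplitude_and_frequency(c):
    """a(t) = |z(t)| and f(t) = (1/2π) dθ/dt, in cycles per sample."""
    z = analytic_signal(c)
    a = np.abs(z)
    theta = np.unwrap(np.angle(z))
    f = np.diff(theta) / (2 * np.pi)   # forward difference, length n - 1
    return a, f
```

For a pure tone with period 16, e.g. `np.cos(2 * np.pi * np.arange(512) / 16)`, the amplitude is 1 and the instantaneous frequency is 1/16 = 0.0625 at every sample.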

With the Hilbert spectrum defined, the Hilbert marginal spectrum can be defined as

$$h(f) = \int_0^T H(f,t)\,dt, \tag{3}$$

where $T$ is the length of the signal. The Hilbert marginal spectrum offers a measure of the total amplitude (or energy) contribution from each frequency component.
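Equation (3) has a simple discrete counterpart: bin the instantaneous frequencies of all IMFs and add up the corresponding amplitudes. The sketch below assumes unit sample spacing, an illustrative grid of `n_bins` equal-width bins on [0, 0.5] cycles per sample, and the FFT-based analytic signal; the bin count and function names are hypothetical choices, not taken from the paper.

```python
import numpy as np


def analytic_signal(c):
    """FFT-based analytic signal c + iH[c]."""
    n = len(c)
    gain = np.zeros(n)
    gain[0] = 1.0
    gain[1:(n + 1) // 2] = 2.0
    if n % 2 == 0:
        gain[n // 2] = 1.0
    return np.fft.ifft(np.fft.fft(np.asarray(c, dtype=float)) * gain)


def marginal_spectrum(imfs, n_bins=100, f_max=0.5):
    """Discrete version of Eq. (3): add the amplitude a_j(t) to the frequency
    bin containing f_j(t), summing over all IMFs and all time samples."""
    edges = np.linspace(0.0, f_max, n_bins + 1)
    h = np.zeros(n_bins)
    for c in imfs:
        z = analytic_signal(c)
        a = np.abs(z)[1:]                                    # align with diff
        f = np.diff(np.unwrap(np.angle(z))) / (2 * np.pi)
        h += np.histogram(f, bins=edges, weights=a)[0]       # out-of-range f dropped
    centers = 0.5 * (edges[:-1] + edges[1:])
    return centers, h
```

For a single unit-amplitude tone of period 16 sampled 512 times, all 511 amplitude samples land in the bin centred on 0.0625, so the spectrum peaks there and sums to 511.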

3.2. Main frequency and main frequency center

The Hilbert marginal spectrum $h(f)$ has some properties that can be used to extract features for iris recognition. Specifically, the main frequency center of the Hilbert marginal spectrum can serve as a feature to distinguish different irises. The concepts of “main frequency” and “main frequency center” were proposed, described and discussed in our previous works, 26,27 where we showed that the main frequency center characterizes the approximate period very well. Here, we only review the definitions of the main frequency, the main frequency center and related concepts.

Definition 1 (Main frequency). Let $x(t)$ be an arbitrary time series and $h(f)$ its Hilbert marginal spectrum. Then $f_m$ is called the main frequency of $x(t)$ if $h(f_m) \ge h(f)$ for all $f$.

Definition 2 (Average Hilbert marginal spectrum of a signal series). Let $X = \{x_j(t) \mid j = 1, 2, \ldots, N\}$, where each $x_j(t)$ is a time series, and let $h_j(f)$ be the Hilbert marginal spectrum of $x_j(t)$. The average Hilbert marginal spectrum of $X$ is defined as

$$\bar{H}(f) = \frac{1}{N}\sum_{j=1}^{N} h_j(f). \tag{4}$$

$f_m^{\bar{H}}$ is called the average main frequency of $X$ if $\bar{H}(f_m^{\bar{H}}) \ge \bar{H}(f)$ for all $f$.
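Definitions 1 and 2 reduce to an argmax and an average once every spectrum is sampled on a common frequency grid. A minimal sketch, assuming the spectra are stored as NumPy arrays on a shared grid `freqs` (a hypothetical name); the tie-breaking behaviour of `np.argmax` is worth noting because the maximum need not be unique.

```python
import numpy as np


def average_marginal_spectrum(spectra):
    """Eq. (4): mean of the marginal spectra h_j(f) of N signals,
    all sampled on the same frequency grid (one row per signal)."""
    return np.mean(np.asarray(spectra, dtype=float), axis=0)


def main_frequency(freqs, spectrum):
    """Definition 1 (or the average main frequency when given the average
    spectrum): the frequency where the spectrum is largest.
    np.argmax returns the first maximizer if several bins tie."""
    return freqs[np.argmax(spectrum)]


freqs = np.array([0.02, 0.04, 0.06])
spectra = [[1.0, 5.0, 2.0],
           [2.0, 3.0, 4.0]]
H_bar = average_marginal_spectrum(spectra)
```

In this toy example the second signal alone peaks at 0.06, while the average spectrum `[1.5, 4.0, 3.0]` peaks at 0.04, so the average main frequency need not coincide with the main frequency of every individual signal.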

For a set of signals that are approximately periodic, the main frequency characterizes the approximate period very well. Unfortunately, in some cases a signal may not have a unique main frequency. To handle this situation, all the possible main frequencies have to be considered. Therefore, we use their center of gravity, called the “main frequency center”, instead of the “main frequency”.

Definition 3 (Main frequency center). Let $\bar{H}(f_i)$ $(i = 1, 2, \ldots, W)$ be the average Hilbert marginal spectrum of $X = \{x_j(t) \mid j = 1, 2, \ldots, N\}$, with the frequencies indexed so that $\bar{H}(f_i)$ is monotonically decreasing with respect to $i$. The main frequency center of $X$ is defined as

$$f_C(X) = \frac{\sum_{i=1}^{M} f_i\,\bar{H}(f_i)}{\sum_{i=1}^{M} \bar{H}(f_i)}, \tag{5}$$

where $M$ is the minimum integer satisfying $\sum_{i=1}^{M} \bar{H}(f_i) \ge P \sum_{i=1}^{W} \bar{H}(f_i)$, and $0 < P < 1$ is a given constant. Then $e = \frac{1}{M}\sum_{i=1}^{M} \bar{H}(f_i)$ is called the energy of $f_C(X)$.

As its definition shows, the main frequency center is the weighted mean of the several frequencies with the largest Hilbert marginal spectrum values. There is a gap between the average main frequency $f_m^{\bar{H}}$ and the main frequency center $f_C$, but the main frequency center is a steadier recognition feature. Therefore, $f_C$ is used instead of $f_m^{\bar{H}}$ to characterize the approximate period of a signal series.
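Equation (5) and the energy $e$ translate directly into a sort, a cumulative sum and a weighted mean. A minimal sketch in NumPy; the constant $P$ is not specified in this section, so `P=0.5` is purely an illustrative value, and the function name is hypothetical.

```python
import numpy as np


def main_frequency_center(freqs, H_bar, P=0.5):
    """Eq. (5): energy-weighted mean of the strongest frequencies.

    Frequencies are sorted so that H_bar decreases; M is the smallest count
    whose cumulative spectrum reaches the fraction P of the total.
    Returns (f_C, e), where e is the mean spectrum value of those M bins.
    P=0.5 is an illustrative assumption, not a value from the paper.
    """
    freqs = np.asarray(freqs, dtype=float)
    H_bar = np.asarray(H_bar, dtype=float)
    order = np.argsort(H_bar)[::-1]                 # decreasing spectrum
    f_sorted, H_sorted = freqs[order], H_bar[order]
    cumulative = np.cumsum(H_sorted)
    # First index whose cumulative sum reaches P * total, converted to a count.
    M = int(np.searchsorted(cumulative, P * cumulative[-1])) + 1
    f_C = np.sum(f_sorted[:M] * H_sorted[:M]) / np.sum(H_sorted[:M])
    e = np.mean(H_sorted[:M])
    return f_C, e


freqs = np.array([0.01, 0.02, 0.03, 0.04])
H_bar = np.array([1.0, 4.0, 3.0, 2.0])
f_C, e = main_frequency_center(freqs, H_bar, P=0.5)
```

Here the two strongest bins (0.02 and 0.03) already hold 7 of the 10 units of spectrum, so M = 2, f_C = 0.17/7 ≈ 0.0243 and e = 3.5.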

3.3. Iris feature extraction

Although all normalized iris templates have the same size, eyelashes and eyelids may still appear on the templates and degrade recognition performance. We find that the upper portion of a normalized iris image (corresponding to regions closer to the pupil) provides the most useful information for recognition (see Fig. 3). Therefore, a region of interest (ROI) is selected to remove the influence of eyelashes and eyelids: in our experiments, features are extracted only from the upper 75% of the image (48 × 512), which is closer to the pupil (see Fig. 3). An iris has a particularly interesting structure and provides abundant texture information. Furthermore, if we vertically divide the ROI of a normalized image into three subregions as shown in Fig. 3, the texture information within each subregion becomes more distinct.
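Assuming the 64 × 512 normalized image described in Sec. 2, with row 0 at the pupil boundary, the ROI selection and the split into three equal bands can be sketched as below. Equal band heights (16 rows each, matching the 16 signals per direction used later) are our reading of Fig. 3, not a stated specification; the function name is hypothetical.

```python
import numpy as np


def roi_subregions(normalized, roi_fraction=0.75, n_sub=3):
    """Keep the upper part of the normalized image (closest to the pupil)
    and split it into n_sub horizontal bands I1, I2, I3 from top to bottom."""
    normalized = np.asarray(normalized)
    roi_rows = int(round(roi_fraction * normalized.shape[0]))  # 48 of 64 rows
    return np.split(normalized[:roi_rows], n_sub, axis=0)


I1, I2, I3 = roi_subregions(np.zeros((64, 512)))
```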

Fig. 3. The normalized iris image is vertically divided into four subregions. Subregions 1, 2 and 3 form the ROI.

[Fig. 4 plots: left, the line signal x (intensity about 120 to 210) against position 0 to 500; right, its EMD components c1 to c5 and the residue r.]

Fig. 4. Left, the 11th line signal of the normalized iris image in Fig. 3 along the horizontal direction. Right, the EMD decomposition result of the 11th line signal.

An iris consists of basic elements that are similar to and interlaced with each other. Hence, an iris image is generally periodic to some extent along certain directions; that is, some approximate periods are embedded in the iris image. As an example, consider the 11th line signal of the normalized iris image in Fig. 3 along the horizontal direction, shown in the left of Fig. 4. Most of the waves have similar durations, i.e. some main frequencies are embedded in the signal. EMD is known to extract low-frequency oscillations very well. 11 The EMD decomposition of the 11th line signal is shown in the right of Fig. 4, where two main approximate periods are extracted in the third and fourth IMFs. To show this clearly, we plot the original signal (solid line) and the third IMF (dashed line) together over the interval [320, 450] in the left of Fig. 5. This IMF characterizes the approximate period of the waveform quite well, and the period is about 15 samples (i.e. the frequency is about 1/15 ≈ 0.067). Similarly, we plot the original signal (solid line) and the fourth IMF (dashed line) together over [320, 450] in the right of Fig. 5. This IMF characterizes both the approximate period and the amplitude variation; its period is about 26 samples (i.e. the frequency is about 1/26 ≈ 0.0385).

According to Eq. (3), we can compute the Hilbert marginal spectrum of the 11th line signal of the normalized iris image in Fig. 3, as shown in the left of Fig. 6. Both main frequencies are clearly recovered from the Hilbert marginal spectrum. Based on extensive experiments and analysis, we found that the main frequency information of signals along the same direction in the same subregion of an iris is similar. For example, the Hilbert marginal spectrum of the 12th line signal of the normalized iris image in Fig. 3, shown in the middle of Fig. 6, is very similar to that of the 11th line signal. We then compute the average Hilbert marginal spectrum of the signal series along the horizontal direction in the first subregion of Fig. 3, shown in the right of Fig. 6. It not only agrees with the Hilbert marginal spectrum of each line but is also more concentrated. Therefore, the main frequency center $f_C$ given by Eq. (5), computed from the average Hilbert marginal spectrum of the line signal series in each subregion, serves as a reliable feature of the iris.

Fig. 5. Left, the original signal (solid line) and the third IMF (dashed line) in the interval [320, 450]. Right, the original signal (solid line) and the fourth IMF (dashed line) in [320, 450].

[Fig. 6 plots: frequency content against frequency (0 to 0.45); marked peaks at 0.038 and 0.068 (left) and at 0.036 and 0.069 (middle); the main frequency center f_C ≈ 0.051 is marked in the right panel.]

Fig. 6. Left, the Hilbert marginal spectrum of the 11th line signal of the normalized iris in Fig. 3. Middle, the Hilbert marginal spectrum of the 12th line signal. Right, the average Hilbert marginal spectrum and the main frequency center $f_C$ of the line signal series in the first subregion of the normalized iris in Fig. 3.

Since orientation information is a very important pattern in an iris, 14,24 the main frequency center along the horizontal direction alone is not sufficient to characterize its texture. To capture orientation information, features along other directions must also be considered. We first describe how the signal series along angle α is generated in each subregion of an iris. As shown in Fig. 7, the rectangular frame denotes one subregion of an iris. First, choose one point in the first column and connect all the dotted lines; this yields one signal along angle α in the subregion. Similarly, we can generate the whole signal series (16 signals in total) along angle α in the subregion. In our experiments, we choose 18 directions in total: 0°, 10°, ..., 170°.

Fig. 7. The generation of the signal along angle α of each subregion: choose one point of the first column and connect all the dotted lines.
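Fig. 7 is the only description of how the directional signals are formed, so the sketch below encodes one possible reading of it and should be treated as a hypothesis: starting from each of the 16 rows in the first column, it steps one pixel at a time along angle α (measured counterclockwise from the horizontal), wraps around the top/bottom and left/right edges of the subregion, and samples by nearest neighbour. The function name, the wrap-around rule and the signal length (one subregion width) are our assumptions.

```python
import numpy as np


def directional_signals(sub, alpha_deg, length=None):
    """One signal per starting row of the first column, traced along angle
    alpha with wrap-around at the subregion borders (hypothetical reading
    of Fig. 7). Returns an array of shape (rows, length)."""
    sub = np.asarray(sub)
    rows, cols = sub.shape
    length = cols if length is None else length
    alpha = np.deg2rad(alpha_deg)
    k = np.arange(length)                       # unit steps along the line
    signals = np.empty((rows, length), dtype=float)
    for r0 in range(rows):
        # Image rows grow downward, so moving "up" at angle alpha subtracts.
        r = np.rint(r0 - k * np.sin(alpha)).astype(int) % rows
        c = np.rint(k * np.cos(alpha)).astype(int) % cols
        signals[r0] = sub[r, c]
    return signals


ORIENTATIONS = np.arange(0, 180, 10)            # 0°, 10°, ..., 170°
```

At α = 0° the signals reduce to the 16 rows of the subregion, i.e. the horizontal case analysed above.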

We find that the main frequency centers given by Eq. (5) yield good classification performance when used as features for iris recognition, because they cluster samples from the same iris class. As an example, Fig. 8 shows three samples of the same iris and their main frequency centers in 18 directions in $I_1$. The features cluster very well. Furthermore, there are usually gaps between the features of samples from different iris classes. This implies that the selected features indeed have good classification ability for iris images.

The energy $e$ of the main frequency center is also a good feature for classification, since it reflects the image contrast of different iris classes: the higher the contrast, the larger the energy. In other words, a signal oscillating with a larger amplitude has a larger energy, so a larger energy in the marginal spectrum can be expected for an iris with higher contrast. Figure 9 shows three iris classes (a), (b) and (c): class (a) has the highest contrast and class (c) the lowest. Correspondingly, as shown in Fig. 9(d), class (a) generally has the largest energy of the main frequency center along the same orientation, and class (c) the smallest. This encouraging result implies that the energies of the main frequency centers should also be used as features to classify irises.

Having chosen the main frequency center and its energy as features, we now present the feature extraction algorithm.

Algorithm 1 (Feature extraction algorithm). Given a normalized iris image $I(i, j)$, let $I_1$, $I_2$ and $I_3$ be its three subregions, from top to bottom, as divided in Fig. 3. Let $D = \{d_i \mid d_i = 10(i - 1),\ i = 1, 2, \ldots, 18\}$ be the set of feature orientations (in degrees).

[Fig. 8(d) plot: main frequency center (about 0.03 to 0.07) against orientation (0° to 170°).]

Fig. 8. (a)–(c) Three samples of the same iris; (d) their main frequency centers in 18 orientations in $I_1$.

Step 1: Calculate the main frequency centers and energies of the 18 orientations of $I_1$ to form the main frequency vector $f_1$, by the following six steps.
(1) Let $i = 1$.
(2) Generate 16 signals along orientation $d_i$ as described in Fig. 7 to form the signal set, denoted by $X$.
(3) Calculate the average Hilbert marginal spectrum of $X$.
(4) Calculate the main frequency center $f_C(I_1(d_i))$ of $X$ and its corresponding energy $e(I_1(d_i))$.
(5) Let $i = i + 1$. If $i \le 18$, go back to (2); otherwise go to (6).
(6) Obtain the main frequency vector

$$f_1 = (f_C(I_1(0)), \ldots, f_C(I_1(170)), e(I_1(0)), \ldots, e(I_1(170))).$$

[Fig. 9(d) plot: energy (about 200 to 600) against orientation (0° to 170°) for the classes (a), (b) and (c).]

Fig. 9. (a)–(c) Samples from three different iris classes; (d) the energies of the main frequency center in 18 orientations in $I_1$, with three samples per class.

Step 2: Similarly, calculate the main frequency centers and energies of the 18 orientations of $I_2$ to form the main frequency vector

$$f_2 = (f_C(I_2(0)), \ldots, f_C(I_2(170)), e(I_2(0)), \ldots, e(I_2(170))).$$

Step 3: Similarly, calculate the main frequency centers and energies of the 18 orientations of $I_3$ to form the main frequency vector

$$f_3 = (f_C(I_3(0)), \ldots, f_C(I_3(170)), e(I_3(0)), \ldots, e(I_3(170))).$$

Step 4: Finally, the feature vector of the iris is defined as

$$F = (f_1, f_2, f_3).$$

The feature vector contains 108 components (3 subregions × 18 orientations × 2 values).
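Putting the pieces together, Algorithm 1 can be sketched as a short driver. This is a minimal sketch under several assumptions: the ROI is the top 48 rows split into three 16-row bands, the directional signals follow our hypothetical reading of Fig. 7, `P = 0.5` is illustrative, and the per-signal marginal spectrum is passed in as a function `spectrum_fn` so that any HHT implementation can be plugged in. The `fft_spectrum` helper is only a stand-in for checking that the output has 3 × 18 × 2 = 108 components; it is not part of the proposed method.

```python
import numpy as np

ORIENTATIONS = np.arange(0, 180, 10)           # D = {0, 10, ..., 170} degrees


def directional_signals(sub, alpha_deg):
    """Hypothetical reading of Fig. 7: wrap-around lines at angle alpha."""
    rows, cols = sub.shape
    a, k = np.deg2rad(alpha_deg), np.arange(cols)
    out = np.empty((rows, cols))
    for r0 in range(rows):
        r = np.rint(r0 - k * np.sin(a)).astype(int) % rows
        c = np.rint(k * np.cos(a)).astype(int) % cols
        out[r0] = sub[r, c]
    return out


def main_frequency_center(freqs, H_bar, P=0.5):
    """Eq. (5) and the energy e; P=0.5 is an illustrative assumption."""
    order = np.argsort(H_bar)[::-1]
    f_s, H_s = freqs[order], H_bar[order]
    M = int(np.searchsorted(np.cumsum(H_s), P * H_s.sum())) + 1
    return np.sum(f_s[:M] * H_s[:M]) / np.sum(H_s[:M]), np.mean(H_s[:M])


def extract_features(normalized, spectrum_fn, P=0.5):
    """Algorithm 1: F = (f1, f2, f3), each f_k = (f_C over 18 angles,
    e over 18 angles). spectrum_fn(signal) -> (freqs, h) stands in for the
    HHT marginal spectrum of one signal."""
    bands = np.split(np.asarray(normalized, dtype=float)[:48], 3, axis=0)
    features = []
    for sub in bands:                                        # I1, I2, I3
        centers, energies = [], []
        for alpha in ORIENTATIONS:
            X = directional_signals(sub, alpha)              # 16 signals
            results = [spectrum_fn(x) for x in X]
            freqs = results[0][0]
            H_bar = np.mean([h for _, h in results], axis=0)     # Eq. (4)
            f_C, e = main_frequency_center(freqs, H_bar, P)      # Eq. (5)
            centers.append(f_C)
            energies.append(e)
        features.extend(centers + energies)
    return np.array(features)


def fft_spectrum(x):
    """Placeholder spectrum for shape checks only (NOT the HHT)."""
    return np.fft.rfftfreq(len(x)), np.abs(np.fft.rfft(x - np.mean(x)))
```

Swapping `fft_spectrum` for an HHT-based marginal spectrum (with the first IMF and the residue left out, as described in Sec. 3.3.2) would give the feature vector described in Algorithm 1, under the assumptions listed above.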


The proposed iris feature vector has desirable properties. We now discuss its invariance and its robustness to noise.

3.3.1. Invariance

It is desirable to obtain an iris representation that is invariant to translation, scale, rotation and illumination. In our method, translation invariance and approximate scale invariance are achieved by normalizing the original image in the preprocessing step.

Rotation invariance is important for an iris representation, since changes of head orientation and binocular vergence may cause eye rotation. Most existing schemes achieve approximate rotation invariance either by rotating the feature vector before matching 4,6,7,15,23 or by defining several templates corresponding to other rotation angles for each iris class in the database. 5,14 In our algorithm, the annular iris is unwrapped into a rectangular image, so a rotation of the original image corresponds to a circular translation of the normalized image. For example, a clockwise rotation of 90° in the original image corresponds to a circular translation of 128 pixels (a quarter of the 512 columns) toward the left in the normalized image, as shown in Figs. 10(a)–10(d). Fortunately, translation invariance is easily achieved in our method, because a translation of the original signal results in almost the same translation of all IMFs and the residue, 11 as shown in Figs. 10(e) and 10(f). Furthermore, if $\tilde{g}(t) = g(t - t_0)$, then substituting $u = t' - t_0$ gives

$$H[\tilde{g}](t) = \frac{1}{\pi}\,\mathrm{p.v.}\int_{-\infty}^{\infty} \frac{g(t' - t_0)}{t - t'}\,dt' = \frac{1}{\pi}\,\mathrm{p.v.}\int_{-\infty}^{\infty} \frac{g(u)}{(t - t_0) - u}\,du = H[g](t - t_0).$$

Therefore, a translation of an IMF results in the same translation of its Hilbert transform, and hence of its instantaneous frequency. Consequently, the Hilbert marginal spectra of the original signal and the translated signal are the same, and so are the main frequency center and its energy computed from the average Hilbert marginal spectrum of the signal series. This property can also be seen intuitively: since our features are computed on subregions that span the full width of the normalized image, a circular horizontal translation of the normalized image does not change the information in each subregion, so the features remain the same.
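The shift property derived above also holds exactly for the discrete, FFT-based Hilbert transform when translations are circular, which is the relevant case for the unwrapped iris because its columns wrap around. A minimal check, assuming unit sample spacing; the function name is hypothetical.

```python
import numpy as np


def hilbert_transform(g):
    """Discrete (circular) Hilbert transform: multiply the spectrum by
    -i*sign(frequency). DC is zeroed, and for even lengths the Nyquist
    term becomes purely imaginary and is dropped by taking the real part."""
    G = np.fft.fft(np.asarray(g, dtype=float))
    return np.real(np.fft.ifft(-1j * np.sign(np.fft.fftfreq(len(g))) * G))
```

Rolling a signal by any number of samples and then transforming gives the same result as transforming first and rolling afterwards, and the transform maps cos to sin, as expected of a Hilbert transform.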

Fig. 10. (a) Original image; (b) the image rotated 90° clockwise; (c) the normalized image of (a); (d) the normalized image of (b); (e) the EMD decomposition of the 10th horizontal line of the normalized image (c); (f) the EMD decomposition of the 10th horizontal line of the normalized image (d). Panels (e) and (f) plot the line signal x, the IMFs c1–c6 and the residue r against the sample index (0–500).

The illumination of iris images can vary with the position of the light sources, so invariance to illumination is also an important property of a feature. To investigate the effect of illumination, consider two images of the same iris captured under different illuminations, shown in Figs. 11(a) and 11(b). Their EMD decompositions in Figs. 11(c) and 11(d) show that the two differ mainly in their residues. The residue in Fig. 11(c) is about 190, whereas that in Fig. 11(d) is about 130. The EMD residue is removed before the Hilbert marginal spectrum is computed. This illumination variation, which is carried by the residue, therefore does not influence the main frequency center or its energy. Figures 11(e) and 11(f) show the images obtained by removing the residues of all lines from Figs. 11(a) and 11(b), respectively. Removing the residues compensates for the nonuniform illumination and also improves the image contrast. Therefore, unlike most other iris recognition methods, our method does not need an image enhancement step during iris image preprocessing.
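The residue argument can be checked numerically. The sketch below uses a minimal EMD that is an illustrative stand-in, not the authors' implementation. It builds cubic-spline envelopes with SciPy, runs a fixed number of sifting iterations, and handles the ends crudely by pinning both envelopes to the first and last samples. `envelope_mean` and `emd` are hypothetical names. The sketch also assumes that an illumination change acts as a constant additive offset on a line of the normalized image. Multiplicative gain changes are not covered by this check. The offset leaves the extrema positions unchanged, and spline interpolation reproduces constants, so each envelope mean shifts by exactly the offset. Every IMF is therefore unchanged, and the offset ends up entirely in the residue, up to rounding.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import argrelextrema


def envelope_mean(h):
    """Mean of the upper and lower cubic-spline envelopes, or None if h has too few extrema."""
    n = len(h)
    t = np.arange(n)
    maxima = argrelextrema(h, np.greater)[0]
    minima = argrelextrema(h, np.less)[0]
    if len(maxima) < 2 or len(minima) < 2:
        return None
    # Crude boundary handling (an assumption): pin both envelopes to the end samples.
    max_idx = np.concatenate(([0], maxima, [n - 1]))
    min_idx = np.concatenate(([0], minima, [n - 1]))
    upper = CubicSpline(max_idx, h[max_idx])(t)
    lower = CubicSpline(min_idx, h[min_idx])(t)
    return 0.5 * (upper + lower)


def emd(x, max_imfs=6, n_sift=10):
    """Return (imfs, residue) such that imfs.sum(axis=0) + residue reconstructs x."""
    residue = np.asarray(x, dtype=float).copy()
    imfs = []
    for _ in range(max_imfs):
        if envelope_mean(residue) is None:
            break  # residue is (close to) monotonic: stop decomposing
        h = residue.copy()
        for _ in range(n_sift):  # fixed sifting count instead of a stopping criterion
            m = envelope_mean(h)
            if m is None:
                break
            h = h - m
        imfs.append(h)
        residue = residue - h
    return np.array(imfs), residue


# Synthetic "image line": two oscillations on a slowly varying background.
n = np.arange(512)
line = 120 + 20 * np.sin(2 * np.pi * n / 40) + 8 * np.sin(2 * np.pi * n / 9) + 0.05 * n
imfs_bright, res_bright = emd(line + 60.0)  # brighter illumination (additive offset)
imfs_dark, res_dark = emd(line)             # darker illumination
print(np.allclose(imfs_bright, imfs_dark))          # IMFs unchanged by the offset
print(np.allclose(res_bright - res_dark, 60.0))     # the offset lands in the residue
```

The Hilbert marginal spectrum is built from the IMFs only, so any feature computed from it inherits this invariance to additive brightness changes.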

3.3.2. Robustness to noise

Refs. 3, 11 and 26 state that high-frequency noise is mainly contained in the first IMF. The first IMF has also been found not to be a significant component for characterizing the iris structure. To make the method robust to high-frequency noise, we therefore remove the first IMF when computing the Hilbert marginal spectrum. This slightly changes the main frequency but suppresses high-frequency noise.
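The effect of the first IMF can be made concrete with a short sketch. It computes a Hilbert marginal spectrum from a set of IMFs with `scipy.signal.hilbert` and can optionally skip the first IMF. The sketch assumes the main frequency center is the amplitude-weighted mean instantaneous frequency, that is, the centroid of the marginal spectrum. It measures frequencies in cycles per sample. Both choices are illustrative assumptions, and the helper names are hypothetical. Pure tones stand in for IMFs so that the example runs without an EMD implementation.

```python
import numpy as np
from scipy.signal import hilbert


def instantaneous_amp_freq(imf):
    """Instantaneous amplitude and frequency (cycles/sample) of one IMF via the analytic signal."""
    z = hilbert(imf)
    amp = np.abs(z)
    phase = np.unwrap(np.angle(z))
    freq = np.diff(phase) / (2 * np.pi)
    return amp[1:], freq  # align amplitude with the forward-difference frequency


def marginal_spectrum(imfs, n_bins=64, skip_first=True):
    """Hilbert marginal spectrum: instantaneous amplitude accumulated over time per frequency bin."""
    selected = imfs[1:] if skip_first else imfs
    amps, freqs = zip(*(instantaneous_amp_freq(c) for c in selected))
    amps, freqs = np.concatenate(amps), np.concatenate(freqs)
    h, edges = np.histogram(freqs, bins=n_bins, range=(0.0, 0.5), weights=amps)
    return h, edges, amps, freqs


def main_frequency_center(amps, freqs):
    """Assumed definition: centroid of the marginal spectrum (amplitude-weighted mean frequency)."""
    return np.sum(amps * freqs) / np.sum(amps)


n = np.arange(500)
noise_like = np.cos(2 * np.pi * 0.4 * n)  # stands in for the first (high-frequency) IMF
texture = np.cos(2 * np.pi * 0.05 * n)    # stands in for a texture-bearing IMF
imfs = np.vstack([noise_like, texture])

_, _, a1, f1 = marginal_spectrum(imfs, skip_first=True)
_, _, a2, f2 = marginal_spectrum(imfs, skip_first=False)
print(main_frequency_center(a1, f1))  # about 0.05: only the texture IMF contributes
print(main_frequency_center(a2, f2))  # about 0.225: pulled toward 0.4 by the first IMF
```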

Fig. 11. (a) Iris image under bright illumination; (b) the same iris image under dark illumination; (c) the EMD decomposition of the 10th line of (a); (d) the EMD decomposition of the 10th line of (b); (e) image (a) with the residue of every line removed; (f) image (b) with the residue of every line removed. Panels (c) and (d) plot the line signal x, the IMFs c1–c6 and the residue r against the sample index (0–500).

To demonstrate the robustness of our method to noise, we added Gaussian white noise with an SNR of 20 dB to the iris image shown in Fig. 12(a). We then computed the main frequency centers and energies of the 18 orientations in I1 for both the original and the noisy normalized images, as shown in Figs. 12(b) and 12(c), respectively. Most features of the noisy iris image change only slightly from those of the original image. Therefore, the proposed features are robust to high-frequency noise.
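The noisy test images are obtained by adding Gaussian white noise at a prescribed SNR. A minimal sketch follows. It assumes the SNR is defined relative to the mean squared pixel value of the image, because the text does not give the exact definition. `add_white_noise` is a hypothetical helper name, and the sketch does not clip the result to the 8-bit range.

```python
import numpy as np


def add_white_noise(image, snr_db, rng):
    """Add zero-mean Gaussian white noise so that 10*log10(P_signal / P_noise) = snr_db in expectation."""
    image = np.asarray(image, dtype=float)
    signal_power = np.mean(image ** 2)  # assumed SNR reference: mean squared pixel value
    noise_power = signal_power / 10 ** (snr_db / 10)
    noise = rng.normal(0.0, np.sqrt(noise_power), size=image.shape)
    return image + noise


rng = np.random.default_rng(0)
normalized = rng.uniform(50, 200, size=(64, 512))  # stand-in for a normalized iris image
noisy = add_white_noise(normalized, snr_db=20, rng=rng)
measured = 10 * np.log10(np.mean(normalized ** 2) / np.mean((noisy - normalized) ** 2))
print(round(measured, 1))  # close to 20
```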

3.4. Iris matching

After feature extraction, an iris image is represented as a feature vector of length 108. To improve computational efficiency and classification accuracy, Linear Discriminant Analysis (LDA) first reduces the dimensionality of the feature vector, and the Euclidean distance is then used for classification. LDA is a linear statistical method that seeks a linear transform T with two simultaneous goals. After T is applied, the scatter of the sample vectors within each class is minimized, and the scatter of the class mean vectors around the total mean vector is maximized. Further details of LDA can be found in Ref. 8.
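The matching stage can be sketched as Fisher LDA followed by a Euclidean nearest-neighbor rule. The sketch forms the within-class scatter S_w and the between-class scatter S_b. It then keeps the leading generalized eigenvectors of S_b v = λ S_w v, which maximize the Fisher criterion |TᵀS_bT| / |TᵀS_wT|. Three details are assumptions rather than details given in the paper: the small ridge term added to S_w, the nearest-neighbor rule over all training samples, and the hypothetical names `fit_lda` and `match`.

```python
import numpy as np
from scipy.linalg import eigh


def fit_lda(X, y, n_components, ridge=1e-6):
    """Return a d x n_components projection T maximizing between- vs within-class scatter."""
    d = X.shape[1]
    mean_all = X.mean(axis=0)
    Sw = np.zeros((d, d))
    Sb = np.zeros((d, d))
    for c in np.unique(y):
        Xc = X[y == c]
        mean_c = Xc.mean(axis=0)
        centered = Xc - mean_c
        Sw += centered.T @ centered               # within-class scatter
        diff = (mean_c - mean_all)[:, None]
        Sb += len(Xc) * (diff @ diff.T)           # between-class scatter
    Sw += ridge * np.eye(d)                       # keep Sw positive definite (assumption)
    evals, evecs = eigh(Sb, Sw)                   # generalized problem; eigenvalues ascending
    return evecs[:, np.argsort(evals)[::-1][:n_components]]


def match(T, X_train, y_train, X_test):
    """Nearest-neighbor labels and the full Euclidean distance matrix in the LDA space."""
    P_train, P_test = X_train @ T, X_test @ T
    dists = np.linalg.norm(P_test[:, None, :] - P_train[None, :, :], axis=2)
    return y_train[np.argmin(dists, axis=1)], dists


# Toy data: 3 classes of 10-dimensional feature vectors (the paper uses 108-dimensional vectors).
rng = np.random.default_rng(1)
centers = rng.normal(scale=5.0, size=(3, 10))
y_train = np.repeat(np.arange(3), 20)
y_test = np.repeat(np.arange(3), 10)
X_train = centers[y_train] + rng.normal(size=(60, 10))
X_test = centers[y_test] + rng.normal(size=(30, 10))

T = fit_lda(X_train, y_train, n_components=2)
pred, dists = match(T, X_train, y_train, X_test)
print(np.mean(pred == y_test))  # correct recognition rate on the toy data
```

LDA yields at most C − 1 informative directions for C classes. With 306 classes, the 70 or more dimensions discussed in Sec. 4.1 are well within that bound.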

Fig. 12. (a) The original iris image; (b) the main frequency centers of the 18 orientations in I1 of the original normalized image ("o") and of the noisy normalized image ("•"), plotted against orientation; (c) the energies of the 18 orientations in I1 of the original normalized image ("o") and of the noisy normalized image ("•"), plotted against orientation.

4. Experimental Results

To evaluate the performance of the proposed method, we applied it to the widely used CASIA iris database (Ref. 1). The database contains 2255 iris images from 306 different eyes (hence, 306 different classes). The captured iris images are 8-bit grayscale images with a resolution of 320 × 280.

4.1. Performance of the proposed method

For each iris class, three samples taken in the first session are used for training, and all samples captured in the second and third sessions serve as test samples. This gives 918 training images (306 × 3) and 1337 test images (2255 − 918). Figure 13(a) shows how the correct recognition rate (CRR) varies with the dimensionality of the LDA-reduced feature vector. The CRR rises rapidly as the dimensionality increases. Once the dimensionality reaches 70 or higher, it levels off at an encouraging value of about 99.4%. In these experiments, we use the Euclidean distance for matching. The results indicate that the proposed method is feasible for practical applications.

Fig. 13. (a) Correct recognition rate (%) versus the dimensionality of the LDA-reduced feature vector; (b) distributions (density) of the intra- and inter-class normalized matching distances.

To evaluate the performance of the proposed method in verification mode, each test iris image is compared with all training iris images in the CASIA database. The total number of comparisons is therefore 1337 × 918 = 1,227,366. Of these, 1337 × 3 = 4011 are intra-class comparisons and 1337 × 915 = 1,223,355 are inter-class comparisons. Figure 13(b) shows the distributions of the intra- and inter-class matching distances on the CASIA iris database. The two distributions are far apart and overlap only slightly, which indicates that the proposed features are highly discriminating.
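In verification mode, every distance between a test image and a training image is labeled intra-class or inter-class according to whether the two images come from the same eye. The sketch below performs this split for a test-by-train distance matrix and checks the comparison counts quoted above for 306 classes with three training images each. `split_distances` is a hypothetical name. The test labels and distances are placeholders, because only the number of test images (1337) determines the counts.

```python
import numpy as np


def split_distances(dists, y_test, y_train):
    """Split an (n_test, n_train) distance matrix into intra- and inter-class distances."""
    same = y_test[:, None] == y_train[None, :]
    return dists[same], dists[~same]


y_train = np.repeat(np.arange(306), 3)  # 306 eyes x 3 training images = 918
y_test = np.arange(1337) % 306          # placeholder labels for the 1337 test images
dists = np.zeros((1337, 918))           # placeholder distances; only the counts matter here
intra, inter = split_distances(dists, y_test, y_train)
print(dists.size, intra.size, inter.size)  # 1227366 4011 1223355
```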

In verification mode, the receiver operating characteristic (ROC) curve and the equal error rate (EER) are used to evaluate the performance of the proposed method (Ref. 17). The ROC curve plots the false reject rate (FRR) against the false accept rate (FAR). It measures the accuracy of the matching process and shows the overall performance of an algorithm. Each point on the curve corresponds to one possible operating threshold, that is, one tradeoff between FAR and FRR. The EER is the point at which the FAR and the FRR are equal. The smaller the EER, the better the algorithm. Figure 14(a) shows the ROC curve of the proposed method on the CASIA iris database. The algorithm performs very well, with an EER of only 0.27%. With the threshold set to 17.2, the CRR reaches 99.7% while the FRR stays below 0.15%.
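The ROC curve and the EER can be computed directly from the intra- and inter-class distance sets. A minimal sketch follows. It assumes that a pair is accepted when its distance is at most the threshold, and it sweeps the threshold over all observed distances. The EER is approximated at the threshold where FAR and FRR are closest. The function names are hypothetical. Plotting `frr` against `far` over the swept thresholds gives an ROC curve like the one in Fig. 14(a).

```python
import numpy as np


def far_frr(intra, inter, thresholds):
    """FAR: fraction of inter-class pairs accepted; FRR: fraction of intra-class pairs rejected."""
    intra, inter = np.asarray(intra), np.asarray(inter)
    far = np.array([(inter <= t).mean() for t in thresholds])
    frr = np.array([(intra > t).mean() for t in thresholds])
    return far, frr


def equal_error_rate(intra, inter):
    """Approximate EER and its threshold by scanning every observed distance."""
    thresholds = np.unique(np.concatenate([intra, inter]))
    far, frr = far_frr(intra, inter, thresholds)
    i = np.argmin(np.abs(far - frr))
    return 0.5 * (far[i] + frr[i]), thresholds[i]


# Toy distances: one intra-class distance (5.0) exceeds one inter-class distance (4.0).
intra = np.array([1.0, 2.0, 3.0, 5.0])
inter = np.array([4.0, 6.0, 7.0, 8.0])
eer, t = equal_error_rate(intra, inter)
print(eer, t)  # 0.25 4.0
```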

Fig. 14. (a) The ROC curve (false reject rate (%) versus false accept rate (%)) of our method on the CASIA iris database; (b) the correct recognition rate when Gaussian white noise with SNRs of 40, 30, 20 and 10 dB is added to the test iris images.

As discussed in Sec. 3.3.2, our method is robust to high-frequency noise. To evaluate this robustness on the full test set, we added Gaussian white noise with different signal-to-noise ratios (SNRs) to the test iris images. The results, shown in Fig. 14(b), are encouraging. The correct recognition rates are 97.2%, 95.6%, 92.2% and 87.5% for SNRs of 40, 30, 20 and 10 dB, respectively.

4.2. Comparison with existing methods

Among existing iris recognition methods, those proposed by Daugman (Ref. 7), Boles and Boashash (Ref. 4), and Tan and co-workers (Refs. 14 and 15) are among the best known, and they characterize the iris from different viewpoints. To further demonstrate the effectiveness of our method, we compare it with these four methods on the CASIA iris database. Table 1 shows the experimental results. Our method performs comparably to the best iris recognition algorithm. Its feature vector also has a much lower dimension: 108, compared with 660 to 2048 for the other methods. Furthermore, as discussed above, our method is rotation-invariant and illumination-invariant, and it is robust to high-frequency noise.

Table 1. Feature dimension and correct recognition rate of several well-known methods.

| Method | Feature dimension | Correct recognition rate (%) |
|---|---|---|
| Boles and Boashash (Ref. 4) | 1024 | 92.4 |
| Daugman (Ref. 7) | 2048 | 100 |
| Tan and co-workers (Ref. 15) | 660 | 99.6 |
| Tan and co-workers (Ref. 14) | 1536 | 99.43 |
| Our method | 108 | 99.4 |


5. Conclusions

Iris recognition has recently received increasing attention for human identification because of its high reliability. HHT is an analysis method for nonlinear and nonstationary data. In this paper, we have presented an efficient iris recognition method based on HHT that extracts main frequency center information. The new method has several advantages. First, its feature vector has a very low dimension compared with those of other well-known methods. Second, unlike most other iris recognition methods, it needs no image enhancement during iris image preprocessing and is illumination-invariant. Third, most existing methods achieve only approximate rotation invariance by storing several templates at other rotation angles, whereas our method is truly rotation-invariant. Fourth, it is robust to high-frequency noise. Moreover, the experimental results on the CASIA iris database show that the correct recognition rate of the proposed method is encouraging and comparable to that of the best iris recognition algorithm. Finally, the proposed method demonstrates that HHT is a powerful tool for feature extraction, and HHT is likely to be useful for many other pattern recognition tasks.

Acknowledgments

Portions of the research in this paper use the CASIA iris image database collected by the Institute of Automation, Chinese Academy of Sciences.

This work is supported by NSFC (Nos. 10631080, 60873088, 60475042) and NSFGD (No. 9451027501002552).

References

1. CASIA iris image database, http://www.sinobiometrics.com.
2. F. H. Adler, Physiology of the Eye (Mosby, 1965).
3. N. Bi, Q. Sun, D. Huang, Z. Yang and J. Huang, Robust image watermarking based on multiband wavelets and empirical mode decomposition, IEEE Trans. Image Process. 16(8) (2007) 1956–1966.
4. W. Boles and B. Boashash, A human identification technique using images of the iris and wavelet transform, IEEE Trans. Signal Process. 46(4) (1998) 1185–1188.
5. C.-P. Chang, J.-C. Lee, Y. Su, P. S. Huang and T.-M. Tu, Using empirical mode decomposition for iris recognition, Comput. Standards Interfaces 31(4) (2009) 729–739.
6. J. Daugman, High confidence visual recognition of persons by a test of statistical independence, IEEE Trans. Pattern Anal. Mach. Intell. 15(11) (1993) 1148–1161.
7. J. Daugman, Statistical richness of visual phase information: Update on recognizing persons by iris patterns, Int. J. Comput. Vision 45(1) (2001) 25–38.
8. R. A. Fisher, The use of multiple measures in taxonomic problems, Ann. Eugenics 7 (1936) 179–188.
9. P. Flandrin, G. Rilling and P. Goncalves, Empirical mode decomposition as a filter bank, IEEE Signal Process. Lett. 11(2) (2004) 112–114.
10. N. E. Huang, Z. Shen and S. R. Long, A new view of nonlinear water waves: The Hilbert spectrum, Ann. Rev. Fluid Mechanics 31 (1999) 417–457.


11. N. E. Huang, Z. Shen, S. R. Long, M. C. Wu, H. H. Shih, Q. Zheng, N.-C. Yen, C. C. Tung and H. H. Liu, The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis, Proc. Roy. Soc. London A 454 (1998) 903–995.
12. W. Huang, Z. Shen, N. E. Huang and Y. C. Fung, Engineering analysis of biological variables: An example of blood pressure over 1 day, Proc. Natl. Acad. Sci. USA 95 (1998) 4816–4821.
13. A. Jain, R. Bolle and S. Pankanti, Biometrics: Personal Identification in Networked Society (Kluwer, Norwell, MA, 1999).
14. L. Ma, T. Tan, Y. Wang and D. Zhang, Personal identification based on iris texture analysis, IEEE Trans. Pattern Anal. Mach. Intell. 25(12) (2003) 1519–1533.
15. L. Ma, T. Tan, Y. Wang and D. Zhang, Efficient iris recognition by characterizing key local variations, IEEE Trans. Image Process. 13(6) (2004) 739–750.
16. L. Ma, T. Tan, D. Zhang and Y. Wang, Local intensity variation analysis for iris recognition, Patt. Recogn. 37(6) (2005) 1287–1298.
17. A. Mansfield and J. Wayman, Best Practices in Testing and Reporting Performance of Biometric Devices (National Physical Laboratory of UK, 2002).
18. S. Meignen and V. Perrier, A new formulation for empirical mode decomposition based on constrained optimization, IEEE Signal Process. Lett. 14(12) (2007) 932–935.
19. J. C. Nunes, S. Guyot and E. Delechelle, Texture analysis based on local analysis of the bidimensional empirical mode decomposition, Mach. Vision Appl. 16(3) (2005) 177–188.
20. S. C. Phillips, R. J. Gledhill, J. W. Essex and C. M. Edge, Application of the Hilbert–Huang transform to the analysis of molecular dynamic simulations, J. Phys. Chem. A 107 (2003) 4869–4876.
21. G. Rilling and P. Flandrin, One or two frequencies? The empirical mode decomposition answers, IEEE Trans. Signal Process. 56(1) (2007) 85–95.
22. C. Sanchez-Avila and R. Sanchez-Reillo, Iris-based biometric recognition using dyadic wavelet transform, IEEE Aerosp. Electron. Syst. Mag. 17 (2002) 3–6.
23. C. Tisse, L. Martin, L. Torres and M. Robert, Person identification technique using human iris recognition, in Proc. Vision Interface (2002), pp. 294–299.
24. R. Wildes, J. Asmuth, G. Green, S. Hsu, R. Kolczynski, J. Matey and S. McBride, A machine-vision system for iris recognition, Mach. Vision Appl. 9 (1996) 1–8.
25. Z. Yang, D. Huang and L. Yang, A novel pitch period detection algorithm based on Hilbert–Huang transform, Lecture Notes Comput. Sci. 3338 (2004) 586–593.
26. Z. Yang, D. Qi and L. Yang, Signal period analysis based on Hilbert–Huang transform and its application to texture analysis, in Proceedings of the Third International Conference on Image and Graphics, Hong Kong, China (18–20 Dec. 2004), pp. 430–433.
27. Z. Yang and L. Yang, A novel texture classification method using multidirections main frequency center, in Proceedings of the 2007 International Conference on Wavelet Analysis and Pattern Recognition, Beijing, China (2–4 Nov. 2007), pp. 1372–1376.
28. Z. Yang, L. Yang, D. Qi and C. Y. Suen, An EMD-based recognition method for Chinese fonts and styles, Patt. Recogn. Lett. 27 (2006) 1692–1701.
29. D. Zhang, Automated Biometrics: Technologies and Systems (Kluwer, Norwell, MA, 2000).