UNIT-4: PARALLEL COMPUTER MODELS STRUCTURE - Csbdu.in

UNIT-4: PARALLEL COMPUTER MODELS STRUCTURE - Csbdu.in

UNIT-4: PARALLEL COMPUTER MODELS STRUCTURE - Csbdu.in

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

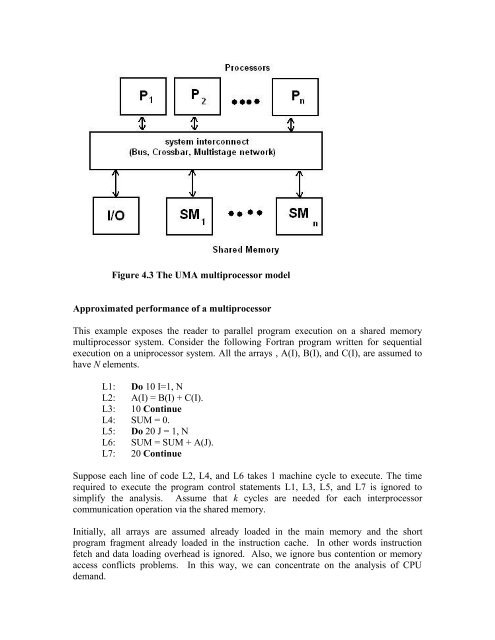

Figure 4.3 The UMA multiprocessor model<br />

Approximated performance of a multiprocessor<br />

This example exposes the reader to parallel program execution on a shared memory<br />

multiprocessor system. Consider the follow<strong>in</strong>g Fortran program written for sequential<br />

execution on a uniprocessor system. All the arrays , A(I), B(I), and C(I), are assumed to<br />

have N elements.<br />

L1: Do 10 I=1, N<br />

L2: A(I) = B(I) + C(I).<br />

L3: 10 Cont<strong>in</strong>ue<br />

L4: SUM = 0.<br />

L5: Do 20 J = 1, N<br />

L6: SUM = SUM + A(J).<br />

L7: 20 Cont<strong>in</strong>ue<br />

Suppose each l<strong>in</strong>e of code L2, L4, and L6 takes 1 mach<strong>in</strong>e cycle to execute. The time<br />

required to execute the program control statements L1, L3, L5, and L7 is ignored to<br />

simplify the analysis. Assume that k cycles are needed for each <strong>in</strong>terprocessor<br />

communication operation via the shared memory.<br />

Initially, all arrays are assumed already loaded <strong>in</strong> the ma<strong>in</strong> memory and the short<br />

program fragment already loaded <strong>in</strong> the <strong>in</strong>struction cache. In other words <strong>in</strong>struction<br />

fetch and data load<strong>in</strong>g overhead is ignored. Also, we ignore bus contention or memory<br />

access conflicts problems. In this way, we can concentrate on the analysis of CPU<br />

demand.