Curl-Curl-Eigenvalue Equation - Institut für Allgemeine ...


j ≡ 0<br />

S<br />

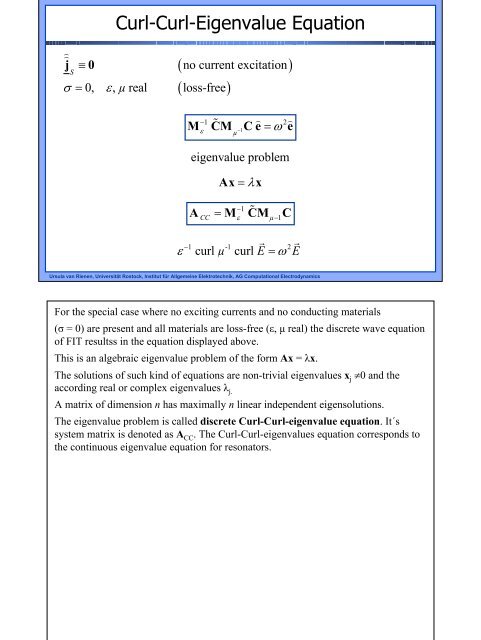

<strong>Curl</strong>-<strong>Curl</strong>-<strong>Eigenvalue</strong> <strong>Equation</strong><br />

σ = 0, ε, µ real loss-free<br />

( no current excitation)<br />

( )<br />

M CM <br />

Ce = ω e<br />

−1 2<br />

ε<br />

−1<br />

µ<br />

ε<br />

eigenvalue problem<br />

Ax = λx<br />

A = M CM C<br />

−1<br />

CC ε µ −1<br />

<br />

curl µ curl E = ω E<br />

−1 -1 2<br />

Ursula van Rienen, Universität Rostock, Institut für Allgemeine Elektrotechnik, AG Computational Electrodynamics

For the special case where no exciting currents and no conducting materials (σ = 0) are present and all materials are loss-free (ε, µ real), the discrete wave equation of FIT results in the equation displayed above.

This is an algebraic eigenvalue problem of the form $\mathbf{A}\mathbf{x} = \lambda\mathbf{x}$. Its solutions are the non-trivial eigenvectors $\mathbf{x}_j \neq 0$ and the corresponding real or complex eigenvalues $\lambda_j$. A matrix of dimension $n$ has at most $n$ linearly independent eigenvectors.

The eigenvalue problem is called the discrete Curl-Curl-eigenvalue equation; its system matrix is denoted as $\mathbf{A}_{CC}$. The Curl-Curl-eigenvalue equation corresponds to the continuous eigenvalue equation for resonators.
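The structure of $\mathbf{A}_{CC}$ can be made concrete with a small dense sketch. It assumes a periodic $n \times n \times n$ grid with unit step size (so no boundary conditions enter), random positive diagonal material matrices, and the FIT relation $\tilde{\mathbf{C}} = \mathbf{C}^T$. The helpers `fit_periodic_operators` and `assemble_a_cc` are hypothetical names; a real FIT code would use sparse storage rather than dense NumPy arrays.

```python
import numpy as np


def fit_periodic_operators(n):
    """Hypothetical helper: primal curl C and dual divergence S~ of a
    FIT-like n x n x n grid with periodic closure and unit step size."""
    I = np.eye(n)
    D = np.roll(I, 1, axis=1) - I          # cyclic forward difference f[i+1] - f[i]
    Px = np.kron(I, np.kron(I, D))         # x index runs fastest
    Py = np.kron(I, np.kron(D, I))
    Pz = np.kron(D, np.kron(I, I))
    Z = np.zeros_like(Px)
    C = np.block([[Z, -Pz, Py], [Pz, Z, -Px], [-Py, Px, Z]])
    S_dual = -np.hstack([Px.T, Py.T, Pz.T])  # S~ = -G^T with G = [Px; Py; Pz]
    return C, S_dual


def assemble_a_cc(C, eps, mu):
    """A_CC = M_eps^{-1} C~ M_mu^{-1} C for diagonal M_eps, M_mu, using C~ = C^T."""
    return ((C.T / mu) @ C) / eps[:, None]


n = 4
C, S_dual = fit_periodic_operators(n)
rng = np.random.default_rng(0)
eps = rng.uniform(1.0, 4.0, 3 * n**3)      # diagonal of M_eps (one entry per edge)
mu = rng.uniform(1.0, 2.0, 3 * n**3)       # diagonal of M_mu (one entry per face)
A_cc = assemble_a_cc(C, eps, mu)
print(A_cc.shape)                            # (192, 192) = (3 N_P, 3 N_P)
```

With inhomogeneous (here random) materials the assembled matrix is visibly nonsymmetric.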

Curl-Curl-Eigenvalue Equation

Use the transformation $\mathbf{e}' = \mathbf{M}_\varepsilon^{1/2}\mathbf{e}$ to derive an equation with a symmetric system matrix:

$$\mathbf{M}_\varepsilon^{-1/2}\tilde{\mathbf{C}}\mathbf{M}_\mu^{-1}\mathbf{C}\mathbf{M}_\varepsilon^{-1/2}\,\mathbf{e}' = \omega^2\,\mathbf{e}'$$

The system matrix

$$\mathbf{A}' = \mathbf{M}_\varepsilon^{1/2}\mathbf{A}_{CC}\mathbf{M}_\varepsilon^{-1/2} = \mathbf{M}_\varepsilon^{-1/2}\tilde{\mathbf{C}}\mathbf{M}_\mu^{-1}\mathbf{C}\mathbf{M}_\varepsilon^{-1/2} = \left(\mathbf{M}_\varepsilon^{-1/2}\tilde{\mathbf{C}}\mathbf{M}_\mu^{-1/2}\right)\left(\mathbf{M}_\varepsilon^{-1/2}\tilde{\mathbf{C}}\mathbf{M}_\mu^{-1/2}\right)^T$$

is symmetric.


The eigenvalues of both equations are the squared resonance frequencies $\omega^2$, and each eigenvector corresponds to the field of the associated resonator mode.

Now we can study the properties of the FIT discretization by analyzing the algebraic properties of the system matrix $\mathbf{A}_{CC}$.

In the original form of the Curl-Curl-eigenvalue equation the matrix $\mathbf{A}_{CC}$ is nonsymmetric. Using the transformation of the electric grid voltage given above, however, we obtain the formulation above with the real symmetric system matrix $\mathbf{A}'$. Since $\mathbf{A}' = \mathbf{M}_\varepsilon^{1/2}\mathbf{A}_{CC}\mathbf{M}_\varepsilon^{-1/2}$ is a similarity transformation, the eigenvalues of $\mathbf{A}_{CC}$ and $\mathbf{A}'$ are identical.
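A quick numerical check of the symmetrization, assuming a random square matrix as a stand-in for $\mathbf{C}$ (the algebraic argument does not depend on the FIT structure) and random positive diagonal material matrices: $\mathbf{A}'$ is symmetric, factorizes as $\mathbf{B}\mathbf{B}^T$, and has the same real, non-negative eigenvalues as the nonsymmetric $\mathbf{A}_{CC}$.

```python
import numpy as np

rng = np.random.default_rng(1)
m = 12
C = rng.standard_normal((m, m))     # stand-in for a curl matrix (assumption)
eps = rng.uniform(1.0, 4.0, m)       # diagonal of M_eps
mu = rng.uniform(1.0, 2.0, m)        # diagonal of M_mu

# nonsymmetric A_CC = M_eps^-1 C^T M_mu^-1 C (with C~ = C^T)
A_cc = np.diag(1 / eps) @ C.T @ np.diag(1 / mu) @ C
# similarity transformation A' = M_eps^1/2 A_CC M_eps^-1/2
A_sym = np.diag(np.sqrt(eps)) @ A_cc @ np.diag(1 / np.sqrt(eps))
# factor B = M_eps^-1/2 C~ M_mu^-1/2, so that A' = B B^T
B = np.diag(1 / np.sqrt(eps)) @ C.T @ np.diag(1 / np.sqrt(mu))

lam_cc = np.sort(np.linalg.eigvals(A_cc).real)
lam_sym = np.linalg.eigvalsh(A_sym)   # ascending and real by construction
print(np.allclose(lam_cc, lam_sym))
```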

Solution Space of Eigenvalue Problem

$$\lambda_i = \omega_i^2 \ge 0 \quad \forall\, \lambda_i = \text{eigenvalue of } \mathbf{A}'$$

Consistency between mathematical model and physics is based upon

• Symmetry of the curl operators: $\mathbf{C}^T = \tilde{\mathbf{C}}$

• Possibility to split the material operators: $\mathbf{M}_\varepsilon = \mathbf{M}_\varepsilon^{1/2}\mathbf{M}_\varepsilon^{1/2}$ and $\mathbf{M}_\mu = \mathbf{M}_\mu^{1/2}\mathbf{M}_\mu^{1/2}$ (real diagonal matrices with non-negative entries)

Consider

$$\underbrace{\tilde{\mathbf{S}}\tilde{\mathbf{C}}}_{=\,0}\mathbf{M}_\mu^{-1}\mathbf{C}\,\mathbf{e} = \omega^2\,\tilde{\mathbf{S}}\mathbf{M}_\varepsilon\mathbf{e} = \omega^2\,\tilde{\mathbf{S}}\mathbf{d} = 0 \quad\Rightarrow\quad \text{either } \omega = 0 \text{ or } \tilde{\mathbf{S}}\mathbf{d} = 0$$


The solutions of the discrete eigenvalue equation are not only of interest if we directly want to determine the oscillating modes in resonators; they also hold information about the behaviour of time-varying fields obtained by FIT discretization.

Using purely algebraic reasoning we can get information about the location of the eigenvalues: because of its symmetry, the matrix $\mathbf{A}'$ (and thus also $\mathbf{A}_{CC}$) has purely real eigenvalues. From its representation as the product of a matrix and its own transpose it also follows that all eigenvalues are non-negative ($\mathbf{A}'$ is positive semi-definite).

Thus, the eigenfrequencies $\omega_j$ are also real and non-negative. This corresponds to the physical property that loss-free resonators have real eigenfrequencies only.

This consistency between the mathematical model and physics is not self-evident. In this case it is based on

• the symmetry of the discrete curl operators of FIT,

• the possibility to decompose the material operators, because they are real diagonal matrices with non-negative entries.

A further hint about the solution space of the eigenvalue problem can be obtained from the following physical consideration: if we multiply the Curl-Curl-eigenvalue equation from the left by $\mathbf{M}_\varepsilon$ and then take the divergence (multiplying from the left by the discrete dual divergence operator $\tilde{\mathbf{S}}$), we get the equation displayed above. Its left-hand side vanishes because $\tilde{\mathbf{S}}\tilde{\mathbf{C}} = 0$ (div curl = 0). This means that for all eigensolutions either the eigenfrequency $\omega$ or the divergence of the electric flux density has to vanish.
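Both statements can be checked on a small example. This sketch builds FIT-like operators on a periodic $4 \times 4 \times 4$ grid with unit step size and random positive materials (all assumptions; `fit_periodic_operators` is a hypothetical helper) and verifies that the spectrum of $\mathbf{A}'$ is real and non-negative and that every eigenpair has either $\omega^2 \approx 0$ or $\tilde{\mathbf{S}}\mathbf{d} \approx 0$.

```python
import numpy as np


def fit_periodic_operators(n):
    """Hypothetical helper: primal curl C and dual divergence S~ on an
    n x n x n periodic grid with unit step size."""
    I = np.eye(n)
    D = np.roll(I, 1, axis=1) - I          # cyclic forward difference
    Px = np.kron(I, np.kron(I, D))
    Py = np.kron(I, np.kron(D, I))
    Pz = np.kron(D, np.kron(I, I))
    Z = np.zeros_like(Px)
    C = np.block([[Z, -Pz, Py], [Pz, Z, -Px], [-Py, Px, Z]])
    S_dual = -np.hstack([Px.T, Py.T, Pz.T])
    return C, S_dual


n = 4
C, S_dual = fit_periodic_operators(n)
rng = np.random.default_rng(2)
eps = rng.uniform(1.0, 4.0, 3 * n**3)
mu = rng.uniform(1.0, 2.0, 3 * n**3)
s = np.sqrt(eps)

# A' = M_eps^-1/2 C^T M_mu^-1 C M_eps^-1/2 (uses C~ = C^T)
A_sym = ((C.T / mu) @ C) / s[:, None] / s[None, :]
lam, V = np.linalg.eigh(A_sym)            # real eigenvalues, orthonormal e'
E = V / s[:, None]                        # back-transform: e = M_eps^-1/2 e'
div_d = S_dual @ (eps[:, None] * E)       # column j holds S~ d_j, d_j = M_eps e_j

tol = 1e-8 * lam.max()
is_static = np.abs(lam) < tol                          # omega^2 = 0
is_div_free = np.linalg.norm(div_d, axis=0) < 1e-8     # S~ d = 0
print(lam.min() >= -tol, np.all(is_static | is_div_free))
```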

Solution Space of Eigenvalue Problem

Solution space $L$ can be decomposed:

$$L = \underbrace{L_S}_{\omega^2 = 0} \cup \underbrace{L_d}_{\omega^2 \neq 0}$$

1. Subspace $L_S$ of electrostatic solutions with $\omega = 0$:

• potential approach: $\mathbf{E}_S = -\mathrm{grad}\,\varphi$ and $\mathbf{e}_S = -\left(-\tilde{\mathbf{S}}^T\right)\boldsymbol{\Phi}$

• because of curl grad = 0 and $\mathbf{C}\tilde{\mathbf{S}}^T = \left(\tilde{\mathbf{S}}\tilde{\mathbf{C}}\right)^T = 0$: $\mathrm{curl}\,\mathbf{E}_S = 0$ and $\mathbf{C}\mathbf{e}_S = 0$ ⇒ $\omega = 0$

• in general: $\mathrm{div}\,\mathbf{D}_S \neq 0$ and $\tilde{\mathbf{S}}\mathbf{d}_S \neq 0$


The solution space $L$ of the Curl-Curl-eigenvalue equation can thus be split into two complementary subspaces: $L_S$ is the space of static solutions, since it holds all eigensolutions with $\lambda = \omega^2 = 0$. $L_d$ is the space of dynamic solutions, which all have resonance frequencies above zero and thus correspond to harmonically oscillating fields.

Using the properties of the FIT discretization, we find the following for the eigenvectors (i.e. the discrete field vectors) of both solution classes:

1. Electrostatic fields with $\omega = 0$: Such fields may be represented as the gradient of a scalar potential. Because of curl grad = 0, the curl of the electric field and of the electric grid voltage vanishes for these modes. Inserting this into the Curl-Curl-eigenvalue equation, we obtain $\omega = 0$, as it should be.

In a grid of $N_P$ points there exist about $N_P$ linearly independent potential vectors $\boldsymbol{\Phi}$ and thus about $N_P$ linearly independent static eigenmodes (ignoring points with fixed potential, e.g. at the boundary). This means that the eigenvalue $\lambda = 0$ is (about) $N_P$-fold degenerate.

In general, the potential distribution does not fulfil the Laplace equation, so that we need to assume charges at the grid points for such solutions to exist. This means that the divergence of the flux density is not equal to zero.

Note: In problems with multiple conductors (structures with several disjoint ideal conductors), there also exist some modes with $\omega = 0$ which are both divergence- and curl-free. We will not treat these cases here.
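The static subspace can be generated directly from node potentials. Assuming a periodic $4 \times 4 \times 4$ grid with unit step size and random permittivities (`fit_periodic_operators` is a hypothetical helper), the sketch builds $\mathbf{e}_S = -(-\tilde{\mathbf{S}}^T)\boldsymbol{\Phi}$ from a random potential, confirms $\mathbf{C}\mathbf{e}_S = 0$, and shows that $\tilde{\mathbf{S}}\mathbf{d}_S$ is generally non-zero.

```python
import numpy as np


def fit_periodic_operators(n):
    """Hypothetical helper: primal curl C and dual divergence S~ on an
    n x n x n periodic grid with unit step size."""
    I = np.eye(n)
    D = np.roll(I, 1, axis=1) - I          # cyclic forward difference
    Px = np.kron(I, np.kron(I, D))
    Py = np.kron(I, np.kron(D, I))
    Pz = np.kron(D, np.kron(I, I))
    Z = np.zeros_like(Px)
    C = np.block([[Z, -Pz, Py], [Pz, Z, -Px], [-Py, Px, Z]])
    S_dual = -np.hstack([Px.T, Py.T, Pz.T])
    return C, S_dual


n = 4
C, S_dual = fit_periodic_operators(n)
rng = np.random.default_rng(3)
phi = rng.standard_normal(n**3)        # arbitrary node potentials Phi
e_s = S_dual.T @ phi                   # e_S = -(-S~^T) Phi, the discrete -grad phi
eps = rng.uniform(1.0, 4.0, 3 * n**3)  # random permittivity per edge (assumption)

curl_e = C @ e_s                       # C S~^T = 0, hence C e_S = 0 and omega = 0
div_d = S_dual @ (eps * e_s)           # S~ d_S = S~ M_eps e_S: charges, non-zero in general
n_potential_modes = np.linalg.matrix_rank(S_dual.T)
print(np.abs(curl_e).max(), np.linalg.norm(div_d))
print(n_potential_modes)               # 63 = N_P - 1: a constant potential gives no field
```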

Solution Space of Eigenvalue Problem

2. Subspace $L_d$ of dynamic solutions with $\omega \neq 0$:

• from Faraday's law we have: $\mathrm{curl}\,\mathbf{E}_d \neq 0$ and $\mathbf{C}\mathbf{e}_d \neq 0$

• from Ampère's law we get: $\mathbf{D}_d = \frac{1}{j\omega}\,\mathrm{curl}\,\mathbf{H}_d$ and $\mathbf{d}_d = \frac{1}{j\omega}\,\tilde{\mathbf{C}}\mathbf{h}_d$

• because of div curl = 0 and $\tilde{\mathbf{S}}\tilde{\mathbf{C}} = 0$ we have $\mathrm{div}\,\mathbf{D}_d = 0$ and $\tilde{\mathbf{S}}\mathbf{d}_d = 0$

$$L = \underbrace{L_S}_{\omega^2 = 0} \cup \underbrace{L_d}_{\omega^2 \neq 0}$$


2. Dynamic eigen-oscillations with $\omega \neq 0$: According to Faraday's induction law these modes possess a non-vanishing curl contribution.

The corresponding electric grid flux can be represented with the help of Ampère's law. Because of div curl = 0, the dynamic modes are divergence-free.

The exact separation of both subspaces, with provably curl-free and divergence-free modes, thus holds in the discrete case precisely because the matrix operators fulfil the necessary conditions ($\mathbf{C}\tilde{\mathbf{S}}^T = 0$ and $\tilde{\mathbf{S}}\tilde{\mathbf{C}} = 0$). This is not at all self-evident, and for some other discretization methods it does not hold!

According to our previous considerations, the eigenvalue problem of dimension $3N_P$ has the about $N_P$-fold degenerate eigenvalue $\omega^2 = 0$ and only about $2N_P$ non-zero eigenvalues.

The static modes, however, are meaningful solutions of Maxwell's equations if and only if the charge distribution resulting from $\mathrm{div}\,\mathbf{D}_S \neq 0$ is allowed in the solution domain. Since this is generally not the case, as usually $\mathrm{div}\,\mathbf{D} = 0$ is assumed for harmonic problems, our system matrix turns out to be "too large" by about one third.
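The "about $N_P$" and "about $2N_P$" counts can be made exact for a simple test case. The sketch assumes a periodic $4 \times 4 \times 4$ grid ($N_P = 64$) with unit step size and random positive materials, and uses a hypothetical helper `fit_periodic_curl`. On such a periodic grid one finds $N_P + 2$ zero eigenvalues: the constant potential produces no field (−1), while three constant fields are both curl- and divergence-free (+3), comparable to the multi-conductor modes mentioned in the notes.

```python
import numpy as np


def fit_periodic_curl(n):
    """Hypothetical helper: primal curl C on an n x n x n periodic grid (unit step)."""
    I = np.eye(n)
    D = np.roll(I, 1, axis=1) - I          # cyclic forward difference
    Px = np.kron(I, np.kron(I, D))
    Py = np.kron(I, np.kron(D, I))
    Pz = np.kron(D, np.kron(I, I))
    Z = np.zeros_like(Px)
    return np.block([[Z, -Pz, Py], [Pz, Z, -Px], [-Py, Px, Z]])


n = 4
N_P = n**3
C = fit_periodic_curl(n)
rng = np.random.default_rng(4)
eps = rng.uniform(1.0, 4.0, 3 * N_P)
mu = rng.uniform(1.0, 2.0, 3 * N_P)
s = np.sqrt(eps)
A_sym = ((C.T / mu) @ C) / s[:, None] / s[None, :]   # symmetric A'

lam = np.linalg.eigvalsh(A_sym)
n_zero = int(np.sum(np.abs(lam) < 1e-8 * lam.max()))
print(3 * N_P, n_zero, 3 * N_P - n_zero)   # 192 66 126
```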

Solution Space of Eigenvalue Problem

[Figure: spectrum in the complex plane (axes Re{λ}, Im{λ}): N-fold eigenvalue 0 (static solutions) and the eigenvalues ω_1², ω_2², …, ω_N² on the positive real axis; smallest (non-zero) eigenvalue = fundamental oscillation]

Symmetry and positive semi-definiteness of $\mathbf{A}'$ ⇒ orthogonality of the modes:

$$\mathbf{e}'_i \cdot \mathbf{e}'_j = 0 \quad \forall\, i, j \text{ with } \lambda_i \neq \lambda_j$$

Orthogonality condition after back-transformation:

$$\mathbf{e}_i \cdot \mathbf{M}_\varepsilon\mathbf{e}_j = 0 \quad\text{and}\quad \mathbf{e}_i \cdot \mathbf{d}_j = 0 \quad (i \neq j)$$

$\mathbf{e}_i \cdot \mathbf{d}_j$ = 4 × stored electric energy (non-zero only for $i = j$)


Consequently, we should search for a transformation which reduces the dimension of the system matrix from $3N_P$ to $2N_P$. Building in the discrete Gauss law for electricity with vanishing right-hand side (no charges) would yield such a transformation, but it would destroy the band structure of the matrix and thus require a higher computational effort for its storage.

Instead, it is more elegant to control the static modes by a modified formulation of the wave equation and/or by specific algebraic solution methods, which will be treated later.

Another property concerns the solutions of the Curl-Curl matrix $\mathbf{A}'$: any two eigenvectors of this matrix are orthogonal if the corresponding eigenvalues differ. Hence, orthogonality holds for all pairs of non-degenerate modes, including each pair formed by one dynamic and one static mode. For degenerate dynamic modes (with $\lambda_i = \lambda_j \neq 0$) orthogonal linear combinations can always be found.

After back-transformation from $\mathbf{A}'$ to $\mathbf{A}_{CC}$ we obtain the orthogonality relation for dynamic eigenmodes given above.

The scalar product of the vectors of electric grid voltage and electric grid flux formally corresponds to four times the stored electric energy, which is non-vanishing only if both vectors belong to the same mode.
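The orthogonality relations can be verified with a generic stand-in: a random square matrix in place of $\mathbf{C}$ and random positive diagonal materials (both assumptions). Because `numpy.linalg.eigh` returns orthonormal $\mathbf{e}'_i$, the back-transformed vectors satisfy $\mathbf{e}_i \cdot \mathbf{d}_j = \delta_{ij}$ in this normalization.

```python
import numpy as np

rng = np.random.default_rng(5)
m = 10
C = rng.standard_normal((m, m))    # stand-in for the curl matrix (assumption)
eps = rng.uniform(1.0, 4.0, m)      # diagonal of M_eps
mu = rng.uniform(1.0, 2.0, m)       # diagonal of M_mu
s = np.sqrt(eps)

A_sym = ((C.T / mu) @ C) / s[:, None] / s[None, :]   # symmetric A'
lam, V = np.linalg.eigh(A_sym)      # columns of V: orthonormal e'_i
E = V / s[:, None]                  # e_i = M_eps^{-1/2} e'_i
D = eps[:, None] * E                # d_i = M_eps e_i
G = E.T @ D                         # G[i, j] = e_i . d_j
print(np.allclose(G, np.eye(m)))    # zero for i != j, normalized to 1 for i = j
```

The diagonal entries are 1 only because of the chosen normalization; for physical field amplitudes they would be four times the stored electric energy of each mode.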

Grid Dispersion Equation

Plane waves:

$$\mathbf{E} = \mathbf{E}_0\,e^{j(\omega t - \mathbf{k}\cdot\mathbf{r})}$$

Dispersion equation:

$$k^2 = k_x^2 + k_y^2 + k_z^2 = \frac{\omega^2}{c^2}$$

With

$$e^{-j\mathbf{k}\cdot\mathbf{r}} = e^{-jk_x x}\,e^{-jk_y y}\,e^{-jk_z z}, \qquad T_x = e^{-jk_x\Delta x},\quad T_y = e^{-jk_y\Delta y},\quad T_z = e^{-jk_z\Delta z}$$

we find the phase factors by which the complex grid voltage is multiplied when the wave proceeds by one step size in the x-, y- or z-direction.


Based on the discrete wave equation, we next introduce the so-called grid dispersion equation, which allows for an extensive analysis of the properties of the Maxwell-Grid-Equations (MGE) for time-dependent fields.

The basis for the derivation is Fourier's theorem, according to which each wave may be represented as a sum of elementary waves. In electrical engineering, this theorem is mainly applied to purely time-dependent signals. It may also be applied to space-dependent electromagnetic fields: the elementary waves are then fields which can be represented in space and time by harmonic functions, i.e. sine, cosine or the complex exponential function. Physically, these are just plane waves.

In homogeneous space (vacuum), plane waves are solutions of the (continuous) Maxwell's equations for which the frequency $\omega$ and the wave number $k = |\mathbf{k}|$ fulfil the dispersion equation.

The idea of the following analysis is to check under which conditions plane waves exist on the grid, i.e. whether we can derive a corresponding representation for the discrete field vectors which solves the MGE. First, we study the time-harmonic case.

In order to eliminate the influence of the boundary conditions, we assume an infinitely large Cartesian computational grid with an equidistant step size in all three coordinate directions, i.e. $\Delta u = \Delta v = \Delta w$ for all $i = 1, \dots, I$, $j = 1, \dots, J$, $k = 1, \dots, K$. Further, we assume constant material coefficients $\varepsilon_0$, $\mu_0$ and $\sigma = 0$ (vacuum).

Taking the continuous definition of a plane wave, we impress the given electric field of the plane wave onto the components of the electric grid voltage by evaluating the corresponding path integral for each edge of the grid. We then find that the complex grid voltage is multiplied by the phase factors given above as the wave proceeds by one grid step in the x-, y- or z-direction, respectively.

Thus, the complete discrete field vector can be described by one voltage component per spatial direction, denoted as shown here (a hat marks the wave amplitudes). As an arbitrary origin for these discrete wave amplitudes we choose the three voltage components associated with some grid point $n_0$ (usual indexing).
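The phase factors follow directly from the path integrals. The sketch below assumes a plane wave with an x-component $E_{0x}$ and an example wave vector with $k_x \neq 0$, integrates it along x-edges in closed form, and shows that the ratio of neighbouring edge voltages reproduces $T_x$, $T_y$ and $T_z$. The helper `edge_voltage_x` is hypothetical.

```python
import numpy as np


def edge_voltage_x(E0x, k, r0, dx):
    """Hypothetical helper: integral of E0x * exp(-j k.r) along the x-edge
    from r0 to r0 + (dx, 0, 0); assumes k[0] != 0."""
    kx, ky, kz = k
    x0, y0, z0 = r0
    transverse = np.exp(-1j * (ky * y0 + kz * z0))
    # closed-form integral of exp(-j kx x) over [x0, x0 + dx]
    axial = (np.exp(-1j * kx * (x0 + dx)) - np.exp(-1j * kx * x0)) / (-1j * kx)
    return E0x * transverse * axial


k = (2.0, 1.0, 0.5)            # example wave vector in rad/m
d = 0.1                        # equidistant step size in m
e0 = edge_voltage_x(1.0, k, (0.0, 0.0, 0.0), d)   # reference edge at n_0
Tx, Ty, Tz = np.exp(-1j * np.array(k) * d)
print(np.isclose(edge_voltage_x(1.0, k, (d, 0, 0), d) / e0, Tx))
print(np.isclose(edge_voltage_x(1.0, k, (0, d, 0), d) / e0, Ty))
print(np.isclose(edge_voltage_x(1.0, k, (0, 0, d), d) / e0, Tz))
```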

Grid Dispersion Equation

Regard Faraday's induction law on a grid face (edge voltages and face flux labelled as in the sketch). We have

$$e_1 = \hat{E}_y,\qquad e_2 = \hat{E}_z\,T_y,\qquad e_3 = \hat{E}_y\,T_z,\qquad e_4 = \hat{E}_z,\qquad b_1 = \hat{B}_x$$

$$-j\omega\,b_1 = e_1 - e_3 + e_2 - e_4 = e_1\,(1 - T_z) + e_4\,(-1 + T_y)$$

and

$$-j\omega\,\hat{B}_x = \hat{E}_y\,(1 - T_z) + \hat{E}_z\,(-1 + T_y)$$

for the wave amplitudes.


In the next step we compute the time derivative of the magnetic flux component according to Faraday's induction law for the corresponding grid face. As we study the frequency domain, the time derivative is given by multiplication by $j\omega$.

With the notation of the sketch we get the relations shown here.

Grid Dispersion Equation

Discrete induction law:

$$\underbrace{\begin{pmatrix} 0 & -(T_z - 1) & T_y - 1\\ T_z - 1 & 0 & -(T_x - 1)\\ -(T_y - 1) & T_x - 1 & 0 \end{pmatrix}}_{\mathbf{C}}\begin{pmatrix}\hat{E}_x\\ \hat{E}_y\\ \hat{E}_z\end{pmatrix} = -j\omega\begin{pmatrix}\hat{B}_x\\ \hat{B}_y\\ \hat{B}_z\end{pmatrix}$$

Homogeneous material, $\Delta x = \Delta y = \Delta z$:

$$\begin{pmatrix}\hat{H}_x\\ \hat{H}_y\\ \hat{H}_z\end{pmatrix} = \underbrace{\begin{pmatrix}\frac{\Delta x}{\mu\,\Delta y\,\Delta z} & 0 & 0\\ 0 & \frac{\Delta y}{\mu\,\Delta z\,\Delta x} & 0\\ 0 & 0 & \frac{\Delta z}{\mu\,\Delta x\,\Delta y}\end{pmatrix}}_{\mathbf{M}_\mu^{-1}}\begin{pmatrix}\hat{B}_x\\ \hat{B}_y\\ \hat{B}_z\end{pmatrix}$$

Thus, for all three spatial directions, we obtain a mapping of the discrete induction law to the 3 × 3 system of equations displayed above.

With the magnetic flux components determined, we can next use the material equations to determine the wave amplitudes of the magnetic grid voltages. The discrete relation for the simple case of a homogeneous equidistant grid is given here.
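The 3 × 3 curl matrix acts like a cross product with the vector $\mathbf{a} = (T_x - 1, T_y - 1, T_z - 1)^T$, which for small steps approximates $-j k_u \Delta u$ componentwise. The sketch checks this, shows that an amplitude vector parallel to $\mathbf{a}$ (the discrete static solution) has no curl, and then applies $\mathbf{M}_\mu^{-1}$. The wave vector, step sizes, $\omega$ and $\mu$ are arbitrary example values, and `local_curl` is a hypothetical name.

```python
import numpy as np


def local_curl(T):
    """Primal 3x3 curl matrix C acting on (E_x, E_y, E_z) (hypothetical helper)."""
    Tx, Ty, Tz = T
    return np.array([[0, -(Tz - 1), Ty - 1],
                     [Tz - 1, 0, -(Tx - 1)],
                     [-(Ty - 1), Tx - 1, 0]], dtype=complex)


k = np.array([2.0, 1.0, 0.5])          # example wave vector in rad/m
d = np.array([0.1, 0.1, 0.1])          # equidistant step sizes in m
T = np.exp(-1j * k * d)                # phase factors T_x, T_y, T_z
a = T - 1                              # approx. -j k_u * step for small k_u * step
C = local_curl(T)

rng = np.random.default_rng(6)
e_hat = rng.standard_normal(3) + 1j * rng.standard_normal(3)
print(np.allclose(C @ e_hat, np.cross(a, e_hat)))   # C e = a x e
print(np.allclose(C @ a, 0))                         # e parallel to a: curl-free (static)

# magnetic amplitudes for homogeneous material (example values omega = mu = 1)
omega, mu = 1.0, 1.0
areas = np.array([d[1] * d[2], d[2] * d[0], d[0] * d[1]])
Mmu_inv = np.diag(d / (mu * areas))    # diag(dx/(mu dy dz), dy/(mu dz dx), dz/(mu dx dy))
b_hat = C @ e_hat / (-1j * omega)      # from C e = -j omega b
h_hat = Mmu_inv @ b_hat
```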

Grid Dispersion Equation

Regard Ampère's law on the dual face carrying $\hat{D}_z$ (edge amplitudes $\hat{H}_x$, $\hat{H}_x T_y^{-1}$, $\hat{H}_y$, $\hat{H}_y T_x^{-1}$ as in the sketch):

$$j\omega\,\hat{D}_z = -\left(1 - T_y^{-1}\right)\hat{H}_x + \left(1 - T_x^{-1}\right)\hat{H}_y$$

Discrete law:

$$\underbrace{\begin{pmatrix} 0 & -\left(1 - T_z^{-1}\right) & 1 - T_y^{-1}\\ 1 - T_z^{-1} & 0 & -\left(1 - T_x^{-1}\right)\\ -\left(1 - T_y^{-1}\right) & 1 - T_x^{-1} & 0 \end{pmatrix}}_{\tilde{\mathbf{C}}}\begin{pmatrix}\hat{H}_x\\ \hat{H}_y\\ \hat{H}_z\end{pmatrix} = j\omega\begin{pmatrix}\hat{D}_x\\ \hat{D}_y\\ \hat{D}_z\end{pmatrix}$$

Next, we apply the discrete Ampère's law to the corresponding dual face as shown in the sketch. The inverse phase factors in the resulting equation simply represent a displacement in the negative coordinate direction.

Again, we can summarize the equations for all three spatial directions, obtaining the system of equations given here.

As we can easily verify, the system matrices ("curl operators") again fulfil the relation that the operator on the dual grid equals the Hermitian of the one on the primary grid, $\tilde{\mathbf{C}} = \mathbf{C}^H$ (Hermitian matrix = conjugate transpose). This holds because for real wave numbers $|T_u| = 1$, so that $T_u^{-1} = T_u^{*}$.
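The Hermitian relation $\tilde{\mathbf{C}} = \mathbf{C}^H$ relies on $T_u^{-1} = T_u^{*}$, i.e. on real wave numbers. The sketch (hypothetical helper names, example values) confirms it for a real wave vector and shows that it fails for a complex, i.e. evanescent, $k_x$.

```python
import numpy as np


def local_curl(T):
    """Primal 3x3 curl matrix C for the wave amplitudes (hypothetical helper)."""
    Tx, Ty, Tz = T
    return np.array([[0, -(Tz - 1), Ty - 1],
                     [Tz - 1, 0, -(Tx - 1)],
                     [-(Ty - 1), Tx - 1, 0]], dtype=complex)


def local_dual_curl(T):
    """Dual 3x3 curl matrix C~ with inverse phase factors (hypothetical helper)."""
    Tx, Ty, Tz = T
    return np.array([[0, -(1 - 1 / Tz), 1 - 1 / Ty],
                     [1 - 1 / Tz, 0, -(1 - 1 / Tx)],
                     [-(1 - 1 / Ty), 1 - 1 / Tx, 0]], dtype=complex)


d = 0.1
T_real = np.exp(-1j * np.array([2.0, 1.0, 0.5]) * d)          # real k: |T_u| = 1
T_cplx = np.exp(-1j * np.array([2.0 + 0.5j, 1.0, 0.5]) * d)   # complex k_x: |T_x| != 1
print(np.allclose(local_dual_curl(T_real), local_curl(T_real).conj().T))   # True
print(np.allclose(local_dual_curl(T_cplx), local_curl(T_cplx).conj().T))   # False
```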

Grid Dispersion Equation

Electric material matrix:

$$\begin{pmatrix}\hat{E}_x\\ \hat{E}_y\\ \hat{E}_z\end{pmatrix} = \underbrace{\begin{pmatrix}\frac{\Delta x}{\varepsilon\,\Delta y\,\Delta z} & 0 & 0\\ 0 & \frac{\Delta y}{\varepsilon\,\Delta z\,\Delta x} & 0\\ 0 & 0 & \frac{\Delta z}{\varepsilon\,\Delta x\,\Delta y}\end{pmatrix}}_{\mathbf{M}_\varepsilon^{-1}}\begin{pmatrix}\hat{D}_x\\ \hat{D}_y\\ \hat{D}_z\end{pmatrix}$$

Amplitude vector:

$$\hat{\mathbf{e}} = \begin{pmatrix}\hat{E}_x\\ \hat{E}_y\\ \hat{E}_z\end{pmatrix}$$


Finally, the electric material matrix is applied in order to transform the electric grid fluxes into grid voltages.

Thus, all equations have now been transformed to the three-dimensional space of wave amplitudes.

Grid Dispersion Equation

The local Curl-Curl-wave equation

$$\mathbf{M}_\varepsilon^{-1}\mathbf{C}^H\mathbf{M}_\mu^{-1}\mathbf{C}\,\hat{\mathbf{e}} \overset{!}{=} \omega^2\,\hat{\mathbf{e}}$$

has to hold for $\hat{\mathbf{e}}$.

Using the relation $e^{jx} = \cos(x) + j\sin(x)$, the lengthy solution finally yields

$\lambda_1 = 0$ (the static solution),

$$\lambda_2 = \lambda_3 = c^2\left[\left(\frac{\sin\left(\frac{k_x\Delta x}{2}\right)}{\frac{\Delta x}{2}}\right)^2 + \left(\frac{\sin\left(\frac{k_y\Delta y}{2}\right)}{\frac{\Delta y}{2}}\right)^2 + \left(\frac{\sin\left(\frac{k_z\Delta z}{2}\right)}{\frac{\Delta z}{2}}\right)^2\right]$$

By sequentially applying the equations derived previously, a condition can be formulated under which the discrete plane wave fulfils the Maxwell-Grid-Equations as demanded before (thus justifying the approach used here): the electric amplitude vector has to fulfil the local Curl-Curl-wave equation.

This is an algebraic 3 × 3 eigenvalue equation for the electric amplitude vector with eigenvalue $\lambda = \omega^2$. After some lengthy calculation, making use of $e^{jx} = \cos(x) + j\sin(x)$ and thus $|T_u - 1|^2 = 2 - 2\cos(k_u\Delta u) = 4\sin^2(k_u\Delta u/2)$, one finds one eigenvalue $\lambda_1 = 0$ and a double eigenvalue $\lambda_2 = \lambda_3$ as given above. The solution $\lambda = \omega^2 = 0$ is the static solution; it is not considered here. The two solutions $\lambda_{2,3} \neq 0$ describe the discrete waves we are searching for. The corresponding two-dimensional eigenspace represents the two polarisation directions of the plane wave.
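The closed-form eigenvalues can be cross-checked by assembling the local 3 × 3 curl-curl matrix and calling `numpy.linalg.eigvals`. The sketch assumes normalized units ($\varepsilon = \mu = c = 1$) and example values for $\mathbf{k}$ and the step sizes; `local_curl`, `local_curl_curl` and `grid_dispersion_lambda` are hypothetical names.

```python
import numpy as np


def local_curl(T):
    """Primal 3x3 curl matrix C acting on the wave amplitudes."""
    Tx, Ty, Tz = T
    return np.array([[0, -(Tz - 1), Ty - 1],
                     [Tz - 1, 0, -(Tx - 1)],
                     [-(Ty - 1), Tx - 1, 0]], dtype=complex)


def local_curl_curl(k, d, eps=1.0, mu=1.0):
    """Local matrix M_eps^{-1} C^H M_mu^{-1} C for wave vector k and steps d."""
    C = local_curl(np.exp(-1j * k * d))
    dx, dy, dz = d
    areas = np.array([dy * dz, dz * dx, dx * dy])
    Mmu_inv = np.diag(d / (mu * areas))
    Meps_inv = np.diag(d / (eps * areas))
    return Meps_inv @ C.conj().T @ Mmu_inv @ C


def grid_dispersion_lambda(k, d, c=1.0):
    """Closed-form double eigenvalue lambda_2 = lambda_3."""
    return c**2 * np.sum((np.sin(k * d / 2) / (d / 2))**2)


k = np.array([3.0, 2.0, 1.0])          # example wave vector, normalized units
d = np.array([0.1, 0.1, 0.1])          # equidistant grid as in the derivation
lam = np.sort(np.linalg.eigvals(local_curl_curl(k, d)).real)
print(lam, grid_dispersion_lambda(k, d))   # one zero, then the double eigenvalue
```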

Grid Dispersion Equation

The grid dispersion equation

$$\left(\frac{\sin\left(\frac{k_x\Delta x}{2}\right)}{\frac{\Delta x}{2}}\right)^2 + \left(\frac{\sin\left(\frac{k_y\Delta y}{2}\right)}{\frac{\Delta y}{2}}\right)^2 + \left(\frac{\sin\left(\frac{k_z\Delta z}{2}\right)}{\frac{\Delta z}{2}}\right)^2 = \left(\frac{\omega}{c}\right)^2$$

is the condition for the existence of the discrete waves belonging to the eigenvalues $\lambda_{2,3} \neq 0$.


Finally, the condition for the existence of these waves is given by the so-called grid dispersion equation. It connects the properties of the spatial discretization on the left-hand side with the second time derivative of the wave equation, which arises as the factor $-\omega^2$ in the frequency domain (the negative sign is compensated by that in Faraday's induction law).

The grid dispersion equation determines how plane waves propagate on the computational FIT grid. For example, we can see that the propagation depends on the step size as well as on the propagation direction. Most importantly, the grid dispersion equation tends to the analytic dispersion equation in the limit $\Delta x, \Delta y, \Delta z \to 0$, since $\sin(x)/x \to 1$ for $x \to 0$. This is further evidence for the convergence of FIT.

Please note that we used some relevant assumptions on the grid for the derivation above: an equidistant discretization in all spatial directions, homogeneous material and no boundary conditions. Nevertheless, the grid dispersion equation is of great importance for the analysis of FIT's properties, as will become more obvious in the following chapters.
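The direction and step-size dependence can be quantified via the numerical phase velocity $\omega/|\mathbf{k}|$ obtained from the grid dispersion equation. In this sketch (normalized units with $c = 1$, equidistant steps and $|\mathbf{k}| = 1$ as assumptions; function names hypothetical) the relative error is negative, i.e. waves on the grid are too slow. It is largest along a coordinate axis, smaller along the space diagonal, and shrinks by a factor of about 4 when the step size is halved, i.e. it is of second order.

```python
import numpy as np


def grid_omega(k, d, c=1.0):
    """omega from the grid dispersion equation for wave vector k and step sizes d."""
    return c * np.sqrt(np.sum((np.sin(k * d / 2) / (d / 2))**2))


def phase_velocity_error(direction, k_abs, step, c=1.0):
    """Relative error of the grid phase velocity omega/|k| versus c (equidistant grid)."""
    k = k_abs * np.asarray(direction, dtype=float) / np.linalg.norm(direction)
    d = np.full(3, step)
    return grid_omega(k, d, c) / (c * k_abs) - 1.0


for step in (0.1, 0.05, 0.025):
    err_axis = phase_velocity_error((1, 0, 0), 1.0, step)
    err_diag = phase_velocity_error((1, 1, 1), 1.0, step)
    print(f"step={step}: axis {err_axis:.3e}, diagonal {err_diag:.3e}")
```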

Solution of the Eigenvalue Equation

Loss-free (real ε, σ = 0):

$$\mathbf{M}_\varepsilon^{-1}\tilde{\mathbf{C}}\mathbf{M}_\mu^{-1}\mathbf{C}\,\mathbf{e} = \omega^2\,\mathbf{e}$$

Eliminate the $N_P$ solutions with $\lambda_i = 0$:

• dynamic modes with $\mathrm{div}(\varepsilon\mathbf{E}) = 0$:

$$\mathrm{grad}\,\mathrm{div}\,\mathbf{D} = 0 \quad\Leftrightarrow\quad -\tilde{\mathbf{S}}^T\tilde{\mathbf{S}}\mathbf{M}_\varepsilon\mathbf{e} = 0$$

• transformation $\mathbf{e}' = \mathbf{M}_\varepsilon^{1/2}\mathbf{e}$:

$$-\mathbf{M}_\varepsilon^{1/2}\tilde{\mathbf{S}}^T\tilde{\mathbf{S}}\mathbf{M}_\varepsilon^{1/2}\,\mathbf{e}' = 0$$

$$\left(\mathbf{M}_\varepsilon^{-1/2}\tilde{\mathbf{C}}\mathbf{M}_\mu^{-1}\mathbf{C}\mathbf{M}_\varepsilon^{-1/2} + \mathbf{M}_\varepsilon^{1/2}\tilde{\mathbf{S}}^T\tilde{\mathbf{S}}\mathbf{M}_\varepsilon^{1/2}\right)\mathbf{e}' = \omega^2\,\mathbf{e}'$$

$$\varepsilon^{-1}\mu^{-1}\left(\mathrm{curl}\,\mathrm{curl} - \mathrm{grad}\,\mathrm{div}\right)\varepsilon\mathbf{E} = \omega^2\,\mathbf{E}$$

"Nabla-Squared Eigenvalue Eq." (curl curl − grad div = −∇²)


The eigenvalue equation of FIT for time-harmonic fields in closed structures (resonators) was formulated in the previous chapter. For the loss-free case (real material coefficients and vanishing conductivity) it is repeated here.

Usually, the dynamic modes with the smallest non-zero eigenvalues $\lambda = \omega^2 \neq 0$ are of technical interest. As found previously, in this region the spectrum of the system matrix is dominated by the highly degenerate eigenvalue $\lambda = 0$. Since this situation poses great numerical difficulties for most eigenvalue solvers, we derive an alternative formulation in the sequel.

Solution methods which search for the smallest eigenvalues and the corresponding eigenvectors of a matrix would first compute all eigenvalues with $\omega^2 = 0$, and only then could they compute the fundamental mode as the smallest non-zero eigenvalue.

Therefore, our goal is to eliminate the $N_P$-fold eigenvalue zero of the matrix $\mathbf{A}_{CC}$.

For all dynamic modes searched for we have $\mathrm{div}(\varepsilon\mathbf{E}) = 0$ and the corresponding discrete equation in grid space. Now we multiply the discrete equation from the left by the discrete gradient operator (in the continuous case, we take the gradient of the left-hand side).

Replacing the electric grid voltage by its transformed form, we obtain a symmetric form of the last equation.

Now we subtract this equation from the Curl-Curl-eigenvalue equation. The resulting equation is given in the original and in its symmetric form; the continuous form is given as well.
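The effect of adding the grad-div term can be seen by counting zero eigenvalues before and after. The sketch assumes a periodic $4 \times 4 \times 4$ FIT-like grid with unit step size and random positive materials (`fit_periodic_operators` is a hypothetical helper). The curl-curl matrix has $N_P + 2 = 66$ zero eigenvalues, while the Nabla-squared matrix keeps only 3: the constant fields of the periodic grid, which are curl- and divergence-free, much like the multi-conductor modes.

```python
import numpy as np


def fit_periodic_operators(n):
    """Hypothetical helper: primal curl C and dual divergence S~ on an
    n x n x n periodic grid with unit step size."""
    I = np.eye(n)
    D = np.roll(I, 1, axis=1) - I          # cyclic forward difference
    Px = np.kron(I, np.kron(I, D))
    Py = np.kron(I, np.kron(D, I))
    Pz = np.kron(D, np.kron(I, I))
    Z = np.zeros_like(Px)
    C = np.block([[Z, -Pz, Py], [Pz, Z, -Px], [-Py, Px, Z]])
    S_dual = -np.hstack([Px.T, Py.T, Pz.T])
    return C, S_dual


def count_zero(A):
    """Number of (numerically) zero eigenvalues of a symmetric PSD matrix."""
    lam = np.linalg.eigvalsh(A)
    return int(np.sum(np.abs(lam) < 1e-8 * lam.max()))


n = 4
N_P = n**3
C, S_dual = fit_periodic_operators(n)
rng = np.random.default_rng(7)
eps = rng.uniform(1.0, 4.0, 3 * N_P)
mu = rng.uniform(1.0, 2.0, 3 * N_P)
s = np.sqrt(eps)

A_sym = ((C.T / mu) @ C) / s[:, None] / s[None, :]          # curl-curl part A'
A_grad_div = s[:, None] * (S_dual.T @ S_dual) * s[None, :]  # M^1/2 S~^T S~ M^1/2
A_nabla = A_sym + A_grad_div                                  # Nabla-squared matrix
print(count_zero(A_sym), count_zero(A_nabla))                 # 66 3
```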

Solution of the Eigenvalue Equation

"Ghost modes" in an inhomogeneous waveguide


Because of the vector identity curl curl − grad div = −∇², this equation is also called the Nabla-Squared-Eigenvalue equation. Only the dynamic modes searched for fulfil this equation, but not the static fields (with div D ≠ 0).

Since the number of eigensolutions (the dimension of the matrix) is unchanged, we next study which solutions replace the static solutions.

One can show that, again, we obtain two types of solutions:

1. Eigensolutions with $\omega^2 > 0$, $\mathrm{div}\,\mathbf{D} = 0$ and $\mathrm{curl}\,\mathbf{E} \neq 0$: These are the modes searched for. (Here, we also count the modes in multiple-conductor systems, for which $\omega^2 = 0$, $\mathrm{div}\,\mathbf{D} = 0$ and $\mathrm{curl}\,\mathbf{E} = 0$ still hold.)

2. Eigensolutions with $\omega^2 > 0$, $\mathrm{div}\,\mathbf{D} \neq 0$ and $\mathrm{curl}\,\mathbf{E} = 0$: These modes obey the equation $-\mathrm{grad}\,\mathrm{div}\,\mathbf{E} = \omega^2\varepsilon\mu\,\mathbf{E}$ and are therefore not physically meaningful solutions.

The elimination of the static solutions thus leads to the appearance of unphysical solutions, the so-called "ghost modes". They always appear when we solve the Curl-Curl-eigenvalue equation in the latter form. After solving the eigenvalue problem we therefore need to distinguish the ghost modes from the physical dynamic modes.

Solution of the Eigenvalue Equation (cont'd)

"Ghost modes" in an inhomogeneous waveguide


Numerical approaches and discretization methods which cannot fulfil the relation div curl = 0 on the grid space have no straightforward way to distinguish the two solution types. They often apply the so-called penalty method, in which the grad div term in the eigenvalue equation is weighted by some factor $p$ (penalty factor). After solving the problem several times with different values of $p$, the correct (physical) eigensolutions can be identified because they do not show any dependence on $p$.

With FIT this effort of repeatedly solving the eigenvalue problem is not necessary: since the product of the divergence and curl operators vanishes, it is sufficient to apply the dual divergence operator to the electric grid flux in order to distinguish the two types of solutions.
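Both ways of separating the two solution types can be tried on a small example. The sketch assumes a periodic $4 \times 4 \times 4$ grid with unit step size and homogeneous unit materials (not the inhomogeneous waveguide of the slides) and weights the grad-div term by a penalty factor $p$. Applying $\tilde{\mathbf{S}}$ (and $\mathbf{C}$) to each eigenvector classifies the modes in a single solve; solving twice with two values of $p$ shows that the physical eigenvalues do not move while the ghost eigenvalues scale with $p$. The two penalty values are arbitrary choices that keep ghost and physical eigenvalues apart on this grid, and the helper names are hypothetical.

```python
import numpy as np


def fit_periodic_operators(n):
    """Hypothetical helper: primal curl C and dual divergence S~ on an
    n x n x n periodic grid with unit step size."""
    I = np.eye(n)
    D = np.roll(I, 1, axis=1) - I          # cyclic forward difference
    Px = np.kron(I, np.kron(I, D))
    Py = np.kron(I, np.kron(D, I))
    Pz = np.kron(D, np.kron(I, I))
    Z = np.zeros_like(Px)
    C = np.block([[Z, -Pz, Py], [Pz, Z, -Px], [-Py, Px, Z]])
    S_dual = -np.hstack([Px.T, Py.T, Pz.T])
    return C, S_dual


n = 4
N_P = n**3
C, S_dual = fit_periodic_operators(n)


def classify(p, tol=1e-8):
    """Solve (C^T C + p S~^T S~) e = lambda e (unit materials) and sort the
    eigenvectors by applying C and S~ to them."""
    lam, V = np.linalg.eigh(C.T @ C + p * (S_dual.T @ S_dual))
    curl_norm = np.linalg.norm(C @ V, axis=0)
    div_norm = np.linalg.norm(S_dual @ V, axis=0)
    zero = lam < tol
    physical = ~zero & (div_norm < tol)     # div D = 0, curl E != 0
    ghost = ~zero & (curl_norm < tol)       # curl E = 0, div D != 0
    return lam, zero, physical, ghost


p1, p2 = 1.37, 2.11                          # arbitrary penalty factors
lam1, zero1, phys1, ghost1 = classify(p1)
lam2, zero2, phys2, ghost2 = classify(p2)
print(zero1.sum(), phys1.sum(), ghost1.sum())             # 3 126 63
print(np.allclose(lam1[phys1], lam2[phys2]))              # physical modes ignore p
print(np.allclose(lam2[ghost2], lam1[ghost1] * p2 / p1))  # ghosts scale with p
```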

Memory Usage

Example: mesh points $N_P = 10^6$ (double precision: 8 bytes/number)

• FIT: 39 bands of $N_P$ entries (21 have to be stored, symmetry) ⇒ 170 MB (+ some additional vectors)

• no band structure: fill-in entries by solver (still symmetric!) ⇒ $0.5\,(3N_P)^2 \cdot 8\ \text{Byte} = 3.6 \cdot 10^{13}$ Byte


Our eigenvalue problem has the dimension $N = 3N_P$ and thus also $N$ eigensolutions. The number $N_P$ of grid points may be very large in some applications (up to a few million), so that a complete solution is generally hardly possible.

In the following, we describe an iterative method for the solution of such eigenvalue problems. In the practically relevant case where only a few (10 to 100) eigenvalues are searched for, this method provides good results with acceptable effort.

All methods described here exploit the fact that the system matrix is very large but sparse: in the iterative solution of the eigenvalue problem only so-called "matrix-vector operations" are used, for which the matrix pattern can be exploited directly for an efficient implementation. In general, methods which require a decomposition of the matrix should not be applied, since they destroy the band structure.

As an example, let us assume a computational grid of $N_P = 10^6$ grid points. According to the previously found structure, the Curl-Curl system matrix has 39 bands of length $N_P$, of which only 21 have to be stored because of symmetry. For a double-precision calculation we need 8 bytes per floating-point number, which yields a storage requirement of $21 \cdot 10^6 \cdot 8$ Byte ≈ 170 MB. Additionally, we need to store some auxiliary vectors (depending on the solution method). This is easily affordable on modern PCs or workstations.

If, however, we lost the band structure and had to store the complete matrix (because of fill-ins produced by the solution method), we would end up with $0.5 \cdot (3N_P)^2 = 4.5 \cdot 10^{12}$ numbers or $3.6 \cdot 10^{13}$ Byte (still for a symmetric matrix!).
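The storage estimate is simple arithmetic; this sketch reproduces it, assuming 8-byte doubles and, as in the text, $0.5\,(3N_P)^2$ entries for the dense symmetric case (the exact count would be $3N_P(3N_P + 1)/2$). The function names are hypothetical.

```python
def banded_storage_bytes(n_points, stored_bands=21, bytes_per_number=8):
    """Storage for the symmetric band matrix: 21 of 39 bands of length N_P."""
    return stored_bands * n_points * bytes_per_number


def dense_symmetric_storage_bytes(n_points, bytes_per_number=8):
    """Approximate storage of a full symmetric matrix of dimension 3 N_P,
    counted as 0.5 * (3 N_P)^2 entries as in the text."""
    dim = 3 * n_points
    return dim * dim * bytes_per_number // 2


N_P = 10**6
print(banded_storage_bytes(N_P) / 1e6, "MB")              # 168.0 MB, i.e. about 170 MB
print(dense_symmetric_storage_bytes(N_P) / 1e12, "TB")    # 36.0 TB = 3.6e13 Byte
```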

von Mises-Iteration

$\mathbf{A}$ is real symmetric: $\mathbf{A}\mathbf{x} = \lambda\mathbf{x}$, $\dim(\mathbf{A}) = N$, $|\lambda_1| > |\lambda_2| \ge |\lambda_3| \ge \dots \ge |\lambda_N|$

$\mathbf{x}^{(0)}$ filled with random numbers, expressed as a linear combination of the eigenvectors $\mathbf{e}_i$:

$$\mathbf{x}^{(0)} = \sum_{i=1}^{N}\alpha_i\,\mathbf{e}_i$$

The iteration scheme $\mathbf{x}^{(\nu)} = \mathbf{A}\,\mathbf{x}^{(\nu-1)}$ leads to:

$$\mathbf{x}^{(\nu)} = \sum_{i=1}^{N}\alpha_i\lambda_i^{\nu}\,\mathbf{e}_i = \lambda_1^{\nu}\left[\alpha_1\mathbf{e}_1 + \underbrace{\sum_{i=2}^{N}\left(\frac{\lambda_i}{\lambda_1}\right)^{\nu}\alpha_i\,\mathbf{e}_i}_{\to\,0 \text{ for } \nu\to\infty}\right]$$


As an introduction, we study the von Mises method, also called power iteration, which determines one specific solution of an eigenvalue problem of the form

$Ax = \lambda x, \quad \dim(A) = N.$

We assume that the matrix $A$ is real symmetric with eigenvalues

$|\lambda_1| > |\lambda_2| \ge |\lambda_3| \ge \dots \ge |\lambda_N|.$

We search for the (uniquely defined) eigenvalue $\lambda_1$ with the largest absolute value and the corresponding eigenvector $e_1$.

We start with an initial vector $x^{(0)}$ filled with random numbers. Since the eigenvectors of a real symmetric matrix form a complete orthogonal system, $x^{(0)}$ can be represented as a linear combination of the eigenvectors $e_1, \dots, e_N$ as displayed above.

Now we use the iteration scheme

$x^{(\nu)} = A x^{(\nu-1)}$

and obtain the expression given above.

Since $|\lambda_i / \lambda_1| < 1$ for $i \ge 2$, the iteration converges (in direction) to the eigenvector $e_1$ belonging to the eigenvalue $\lambda_1$ with the largest absolute value, provided that $\alpha_1 \neq 0$. The unwanted components decay like $|\lambda_2 / \lambda_1|^{\nu}$.
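The expansion above can be observed directly with a few unnormalized iteration steps. In this sketch, $A$ is a made-up diagonal matrix, chosen so that the eigenvectors $e_i$ are the unit vectors and the coefficients $\alpha_i$ can be read off from the entries; the name `power_steps` is hypothetical.

```python
def power_steps(A, x, steps):
    """Apply x <- A x `steps` times without normalization (plain von Mises iteration)."""
    for _ in range(steps):
        x = [sum(a * xj for a, xj in zip(row, x)) for row in A]
    return x


# Diagonal A: the eigenvectors e_i are the unit vectors, so x^(0) = (alpha_1, alpha_2, alpha_3)
A = [[4.0, 0.0, 0.0],
     [0.0, 2.0, 0.0],
     [0.0, 0.0, 1.0]]        # lambda_1 = 4, lambda_2 = 2, lambda_3 = 1
x0 = [1.0, 1.0, 1.0]         # alpha_i = 1

x10 = power_steps(A, x0, 10)
print(x10)                   # [1048576.0, 1024.0, 1.0], i.e. alpha_i * lambda_i**10
print(x10[1] / x10[0])       # 0.0009765625 = (lambda_2 / lambda_1)**10
```

The relative weight of $e_2$ shrinks like $(\lambda_2/\lambda_1)^{\nu}$, while the entries themselves grow like $\lambda_1^{\nu}$, which is what motivates normalizing the iterate.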

Eigenvalue Solver

Rayleigh quotient:

$$R\big(x^{(\nu)}\big) = \frac{x^{(\nu)} \cdot A x^{(\nu)}}{x^{(\nu)} \cdot x^{(\nu)}} = \lambda_1 \left( 1 + O\!\left( \left( \frac{\lambda_2}{\lambda_1} \right)^{2\nu} \right) \right), \qquad \lambda_1 = \lim_{\nu \to \infty} R\big(x^{(\nu)}\big)$$

Normalization during iteration:

$$x^{(\nu)} = A x^{(\nu-1)}, \qquad x^{(\nu)} = \frac{x^{(\nu)}}{\big\| x^{(\nu)} \big\|}$$


A very good estimate of this eigenvalue is obtained from the Rayleigh quotient given above.

To prevent the components belonging to eigenvalues with $|\lambda_i| > 1$ from growing rapidly during the iteration (and those with $|\lambda_i| < 1$ from vanishing), the vector is normalized after each step of the von Mises iteration.

The vector norm used for the normalization is arbitrary, since rescaling $x^{(\nu)}$ changes neither its direction nor the Rayleigh quotient.
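A small numerical check of both statements, under the assumption of the test matrix $A = \begin{pmatrix} 2 & 1 \\ 1 & 2 \end{pmatrix}$ with $\lambda_1 = 3$, $\lambda_2 = 1$ and start vector $x^{(0)} = (1, 0)$. Since $x^{(0)} = (e_1 + e_2)/\sqrt{2}$ with $e_{1,2} = (1, \pm 1)/\sqrt{2}$, one finds $x^{(\nu)} \propto 3^{\nu} e_1 + e_2$ and therefore $\lambda_1 - R(x^{(\nu)}) = 2/(9^{\nu} + 1)$, i.e. the error decays like $(\lambda_2/\lambda_1)^{2\nu}$. The sketch normalizes once with the maximum norm and once with the Euclidean norm; all function names are hypothetical.

```python
import math


def matvec(A, x):
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def rayleigh(A, x):
    """Rayleigh quotient R(x) = (x . A x) / (x . x)."""
    return sum(xi * yi for xi, yi in zip(x, matvec(A, x))) / sum(xi * xi for xi in x)


def max_norm(v):
    return max(abs(c) for c in v)


def euclidean_norm(v):
    return math.sqrt(sum(c * c for c in v))


def rayleigh_errors(A, x, lam1, steps, norm):
    """Normalized von Mises steps; returns |lambda_1 - R(x^(nu))| for nu = 1..steps."""
    errors = []
    for _ in range(steps):
        x = matvec(A, x)
        size = norm(x)
        x = [c / size for c in x]          # normalization with an arbitrary norm
        errors.append(abs(lam1 - rayleigh(A, x)))
    return errors


A = [[2.0, 1.0], [1.0, 2.0]]               # lambda_1 = 3, lambda_2 = 1
e_max = rayleigh_errors(A, [1.0, 0.0], 3.0, 6, max_norm)
e_two = rayleigh_errors(A, [1.0, 0.0], 3.0, 6, euclidean_norm)
predicted = [2.0 / (9**nu + 1) for nu in range(1, 7)]   # exact error for this start vector
for row in zip(e_max, e_two, predicted):
    print(row)
```

Both norms give the same errors, and the eigenvalue error ($\approx 9^{-\nu}$) shrinks much faster than the eigenvector error ($\approx 3^{-\nu}$).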

Iteration Scheme

1. Choose a randomly filled vector $x^{(0)}$.
2. For $\nu = 1, 2, 3, \dots$ build $x^{(\nu)} = A x^{(\nu-1)}$, $\; x^{(\nu)} = x^{(\nu)} / \| x^{(\nu)} \|$.
3. Build the Rayleigh quotient $\lambda_1 \approx R\big(x^{(\nu)}\big)$.
4. Repeat steps 2 and 3 until the desired accuracy is reached.


The complete iteration is given above.
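The four steps can be put together as a minimal Python sketch for a small dense symmetric matrix stored as a list of rows. The stopping test (relative change of the Rayleigh quotient below `tol`), the fixed random seed and the name `von_mises` are assumptions; for a FIT matrix, the dense product would be replaced by a sparse (band) matrix-vector product.

```python
import math
import random


def von_mises(A, tol=1e-10, max_iter=1000, seed=0):
    """Normalized von Mises (power) iteration for a real symmetric matrix.

    A is a list of rows. Returns (lam, x): the Rayleigh-quotient estimate of the
    eigenvalue of largest absolute value and the corresponding unit-length iterate.
    """
    n = len(A)
    rng = random.Random(seed)
    x = [rng.uniform(-1.0, 1.0) for _ in range(n)]              # step 1: random start
    y = [sum(a * xj for a, xj in zip(row, x)) for row in A]      # y = A x^(0)
    lam_old = None
    for _ in range(max_iter):
        norm = math.sqrt(sum(c * c for c in y))
        x = [c / norm for c in y]                                # step 2: x = A x, normalized
        y = [sum(a * xj for a, xj in zip(row, x)) for row in A]  # A x, reused in the next step
        lam = sum(xi * yi for xi, yi in zip(x, y))               # step 3: R(x), since x . x = 1
        if lam_old is not None and abs(lam - lam_old) <= tol * abs(lam):
            break                                                # step 4: accuracy reached
        lam_old = lam
    return lam, x


lam, x = von_mises([[2.0, 1.0], [1.0, 2.0]])
print(lam, x)   # lam approaches 3, x approaches +-(1, 1)/sqrt(2)
```

The product $A x^{(\nu)}$ needed for the Rayleigh quotient is also the next unnormalized iterate, so each step costs a single matrix-vector product.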

Spectral Shift

The von Mises iteration yields the eigenvector belonging to the eigenvalue of largest absolute value.

Often the eigenvector corresponding to the smallest eigenvalue is of special interest.

Define $A'$ with:

$$A'x = Ax + sx = (\lambda + s)\,x, \qquad x: \text{eigenvector of } A$$

System matrix $A' = A + sI$ with the eigenvectors $x$ of $A$, but shifted eigenvalues:

$$\lambda' = \lambda + s$$


With the von Mises iteration in the previous form, only the eigenvalue of maximal absolute value of a matrix and its corresponding eigenvector can be found. In practical applications, however, it is usually the smallest eigenvalues that are of interest (e.g., the fundamental mode of a cavity resonator).

With the help of a spectral shift, the same method can also be used to compute the smallest eigenvalues of a matrix. To do so, the iteration is carried out with the slightly modified matrix $A' = A + sI$, which has the same eigenvectors as $A$ but shifted eigenvalues $\lambda' = \lambda + s$, as the consideration above shows.

If the spectrum of $A$ is known to a good approximation, the shift $s$ can be chosen such that the sought smallest eigenvalue $\lambda_k$ turns into the eigenvalue $\lambda_k'$ of $A'$ with the largest absolute value, which can then be computed with the von Mises iteration. However, at finite computational precision this procedure may suffer from numerical cancellation in the final back-transformation $\lambda_k = \lambda_k' - s$, because two nearly equal numbers are subtracted when $|s| \gg |\lambda_k|$.

Another problem arises if the shift coincides with an eigenvalue ($s = -\lambda_i$), since the transformed matrix $A'$ then becomes singular; this is critical whenever linear systems with $A'$ have to be solved, as in the inverse iteration.
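A minimal sketch of the spectral shift for a symmetric positive semi-definite matrix. As a stand-in for "a good approximation of the spectrum", the shift is assumed to be minus a Gershgorin bound $g \ge \lambda_{\max}$, so that all shifted eigenvalues $\lambda - g$ are non-positive and $\lambda_{\min} - g$ has the largest magnitude. The 1D Laplacian test matrix is used only because its eigenvalues $2 - 2\cos(k\pi/6)$ are known in closed form; all function names are hypothetical.

```python
import math


def matvec(A, x):
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def power_iteration(A, x, tol=1e-12, max_iter=10_000):
    """Normalized von Mises iteration; returns the Rayleigh-quotient estimate."""
    y = matvec(A, x)
    lam_old = None
    for _ in range(max_iter):
        norm = math.sqrt(sum(c * c for c in y))
        x = [c / norm for c in y]
        y = matvec(A, x)
        lam = sum(a * b for a, b in zip(x, y))
        if lam_old is not None and abs(lam - lam_old) <= tol * abs(lam):
            break
        lam_old = lam
    return lam


def smallest_eigenvalue(A):
    """Smallest eigenvalue of a symmetric positive semi-definite A via a spectral shift."""
    n = len(A)
    # Assumed choice: s = -(Gershgorin bound on lambda_max). Then every shifted
    # eigenvalue lambda + s is <= 0, and lambda_min + s has the largest magnitude.
    s = -max(sum(abs(a) for a in row) for row in A)
    A_shift = [[A[i][j] + (s if i == j else 0.0) for j in range(n)] for i in range(n)]
    lam_shift = power_iteration(A_shift, [1.0] * n)
    return lam_shift - s     # back-transformation lambda_k = lambda'_k - s


# 1D Laplacian (n = 5): eigenvalues 2 - 2 cos(k pi / 6), smallest 2 - sqrt(3)
n = 5
A = [[2.0 if i == j else (-1.0 if abs(i - j) == 1 else 0.0) for j in range(n)] for i in range(n)]
print(smallest_eigenvalue(A), 2 - math.sqrt(3))
```

Convergence is governed by the ratio of the two largest shifted magnitudes, here at most $3/3.73 \approx 0.8$, so the shifted iteration is typically slower than the unshifted one; the subtraction `lam_shift - s` is where the cancellation described above occurs.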

Inverse Iteration

Iteration with $A^{-1}$:

$$Ax = \lambda x \;\Rightarrow\; A^{-1}x = \frac{1}{\lambda}\,x$$

In connection with a spectral shift, any eigenvalue may be computed:

$$A' = (A - sI)^{-1}$$


If we use the inverse $A^{-1}$ instead of $A$ in the iteration, the algorithm converges to the eigenvalue of $A$ with the smallest absolute value, because

$Ax = \lambda x \;\Rightarrow\; A^{-1}x = \frac{1}{\lambda}\,x.$

This advantage is paid for by the much higher numerical effort of the inverse iteration: either $A^{-1}$ has to be built once in advance, or a linear system has to be solved in each step.

Nevertheless, this method is often used since, in combination with a spectral shift $A' = (A - sI)^{-1}$, any eigenvalue of the spectrum can be computed: the eigenvalues of $A'$ are $1/(\lambda_i - s)$, so the iteration converges to the eigenvalue $\lambda_i$ closest to $s$.
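A minimal sketch of inverse iteration with shift, assuming a small dense matrix: each step solves $(A - sI)\,y = x$ by Gaussian elimination with partial pivoting instead of forming the inverse. Re-eliminating in every step is only for brevity; for the large sparse FIT matrices the text warns against factorizations because of fill-in, so there an iterative linear solver would be the natural choice (an assumption, not part of the original). The eigenvalue estimate is the Rayleigh quotient of $A$ itself, and the function names are hypothetical.

```python
import math


def solve(M, b):
    """Solve M y = b by Gaussian elimination with partial pivoting (small dense M)."""
    n = len(b)
    aug = [list(row) + [bi] for row, bi in zip(M, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]        # division by zero if M is singular
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    y = [0.0] * n
    for r in range(n - 1, -1, -1):
        y[r] = (aug[r][n] - sum(aug[r][c] * y[c] for c in range(r + 1, n))) / aug[r][r]
    return y


def inverse_iteration(A, s, tol=1e-12, max_iter=500):
    """Inverse iteration with shift s: converges to the eigenvalue of A closest to s."""
    n = len(A)
    M = [[A[i][j] - (s if i == j else 0.0) for j in range(n)] for i in range(n)]
    x = [1.0] * n
    lam_old = None
    for _ in range(max_iter):
        y = solve(M, x)                                   # y = (A - sI)^{-1} x, no explicit inverse
        norm = math.sqrt(sum(c * c for c in y))
        x = [c / norm for c in y]
        Ax = [sum(a * xj for a, xj in zip(row, x)) for row in A]
        lam = sum(xi * yi for xi, yi in zip(x, Ax))       # Rayleigh quotient of A (x . x = 1)
        if lam_old is not None and abs(lam - lam_old) <= tol * max(1.0, abs(lam)):
            break
        lam_old = lam
    return lam, x


n = 5
A = [[2.0 if i == j else (-1.0 if abs(i - j) == 1 else 0.0) for j in range(n)] for i in range(n)]
print(inverse_iteration(A, 1.9)[0])   # eigenvalue closest to 1.9, exact value 2
print(inverse_iteration(A, 0.0)[0])   # smallest eigenvalue, exact value 2 - sqrt(3)
```

Choosing $s$ exactly equal to an eigenvalue makes $A - sI$ singular, and `solve` then divides by zero, which is the singular case mentioned for the spectral shift.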

Sub-Space Iteration

Very large, sparse matrix $A$, $\;\dim(A) = N$, $\;\lambda \ge 0$ real

Look for basis vectors (not necessarily eigenvectors):

$$v^{(j)} = \sum_{i=1}^{p} c_i^{(j)} e_i, \qquad j = 1, \dots, p$$

Eliminate all components of unwanted eigenvectors by iteration with

$$A - r_k I \qquad (k = 1, \dots, n)$$


The sub-space iteration described in the following computes several eigenvectors simultaneously. The basic idea is to compute, during the iteration, several basis vectors which together span a subspace of the complete solution space that contains the sought eigensolutions.

Let $A$ be a very large, sparse real matrix of dimension $N \times N$ with eigenvalues $\lambda$. Further, we assume that all eigenvalues are real and non-negative (symmetric positive semi-definite matrix).

We search for the "first" $p$ eigensolutions, i.e., the eigenvectors belonging to the $p$ smallest eigenvalues (with $p = 10, \dots, 100$).

Sub-Space Iteration

The computation of the eigensolutions is done in two steps:

1. The $p$ so-called basis vectors spanning the subspace that contains the sought eigenvectors are computed.
2. Using these basis vectors, the given $N \times N$ eigenvalue problem is transformed into a smaller eigenvalue problem of dimension $p \times p$. The eigensolutions of this smaller problem yield the sought eigensolutions, and since $p$ is small, it can be solved by a direct solution method.

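The two steps can be sketched in Python under several assumptions: a constant shift $r_k = r$ equal to a Gershgorin bound on $\lambda_{\max}$ (so that multiplying by $A - rI$ amplifies the components of the smallest eigenvalues most), modified Gram-Schmidt re-orthonormalization after each multiplication to keep the basis vectors linearly independent, and a cyclic Jacobi method as the direct solver for the $p \times p$ problem. The function names are hypothetical, and the matrices are small and dense for illustration only.

```python
import math
import random


def matvec(A, x):
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(x, y):
    return sum(a * b for a, b in zip(x, y))


def orthonormalize(V):
    """Modified Gram-Schmidt; keeps the p basis vectors linearly independent."""
    Q = []
    for v in V:
        w = list(v)
        for q in Q:
            c = dot(q, w)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        norm = math.sqrt(dot(w, w))
        Q.append([wi / norm for wi in w])
    return Q


def jacobi_eigenvalues(H, sweeps=50):
    """Eigenvalues of a small symmetric matrix by cyclic Jacobi rotations (direct method)."""
    H = [list(row) for row in H]
    p = len(H)
    scale = sum(h * h for row in H for h in row)
    for _ in range(sweeps):
        off = sum(H[i][j] ** 2 for i in range(p) for j in range(p) if i != j)
        if off <= 1e-30 * scale:
            break
        for i in range(p - 1):
            for j in range(i + 1, p):
                if H[i][j] == 0.0:
                    continue
                theta = (H[j][j] - H[i][i]) / (2.0 * H[i][j])
                t = math.copysign(1.0, theta) / (abs(theta) + math.hypot(theta, 1.0))
                c = 1.0 / math.hypot(t, 1.0)
                s = t * c
                for k in range(p):  # H <- H P (columns i and j)
                    hki, hkj = H[k][i], H[k][j]
                    H[k][i], H[k][j] = c * hki - s * hkj, s * hki + c * hkj
                for k in range(p):  # H <- P^T H (rows i and j)
                    hik, hjk = H[i][k], H[j][k]
                    H[i][k], H[j][k] = c * hik - s * hjk, s * hik + c * hjk
    return sorted(H[k][k] for k in range(p))


def subspace_iteration(A, p, steps=200, seed=1):
    """Approximate the p smallest eigenvalues of a symmetric positive semi-definite A."""
    n = len(A)
    r = max(sum(abs(a) for a in row) for row in A)   # assumed constant shift r_k = r >= lambda_max
    rng = random.Random(seed)
    V = orthonormalize([[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(p)])
    for _ in range(steps):
        # Step 1: multiply every basis vector by (A - r I), then re-orthonormalize
        V = orthonormalize([[y - r * x for y, x in zip(matvec(A, v), v)] for v in V])
    # Step 2: project onto the subspace (p x p problem) and solve it directly
    AV = [matvec(A, v) for v in V]
    H = [[0.5 * (dot(V[i], AV[j]) + dot(V[j], AV[i])) for j in range(p)] for i in range(p)]
    return jacobi_eigenvalues(H)


n = 8
A = [[2.0 if i == j else (-1.0 if abs(i - j) == 1 else 0.0) for j in range(n)] for i in range(n)]
print(subspace_iteration(A, 3))
print([2 - 2 * math.cos(k * math.pi / (n + 1)) for k in range(1, 4)])
```

Both printed lists should agree to many digits; with a constant shift the filter is simply $P_n(\lambda) = (\lambda - r)^n$, which the polynomial view of the next slide generalizes.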

Sub-Space Iteration

$$v^{(0)} = \sum_{i=1}^{N} \alpha_i e_i$$

$$v^{(1)} = (A - r_1 I)\, v^{(0)} = \sum_{i=1}^{N} \alpha_i (\lambda_i - r_1)\, e_i$$

$$v^{(n)} = \prod_{k=1}^{n} (A - r_k I)\, v^{(0)} = \sum_{i=1}^{N} \alpha_i \prod_{k=1}^{n} (\lambda_i - r_k)\, e_i = \sum_{i=1}^{N} \alpha_i P_n(\lambda_i)\, e_i$$

with the polynomial of order $n$:

$$P_n(\lambda) = \prod_{k=1}^{n} (\lambda - r_k)$$


Thus we need to find a set of basis vectors, i.e., linearly independent vectors $v^{(j)}$, each of which can be represented as a linear combination of the sought eigenvectors $e_i$ ($i = 1, \dots, p$). (The vectors $v^{(j)}$ themselves need not be eigenvectors of $A$.)

Then an arbitrary vector of the subspace spanned by these $p$ basis vectors contains no component of the remaining (uninteresting) eigenvectors $e_i$ with $i = p+1, \dots, N$.

Therefore, our goal in computing the basis vectors is to eliminate all components of these undesired eigenvectors, starting from some arbitrary initial vector $v^{(0)}$.

This can be achieved by repeatedly multiplying the initial vector $v^{(0)}$ by the matrix $A - r_k I$ ($k = 1, \dots, n$). The spectral shift $r_k$ may now vary from step to step.

Analogous to the procedure in the von Mises iteration, we obtain the representation given above with a polynomial $P_n$ of order $n$, i.e., the number of iterations.
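The polynomial representation can be checked numerically. In this sketch $A$ is diagonal, so the eigenvectors $e_i$ are the unit vectors and the coefficients $\alpha_i$ are simply the entries of $v^{(0)}$; the eigenvalues, coefficients and shifts are made-up illustration values, and the function names are hypothetical. Placing the zeros $r_k$ exactly on the unwanted eigenvalues 3 and 4 removes those components completely.

```python
def apply_filter(A, v, shifts):
    """Return prod_k (A - r_k I) v using only matrix-vector products."""
    for r in shifts:
        Av = [sum(a * x for a, x in zip(row, v)) for row in A]
        v = [y - r * x for y, x in zip(Av, v)]
    return v


def filter_poly(lam, shifts):
    """P_n(lambda) = prod_k (lambda - r_k)."""
    out = 1.0
    for r in shifts:
        out *= lam - r
    return out


lams = [0.5, 1.0, 3.0, 4.0]
A = [[lams[i] if i == j else 0.0 for j in range(4)] for i in range(4)]  # e_i = unit vectors
alpha = [1.0, -2.0, 0.7, 5.0]        # v^(0) = sum_i alpha_i e_i
shifts = [3.0, 4.0]                  # zeros r_k placed on the unwanted eigenvalues

v = apply_filter(A, alpha, shifts)
expected = [a * filter_poly(l, shifts) for a, l in zip(alpha, lams)]
print(v)
print(expected)
```

Both lists agree (up to rounding): the wanted components are scaled by $P_n(0.5) = 8.75$ and $P_n(1) = 6$, the unwanted ones by $P_n(3) = P_n(4) = 0$.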

Solution of the Eigenvalue Equation

Figure: Tschebyscheff polynomial of degree n = 20, shifted and scaled (with the suppressed domain marked).


We can interpret the real values $r_k$ as the zeros of the polynomial $P_n$. The components of the individual eigenvectors $e_i$ contained in the initial vector $v^{(0)}$ are suppressed or amplified ("accelerated") according to the polynomial value $P_n(\lambda_i)$.

The so-called accelerating polynomial, or equivalently its zeros $r_k$, can now be chosen such that after a sufficiently long iteration the basis vectors essentially contain only components of the sought eigenvectors.

Suitable choices are, for example, the Tschebyscheff polynomials, which are well known from filter design. Their degree $n$ is chosen according to the prescribed accuracy requirements.
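A sketch of how Tschebyscheff zeros can serve as the shifts $r_k$: the $n$ zeros of $T_n$ are mapped from $[-1, 1]$ onto an assumed suppressed domain $[a, b] = [1, 5]$ that contains the unwanted eigenvalues, with $n = 20$ as in the figure. The resulting $P_n(\lambda) = \prod_k (\lambda - r_k)$ equals $2^{1-n} \left(\frac{b-a}{2}\right)^n T_n\!\left(\frac{2\lambda - a - b}{b - a}\right)$, so here $|P_n| \le 2$ on $[1, 5]$, while at $\lambda = 0.5$ it is $2^{20} + 2^{-20}$, roughly half a million times larger. The interval, degree and function names are illustration assumptions.

```python
import math


def chebyshev_shifts(a, b, n):
    """Zeros of the degree-n Tschebyscheff polynomial mapped onto [a, b]."""
    mid, half = 0.5 * (a + b), 0.5 * (b - a)
    return [mid + half * math.cos((2 * k - 1) * math.pi / (2 * n)) for k in range(1, n + 1)]


def filter_poly(lam, shifts):
    """P_n(lambda) = prod_k (lambda - r_k)."""
    out = 1.0
    for r in shifts:
        out *= lam - r
    return out


a, b, n = 1.0, 5.0, 20            # assumed suppressed domain and degree
shifts = chebyshev_shifts(a, b, n)
sup_max = max(abs(filter_poly(a + (b - a) * j / 1000, shifts)) for j in range(1001))
wanted = abs(filter_poly(0.5, shifts))
print(sup_max, wanted, wanted / sup_max)
```

Using these $r_k$ as the shifts in $\prod_k (A - r_k I)$ damps every eigenvector component with $\lambda_i \in [1, 5]$ uniformly, while components with eigenvalues below the domain are amplified strongly.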