Proceedings of the Workshop on Discourse in Machine Translation


| ML Method | Acc: Train (all feats) | Acc: Test (all feats) | BLEU (all feats) | Acc: Train (all feats + coref) | Acc: Test (all feats + coref) |
|---|---|---|---|---|---|
| Baseline | 60.70 | 59.30 | 0.1401 | 60.70 | 59.30 |
| Original TectoMT | – | – | 0.1404 | – | – |
| Vowpal Wabbit (passes=30) | 90.62 | 75.69 | – | 90.83 | 75.87 |
| Vowpal Wabbit (passes=20) | 89.99 | 76.43 | 0.1403 | 90.20 | 76.98 |
| Vowpal Wabbit (passes=10) | 87.78 | 76.24 | – | 87.78 | 76.61 |
| Vowpal Wabbit (passes=30, l2=0.001) | 71.23 | 66.11 | – | 83.03 | 77.16 |
| Vowpal Wabbit (passes=20, l2=0.001) | 82.19 | 74.95 | – | 78.19 | 74.40 |
| Vowpal Wabbit (passes=10, l2=0.001) | 75.03 | 70.17 | – | 72.81 | 70.17 |
| Vowpal Wabbit (passes=30, l2=0.00001) | 90.52 | 75.69 | – | 90.94 | 76.06 |
| Vowpal Wabbit (passes=20, l2=0.00001) | 89.99 | 76.43 | – | 90.09 | 76.98 |
| Vowpal Wabbit (passes=10, l2=0.00001) | 87.67 | 76.24 | – | 87.67 | 76.61 |
| AI::MaxEntropy | 85.99 | 76.61 | 0.1403 | 86.09 | 76.98 |
| sklearn.neighbors (k=1) | 91.57 | 71.64 | – | 93.36 | 72.19 |
| sklearn.neighbors (k=3) | 84.62 | 72.01 | – | 84.93 | 71.82 |
| sklearn.neighbors (k=5) | 84.93 | 74.77 | 0.1403 | 84.72 | 75.87 |
| sklearn.neighbors (k=10) | 82.51 | 73.30 | – | 83.14 | 75.87 |
| sklearn.tree | 93.36 | 73.66 | 0.1403 | 94.10 | 71.82 |
| sklearn.SVC (kernel=linear) | 90.83 | 75.51 | 0.1402 | 91.15 | 76.80 |
| sklearn.SVC (kernel=poly) | 60.70 | 59.30 | – | 60.70 | 59.30 |
| sklearn.SVC (kernel=rbf) | 71.23 | 68.69 | – | 73.76 | 71.27 |

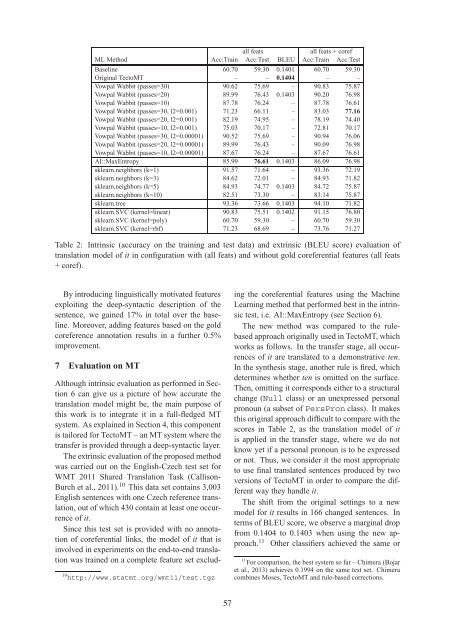

Table 2: Intrinsic (accuracy on the training and test data) and extrinsic (BLEU score) evaluation of the translation model of it in configurations without (all feats) and with gold coreferential features (all feats + coref).

By introducing linguistically motivated features exploiting the deep-syntactic description of the sentence, we gained 17 percentage points in total over the baseline. Moreover, adding features based on the gold coreference annotation results in a further improvement of 0.5 percentage points.
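The two gains can be checked against the test accuracies in Table 2. The sketch below only does the arithmetic; it assumes that the authors compared the best test accuracy in each configuration, which the text does not state explicitly, so the row choices are an illustration rather than the authors' exact computation.

```python
# Test accuracies (%) copied from Table 2.
# Which rows the authors compared is an assumption (best test score per configuration).
BASELINE_TEST = 59.30     # Baseline, identical in both configurations
BEST_ALL_FEATS = 76.61    # AI::MaxEntropy, all feats
BEST_WITH_COREF = 77.16   # Vowpal Wabbit (passes=30, l2=0.001), all feats + coref


def gain(new: float, old: float) -> float:
    """Absolute accuracy difference in percentage points, rounded to 2 decimals."""
    return round(new - old, 2)


feature_gain = gain(BEST_ALL_FEATS, BASELINE_TEST)
coref_gain = gain(BEST_WITH_COREF, BEST_ALL_FEATS)
print(f"features vs. baseline: +{feature_gain} points")
print(f"coref vs. features:    +{coref_gain} points")
```

Under this reading, the differences come out at roughly 17 and 0.5 percentage points, in line with the figures quoted in the text.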

7 Evaluation on MT

Although intrinsic evaluation as performed in Section 6 can give us a picture of how accurate the translation model might be, the main purpose of this work is to integrate it in a full-fledged MT system. As explained in Section 4, this component is tailored for TectoMT, an MT system in which the transfer is provided through a deep-syntactic layer.

The extrinsic evaluation of the proposed method was carried out on the English-Czech test set for the WMT 2011 Shared Translation Task (Callison-Burch et al., 2011).¹⁰ This data set contains 3,003 English sentences with one Czech reference translation, out of which 430 contain at least one occurrence of it.
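Selecting the sentences that contain at least one occurrence of it can be sketched as a whole-word match. This is a minimal illustration, not the authors' procedure: the function name, the regular expression and the sample sentences are assumptions, and the tokenization used in the paper may differ (for instance, this pattern also matches the "it" in "it's").

```python
import re

# Match "it" as a whole word, case-insensitively.
# "its" and "item" do not match; "it's" does (assumed acceptable for this sketch).
IT_PATTERN = re.compile(r"\bit\b", re.IGNORECASE)


def count_sentences_with_it(sentences):
    """Count sentences containing at least one occurrence of the word 'it'."""
    return sum(1 for sentence in sentences if IT_PATTERN.search(sentence))


# Invented sample sentences, not taken from the WMT 2011 test set.
sample = [
    "It is raining.",
    "The item lost its label.",
    "She saw it and bought it.",
    "Nothing to report.",
]
print(count_sentences_with_it(sample))
```

A sentence with several occurrences is counted once, which matches the "at least one occurrence" criterion in the text.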

¹⁰ http://www.statmt.org/wmt11/test.tgz

Since this test set is provided with no annotation of coreferential links, the model of it used in the experiments on end-to-end translation was trained on the complete feature set excluding the coreferential features, using the machine learning method that performed best in the intrinsic test, i.e. AI::MaxEntropy (see Section 6).
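The selection step can be reproduced from Table 2 by taking the method with the highest test accuracy in the configuration without coreferential features. The sketch below uses only the rows for which a BLEU score is reported; restricting it to those rows is a simplification made here for brevity.

```python
# Test accuracy (%) in the "all feats" configuration, copied from Table 2.
# Only rows with a reported BLEU score are listed (a simplification for this sketch).
test_accuracy = {
    "Baseline": 59.30,
    "Vowpal Wabbit (passes=20)": 76.43,
    "AI::MaxEntropy": 76.61,
    "sklearn.neighbors (k=5)": 74.77,
    "sklearn.tree": 73.66,
    "sklearn.SVC (kernel=linear)": 75.51,
}

# Pick the method whose test accuracy is highest.
best_method = max(test_accuracy, key=test_accuracy.get)
print(best_method, test_accuracy[best_method])
```

Ranking by test rather than training accuracy matters here: sklearn.tree has the highest training accuracy in the table but a clearly lower test accuracy.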

The new method was compared to the rule-based approach originally used in TectoMT, which works as follows. In the transfer stage, all occurrences of it are translated to a demonstrative ten. In the synthesis stage, another rule is fired, which determines whether ten is omitted on the surface. Then, omitting it corresponds either to a structural change (Null class) or an unexpressed personal pronoun (a subset of PersPron class). This makes the original approach difficult to compare with the scores in Table 2, as the translation model of it is applied in the transfer stage, where we do not yet know whether a personal pronoun is to be expressed or not. Thus, we consider it most appropriate to use the final translated sentences produced by the two versions of TectoMT in order to compare the different ways they handle it.
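Comparing the final outputs of two system versions amounts to an aligned, sentence-by-sentence diff. The following sketch shows one way to count the sentences that changed; the function name and the toy Czech strings are invented for illustration and are not actual TectoMT output.

```python
def changed_sentences(old_output, new_output):
    """Return indices of sentences whose translation differs between two versions."""
    if len(old_output) != len(new_output):
        raise ValueError("outputs must be aligned sentence by sentence")
    return [
        index
        for index, (old, new) in enumerate(zip(old_output, new_output))
        if old.strip() != new.strip()  # ignore leading/trailing whitespace only
    ]


# Invented toy outputs: the first sentence differs in whether "ten" is expressed.
old_version = ["Ten prší.", "Vidím dům."]
new_version = ["Prší.", "Vidím dům."]

changed = changed_sentences(old_version, new_version)
print(len(changed), "changed sentence(s) at indices", changed)
```

Exact string comparison is the simplest criterion; a stricter or looser notion of change (for example, ignoring case or punctuation) would give different counts.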

The shift from the original settings to a new model for it results in 166 changed sentences. In terms of BLEU score, we observe a marginal drop from 0.1404 to 0.1403 when using the new approach.¹¹ Other classifiers achieved the same or

¹¹ For comparison, the best system so far, Chimera (Bojar et al., 2013), achieves 0.1994 on the same test set. Chimera combines Moses, TectoMT and rule-based corrections.
