Embedding Information in Grayscale Images - Signal Processing ...

Embedding Information in Grayscale Images - Signal Processing ...

Embedding Information in Grayscale Images - Signal Processing ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

<strong>Embedd<strong>in</strong>g</strong> <strong>Information</strong> <strong>in</strong> <strong>Grayscale</strong> <strong>Images</strong><br />

Marten van Dijk and Frans Willems<br />

Philips Research Laboratories, E<strong>in</strong>dhoven<br />

Abstract<br />

<strong>Grayscale</strong> images can be represented as sequences of <strong>in</strong>teger-valued symbols. If<br />

such a symbol has alphabet {0, 1, · · · , 2 B − 1} it could be represented by B b<strong>in</strong>ary<br />

digits. To embed <strong>in</strong>formation <strong>in</strong> these symbols, we are allowed to distort them. The<br />

distortion measure that we consider here is mean squared error. In this setup there is<br />

a so-called “rate-distortion function” that tells us what the largest embedd<strong>in</strong>g rate is,<br />

given a certa<strong>in</strong> distortion level. First we determ<strong>in</strong>e this rate-distortion function. Next<br />

we compare the performance of LSB modulation to this rate-distortion function. Then<br />

several embedd<strong>in</strong>g codes are proposed: (i) codes that only affect the LSB of a symbol,<br />

(ii) so-called ternary codes <strong>in</strong> which a symbol can be changed by at most one, and (iii)<br />

codes that process a pair of symbols each time.<br />

1 System description<br />

discrete<br />

memoryless<br />

source<br />

X N 1<br />

✲<br />

encoder<br />

Y N<br />

1<br />

✲<br />

dest<strong>in</strong>ation<br />

✻<br />

W<br />

✲<br />

decoder<br />

✲<br />

Ŵ<br />

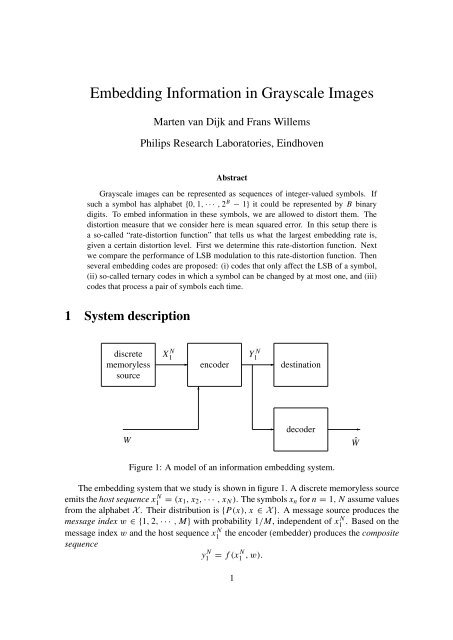

Figure 1: A model of an <strong>in</strong>formation embedd<strong>in</strong>g system.<br />

The embedd<strong>in</strong>g system that we study is shown <strong>in</strong> figure 1. A discrete memoryless source<br />

emits the host sequence x1<br />

N = (x 1, x 2 , · · · , x N ). The symbols x n for n = 1, N assume values<br />

from the alphabet X . Their distribution is {P(x), x ∈ X }. A message source produces the<br />

message <strong>in</strong>dex w ∈ {1, 2, · · · , M} with probability 1/M, <strong>in</strong>dependent of x1 N . Based on the<br />

message <strong>in</strong>dex w and the host sequence x1<br />

N the encoder (embedder) produces the composite<br />

sequence<br />

= f (x 1 N , w).<br />

y N 1<br />

1

The symbols from y1<br />

N = (y 1, y 2 , · · · , y N ) assume values <strong>in</strong> the alphabet Y. We require that<br />

from the composite sequence y1<br />

N the embedded message can be reconstructed, i.e. there is a<br />

decoder produc<strong>in</strong>g the estimate Ŵ = g(y1 N ) such that<br />

Pr{Ŵ ̸= W } = 0.<br />

Moreover the sequences y N 1<br />

must be “close” to x N 1<br />

, i.e. the expected distortion<br />

¯D = E[d(X1 N , Y 1 N )] = ∑ Pr{X1 N = x 1 N } Pr{W = w}d(x 1 N , f (x 1 N , w)) ≤ ,<br />

x1 N ,w<br />

where is the maximum allowable distortion and<br />

d(x1 N , y 1 N ) = 1 ∑<br />

N<br />

n=1,N<br />

D(x n , y n )<br />

is the distortion between x1<br />

N and y 1 N<br />

def<strong>in</strong>e the embedd<strong>in</strong>g-rate R as<br />

, for some specified distortion matrix D(·, ·). F<strong>in</strong>ally we<br />

R = 1 N log 2 (M).<br />

2 The ”rate-distortion” function<br />

We are obviously <strong>in</strong>terested <strong>in</strong> f<strong>in</strong>d<strong>in</strong>g codes that comb<strong>in</strong>e a large embedd<strong>in</strong>g rate R with a<br />

small distortion ¯D. Only recently several authors, e.g. [3] and [1], realized that this setup<br />

is strongly related to the “defect channel”-problem which was first <strong>in</strong>vestigated <strong>in</strong> a general<br />

form by Gelfand and P<strong>in</strong>sker [2]. In [4] the so-called ”rate-distortion function”, that<br />

corresponds to a slightly more general embedd<strong>in</strong>g model than the one we study here, was<br />

determ<strong>in</strong>ed. This function gives the maximum embedd<strong>in</strong>g rate R for a given distortion level<br />

. For our model (Z ≡ Y ) this function turns out to be<br />

R() =<br />

max<br />

{P(y|x): ∑ H(Y |X).<br />

x,y P(x)P(y|x)D(x,y)≤}<br />

Note that this is not a rate-distortion function <strong>in</strong> the usual sense.<br />

3 <strong>Grayscale</strong> symbols, mean squared error distortion<br />

0<br />

1<br />

2<br />

x y<br />

2 B − 1<br />

❅ ❅<br />

<br />

D(x, y) = (x − y) 2<br />

Figure 2: The grayscale alphabet G and mean squared error distortion.<br />

A grayscale symbol assumes a value from an <strong>in</strong>teger alphabet G. The card<strong>in</strong>ality of G<br />

should no be too small. E.g. let G = {0, 1, · · · , 2 B − 1} for some positive <strong>in</strong>teger B, then

each symbol can be represented by a vector of B b<strong>in</strong>ary digits. Now let X = Y = G. If a<br />

grayscale symbol x ∈ G is changed <strong>in</strong>to a grayscale symbol y ∈ G then the result<strong>in</strong>g squared<br />

error distortion is<br />

D(x, y) = (y − x) 2 ,<br />

<br />

see figure 2. To determ<strong>in</strong>e R() for this case, let p i =<br />

∑y−x=i P(x)P(y|x) for all <strong>in</strong>teger<br />

i, then<br />

H(Y |X) = H(Y − X|X) ≤ H(Y − X) = ∑ 1<br />

p i log 2<br />

p<br />

i<br />

i<br />

and<br />

∑<br />

P(x)P(y|x)(y − x) 2 = ∑<br />

x,y<br />

i<br />

p i i 2 .<br />

The correspond<strong>in</strong>g bound for R() can be achieved by tak<strong>in</strong>g i = y − x <strong>in</strong>dependent of x,<br />

which is possible if we assume that |G| is large and we neglect boundary-effects. Now we<br />

have to maximize ∑ i p 1<br />

i log 2 p i<br />

under the constra<strong>in</strong>t ∑ i p ii 2 ≤ . Therefore consider for<br />

some α > 0 the “Gaussian” distribution {pi ∗} where<br />

p ∗ i<br />

<br />

= exp(−αi 2 )/β,<br />

with β = ∑ i exp(−αi 2 ) and hav<strong>in</strong>g variance ∑ i p∗ i i 2 = . Then for any distribution {p i }<br />

with variance ∑ i p ii 2 ≤ we can write<br />

∑<br />

p i ln 1 pi<br />

∗<br />

i<br />

= ∑ i<br />

p i ln(β exp(αi 2 )) = ln β + α ∑ i<br />

p i i 2 ≤ ln β + α,<br />

and therefore its entropy (<strong>in</strong> nats)<br />

∑<br />

p i ln 1 = ∑ p<br />

i i<br />

i<br />

p i ln p∗ i<br />

p i<br />

+ ∑ i<br />

p i ln 1 p ∗ i<br />

≤ ln β + α.<br />

Equality is achieved only for {p i } equal to the Gaussian distribution {pi ∗ }. Therefore to<br />

compute the rate-distortion function we only need to vary α and compute the entropy and<br />

variance of {pi ∗ }. We can now make a plot of R(), see figure 3. This plot shows that to<br />

achieve a rate of 1 bit/symb. we need an average distortion of at least ≈ 0.22.<br />

4 Least-significant bit(s) modulation<br />

Traditional embedd<strong>in</strong>g methods assume that a grayscale symbol x ∈ G is represented as a<br />

b<strong>in</strong>ary vector b B−1 , · · · , b 1 , b 0 such that x = ∑ i=0,B−1 b i2 i . Messages are now embedded<br />

only <strong>in</strong> the least-significant bits (LSB’s) <strong>in</strong> this b<strong>in</strong>ary representation. Therefore this form of<br />

embedd<strong>in</strong>g is called least-significant bits modulation. Assume that message w is embedded<br />

<strong>in</strong> the R LSB’s (R is a positive <strong>in</strong>teger). The follow<strong>in</strong>g tables now show what happens for<br />

R = 1. Observe that for R = 1 the distortion ¯D = 1/4 · 2 = 1/2.

3<br />

2.5<br />

2<br />

1.5<br />

1<br />

0.5<br />

0<br />

0 0.5 1 1.5 2 2.5 3<br />

Figure 3: R() <strong>in</strong> bits per symbol as a function of the distortion per symbol (horizontally),<br />

together with two LSB distortion-rate pairs (o) and time-shar<strong>in</strong>g the R = 1-LSB method (...).<br />

x w = 0 1<br />

· · ·<br />

8=1000 y = 8 9<br />

9=1001 8 9<br />

· · ·<br />

x w = 0 1<br />

· · ·<br />

8=1000 D = 0 1<br />

9=1001 1 0<br />

· · ·<br />

Similarly for R = 2 the average distortion is ¯D = 1/16(6 · 1 + 4 · 4 + 2 · 9) = 5/2. The<br />

distortion-rate pairs ( ¯D, R) = (1/2, 1) and (5/2, 2) are plotted <strong>in</strong> figure 3 denoted by o’-s.<br />

Us<strong>in</strong>g the LSB modulation scheme ( ¯D, R) = (1/2, 1) only for a fraction of the symbols, we<br />

achieve<br />

R( ¯D) = 2 ¯D, for 0 ≤ ¯D ≤ 1/2.<br />

The result<strong>in</strong>g distortion-rate pairs are plotted <strong>in</strong> the figure with a dotted l<strong>in</strong>e. The problem<br />

we want to address next is: ”How can we do better than R( ¯D)/ ¯D = 2?”<br />

5 Coded LSB modulation<br />

In this section we consider cod<strong>in</strong>g methods that affect only the LSB of each grayscale symbol.<br />

The first method is based on Hamm<strong>in</strong>g codes, after that we consider a code based on the<br />

b<strong>in</strong>ary Golay code.<br />

5.1 <strong>Embedd<strong>in</strong>g</strong> based on b<strong>in</strong>ary Hamm<strong>in</strong>g codes<br />

To describe the embedd<strong>in</strong>g code consider the (7, 4, 3) Hamm<strong>in</strong>g code. Fix a certa<strong>in</strong> coset C i ,<br />

for some i = 0, 1, · · · , 7. Consider only the vector of LSB’s of seven host symbols. Denote<br />

this vector by x 7 1 = x 1, x 2 , · · · , x 7 . Determ<strong>in</strong>e a b<strong>in</strong>ary vector y 7 1 = y 1, y 2 , · · · , y 7 ∈ C i

which is closest to x1 7 <strong>in</strong> Hamm<strong>in</strong>g-sense. To obta<strong>in</strong> the composite sequence just replace the<br />

LSB vector x1 7 by y7 1 .<br />

Next we determ<strong>in</strong>e the average distortion ¯D of this method. The Hamm<strong>in</strong>g code is perfect<br />

with d H = 3, thus we will f<strong>in</strong>d a word y1 7 ∈ C i at distance 1 from x1 7 with probability 7/8 and<br />

a word at distance 0 with probability 1/8 (some smoothness of P(x) is assumed here). Hence<br />

¯D = (7/8)/7 = 1/8. The decoder can detect the coset to which the composite sequence y1<br />

7<br />

belongs, hence reliable transmission is possible with rate R = (log 2 8)/7 = 3/7 bit/symbol.<br />

Thus we achieve ( ¯D, R) = (1/8, 3/7). The R/ ¯D-ratio = 24/7 ≈ 3.428 which is a factor<br />

1.714 higher than LSB modulation. A Hamm<strong>in</strong>g code of length 2 m − 1 for m = 2, 3, 4, · · ·<br />

gives<br />

R =<br />

m<br />

2 m − 1 and ¯D = 1<br />

2 m .<br />

Hence the ratio<br />

R/ ¯D = m2m<br />

2 m − 1 ,<br />

which is approximately equal to m for large m (see figure 4).<br />

5.2 <strong>Embedd<strong>in</strong>g</strong> based on the b<strong>in</strong>ary Golay code<br />

Instead of the b<strong>in</strong>ary Hamm<strong>in</strong>g code we can use the (23, 12, 7) b<strong>in</strong>ary Golay code. This<br />

leads to<br />

R = 11<br />

( 23<br />

) (<br />

23 ≈ 0.478 and 1 · 1 + 23<br />

) (<br />

2 · 2 + 23<br />

)<br />

3 · 3<br />

¯D =<br />

≈ 0.124.<br />

2048 · 23<br />

Now the ratio R/ ¯D ≈ 3.85, which is almost a factor two better what can be achieved with<br />

LSB modulation (see figure 4).<br />

1<br />

0.9<br />

0.8<br />

0.7<br />

0.6<br />

0.5<br />

0.4<br />

0.3<br />

0.2<br />

0.1<br />

0<br />

0 0.05 0.1 0.15 0.2 0.25 0.3 0.35 0.4 0.45 0.5<br />

Figure 4: Rate-distortion curve, time-shar<strong>in</strong>g R = 1-LSB modulation (...), b<strong>in</strong>ary Hamm<strong>in</strong>g<br />

codes (o), and the b<strong>in</strong>ary Golay code (x).

6 Ternary embedd<strong>in</strong>g methods<br />

We start this section with a def<strong>in</strong>ition. A grayscale symbol x is said to be <strong>in</strong> class w iff x<br />

mod 3 = w for w = 0, 1, 2. Now we are ready to discuss uncoded ternary embedd<strong>in</strong>g and<br />

after that aga<strong>in</strong> two coded embedd<strong>in</strong>g methods.<br />

6.1 Uncoded ternary modulation<br />

Suppose that message w ∈ {0, 1, 2} is to be embedded <strong>in</strong> the grayscale symbol x. The<br />

decoder determ<strong>in</strong>es the message w simply by look<strong>in</strong>g at the class of y. If x is <strong>in</strong> class<br />

w then y = x is chosen, otherwise we change it <strong>in</strong>to the symbol y <strong>in</strong> class w, such that<br />

D(x, y) = (y − x) 2 is m<strong>in</strong>imal, see the tables below.<br />

x w = 0 1 2<br />

· · ·<br />

9 y = 9 10 8<br />

10 9 10 11<br />

11 12 10 11<br />

· · ·<br />

x w = 0 1 2<br />

· · ·<br />

9 D = 0 1 1<br />

10 1 0 1<br />

11 1 1 0<br />

· · ·<br />

The obta<strong>in</strong>ed embedd<strong>in</strong>g rate R = log 2 3 ≈ 1.585. The correspond<strong>in</strong>g average distortion<br />

¯D = 2/3 · 1 = 2/3. This results <strong>in</strong> the ratio R/ ¯D = 3/2 log 2 3 ≈ 2.378 which is quite good!<br />

6.2 <strong>Embedd<strong>in</strong>g</strong> with ternary Hamm<strong>in</strong>g codes<br />

We can also design codes for the modulat<strong>in</strong>g the class, based on ternary Hamm<strong>in</strong>g codes. For<br />

a given value m, i.e. the number of parity check symbols, the codeword length is (3 m − 1)/2.<br />

Therefore<br />

R = 2m log 2 3<br />

3 m − 1 and ¯D = 2<br />

3 m .<br />

Hence<br />

see figure 5.<br />

R/ ¯D = m3m log 2 3<br />

3 m − 1 ,<br />

6.3 <strong>Embedd<strong>in</strong>g</strong> us<strong>in</strong>g the ternary Golay code<br />

If we use the (11, 6, 5) ternary Golay code we get the follow<strong>in</strong>g rate and distortion:<br />

R = 5 log 2 3<br />

11<br />

≈ 0.7204 and ¯D =<br />

( 11<br />

) (<br />

1 · 1 · 2 + 11<br />

)<br />

2 · 2 · 4<br />

243 · 11<br />

= 42<br />

243 ≈ 0.1728.<br />

Hence R/ ¯D ≈ 4.1682 (see figure 5).

1<br />

0.9<br />

0.8<br />

0.7<br />

0.6<br />

0.5<br />

0.4<br />

0.3<br />

0.2<br />

0.1<br />

0<br />

0 0.05 0.1 0.15 0.2 0.25 0.3 0.35 0.4 0.45 0.5<br />

Figure 5: Rate-distortion curve, time-shar<strong>in</strong>g R = 1-LSB modulation (...), b<strong>in</strong>ary Hamm<strong>in</strong>g<br />

codes (o), ternary Hamm<strong>in</strong>g codes (*), and both Golay codes (x).<br />

7 Two-dimensional codes<br />

F<strong>in</strong>ally we consider a method that modifies pairs of grayscale-symbols (x a , x b ). Therefore<br />

we ”color” the two-dimensional rectangular grid with five colors. A po<strong>in</strong>t and its nearest<br />

neighbors all have a different color, see table below.<br />

x a →<br />

1 2 3 4 0 1 2 3 4 0 1 2 3 4 0<br />

x b 4 0 1 2 3 4 0 1 2 3 4 0 1 2 3<br />

↓ 2 3 4 0 1 2 3 4 0 1 2 3 4 0 1<br />

0 1 2 3 4 0 1 2 3 4 0 1 2 3 4<br />

3 4 0 1 2 3 4 0 1 2 3 4 0 1 2<br />

We want to embed <strong>in</strong> x a and x b a message w ∈ {0, 1, 2, 3, 4}. The decoder just looks at the<br />

color of pair (y a , y b ). If (x a , x b ) does not have color w change it <strong>in</strong>to the nearest neighbor<br />

(y a , y b ) with color w. Now<br />

¯D = 1 · 0 + 4 · 1<br />

5 · 2<br />

= 2/5 and R = log 2 5<br />

2<br />

= 1.16096.<br />

and R/ ¯D = 2.90241. This basic code can be used together with 5-ary Hamm<strong>in</strong>g codes.<br />

For a given m, i.e. the number of parity checks, we get a code length of (5 m − 1)/4 5-ary<br />

symbols. This code processes (5 m − 1)/2 grayscale-symbols and the embedd<strong>in</strong>g rate is<br />

R = 2m log 2 (5)<br />

5 m − 1<br />

and the distortion ¯D = 2<br />

5 m .<br />

The result<strong>in</strong>g distortion-rate pairs are shown <strong>in</strong> figure 6.

1<br />

0.9<br />

0.8<br />

0.7<br />

0.6<br />

0.5<br />

0.4<br />

0.3<br />

0.2<br />

0.1<br />

0<br />

0 0.02 0.04 0.06 0.08 0.1 0.12 0.14 0.16 0.18 0.2<br />

Figure 6: Rate-distortion curve, time-shar<strong>in</strong>g R = 1 LSB modulation (...), b<strong>in</strong>ary Hamm<strong>in</strong>g<br />

codes (o), ternary Hamm<strong>in</strong>g codes (*), the Golay codes (x), and the 5-ary codes (+).<br />

8 Conclusion<br />

We have only looked at small distortions here. Moreover we have concentrated on perfect<br />

codes s<strong>in</strong>ce this was so easy. It can be shown that Hamm<strong>in</strong>g codes are quite good for D → 0.<br />

For a moderate rate we have seen that Golay codes are rather good, but what about even better<br />

codes for even higher rates? How do we generalize the color<strong>in</strong>g results to larger number of<br />

colors and more dimensions?<br />

References<br />

[1] J. Chou, S. Pradhan, L. El Ghaoui and K. Ramchandran, “A Robust Optimization Solution<br />

to the Data Hid<strong>in</strong>g Problem Us<strong>in</strong>g Distributed Source Cod<strong>in</strong>g Pr<strong>in</strong>ciples,” prepr<strong>in</strong>t,<br />

2000.<br />

[2] S. Gelfand and M. P<strong>in</strong>sker, “Cod<strong>in</strong>g for a Channel with Random Parameters”, Problems<br />

of Control and <strong>Information</strong> Theory, vol. 9, pp. 19-31, 1980.<br />

[3] P. Moul<strong>in</strong> and J. O’Sullivan, “<strong>Information</strong>-theoretic Analysis of <strong>Information</strong> Hid<strong>in</strong>g,”<br />

prepr<strong>in</strong>t, 1999.<br />

[4] F.M.J. Willems, ”An <strong>Information</strong>theoretical Approach to <strong>Information</strong> <strong>Embedd<strong>in</strong>g</strong>,”<br />

Proc. 21st Symp. Inform. Theory <strong>in</strong> the Benelux, pp. 255-260, Wassenaar, May 25-26,<br />

2000.