Prosodic Boundary Prediction based on Maximum Entropy Model ...

Prosodic Boundary Prediction based on Maximum Entropy Model ...

Prosodic Boundary Prediction based on Maximum Entropy Model ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

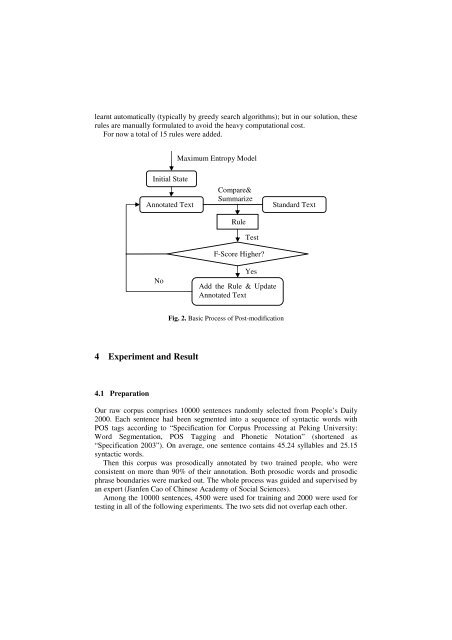

learnt automatically (typically by greedy search algorithms); but in our soluti<strong>on</strong>, these<br />

rules are manually formulated to avoid the heavy computati<strong>on</strong>al cost.<br />

For now a total of 15 rules were added.<br />

<strong>Maximum</strong> <strong>Entropy</strong> <strong>Model</strong><br />

Initial State<br />

Annotated Text<br />

Compare&<br />

Summarize<br />

Rule<br />

Test<br />

F-Score Higher<br />

Standard Text<br />

No<br />

Yes<br />

Add the Rule & Update<br />

Annotated Text<br />

Fig. 2. Basic Process of Post-modificati<strong>on</strong><br />

4 Experiment and Result<br />

4.1 Preparati<strong>on</strong><br />

Our raw corpus comprises 10000 sentences randomly selected from People’s Daily<br />

2000. Each sentence had been segmented into a sequence of syntactic words with<br />

POS tags according to “Specificati<strong>on</strong> for Corpus Processing at Peking University:<br />

Word Segmentati<strong>on</strong>, POS Tagging and Ph<strong>on</strong>etic Notati<strong>on</strong>” (shortened as<br />

“Specificati<strong>on</strong> 2003”). On average, <strong>on</strong>e sentence c<strong>on</strong>tains 45.24 syllables and 25.15<br />

syntactic words.<br />

Then this corpus was prosodically annotated by two trained people, who were<br />

c<strong>on</strong>sistent <strong>on</strong> more than 90% of their annotati<strong>on</strong>. Both prosodic words and prosodic<br />

phrase boundaries were marked out. The whole process was guided and supervised by<br />

an expert (Jianfen Cao of Chinese Academy of Social Sciences).<br />

Am<strong>on</strong>g the 10000 sentences, 4500 were used for training and 2000 were used for<br />

testing in all of the following experiments. The two sets did not overlap each other.