- Page 1 and 2:

TM Apache Solr Reference Guide Cove

- Page 3 and 4:

About This Guide This guide describ

- Page 5 and 6:

Getting Started Solr makes it easy

- Page 7 and 8:

Start Solr in the Foreground Since

- Page 9 and 10:

documents. Do not worry too much ab

- Page 11 and 12:

,price Faceted browsing is one of S

- Page 13 and 14:

for the simplest keyword searching

- Page 15 and 16:

Stop Informational Version Status H

- Page 17 and 18:

-noprompt Start Solr and suppress a

- Page 19 and 20:

The stop command sends a STOP reque

- Page 21 and 22:

$ bin/solr healthcheck -c gettingst

- Page 23 and 24:

collection. For example, the follow

- Page 25 and 26:

An example of this command with the

- Page 27 and 28:

with the fact that DocValues do not

- Page 29 and 30:

Accessing the URL http://hostname:8

- Page 31 and 32:

For an explanation of the various l

- Page 33 and 34:

Java Properties The Java Properties

- Page 35 and 36:

The collection-specific UI screens

- Page 37 and 38:

This screen also lets you adjust va

- Page 39 and 40:

Related Topics Uploading Data with

- Page 41 and 42:

Request-handler (qt) Specifies the

- Page 43 and 44:

about the core, and a secondary men

- Page 45 and 46:

0 13 {!lucene}*:* false _text_ 10

- Page 47 and 48:

This information may be useful for

- Page 49 and 50:

In the Solr universe, documents are

- Page 51 and 52:

The first line in the

- Page 53 and 54:

omitNorms If true, omits the norms

- Page 55 and 56:

TrieField TrieFloatField TrieIntFie

- Page 57 and 58:

Working with Dates Date Formatting

- Page 59 and 60:

UTC: http://localhost:8983/solr/my_

- Page 61 and 62:

Working with External Files and Pro

- Page 63 and 64:

str Stored string value of a field.

- Page 65 and 66:

1 one two three version: 1 stored:

- Page 67 and 68:

faceting 5 true 7 true 7 add multip

- Page 69 and 70:

useDocValuesAsStored If the field h

- Page 71 and 72:

Similarity Similarity is a Lucene c

- Page 73 and 74:

Multiple Commands in a Single POST

- Page 75 and 76:

Add a Dynamic Field Rule The add-dy

- Page 77 and 78:

The replace-field-type command repl

- Page 79 and 80:

Finally, repeated commands can be s

- Page 81 and 82:

81 Apache Solr Reference Guide 6.0

- Page 83 and 84:

id ... List Fields GET

- Page 85 and 86:

INPUT Path Parameters Key Descripti

- Page 87 and 88:

The query parameters can be added t

- Page 89 and 90:

Key collection Description The coll

- Page 91 and 92:

curl http://localhost:8983/solr/get

- Page 93 and 94:

OUTPUT Output Content The output wi

- Page 95 and 96:

Handling text properly will make yo

- Page 97 and 98:

Retrieving DocValues During Search

- Page 99 and 100:

true managed-schema Define an Upda

- Page 101 and 102:

Javadocs for update processor facto

- Page 103 and 104:

400 7 ERROR: [doc=19F] Error add

- Page 105 and 106:

Analyzers An analyzer examines the

- Page 107 and 108:

About Tokenizers The

- Page 109 and 110:

You configure the tokenizer for a t

- Page 111 and 112:

In: "Please, email john.doe@foo.c

- Page 113 and 114:

In: "babaloo" Out:"ba", "bab", "b

- Page 115 and 116:

Extract simple, capitalized words.

- Page 117 and 118:

lement. For example: The foll

- Page 119 and 120:

Example: Classic Filter This f

- Page 121 and 122:

Tokenizer to Filter: "four"(1), "sc

- Page 123 and 124:

In: "the quick brown fox jumped

- Page 125 and 126:

name: (string) The name of the norm

- Page 127 and 128:

appropriate for English language te

- Page 129 and 130:

N-Gram Filter Generates n-gram toke

- Page 131 and 132:

In: "cat concatenate catycat" To

- Page 133 and 134:

In: "jump jumping jumped" Tokenizer

- Page 135 and 136:

In: "To be, or not to be." Token

- Page 137 and 138:

enablePositionIncrements: if lucene

- Page 139 and 140:

couch,sofa,divan teh => the huge,gi

- Page 141 and 142:

useWhitelist: If true, the file def

- Page 143 and 144:

Tokenizer to Filter: "XL-4000/ES"(1

- Page 145 and 146:

The table below presents examples o

- Page 147 and 148:

KeywordRepeatFilterFactory Emits ea

- Page 149 and 150:

Using a Tailored ruleset: custom: (

- Page 151 and 152:

get the default rules for Germany /

- Page 153 and 154:

Language-Specific Factories Thes

- Page 155 and 156:

Arguments: language: (required) ste

- Page 157 and 158:

Out: "preziden", "preziden", "prezi

- Page 159 and 160:

Examples: In: "le chat,

- Page 161 and 162:

Example: Indonesian Solr incl

- Page 163 and 164:

Japanese Tokenizer Tokenizer for Ja

- Page 165 and 166:

In: "tirgiem tirgus" Tokenize

- Page 167 and 168:

Polish Solr provides support fo

- Page 169 and 170:

Factory class: solr.RussianLightSte

- Page 171 and 172:

Spanish Solr includes two stemmers

- Page 173 and 174:

Algorithms discussed in this sectio

- Page 175 and 176:

Caverphone To use this encoding in

- Page 177 and 178:

The " text_general" field type is d

- Page 179 and 180:

Indexing and Basic Data Operations

- Page 181 and 182:

$ bin/post -h Usage: post -c [OPTI

- Page 183 and 184:

The bin/post script currently deleg

- Page 185 and 186:

XML Update Commands Commit and Opti

- Page 187 and 188:

- Page 189 and 190:

In general, the JSON update syntax

- Page 191 and 192:

if split=/ (i.e., you want your JSO

- Page 193 and 194:

curl 'http://localhost:8983/solr/my

- Page 195 and 196:

So, if no params are passed, the en

- Page 197 and 198:

Path /update/csv Default Parameters

- Page 199 and 200:

Topics covered in this section: Key

- Page 201 and 202:

defaultField extractOnly extractFor

- Page 203 and 204:

EEEE, dd-MMM-yy HH:mm:ss zzz EEE MM

- Page 205 and 206:

curl "http://localhost:8983/solr/te

- Page 207 and 208:

Configuration Configuring solrconfi

- Page 209 and 210:

Datasources can still be specifie

- Page 211 and 212:

dateFormat A java.text.SimpleDateFo

- Page 213 and 214:

The URLDataSource type accepts thes

- Page 215 and 216:

deletedPkQuery deltaImportQuery SQL

- Page 217 and 218:

The entity attributes unique to the

- Page 219 and 220:

onError By default, the TikaEntityP

- Page 221 and 222:

While there are use cases where you

- Page 223 and 224:

The HTMLStripTransformer You can us

- Page 225 and 226:

The emailids field in the table can

- Page 227 and 228:

set Modifier Usage Set or replace t

- Page 229 and 230:

$ curl -X POST -H 'Content-Type: ap

- Page 231 and 232:

Method MD5Signature Lookup3Signatur

- Page 233 and 234:

language identification and a field

- Page 235 and 236:

langid.fallbackFields string none n

- Page 237 and 238:

1. Copy solr-uima-VERSION.jar (unde

- Page 239 and 240:

VALID_ALCHEMYAPI_KEY is your Alch

- Page 241 and 242:

Overview of Searching in Solr Solr

- Page 243 and 244:

Velocity Search UI Solr includes a

- Page 245 and 246:

The table below summarizes Solr's c

- Page 247 and 248:

Setting the start parameter to some

- Page 249 and 250:

The debug Parameter The debug param

- Page 251 and 252:

The echoParams Parameter The echoPa

- Page 253 and 254:

The Standard Query Parser's Respons

- Page 255 and 256:

Multiple characters (matches zero o

- Page 257 and 258:

field. For example, suppose an inde

- Page 259 and 260:

Grouping Terms to Form Sub-Queries

- Page 261 and 262:

In addition to the common request p

- Page 263 and 264:

If the calculations based on the pa

- Page 265 and 266:

http://localhost:8983/solr/techprod

- Page 267 and 268:

Examples of Queries Submitted to th

- Page 269 and 270:

it includes parentheses. For exampl

- Page 271 and 272:

dist docfreq(field,val) exists fiel

- Page 273 and 274:

max maxdoc min ms norm( field) not

- Page 275 and 276:

ecip rord scale sqedist Performs a

- Page 277 and 278:

tf top totaltermfreq xor() Term fre

- Page 279 and 280:

is equivalent to: q={!type=dismax q

- Page 281 and 282:

This parser takes a query that matc

- Page 283 and 284:

ds.txt for your collection, and ind

- Page 285 and 286:

One way to model this graph as Solr

- Page 287 and 288: With this alternative document mode

- Page 289 and 290: y wrapping all SHOULD clauses in a

- Page 291 and 292: Re-Ranking Query Parser The ReRankQ

- Page 293 and 294: example configuration below, client

- Page 295 and 296: TermsQueryBuilder UserInputQueryB

- Page 297 and 298: The facet.field parameter identifie

- Page 299 and 300: The default value is fc (except for

- Page 301 and 302: The facet.range.hardend parameter i

- Page 303 and 304: Using the " bin/solr -e techproduct

- Page 305 and 306: "price":{ "min":479.95001220703125,

- Page 307 and 308: 307 Apache Solr Reference Guide 6.0

- Page 309 and 310: ... "start":"2006-01-01T00:00:00Z",

- Page 311 and 312: and exclude those filters when face

- Page 313 and 314: document sample 1 parent 11 Red

- Page 315 and 316: Standard Highlighter The standard h

- Page 317 and 318: hl.usePhraseHighlighter true If set

- Page 319 and 320: hl.maxMultiValuedToExamine integer.

- Page 321 and 322: Related Content HighlightingParamet

- Page 323 and 324: hl.score.b 0.75 Specifies BM25 leng

- Page 325 and 326: Levenshtein metric, which is the sa

- Page 327 and 328: spellcheck Turns on or off SpellChe

- Page 329 and 330: The spellcheck.alternativeTermCount

- Page 331 and 332: 1 0 5 0 dell 1 1 6 17 0 u

- Page 333 and 334: Transforming Result Documents Docum

- Page 335 and 336: childFilter - query to filter which

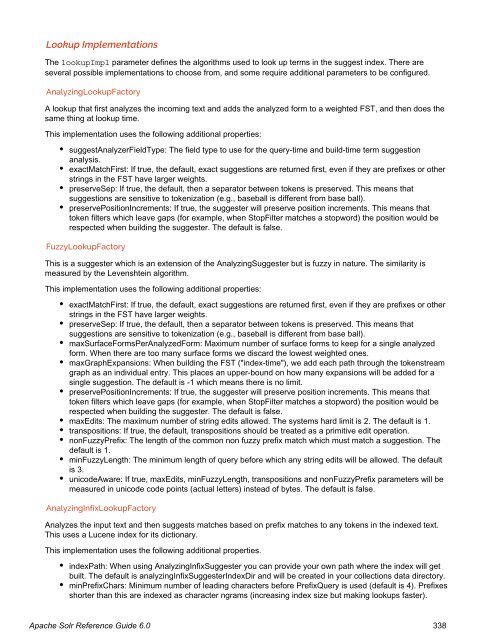

- Page 337: name lookupImpl dictionaryImpl A sy

- Page 341 and 342: This dictionary implementation take

- Page 343 and 344: Example query: http://localhost:898

- Page 345 and 346: solrconfig.xml mySuggester Analyz

- Page 347 and 348: paging, and filtering using common

- Page 349 and 350: Fetching A Large Number of Sorted R

- Page 351 and 352: $ curl '...&rows=10&sort=id+asc&cur

- Page 353 and 354: The pseudo-code for tailing a curso

- Page 355 and 356: group.facet Boolean Determines whet

- Page 357 and 358: http://localhost:8983/solr/techprod

- Page 359 and 360: The Collapsing query parser and the

- Page 361 and 362: q=foo&fq={!collapse field=ISBN}&exp

- Page 363 and 364: To enable the clustering component

- Page 365 and 366: There were a few clusters discover

- Page 367 and 368: clustering When true, clustering co

- Page 369 and 370: The default language can also be se

- Page 371 and 372: See the section SpatialRecursivePre

- Page 373 and 374: sphere; and sqedist, to calculate t

- Page 375 and 376: maxDistErr worldBounds Defines the

- Page 377 and 378: interface being developed allows an

- Page 379 and 380: The parameters below allow you to c

- Page 381 and 382: 0 2 5 3 3 3 3 3 3 3 3 3 Get

- Page 383 and 384: The TermVectorComponent is a search

- Page 385 and 386: Boolean Parameters Description Type

- Page 387 and 388: 0.0 3.0 32 0 10.0 22.0 0.3125 0.7

- Page 389 and 390:

Additional "Expert" local params ar

- Page 391 and 392:

config-file Path to the file that d

- Page 393 and 394:

csv json php phps python ruby smile

- Page 395 and 396:

text/plain Python Response Writer

- Page 397 and 398:

http://localhost:8983/solr/techprod

- Page 399 and 400:

v.layout.enabled v.contentType v.js

- Page 401 and 402:

maxDocs maxTime Integer. Defines th

- Page 403 and 404:

${solr.ulog.dir:} Real Time Get re

- Page 405 and 406:

To export the full sorted result se

- Page 407 and 408:

Some streaming functions act as str

- Page 409 and 410:

expr=search(collection1, zkHost="lo

- Page 411 and 412:

facet(collection1, q="*:*", buckets

- Page 413 and 414:

Parameters Syntax StreamExpression

- Page 415 and 416:

-1, // checkpoint every X tuples, i

- Page 417 and 418:

The order of the streams does not m

- Page 419 and 420:

intersect( search(collection1, q=a_

- Page 421 and 422:

outerHashJoin( search(people, q=*:*

- Page 423 and 424:

Parameters Syntax StreamExpression

- Page 425 and 426:

unique( search(collection1, q="*:*"

- Page 427 and 428:

worker nodes. It involves sorting a

- Page 429 and 430:

{"result-set":{"docs":[ {"count(*)"

- Page 431 and 432:

engine and are generated by the Sta

- Page 433 and 434:

the Data Tables. The worker nodes e

- Page 435 and 436:

The Well-Configured Solr Instance T

- Page 437 and 438:

#core.properties name=collection2 m

- Page 439 and 440:

Managed Schema Default When a is n

- Page 441 and 442:

Merging Index Segments mergePolicyF

- Page 443 and 444:

lockType The LockFactory options sp

- Page 445 and 446:

own request handler, you should mak

- Page 447 and 448:

used instead of the default. First-

- Page 449 and 450:

We've defined three paths with this

- Page 451 and 452:

1000 commitWithin The commitWithin

- Page 453 and 454:

accessed frequently tend to stay in

- Page 455 and 456:

the filterCache will be checked for

- Page 457 and 458:

equestParsers Element The sub-elem

- Page 459 and 460:

2. required between requests. The f

- Page 461 and 462:

updateRequestProcessorChains and up

- Page 463 and 464:

Constructing a chain at request tim

- Page 465 and 466:

stripping index.html), the domain a

- Page 467 and 468:

In Solr, the term core is used to r

- Page 469 and 470:

shareSchema transientCacheSize conf

- Page 471 and 472:

name=my_core_name Placement of core

- Page 473 and 474:

CREATE RELOAD RENAME SWAP UNLOAD ME

- Page 475 and 476:

RELOAD The RELOAD action loads a ne

- Page 477 and 478:

In this example, we use the indexDi

- Page 479 and 480:

Parameter Type Required Default Des

- Page 481 and 482:

{ } "response":{"numFound":1,"start

- Page 483 and 484:

Commands for Common Properties The

- Page 485 and 486:

The Config API does not let you cre

- Page 487 and 488:

Examples Creating and Updating Comm

- Page 489 and 490:

{"overlay":{ "znodeVersion":5, "use

- Page 491 and 492:

curl http://localhost:8983/solr/tec

- Page 493 and 494:

Managed Resources Managed resources

- Page 495 and 496:

Synonyms For the most part, the API

- Page 497 and 498:

{ "responseHeader":{ "status":0, "Q

- Page 499 and 500:

curl http://localhost:8983/solr/tec

- Page 501 and 502:

the signature of the jar that you g

- Page 503 and 504:

Managing Solr This section describe

- Page 505 and 506:

and runs Solr as the solr user. Con

- Page 507 and 508:

Solr process PID running on port 89

- Page 509 and 510:

_JMX_OPTS property in the include f

- Page 511 and 512:

security.json { } "authentication"

- Page 513 and 514:

during initialization. The authoriz

- Page 515 and 516:

curl --user solr:SolrRocks http://l

- Page 517 and 518:

Consult Your Kerberos Admins! Befor

- Page 519 and 520:

If you already have a /security.jso

- Page 521 and 522:

in/solr -c -z server1:2181,server2:

- Page 523 and 524:

st Parameters API, and other APIs w

- Page 525 and 526:

curl --user solr:SolrRocks -H 'Cont

- Page 527 and 528:

in/solr.in.sh example SOLR_SSL_* co

- Page 529 and 530:

working through the previous sectio

- Page 531 and 532:

{ "responseHeader":{ "status":0, "Q

- Page 533 and 534:

SolrCloud Instances In SolrCloud mo

- Page 535 and 536:

authentication from Solr, you need

- Page 537 and 538:

The backup command is an asynchrono

- Page 539 and 540:

In addition to the logging options

- Page 541 and 542:

log4j.appender.file.MaxFileSize=100

- Page 543 and 544:

MBean Request Handler The MBean Req

- Page 545 and 546:

SolrCloud Example Interactive Start

- Page 547 and 548:

startup a SolrCloud cluster using t

- Page 549 and 550:

Then at query time, you include the

- Page 551 and 552:

http://localhost:8983/solr/gettings

- Page 553 and 554:

Each shard serves top-level query r

- Page 555 and 556:

Write Side Fault Tolerance SolrClou

- Page 557 and 558:

Create the instance Creating the in

- Page 559 and 560:

Finally, create your myid files in

- Page 561 and 562:

Changing ACL Schemes Example Usages

- Page 563 and 564:

When solr wants to create a new zno

- Page 565 and 566:

set SOLR_ZK_CREDS_AND_ACLS=-DzkDige

- Page 567 and 568:

maxShardsPerNode integer No 1 When

- Page 569 and 570:

It's possible to edit multiple attr

- Page 571 and 572:

Query Parameters Key Type Required

- Page 573 and 574:

Create a Shard Shards can only crea

- Page 575 and 576:

The CREATEALIAS action will create

- Page 577 and 578:

0 603 0 19 0 67 Delete

- Page 579 and 580:

http://localhost:8983/solr/admin/co

- Page 581 and 582:

The response will include the statu

- Page 583 and 584:

0 5007 0 8 0 1 test2_shard

- Page 585 and 586:

Key Type Required Description role

- Page 587 and 588:

Cluster Status /admin/collections?a

- Page 589 and 590:

} } "127.0.1.1:8900_solr"] Request

- Page 591 and 592:

http://localhost:8983/solr/admin/co

- Page 593 and 594:

http://localhost:8983/solr/admin/co

- Page 595 and 596:

0 9 Examining the clusterstate a

- Page 597 and 598:

0 123 success Already leader 19

- Page 599 and 600:

http://localhost:8983/solr/admin/co

- Page 601 and 602:

-z -zkhost -c -collection -d -con

- Page 603 and 604:

... ${solr.data.dir:} ... 3. The

- Page 605 and 606:

will explain why the request failed

- Page 607 and 608:

The nodes are sorted first and the

- Page 609 and 610:

Defining Rules Rules are specified

- Page 611 and 612:

Updates and deletes are first writt

- Page 613 and 614:

Rely on two storages: an ephemeral

- Page 615 and 616:

Target Configuration Here is a typi

- Page 617 and 618:

core/cdcr?action=QUEUES: Fetches st

- Page 619 and 620:

Output { } "responseHeader": { "sta

- Page 621 and 622:

{ } responseHeader={ status=0, QTim

- Page 623 and 624:

http://localhost:898 ${TargetZk}

- Page 625 and 626:

Legacy Scaling and Distribution Thi

- Page 627 and 628:

Limitations to Distributed Search D

- Page 629 and 630:

0 8 *:* localhost:8983/solr/core1

- Page 631 and 632:

Master and Slave Update Optimizatio

- Page 633 and 634:

http://remote_host:port/solr/core

- Page 635 and 636:

A commit command is issued on the s

- Page 637 and 638:

is a large expense, but not nearly

- Page 639 and 640:

Apache Solr Reference Guide 6.0 639

- Page 641 and 642:

The Solr Wiki contains a list of cl

- Page 643 and 644:

pSolrClient , or CloudSolrClient .

- Page 645 and 646:

upload using binary format, which i

- Page 647 and 648:

}} 'manufacturedate_dt'=>'2005-10-1

- Page 649 and 650:

Solr Glossary Where possible, terms

- Page 651 and 652:

Literally, N data about data. Metad

- Page 653 and 654:

See also #Inverse document frequenc

- Page 655 and 656:

A new graph query parser makes it p

- Page 657 and 658:

Upgrading a Solr Cluster This page

- Page 659 and 660:

proceeding to upgrade the next node