DM1901

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

CASE STUDY: NEW YORK TIMES Dm<br />

The Times to protect one of their most<br />

unique assets migrating from steel filing<br />

cabinets to a cloud-based platform where<br />

journalists can bring visual storytelling to a<br />

whole new level."<br />

Simply storing high-resolution images is<br />

not enough to create a system that photo<br />

editors can easily use. A working asset<br />

management system must allow the users<br />

to be able to browse and search for photos<br />

easily. The Times built a processing pipeline<br />

that stores and processes the photos and<br />

will use cloud technology to process and<br />

recognise text, handwriting and other<br />

details that can be found in the images.<br />

MACHINE LEARNING ADDS INSIGHTS<br />

Storing the images is only one half of<br />

the story. To make an archive like The<br />

Times' morgue even more accessible and<br />

useful, it's beneficial to leverage<br />

additional GCP features. In the case of<br />

The Times, one of the bigger challenges<br />

in scanning their photo archive has been<br />

adding data regarding the contents of<br />

the images. Google's Cloud Vision API<br />

helps fill that gap.<br />

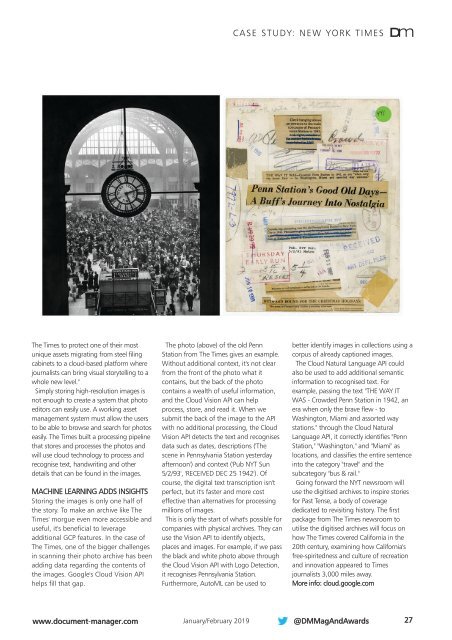

The photo (above) of the old Penn<br />

Station from The Times gives an example.<br />

Without additional context, it's not clear<br />

from the front of the photo what it<br />

contains, but the back of the photo<br />

contains a wealth of useful information,<br />

and the Cloud Vision API can help<br />

process, store, and read it. When we<br />

submit the back of the image to the API<br />

with no additional processing, the Cloud<br />

Vision API detects the text and recognises<br />

data such as dates, descriptions ('The<br />

scene in Pennsylvania Station yesterday<br />

afternoon') and context ('Pub NYT Sun<br />

5/2/93', 'RECEIVED DEC 25 1942'). Of<br />

course, the digital text transcription isn't<br />

perfect, but it's faster and more cost<br />

effective than alternatives for processing<br />

millions of images.<br />

This is only the start of what's possible for<br />

companies with physical archives. They can<br />

use the Vision API to identify objects,<br />

places and images. For example, if we pass<br />

the black and white photo above through<br />

the Cloud Vision API with Logo Detection,<br />

it recognises Pennsylvania Station.<br />

Furthermore, AutoML can be used to<br />

better identify images in collections using a<br />

corpus of already captioned images.<br />

The Cloud Natural Language API could<br />

also be used to add additional semantic<br />

information to recognised text. For<br />

example, passing the text "THE WAY IT<br />

WAS - Crowded Penn Station in 1942, an<br />

era when only the brave flew - to<br />

Washington, Miami and assorted way<br />

stations." through the Cloud Natural<br />

Language API, it correctly identifies "Penn<br />

Station," "Washington," and "Miami" as<br />

locations, and classifies the entire sentence<br />

into the category "travel" and the<br />

subcategory "bus & rail."<br />

Going forward the NYT newsroom will<br />

use the digitised archives to inspire stories<br />

for Past Tense, a body of coverage<br />

dedicated to revisiting history. The first<br />

package from The Times newsroom to<br />

utilise the digitised archives will focus on<br />

how The Times covered California in the<br />

20th century, examining how California's<br />

free-spiritedness and culture of recreation<br />

and innovation appeared to Times<br />

journalists 3,000 miles away.<br />

More info: cloud.google.com<br />

www.document-manager.com<br />

January/February 2019<br />

@DMMagAndAwards<br />

27