5kk80 Assignment 2 – Design-Space Exploration

5kk80 Assignment 2 – Design-Space Exploration

5kk80 Assignment 2 – Design-Space Exploration

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

<strong>5kk80</strong> <strong>Assignment</strong> 2 <strong>–</strong> <strong>Design</strong>-<strong>Space</strong> <strong>Exploration</strong><br />

1 Introduction<br />

Eindhoven University of Technology<br />

Department of Electrical Engineering<br />

3 April 2008<br />

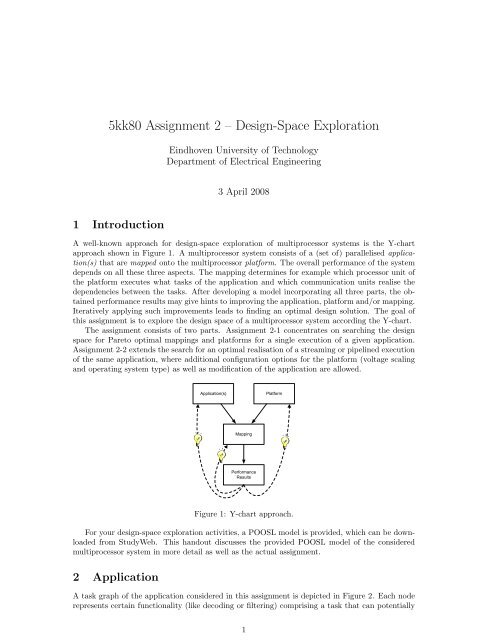

A well-known approach for design-space exploration of multiprocessor systems is the Y-chart<br />

approach shown in Figure 1. A multiprocessor system consists of a (set of) parallelised application(s)<br />

that are mapped onto the multiprocessor platform. The overall performance of the system<br />

depends on all these three aspects. The mapping determines for example which processor unit of<br />

the platform executes what tasks of the application and which communication units realise the<br />

dependencies between the tasks. After developing a model incorporating all three parts, the obtained<br />

performance results may give hints to improving the application, platform and/or mapping.<br />

Iteratively applying such improvements leads to finding an optimal design solution. The goal of<br />

this assignment is to explore the design space of a multiprocessor system according the Y-chart.<br />

The assignment consists of two parts. <strong>Assignment</strong> 2-1 concentrates on searching the design<br />

space for Pareto optimal mappings and platforms for a single execution of a given application.<br />

<strong>Assignment</strong> 2-2 extends the search for an optimal realisation of a streaming or pipelined execution<br />

of the same application, where additional configuration options for the platform (voltage scaling<br />

and operating system type) as well as modification of the application are allowed.<br />

Figure 1: Y-chart approach.<br />

For your design-space exploration activities, a POOSL model is provided, which can be downloaded<br />

from StudyWeb. This handout discusses the provided POOSL model of the considered<br />

multiprocessor system in more detail as well as the actual assignment.<br />

2 Application<br />

A task graph of the application considered in this assignment is depicted in Figure 2. Each node<br />

represents certain functionality (like decoding or filtering) comprising a task that can potentially<br />

1

e executed in parallel with other tasks. The edges represent dependencies between the tasks;<br />

solid lines denote data dependencies, whereas dotted lines indicate control dependencies. Such<br />

dependencies emerge from the communication of information between the tasks, which is implemented<br />

using FIFO buffers. The unit of information that is exchanged between tasks is referred<br />

to as a token, where each token contains 1 Byte of information. The dot with label 4 for buffer<br />

F17 indicates that F17 already contains 4 tokens when the application is started. They allow a<br />

streaming or pipelined execution of the application, where Task1 can be executed at maximum 4<br />

times before Task7 must be executed.<br />

Figure 2: Task graph with data and control dependencies.<br />

Figure 3: Markov chain for determining<br />

scenarios in Task1.<br />

The application in Figure 2 can operate in two modes or scenarios named S1 and S2. The<br />

firing (execution) of a task starts with determining the scenario in which it will operate. Task1<br />

determines the scenario based on the Markov chain (state machine with probabilities) in Figure 3.<br />

This Markov chain models the real functionality of Task1 that interprets certain input data (which<br />

may for example be read from a file) to determine the scenario. Each time Task1 fires, a transition<br />

in the Markov chain is made, where the resulting state determines the scenario. All other tasks<br />

determine the scenario by means of receiving a token from Task1 through their Control port (i.e.,<br />

through the control dependencies in Figure 2). After fixing the scenario, a task continues with<br />

receiving a token on all its other inputs. When all input data has become available, the actual<br />

execution of the task can be performed by the processor node on which the task is mapped. After<br />

completing the execution, the task finalises its firing with producing a token on all its outputs.<br />

For Task1, this also includes sending tokens to the Control port of all other tasks valued with<br />

the scenario in which they operate.<br />

Not all tasks and dependencies are active in both scenarios. Task4 does not perform any<br />

functionality in scenario S2 implying that also no information is communicated through buffers F5<br />

and F12. Moreover, no information is exchanged through F9 in scenario S2. On the other hand,<br />

F11 is inactive in scenario S1. In addition to these variations, the number of tokens produced<br />

and consumed by the tasks using F8 differs for scenarios S1 and S2. A detailed overview of the<br />

resource requirements for the tasks and buffers is given in Tables 1 and 2 respectively. Note that<br />

the execution times in Table 1 are in cycles and not in seconds, see also Section 3.<br />

In <strong>Assignment</strong> 2-1, only a single firing in scenario S1 of each task is considered (the Markov<br />

chain in Figure 3 is not used). In <strong>Assignment</strong> 2-2, the application is to be executed continuously<br />

in a streaming (pipelined) fashion, where all tasks concurrently fire in subsequent scenarios.<br />

Task Graph Transformations <strong>Assignment</strong> 2-1 assumes that the task graph is fixed. For<br />

many applications, it is however possible to adapt the task graph by exploiting for instance task<br />

parallelism. Conversely, some of the overhead (like communication and task switching overhead)<br />

incurred by the parallelisation can be reduced or eliminated by combining tasks. In <strong>Assignment</strong><br />

2

MIPS Scenario S1 Scenario S2<br />

Task Exec. Time (Cycles) Mem. (Bytes) Exec. Time (Cycles) Mem. (Bytes)<br />

Task1 60000 43008 52000 24576<br />

Task2 18000 18432 46000 16384<br />

Task3 24000 36864 55000 49152<br />

Task4 40000 65536 0 0<br />

Task5 85000 20480 65000 28672<br />

Task6 70000 32768 120000 40960<br />

Task7 90000 49152 85000 34816<br />

ARM7 Scenario S1 Scenario S2<br />

Task Exec. Time (Cycles) Mem. (Bytes) Exec. Time (Cycles) Mem. (Bytes)<br />

Task1 40000 32768 48000 34816<br />

Task2 18000 28672 32000 18432<br />

Task3 15500 55296 28000 30720<br />

Task4 24000 24576 0 0<br />

Task5 32500 16384 55000 22528<br />

Task6 64000 20480 46000 36864<br />

Task7 56000 32768 48000 47104<br />

TriMedia Scenario S1 Scenario S2<br />

Task Exec. Time (Cycles) Mem. (Bytes) Exec. Time (Cycles) Mem. (Bytes)<br />

Task1 72000 49152 48000 20480<br />

Task2 15000 24576 32000 12288<br />

Task3 20000 65536 40000 57344<br />

Task4 64000 98304 0 0<br />

Task5 96000 32768 56000 30720<br />

Task6 108000 16384 64000 46080<br />

Task7 80000 12288 98000 22528<br />

Table 1: Resource requirements of the tasks.<br />

2-2, the task graph in Figure 2 is assumed to be the most parallel version of the application.<br />

From this most parallel version, it is allowed to adapt the task graph such that at maximum one<br />

combination of two of the tasks Task2, Task3, Task4, Task5 and Task6 is performed.<br />

Combining tasks affects the control and data dependencies as follows. Since all tasks receive the<br />

same control token indicating the scenario in which they will operate, a single control dependency<br />

is needed from Task1 (instead of two). On the other hand, all data dependencies with other tasks<br />

remain valid, even if this implies multiple data dependencies between two tasks. For example, when<br />

combining Task3 and Task4, one of the control dependencies through F4 and F6 can be removed,<br />

whereas both data dependencies through F3 and F5 must remain to be modelled separately. Instead<br />

of providing a list of profiling information for all allowed task combinations, the following approach<br />

is used to derive the profiling information for the combined task as a rough approximation. On<br />

any processor type and in each scenario, the execution time of the combined task equals the sum of<br />

the execution times of the two original tasks, minus 10% (representing the reduction in overhead).<br />

Similarly, the memory requirement for the combined task equals the maximum of the amount<br />

of memory required by the original tasks, plus the number of tokens communicated between the<br />

original tasks (if any). This approach is illustrated in Figure 4 when combining Task3 and Task4<br />

into TaskX.<br />

3 Platform<br />

The platform considered in this assignment concerns a Network-on-Chip (NoC) based Multi-<br />

Processor System-on-Chip (MPSoC) in a battery-powered embedded device. Four kinds of re-<br />

3

Scenario S1 Scenario S2<br />

Buffer # Tokens # Tokens<br />

F1 2048 2048<br />

F2 1 1<br />

F3 1024 1024<br />

F4 1 1<br />

F5 2048 0<br />

F6 1 1<br />

F7 1 1<br />

F8 1024 2048<br />

Scenario S1 Scenario S2<br />

Buffer # Tokens # Tokens<br />

F9 4096 0<br />

F10 2048 2048<br />

F11 0 4096<br />

F12 4096 0<br />

F13 1 1<br />

F14 3072 3072<br />

F15 1024 1024<br />

F16 1 1<br />

F17 1 1<br />

Table 2: Resource requirements of the buffers (Each token is 1 byte).<br />

MIPS Scenario S1 Scenario S2<br />

Task Exec. Time (Cycles) Mem. (Bytes) Exec. Time (Cycles) Mem. (Bytes)<br />

TaskX 57600 65536 49500 49152<br />

Figure 4: Transformed task graph with derived profiling information for a MIPS.<br />

sources can be distinguished: processor units, communication units, storage units and an energy<br />

source. The considered platform includes one energy resource (the battery), up to four processor<br />

nodes and a NoC. The NoC of the considered platform provides point-to-point connections with<br />

a guaranteed bandwidth and latency. Each node includes a processor unit that runs an operating<br />

system on which tasks can be mapped. The processor unit has a private storage unit (data<br />

memory) to store the code and context of the tasks. Moreover, a node includes a communication<br />

unit on which buffers can be mapped that realise dependencies between the tasks that are mapped<br />

on the processor unit. This communication unit has a private storage unit (buffer memory) to<br />

store the information in the buffers mapped onto the node. On the other hand, the NoC only<br />

includes a communication unit and a storage unit for mapping dependencies between tasks that<br />

are mapped onto different nodes. Any processor, communication and storage unit drains energy<br />

from the battery when it is used.<br />

The platform has several parameters that can be set to obtain an optimal realisation of the<br />

multiprocessor system. Next to the number of processor nodes that can be used, there is a choice<br />

of three different processor types: MIPS, ARM7 and TriMedia 1 . Each processor type has different<br />

characteristics, which are summarised in Table 3. The frequency in Table 3 denotes the base<br />

frequency of the processor unit, while the context switching time refers to the number of cycles<br />

it takes to initialise the execution of another task. The power consumption refers to the power<br />

1 The processor specifications used in this assignment are fictitious. The names do not refer to real processors.<br />

4

MIPS<br />

Frequency 167000000 Cyles per Second<br />

Context Switching Time 1500 Cycles<br />

Power Consumption 0.075 Watt<br />

ARM7<br />

Frequency 100000000 Cyles per Second<br />

Context Switching Time 1200 Cycles<br />

Power Consumption 0.07 Watt<br />

TriMedia<br />

Frequency 200000000 Cyles per Second<br />

Context Switching Time 3200 Cycles<br />

Power Consumption 0.085 Watt<br />

Table 3: Processor specifications.<br />

that is used when the processor is switching tasks or executing a task. Another parameter for<br />

each node is the type of scheduler in the operating system that runs on the processor. The<br />

alternatives are FCFS (representing a first-come first serve scheduling policy without preemption)<br />

and PB (denoting a priority-based scheduling policy with preemption). For the latter type of<br />

scheduler, each task of the application must have a priority assigned to it, ranging from 1 to 7<br />

(higher number means higher priority). The final parameter of a node is the voltage scaling factor<br />

(sV ), which scales the base frequency and power consumption of the processor. Valid voltage<br />

scaling factors for any processor type are 1/4, 1/3, 1/2, 2/3, 3/4 and 1/1. Although the platform<br />

has some other parameters (like bandwidth per connection realised by a communication unit or the<br />

power consumption per communicated and stored Byte), they should remain unchanged during the<br />

assignment. <strong>Assignment</strong> 2-1 only allows changing the number of nodes and the type of processors,<br />

while <strong>Assignment</strong> 2-2 also allows altering the operating system type (including priorities) and<br />

voltage scaling factor.<br />

Operating System The two types of schedulers that can be used for operating system running<br />

on a processor are discussed in a bit more detail. A FCFS scheduler uses a FIFO queue to store ready<br />

tasks, which denote the execution requests that are obtained from the different tasks that are ready<br />

to execute. These requests are put into the queue in the order of reception. In case a request<br />

is available in the queue, the first execution request is granted by executing the corresponding<br />

task without interruptions. An advantage of this type of scheduler is that it is relatively easy to<br />

implement. A disadvantage is that a higher priority task from which an execution request was<br />

received later than a low priority task has to wait until execution of the lower priority task is<br />

finished. The PB scheduler overcomes the latter disadvantage by allowing preemption of any lower<br />

priority task. Instead of a FIFO queue, a PB scheduler maintains a list of execution requests that<br />

is ordered 2 according to the priorities of the involved tasks. Though being more influenceable by<br />

a programmer, a PB scheduler is slightly more difficult to implement. Moreover, preempting a<br />

running task comes at the price of additional context switching, while the memory used by a task<br />

is only freed after completing its execution. Many other schedulers exist for operating systems,<br />

often being much more complex. In the first part of the assignment, only PB schedulers are used,<br />

where the priority of Taski is set equal to i.<br />

Voltage Scaling Changing the supply voltage of the processor units allows trading the energy<br />

used for a given computation load against the execution time. To explain voltage scaling in<br />

more detail, consider that the clock frequency f at which an integrated circuit can operate is<br />

roughly proportional to the supply voltage V for some constant Kf , that is f = Kf · V . Recall<br />

that the energy stored in a capacitor C equals 1<br />

2 · C · V 2 (i.e., proportional to the square of the<br />

2 The PB scheduler orders multiple tasks with equal priority in a (non-preemptive) FIFO fashion.<br />

5

voltage over the capacitor). Therefore, the power P consumed by a processor as the result of<br />

charging and discharging connections is proportional to the square of its supply voltage as well<br />

as to the frequency at which this takes place: P = KP · V 2 · f for some constant KP . As a<br />

result, it can be advantageous to reduce the supply voltage to a processor if the processor has idle<br />

time; frequency (execution speed) can be exchanged for smaller power consumption. Suppose a<br />

processor needs to perform a certain workload of W cycles as indicated in Table 1. The time t<br />

to perform this workload is t = W/f = W/(Kf · V ). The energy E used during that time equals<br />

E = P · t = KP · V 2 · f · W/f = KP · V 2 · W . Now, assume that VB denotes the base supply voltage<br />

and sV the voltage scaling factor, that is V = sV · VB. Then, E = KP · (sV · VB) 2 · W = s2 V · EB,<br />

where EB = KP · V 2 B · W being the base energy used for workload W . The time it takes to execute<br />

the task is t = W/(Kf · V ) = W/(Kf · sV · VB) = tB/sV , where tB = W/(Kf · VB) is the execution<br />

time for the base frequency and supply voltage. Thus, scaling the voltage by a factor sV increases<br />

the execution time by a factor 1/sV and saves energy by a factor of s2 V . From an energy point of<br />

view, a parallel implementation on multiple, slower processors is therefore preferred over a single<br />

very fast processor. This is one of the primary motivations for using MPSoCs. Note also that a<br />

solution that is too fast can be improved by decreasing the voltage scaling factor to make it slower,<br />

while also reducing power consumption. Similarly, a solution that is too slow can be made faster<br />

by increasing the voltage scale factor at the expense of additional power consumption.<br />

4 Mapping<br />

Given a particular (set of) application(s) and platform, the mapping describes which processor<br />

unit, communication unit and storage unit is/are used to realise the execution of tasks and the<br />

communication of tokens. For the considered multiprocessor system, it is only necessary to specify<br />

which tasks of the application are mapped onto which processor nodes since the mapping of<br />

buffers as well as which storage units are used to store what information can be derived from<br />

that automatically. As discussed in Section 3, processor units and communication units have<br />

private memories to store the tasks and tokens respectively. Moreover, each node includes a<br />

communication unit that realises all dependencies between tasks that are mapped on that node,<br />

while all dependencies between tasks mapped on different nodes must be realised by the NoC.<br />

5 Performance Metrics<br />

Application Next to the task graph, an application is characterised by a number of performance<br />

constraints. The following constraints are to be satisfied:<br />

• Latency: ≤ 1/500 seconds;<br />

• Throughput: ≥ 800 Firings of Task7 per second;<br />

• Deadline Miss Probability: ≤ 5%.<br />

Latency is defined here as the time between starting the first firing of Task1 and the corresponding<br />

completion of Task7. In general, the latency can be larger than the time between two<br />

executions of Task1 in case of streaming (pipelined) execution. The throughput indicates the<br />

average number of firings of Task7 per second in such case. Since the application can operate<br />

in different scenarios, there is some variation in the latency possible. Hence, the deadline for the<br />

next completion of Task7 may be missed, but on average, not more than 5% of those completions<br />

are allowed to be late.<br />

Platform Apart from the constraints for the application, there are some optimisation criteria for<br />

the platform. The optimisation criteria for the platform are (in order of importance): (1) energy or<br />

power consumption and (2) amount of resources. The latter refers, amongst others, to the number<br />

of processor units, the size of storage units and the number of concurrent connections to be served<br />

6

y communication units. The overall goal therefore is to look for the minimal configuration of<br />

the system that satisfies the constraints, thereby consuming as little power as possible. Hence, it<br />

makes sense to define some performance metrics for the platform to identify bottlenecks:<br />

• Processor Unit: average utilisation;<br />

• Storage Unit: maximum and average occupancy;<br />

• Communication Unit: maximum and average number of concurrently served connections;<br />

• Battery: peak and average power consumption, where the latter equals the amount of<br />

energy consumed in 1 second.<br />

6 <strong>Assignment</strong><br />

This assignment consists of two parts. The provided POOSL model, which is discussed in more<br />

detail in Section 7, forms the starting point for both parts.<br />

Part 1 <strong>Assignment</strong> 2-1 aims at exploring the trade-off between energy consumption, latency<br />

and number of processor nodes for alternative mappings and processor nodes of different types,<br />

considering a single execution of each task of the application in scenario S1. Use the provided<br />

POOSL model to explore the space of potential solutions that satisfy the latency and deadline<br />

miss constraints for the application and determine a set of feasible, Pareto-optimal configurations<br />

(platform description + mapping).<br />

Recall that in this part of the assignment, the processor units may only use the PB scheduler<br />

type of operating system (where the priority of Taski equals i) and the voltage scaling factors<br />

equal 1/1. Transformation of the task graph is also not considered in this part.<br />

Part 2 In <strong>Assignment</strong> 2-2, the design space is extended with the possibility to transform the<br />

task graph, alternative operating systems (including priorities) and voltage scaling options (which<br />

can be set individually for each of the different nodes). The goal is to find a single optimal solution<br />

in terms of power consumption and amount of resources, subject to the throughput and deadline<br />

miss constraints for the application, which is now executed in a streaming fashion.<br />

Ultimately, 2 June at 9.00h AM, you need to have delivered a short report on your findings<br />

(max. 8 pages, excluding graphs) together with at least the POOSL models that represent your<br />

optimal design solution(s) for both assignment parts, by means of submission to StudyWeb. Please<br />

submit your work as a single zip archive via the <strong>Assignment</strong>2/Submit folder. Studyweb<br />

confirms the correct submission of your work. It is then timestamped and moved to a folder not<br />

visible to you. Please do not resubmit if Studyweb confirmsthe sucessful submission of you file.<br />

Taking the guidelines for documenting experimental research into account, the (individually)<br />

written report should elaborate on at least following aspects for both parts of the assignment:<br />

• The tradeoffs that you have discovered and the resulting design solution(s)<br />

• The approach you used to search the design space for finding your design solution(s), including<br />

an argumentation why you selected this approach<br />

• The impact of streaming execution, task graph transformations, different operating system<br />

types and voltage scaling (Discuss your hypotheses about these aspects in relation to results<br />

you obtained for <strong>Assignment</strong> 2-1)<br />

• The adequacy of abstractions made in the provided model regarding the platform. So,<br />

how well does the model represent a real battery-powered NoC-based MPSoC? One may<br />

for example investigate what abstractions are made in the NoC model for <strong>Assignment</strong> 2 in<br />

7

comparison to those used in <strong>Assignment</strong> 1 and what impact these abstractions may have on<br />

the obtained performance results<br />

If you still have questions, you may contact Bart Theelen (B.D.Theelen@tue.nl) or Marc<br />

Geilen (M.C.W.Geilen@tue.nl). You may also exploit the office hours every Wednesday between<br />

12:30h and 13:30h in PT 9.19. Please announce your visit via B.D.Theelen@tue.nl.<br />

7 Provided Model<br />

This section discusses the provided POOSL model and how it can be used to complete the assignment.<br />

The screen shot of SHESim in Figure 5 shows that it includes a representation of<br />

the Application and the NoC-based MPSoC platform. The SimulationController process is<br />

concerned with terminating the simulation whenever the estimation results for all average performance<br />

metrics have become accurate and is not really a part of the system. It also terminates the<br />

simulation if not all estimations have become accurate after simulating 50 units of model time.<br />

7.1 Application Model<br />

Figure 5: Top-level diagram for the provided model.<br />

The Application cluster models the task graph of Figure 2 and has the following instantiation<br />

parameters that are relevant for performing the assignment:<br />

• Iterate - controls whether to execute the task graph in a streaming fashion or not. Iterate<br />

should be set to false for <strong>Assignment</strong> 2-1 and to true in <strong>Assignment</strong> 2-2;<br />

• MapTaskiTo - indicates on which processor node task i is mapped and hence, the possible<br />

values are "Node1", "Node2", "Node3" and "Node4" (Note that these are Strings; don’t<br />

forget to include the double quotes);<br />

• PriorityTaski - is the integer priority of task i, where a higher number refers to a higher<br />

priority.<br />

The Application cluster captures the structure of processes modelling the tasks and buffers<br />

of Figure 2. The process Task7 includes a monitor to evaluate the percentage of deadline misses<br />

and the throughput metric defined in Section 5. In addition, the latency metric is evaluated in<br />

8

case the Iterate instantiation parameters equals false. The monitoring results can be observed<br />

by inspecting the instance variable Status. On the other hand, the estimation results are logged<br />

to a file named Application.log. Note that no throughput estimation can be given for a single<br />

iteration of the application (i.e., for <strong>Assignment</strong> 2-1).<br />

Modelling Combined Tasks When performing task graph transformations, it is necessary to<br />

adapt process class Task1 and define a new process class for the combined task before instantiating<br />

it in the Application cluster. To enable doing so, the modelled protocols for communicating with<br />

buffers and processor nodes must be obeyed. Note that you must have opened the cluster<br />

class browser on Application when modifying class Task1 because of a bug in SHESim.<br />

Modifying Task1 is relatively easy and only involves changes to the NotifyBuffersAbout-<br />

Mapping, Reserve<strong>Space</strong>ForWrites and PerformWrites methods to ensure that communication<br />

through the control dependency in Figure 2 that has become obsolete is not performed anymore.<br />

For any task, the method NotifyBuffersAboutMapping enables automatic determination<br />

of the mapping of the buffers connected to it. Hence, for Task1, it is necessary to remove the<br />

concurrent activity on sending of the MappedTo message to the involved obsolete buffer. In the<br />

Reserve<strong>Space</strong>ForWrites, the concurrent activity on sending the ReserveRoom message and consecutively<br />

receiving the ReservationSuccessful message must be removed. Finally, in method<br />

PerformWrites, the statement for sending the WriteToken message must be removed.<br />

Defining a process class (let’s assume that it is called TaskX) to model the new combined<br />

task can best be based on defining a subclass of Task (and using an existing Task class definition<br />

as a reference). This process class provides a template that is used for defining all other<br />

tasks (except for Task1) in the provided model. When doing so, defining class TaskX only involves<br />

overloading methods NotifyBuffersAboutMapping, CheckTokenAvailabilityForReads,<br />

Reserve<strong>Space</strong>ForWrites, Release<strong>Space</strong>ForReads and PerformWrites while the reception of<br />

the token from the Control port and the communication with the processor node is already<br />

defined in the superclass Task. Method NotifyBuffersAboutMapping must include a concurrent<br />

activity for informing each buffer connected to TaskX about its mapping. This concurrent<br />

activity concerns the sending of a message MappedTo with MapTo as parameter. The method<br />

CheckTokenAvailabilityForReads models the request and acknowledgement with the buffer at<br />

each input of TaskX about the availability of a token. The protocol prescribes to send the request as<br />

a message InspectTokenAvailability and then receive the acknowledgement by means of a message<br />

TokenAvailable with some parameter of class Token. The method Reserve<strong>Space</strong>ForWrites<br />

involves the specification of a concurrent activity for informing the buffer at each output of TaskX<br />

about the intention to write a token after completing execution. Such a concurrent activity models<br />

the request and acknowledgement regarding the reservation of buffer space. The protocol<br />

prescribes to send the request by means of a message Reserve<strong>Space</strong> with a parameter that represents<br />

the token to be written (or more precisely, the size of the token to be written). To this<br />

end, a new object of class Token must be created and its size in Bytes (see also Table 2) must be<br />

initialised using data method setSize. The acknowledgement is modelled by receiving message<br />

ReservationSuccessful. The method Release<strong>Space</strong>ForReads models informing the buffer at<br />

all inputs about the completion of the execution of the task and hence that the space for the<br />

read token can be released by sending a message Release<strong>Space</strong> to all inputs. Finally, method<br />

PerformWrites informs the buffer at all outputs about the fact that the produced tokens have<br />

become available for consumption by the target tasks.<br />

After defining process class TaskX, an instance can be created in the Application. What remains<br />

to be done is properly connecting the new process with the involved buffers and initialising its<br />

instantiation parameters. Instantiation parameter Name should be a String indicating the task’s<br />

name, that must match with the new entry in the processor specification files MIPS.txt, ARM7.txt<br />

and TriMedia.txt, see also Section 7.2. The instantiation parameters MapTo and Priority initialise<br />

the processor node to which the new task is mapped and the priority of the task respectively.<br />

9

7.2 Platform Model<br />

Figure 6: Cluster class Platform.<br />

The MPSoC cluster in Figure 5 models the considered battery-powered NoC-based MPSoC platform<br />

with at maximum four processor nodes. Figure 6 gives a screen dump of the Platform cluster<br />

class definition indicating the models of the battery, NoC and four processor nodes. Note that<br />

although four processor nodes (i.e., Node1, Node2, Node3 and Node4) are instantiated in the<br />

Platform model, the mapping determines how many nodes are actually used 3 . The process<br />

PlatformMonitor is concerned with terminating the simulation whenever the estimation results<br />

for all average performance metrics have become accurate and is not really a part of the system.<br />

The ProcessorNode cluster class has the following relevant instantiation parameters:<br />

• ProcessorType - denotes the type of processor unit in the node. The possible values are<br />

"MIPS", "ARM7" and "TriMedia" (Note that these are Strings);<br />

• OSPolicy - indicates the type of scheduler for the operating system running on the processor<br />

unit. The possible values are "PB" and "FCFS" (Note that these are Strings);<br />

• VoltageScaleFactor - concerns the voltage scaling factor. Possible values are 1/4, 1/3,<br />

1/2, 2/3, 3/4 and 1/1 (Note that these must be Reals and hence, use e.g. 1/1 or 1.0).<br />

The Battery includes a monitor to evaluate the performance metrics defined in Section 5<br />

by means of the Status instance variable. The estimation results for these performance metrics<br />

are also logged in the file Battery.log. Note that a single iteration of the application will not<br />

provide sufficient information to properly estimate the average power consumption with a detailed<br />

accuracy indication. In this case, only the average power consumption that was observed during<br />

the particular simulation run is provided without any accuracy details. The other resources of<br />

the platform also include monitors, though whether they produce results depends on whether<br />

something is mapped onto the involved resource. The processor utilisation and data memory<br />

occupancy results are logged in files named ProcessorNodei.log, where i is the number of the<br />

node. On the other hand, the utilisation of the communication unit and buffer memory occupancy<br />

results are logged in files named CommunicationNodei.log, where i is again the number of the<br />

3 The instantiated processor, memory and communication resources of an unused node will not consume power.<br />

10

node. Finally, the utilisation of the communication unit and buffer memory occupancy results for<br />

the NoC are logged in file named CommunicationNoC.log.<br />

Processor Specifications The specification of the processor types and corresponding profiling<br />

information for the different tasks in Tables 3 and 1 are stored in the files MIPS.txt, ARM7.txt<br />

and TriMedia.txt. When simulating with SHESim, these 3 processor specification files must be<br />

located in the directory where the image file (originally named SHESim.im) is located. When using<br />

the Rotalumis tool, the 3 processor specification files must be located in the directory where the<br />

exported model file with extension .p4r (default name is model.p4r) is located.<br />

Specifying Combined Tasks When performing task graph transformations, it is necessary to<br />

add the profiling information derived for the combined task as illustrated in Figure 4 to the files<br />

MIPS.txt, ARM7.txt and TriMedia.txt in a similar way as for the original tasks. Ensure that<br />

you use the same task name (between double quotes) in the first column as you used to initialise<br />

the instantiation parameter of the process modelling the new task in the Application.<br />

11