Download Chapters 3-6 (.PDF) - ODBMS

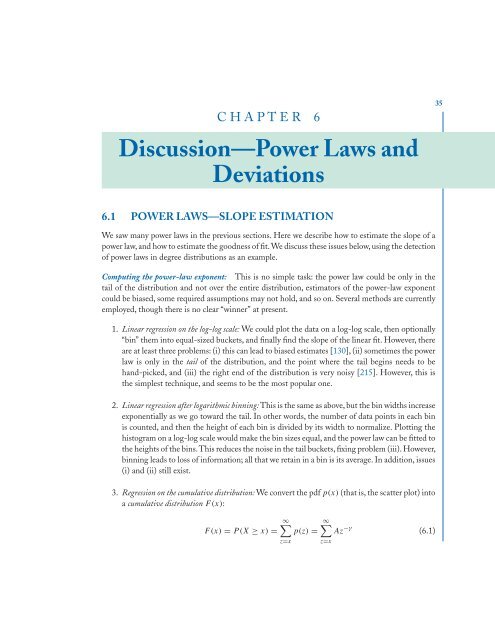

CHAPTER 6<br />

Discussion—Power Laws and Deviations<br />

6.1 POWER LAWS—SLOPE ESTIMATION<br />

We saw many power laws in the previous sections. Here we describe how to estimate the slope of a<br />

power law, and how to estimate the goodness of fit. We discuss these issues below, using the detection<br />

of power laws in degree distributions as an example.<br />

Computing the power-law exponent: This is no simple task. The power law could hold only in the<br />

tail of the distribution and not over the entire distribution, estimators of the power-law exponent<br />

could be biased, some required assumptions may not hold, and so on. Several methods are currently<br />

employed, though there is no clear “winner” at present.<br />

1. Linear regression on the log-log scale: We could plot the data on a log-log scale, then optionally<br />

“bin” them into equal-sized buckets, and finally find the slope of the linear fit. However, there<br />

are at least three problems: (i) this can lead to biased estimates [130], (ii) sometimes the power<br />

law is only in the tail of the distribution, and the point where the tail begins needs to be<br />

hand-picked, and (iii) the right end of the distribution is very noisy [215]. Even so, this is<br />

the simplest technique, and seems to be the most popular one.<br />

2. Linear regression after logarithmic binning: This is the same as above, but the bin widths increase<br />

exponentially as we go toward the tail. In other words, the number of data points in each bin<br />

is counted, and then the height of each bin is divided by its width to normalize. Plotting the<br />

histogram on a log-log scale would make the bin sizes equal, and the power law can be fitted to<br />

the heights of the bins. This reduces the noise in the tail buckets, fixing problem (iii). However,<br />

binning leads to loss of information; all that we retain in a bin is its average. In addition, issues<br />

(i) and (ii) still exist.<br />

3. Regression on the cumulative distribution: We convert the pdf p(x) (that is, the scatter plot) into<br />

a cumulative distribution F(x):<br />

$$F(x) = P(X \ge x) = \sum_{z=x}^{\infty} p(z) = \sum_{z=x}^{\infty} A z^{-\gamma} \qquad (6.1)$$<br />
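The three regression approaches listed so far can be compared on synthetic data. The following is a minimal Python sketch using NumPy, not the authors' code. All function names, the sampler, the choice of powers of two as bin edges, and the `min_count` threshold are assumptions made for illustration. The sampler draws from a continuous power law and rounds down, so that P(X ≥ k) = k^-(γ-1) holds exactly at integer k while the point probabilities follow k^-γ only approximately for large k. Because the sum in Equation (6.1) decays roughly like x^-(γ-1), the slope fitted to the cumulative distribution is expected to be near -(γ-1) rather than -γ.

```python
import numpy as np


def sample_degrees(n, gamma, seed=0):
    """Hypothetical sampler: integer 'degrees' with P(X >= k) = k**-(gamma - 1)."""
    rng = np.random.default_rng(seed)
    u = rng.random(n)  # uniform on [0, 1)
    # Inverse-transform sample of a continuous power law with minimum 1,
    # then round down to get integers (assumes gamma is well above 1).
    x = (1.0 - u) ** (-1.0 / (gamma - 1.0))
    return np.floor(x).astype(np.int64)


def slope_raw_histogram(x):
    """Method 1: least-squares line through (log value, log count), no binning."""
    values, counts = np.unique(x, return_counts=True)
    slope, _ = np.polyfit(np.log(values), np.log(counts), 1)
    return slope


def slope_log_binned(x, min_count=5):
    """Method 2: bins [1,2), [2,4), [4,8), ...; heights divided by bin width."""
    k = int(x.max()).bit_length()          # 2**k is strictly above the maximum
    edges = 2.0 ** np.arange(k + 1)
    counts, _ = np.histogram(x, bins=edges)
    widths = np.diff(edges)
    centers = np.sqrt(edges[:-1] * edges[1:])  # geometric bin centers
    keep = counts >= min_count             # drop near-empty tail bins (assumed threshold)
    density = counts[keep] / widths[keep]
    slope, _ = np.polyfit(np.log(centers[keep]), np.log(density), 1)
    return slope


def slope_ccdf(x):
    """Method 3: line through the empirical F(x) = P(X >= x) on log-log axes."""
    values, counts = np.unique(x, return_counts=True)
    # Reverse cumulative sum: number of samples greater than or equal to each value.
    ccdf = counts[::-1].cumsum()[::-1] / x.size
    slope, _ = np.polyfit(np.log(values), np.log(ccdf), 1)
    return slope


if __name__ == "__main__":
    x = sample_degrees(100_000, gamma=2.5)
    print("raw histogram slope:", slope_raw_histogram(x))
    print("log-binned slope:   ", slope_log_binned(x))
    print("CCDF slope:         ", slope_ccdf(x), "(gamma estimate: 1 - slope)")
```

On data like this, the unbinned fit is usually pulled toward a shallower slope by the many tail values that occur only once, which illustrates problem (iii). Neither the binned fit nor the cumulative fit addresses the need to choose where the tail begins, since both sketches regress over the whole range.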
