Data Encryption Based On Protein Synthesis - Nguyen Dang Binh

Data Encryption Based On Protein Synthesis - Nguyen Dang Binh

Data Encryption Based On Protein Synthesis - Nguyen Dang Binh

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

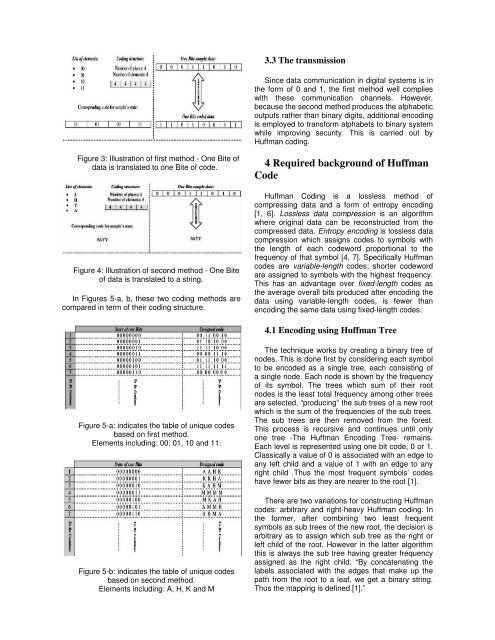

Figure 3: Illustration of first method - <strong>On</strong>e Bite of<br />

data is translated to one Bite of code.<br />

Figure 4: Illustration of second method - <strong>On</strong>e Bite<br />

of data is translated to a string.<br />

In Figures 5-a, b, these two coding methods are<br />

compared in term of their coding structure.<br />

Figure 5-a: indicates the table of unique codes<br />

based on first method.<br />

Elements including: 00, 01, 10 and 11.<br />

Figure 5-b: indicates the table of unique codes<br />

based on second method.<br />

Elements including: A, H, K and M<br />

3.3 The transmission<br />

Since data communication in digital systems is in<br />

the form of 0 and 1, the first method well complies<br />

with these communication channels. However,<br />

because the second method produces the alphabetic<br />

outputs rather than binary digits, additional encoding<br />

is employed to transform alphabets to binary system<br />

while improving security. This is carried out by<br />

Huffman coding.<br />

4 Required background of Huffman<br />

Code<br />

Huffman Coding is a lossless method of<br />

compressing data and a form of entropy encoding<br />

[1, 6]. Lossless data compression is an algorithm<br />

where original data can be reconstructed from the<br />

compressed data. Entropy encoding is lossless data<br />

compression which assigns codes to symbols with<br />

the length of each codeword proportional to the<br />

frequency of that symbol [4, 7]. Specifically Huffman<br />

codes are variable-length codes; shorter codeword<br />

are assigned to symbols with the highest frequency.<br />

This has an advantage over fixed-length codes as<br />

the average overall bits produced after encoding the<br />

data using variable-length codes, is fewer than<br />

encoding the same data using fixed-length codes.<br />

4.1 Encoding using Huffman Tree<br />

The technique works by creating a binary tree of<br />

nodes. This is done first by considering each symbol<br />

to be encoded as a single tree, each consisting of<br />

a single node. Each node is shown by the frequency<br />

of its symbol. The trees which sum of their root<br />

nodes is the least total frequency among other trees<br />

are selected, “producing” the sub trees of a new root<br />

which is the sum of the frequencies of the sub trees.<br />

The sub trees are then removed from the forest.<br />

This process is recursive and continues until only<br />

one tree -The Huffman Encoding Tree- remains.<br />

Each level is represented using one bit code, 0 or 1.<br />

Classically a value of 0 is associated with an edge to<br />

any left child and a value of 1 with an edge to any<br />

right child .Thus the most frequent symbols’ codes<br />

have fewer bits as they are nearer to the root [1].<br />

There are two variations for constructing Huffman<br />

codes: arbitrary and right-heavy Huffman coding. In<br />

the former, after combining two least frequent<br />

symbols as sub trees of the new root, the decision is<br />

arbitrary as to assign which sub tree as the right or<br />

left child of the root. However in the latter algorithm<br />

this is always the sub tree having greater frequency<br />

assigned as the right child. “By concatenating the<br />

labels associated with the edges that make up the<br />

path from the root to a leaf, we get a binary string.<br />

Thus the mapping is defined [1].”