CRANFIELD UNIVERSITY Eleni Anthippi Chatzimichali ...

CRANFIELD UNIVERSITY Eleni Anthippi Chatzimichali ...

CRANFIELD UNIVERSITY Eleni Anthippi Chatzimichali ...

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

1.6.2 k-fold Cross-Validation<br />

Cross-validation (Wold, 1978) is the most popular validation technique. In<br />

-fold cross-validation, the entire dataset is randomly split into mutually exclusive<br />

subsets – the folds – of approximately equal size (Kohavi, 1995; Duan et al., 2003;<br />

Izenman, 2008). The algorithm of -fold cross-validation performs iterations in<br />

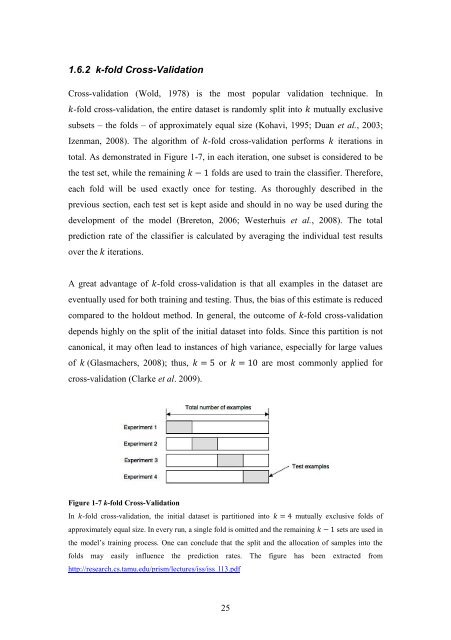

total. As demonstrated in Figure 1-7, in each iteration, one subset is considered to be<br />

the test set, while the remaining folds are used to train the classifier. Therefore,<br />

each fold will be used exactly once for testing. As thoroughly described in the<br />

previous section, each test set is kept aside and should in no way be used during the<br />

development of the model (Brereton, 2006; Westerhuis et al., 2008). The total<br />

prediction rate of the classifier is calculated by averaging the individual test results<br />

over the iterations.<br />

A great advantage of -fold cross-validation is that all examples in the dataset are<br />

eventually used for both training and testing. Thus, the bias of this estimate is reduced<br />

compared to the holdout method. In general, the outcome of -fold cross-validation<br />

depends highly on the split of the initial dataset into folds. Since this partition is not<br />

canonical, it may often lead to instances of high variance, especially for large values<br />

of (Glasmachers, 2008); thus, or are most commonly applied for<br />

cross-validation (Clarke et al. 2009).<br />

Figure 1-7 k-fold Cross-Validation<br />

In -fold cross-validation, the initial dataset is partitioned into mutually exclusive folds of<br />

approximately equal size. In every run, a single fold is omitted and the remaining sets are used in<br />

the model’s training process. One can conclude that the split and the allocation of samples into the<br />

folds may easily influence the prediction rates. The figure has been extracted from<br />

http://research.cs.tamu.edu/prism/lectures/iss/iss_l13.pdf<br />

25