Pit Pattern Classification in Colonoscopy using Wavelets - WaveLab


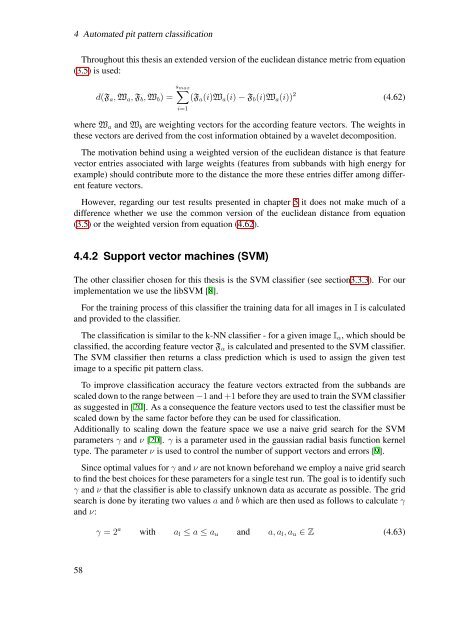

4 Automated pit pattern classification

Throughout this thesis, an extended version of the Euclidean distance metric from equation (3.5) is used:

$$d(F_a, W_a, F_b, W_b) = \sum_{i=1}^{s_{\max}} \bigl(F_a(i)\,W_a(i) - F_b(i)\,W_b(i)\bigr)^2 \tag{4.62}$$

where $W_a$ and $W_b$ are weighting vectors for the corresponding feature vectors. The weights in these vectors are derived from the cost information obtained by a wavelet decomposition.

The motivation behind using a weighted version of the Euclidean distance is that feature vector entries associated with large weights (for example, features from subbands with high energy) should contribute more to the distance the more these entries differ among different feature vectors.
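The effect of the weights can be illustrated with a minimal Python sketch. It assumes that each feature vector is multiplied element-wise by its own weighting vector and that, as in equation (4.62), the squared differences are summed without taking a square root. The function name `weighted_distance` and the toy vectors are hypothetical and only serve as an illustration.

```python
from typing import Sequence


def weighted_distance(f_a: Sequence[float], w_a: Sequence[float],
                      f_b: Sequence[float], w_b: Sequence[float]) -> float:
    """Sum of squared differences between the weighted feature vectors."""
    if not (len(f_a) == len(w_a) == len(f_b) == len(w_b)):
        raise ValueError("all four vectors must have the same length")
    return sum((fa * wa - fb * wb) ** 2
               for fa, wa, fb, wb in zip(f_a, w_a, f_b, w_b))


# Hypothetical toy vectors: both entries differ by 1.0, but the second
# entry carries the larger weight and therefore dominates the distance.
f_a, f_b = [1.0, 1.0], [2.0, 2.0]
weights = [0.5, 2.0]
d_weighted = weighted_distance(f_a, weights, f_b, weights)
d_plain = weighted_distance(f_a, [1.0, 1.0], f_b, [1.0, 1.0])
```

With all weights set to 1, the function reduces to the unweighted sum of squared differences. With the weights above, the second entry contributes 4.0 of the total 4.25, although both entries differ by the same amount.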

However, the test results presented in chapter 5 show that it does not make much of a difference whether we use the common version of the Euclidean distance from equation (3.5) or the weighted version from equation (4.62).

4.4.2 Support vector machines (SVM)

The other classifier chosen for this thesis is the SVM classifier (see section 3.3.3). Our implementation uses libSVM [8].

For the training process of this classifier, the training data for all images in $I$ is calculated and provided to the classifier.

The classification is similar to that of the k-NN classifier: for a given image $I_\alpha$ that is to be classified, the corresponding feature vector $F_\alpha$ is calculated and presented to the SVM classifier. The SVM classifier then returns a class prediction, which is used to assign the given test image to a specific pit pattern class.

To improve classification accuracy, the feature vectors extracted from the subbands are scaled to the range between −1 and +1 before they are used to train the SVM classifier, as suggested in [20]. As a consequence, the feature vectors used to test the classifier must be scaled by the same factor before they can be used for classification.
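The scaling step can be sketched as follows. This is a minimal illustration, assuming a per-feature linear mapping in which the minimum and maximum observed in the training set are mapped to −1 and +1; the text only states that the same factor must be reused for the test vectors. The helper names `fit_scaling` and `apply_scaling` and the sample data are hypothetical.

```python
from typing import List, Sequence, Tuple


def fit_scaling(train: Sequence[Sequence[float]]) -> List[Tuple[float, float]]:
    """Return the (min, max) of every feature column of the training set."""
    return [(min(col), max(col)) for col in zip(*train)]


def apply_scaling(vector: Sequence[float],
                  ranges: Sequence[Tuple[float, float]]) -> List[float]:
    """Map each feature linearly so that the training range becomes [-1, +1]."""
    scaled = []
    for value, (lo, hi) in zip(vector, ranges):
        if hi == lo:
            scaled.append(0.0)  # constant feature: no spread to scale
        else:
            scaled.append(-1.0 + 2.0 * (value - lo) / (hi - lo))
    return scaled


# Hypothetical training set with two features per vector.
train = [[0.0, 10.0], [5.0, 30.0], [10.0, 20.0]]
ranges = fit_scaling(train)  # computed from the training data only
train_scaled = [apply_scaling(v, ranges) for v in train]

# The test vector reuses the training ranges instead of computing its own.
test_scaled = apply_scaling([2.5, 40.0], ranges)
```

Because the ranges come from the training data alone, a test feature that lies outside the training range (40.0 in the example) is mapped outside [−1, +1]. This is expected when the same scaling is reused.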

In addition to scaling the feature space, we use a naive grid search for the SVM parameters γ and ν [20]. γ is a parameter of the Gaussian radial basis function kernel. The parameter ν controls the number of support vectors and errors [9].
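A naive grid search over two parameters can be sketched as below. This is not the implementation used in the thesis: the callback `evaluate`, which stands in for training an SVM with a given (γ, ν) pair and measuring its accuracy, is hypothetical, and the candidate values are chosen arbitrarily for the example.

```python
from itertools import product
from typing import Callable, Iterable, Tuple


def grid_search(gammas: Iterable[float], nus: Iterable[float],
                evaluate: Callable[[float, float], float]
                ) -> Tuple[float, float, float]:
    """Try every (gamma, nu) pair and return the best pair with its score."""
    best = None
    for gamma, nu in product(gammas, nus):
        score = evaluate(gamma, nu)
        if best is None or score > best[2]:
            best = (gamma, nu, score)
    if best is None:
        raise ValueError("empty parameter grid")
    return best


# Hypothetical stand-in for "train an SVM and measure its accuracy";
# by construction it peaks at gamma = 0.5 and nu = 0.25.
def toy_accuracy(gamma: float, nu: float) -> float:
    return 1.0 - abs(gamma - 0.5) - abs(nu - 0.25)


gammas = [2.0 ** a for a in range(-3, 2)]  # 0.125, 0.25, 0.5, 1.0, 2.0
nus = [0.125, 0.25, 0.5]                   # arbitrary example candidates
best_gamma, best_nu, best_score = grid_search(gammas, nus, toy_accuracy)
```

The search is exhaustive, so its cost grows with the product of the two candidate list lengths. In practice the score would typically come from held-out or cross-validated data rather than from the data used for training.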

Since optimal values for γ and ν are not known beforehand, we employ a naive grid search to find the best choices for these parameters for a single test run. The goal is to identify values of γ and ν with which the classifier classifies unknown data as accurately as possible. The grid search iterates over two values a and b, which are then used as follows to calculate γ and ν:

$$\gamma = 2^{a} \quad \text{with } a_l \le a \le a_u \text{ and } a, a_l, a_u \in \mathbb{Z} \tag{4.63}$$
