Slides in PDF - of Marcus Hutter


Marcus Hutter - 25 - Universal Induction & Intelligence

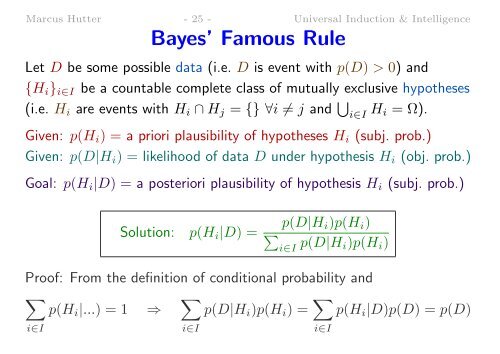

Bayes’ Famous Rule

Let $D$ be some possible data (i.e. $D$ is an event with $p(D) > 0$) and $\{H_i\}_{i \in I}$ be a countable complete class of mutually exclusive hypotheses (i.e. the $H_i$ are events with $H_i \cap H_j = \{\}$ $\forall i \neq j$ and $\bigcup_{i \in I} H_i = \Omega$).

Given: $p(H_i)$ = a priori plausibility of hypothesis $H_i$ (subj. prob.)

Given: $p(D \mid H_i)$ = likelihood of data $D$ under hypothesis $H_i$ (obj. prob.)

Goal: $p(H_i \mid D)$ = a posteriori plausibility of hypothesis $H_i$ (subj. prob.)

Solution:

$$p(H_i \mid D) = \frac{p(D \mid H_i)\, p(H_i)}{\sum_{i \in I} p(D \mid H_i)\, p(H_i)}$$

Proof: From the definition of conditional probability and $\sum_{i \in I} p(H_i \mid \ldots) = 1$

$$\Rightarrow \quad \sum_{i \in I} p(D \mid H_i)\, p(H_i) = \sum_{i \in I} p(H_i \mid D)\, p(D) = p(D)$$
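
As an illustration of the rule, here is a minimal Python sketch for a finite hypothesis class. It is not taken from the slides: the function name `posterior`, the two-coin hypotheses, and the numerical priors and likelihoods are all hypothetical and chosen only to make the arithmetic easy to check. Exact fractions are used so that the normalization can be verified without rounding.

```python
from fractions import Fraction


def posterior(prior, likelihood):
    """Return p(H_i | D) for every hypothesis H_i via Bayes' rule.

    prior[h]      : p(H_i), a priori plausibility of hypothesis h
    likelihood[h] : p(D | H_i), likelihood of the data under h
    """
    # Evidence p(D) = sum_i p(D | H_i) p(H_i); the rule requires p(D) > 0.
    evidence = sum(likelihood[h] * prior[h] for h in prior)
    if evidence <= 0:
        raise ValueError("p(D) must be positive")
    # Posterior: numerator p(D | H_i) p(H_i) divided by the evidence.
    return {h: likelihood[h] * prior[h] / evidence for h in prior}


# Hypothetical example: two mutually exclusive hypotheses about a coin,
# with data D = "one toss came up heads".
prior = {"fair": Fraction(1, 2), "biased": Fraction(1, 2)}
likelihood = {"fair": Fraction(1, 2), "biased": Fraction(9, 10)}

post = posterior(prior, likelihood)
for name, p in post.items():
    print(name, p)
```

In this assumed example the posterior values sum to 1, mirroring the normalization step used in the proof.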