Slides in PDF - of Marcus Hutter

Marcus Hutter - Universal Induction & Intelligence

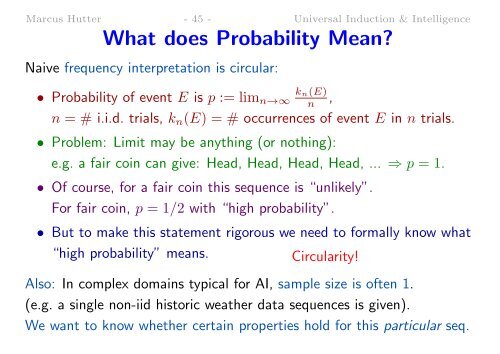

What does Probability Mean?

Naive frequency interpretation is circular:

• Probability of event $E$ is $p := \lim_{n\to\infty} \frac{k_n(E)}{n}$,
where $n$ = number of i.i.d. trials and $k_n(E)$ = number of occurrences of event $E$ in $n$ trials.

• Problem: The limit may be anything (or may not exist at all),
e.g. a fair coin can give Head, Head, Head, Head, ... ⇒ $p = 1$.

• Of course, for a fair coin this sequence is “unlikely”.
For a fair coin, $p = 1/2$ with “high probability”.

• But to make this statement rigorous, we need to know formally what
“high probability” means, and that is again a statement about probability.

Circularity!
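A small Python sketch can make the argument concrete. It assumes a fair coin modeled as i.i.d. Bernoulli(1/2) trials, and the function names (`relative_frequency`, `prob_all_heads`, `prob_deviation`) are hypothetical, chosen only for illustration. The frequency definition assigns $p = 1$ to an all-Head run, and the only way to call that run “unlikely”, or to say the frequency is near 1/2 “with high probability”, is to compute probabilities under the fair-coin model itself.

```python
from fractions import Fraction
from math import comb
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def relative_frequency(trials: Sequence[T], event: Callable[[T], bool]) -> Fraction:
    """k_n(E) / n over the n = len(trials) observed trials (requires n >= 1)."""
    k_n = sum(1 for outcome in trials if event(outcome))
    return Fraction(k_n, len(trials))


def prob_all_heads(n: int) -> Fraction:
    """Probability that n independent fair-coin tosses all show Head: 2^-n."""
    return Fraction(1, 2 ** n)


def prob_deviation(n: int, eps: Fraction) -> Fraction:
    """Probability, under the assumed fair-coin model, that |k_n/n - 1/2| > eps.

    k_n is Binomial(n, 1/2), so sum C(n, k) / 2^n over every k that deviates.
    """
    half = Fraction(1, 2)
    deviating = sum(comb(n, k) for k in range(n + 1) if abs(Fraction(k, n) - half) > eps)
    return Fraction(deviating, 2 ** n)


def is_head(outcome: str) -> bool:
    """The event E = "the toss shows Head", with tosses encoded as 'H' / 'T'."""
    return outcome == "H"


print(relative_frequency("HHHHHHHHHH", is_head))  # 1
print(prob_all_heads(10))                          # 1/1024
# Small for large n, but it is itself a probability, not a certainty.
print(float(prob_deviation(100, Fraction(1, 10))))
```

`prob_deviation` shrinks as $n$ grows, yet using it to justify $p = 1/2$ presupposes exactly the notion of probability the frequency definition was meant to supply.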

Also: In complex domains typical for AI, the sample size is often 1
(e.g. only a single non-i.i.d. historic weather data sequence is given).

We want to know whether certain properties hold for this particular sequence.