Slides in PDF - of Marcus Hutter

Marcus Hutter - 37 - Universal Induction & Intelligence

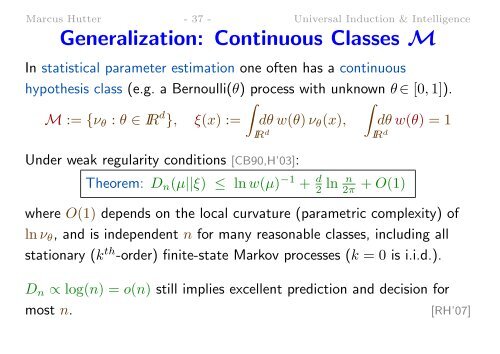

Generalization: Continuous Classes $\mathcal{M}$

In statistical parameter estimation one often has a continuous hypothesis class (e.g. a Bernoulli(θ) process with unknown θ ∈ [0, 1]).

$$\mathcal{M} := \{\nu_\theta : \theta \in \mathbb{R}^d\}, \qquad \xi(x) := \int_{\mathbb{R}^d} d\theta\, w(\theta)\, \nu_\theta(x), \qquad \int_{\mathbb{R}^d} d\theta\, w(\theta) = 1$$

Under weak regularity conditions [CB90, H’03]:

Theorem: $$D_n(\mu\|\xi) \;\le\; \ln w(\mu)^{-1} + \frac{d}{2}\ln\frac{n}{2\pi} + O(1)$$

where the $O(1)$ term depends on the local curvature (parametric complexity) of $\ln \nu_\theta$, and is independent of $n$ for many reasonable classes, including all stationary ($k$th-order) finite-state Markov processes ($k = 0$ is i.i.d.).
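As an illustration of the definitions above, consider the Bernoulli(θ) class mentioned earlier with a uniform prior $w(\theta) = 1$ on $[0, 1]$. The choice of prior is an assumption made here for the example, not something the slide fixes. For a binary sequence $x_{1:n}$ containing $k$ ones, the mixture has a closed form, and with $d = 1$ and $\ln w(\mu)^{-1} = 0$ the bound specializes as follows:

$$\xi(x_{1:n}) = \int_0^1 \theta^{k}(1-\theta)^{n-k}\, d\theta = \frac{k!\,(n-k)!}{(n+1)!}, \qquad D_n(\mu\|\xi) \;\le\; \frac{1}{2}\ln\frac{n}{2\pi} + O(1)$$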

$D_n \propto \log(n) = o(n)$ still implies excellent prediction and decision for most $n$.

[RH’07]
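The logarithmic growth of $D_n$ can be checked numerically in the simplest case. The sketch below is a minimal illustration, assuming a Bernoulli(θ) class with a uniform prior on $[0, 1]$ (so $d = 1$ and $\ln w(\mu)^{-1} = 0$), for which the mixture of a sequence with $k$ ones is $\xi(x_{1:n}) = k!\,(n-k)!/(n+1)!$. The function names are hypothetical and chosen for this example only.

```python
import math


def log_binom(n, k):
    """Natural log of the binomial coefficient C(n, k)."""
    return math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)


def kl_bernoulli_mixture(theta, n):
    """Exact D_n(mu || xi) for mu = Bernoulli(theta), 0 < theta < 1,
    and xi the Bayes mixture under a uniform prior (an assumption of
    this sketch). Sequences are grouped by their number of ones k."""
    total = 0.0
    for k in range(n + 1):
        log_c = log_binom(n, k)
        # log-probability of one particular sequence with k ones under mu
        log_mu = k * math.log(theta) + (n - k) * math.log(1 - theta)
        # xi(x) = k! (n-k)! / (n+1)! = 1 / ((n+1) * C(n, k))
        log_xi = -math.log(n + 1) - log_c
        # weight by the total mu-probability of all sequences with k ones
        total += math.exp(log_c + log_mu) * (log_mu - log_xi)
    return total


def bound_gap(theta, n):
    """D_n minus the leading term (d/2) ln(n / 2 pi) with d = 1."""
    return kl_bernoulli_mixture(theta, n) - 0.5 * math.log(n / (2 * math.pi))


if __name__ == "__main__":
    for n in (10, 100, 1000, 4000):
        print(n, kl_bernoulli_mixture(0.5, n), bound_gap(0.5, n))
```

If the theorem's leading term is right, the printed gap should settle to a constant as $n$ grows, while $D_n$ itself keeps increasing like $\frac{1}{2}\ln n$.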