Slides in PDF - of Marcus Hutter

Marcus Hutter - 99 - Universal Induction & Intelligence

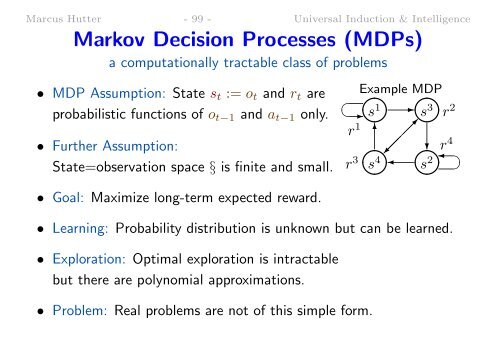

Markov Decision Processes (MDPs)

a computationally tractable class of problems

• MDP Assumption: State $s_t := o_t$ and reward $r_t$ are probabilistic functions of $o_{t-1}$ and $a_{t-1}$ only.

• Further Assumption: The state (= observation) space $\mathcal{S}$ is finite and small.
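A minimal Python sketch of the MDP assumption, using a hypothetical three-state, two-action MDP whose numbers are invented purely for illustration. The table `P` maps a state and action to (probability, next state, reward) triples, so `step` samples the next state and reward from the previous state and action alone, with no access to earlier history. The names `P`, `step`, and `rollout` are hypothetical, not from the slides.

```python
import random

# Hypothetical finite MDP (numbers invented for illustration).
# P[s][a] lists (probability, next_state, reward) triples: the next
# state/observation and reward depend only on the previous state s and
# action a (the MDP assumption), never on any earlier history.
P = {
    0: {0: [(1.0, 0, 0.0)], 1: [(0.8, 1, 0.0), (0.2, 0, 0.0)]},
    1: {0: [(1.0, 0, 0.0)], 1: [(0.9, 2, 1.0), (0.1, 1, 0.0)]},
    2: {0: [(1.0, 1, 0.0)], 1: [(1.0, 2, 1.0)]},
}

def step(s, a, rng=random):
    """Sample (next_state, reward) given only the current state and action."""
    u = rng.random()
    cumulative = 0.0
    for prob, s_next, r in P[s][a]:
        cumulative += prob
        if u < cumulative:
            return s_next, r
    # Guard against floating-point round-off in the cumulative sum.
    _, s_next, r = P[s][a][-1]
    return s_next, r

def rollout(s0, policy, steps, seed=0):
    """Generate a list of (s, a, r, s_next) tuples under a fixed policy."""
    rng = random.Random(seed)
    s, history = s0, []
    for _ in range(steps):
        a = policy(s)
        s_next, r = step(s, a, rng)
        history.append((s, a, r, s_next))
        s = s_next
    return history
```

For example, `rollout(0, lambda s: 1, 10)` returns ten transitions generated by the policy that always picks action 1. Storing `P` as an explicit table is only practical because of the further assumption that the state space is finite and small.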

Example MDP: [figure: four states $s_1, s_2, s_3, s_4$ connected by transitions labelled with rewards $r_1, r_2, r_3, r_4$]

• Goal: Maximize long-term expected reward.

• Learning: The transition and reward probabilities are unknown but can be learned.

• Exploration: Optimal exploration is intractable, but there are polynomial approximations.

• Problem: Real problems are not of this simple form.
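The goal, learning, and exploration points can be combined in a small model-based sketch. It assumes discounted reward with a hypothetical factor `gamma`, since the slide does not fix a particular long-term criterion. `estimate_model` forms maximum-likelihood estimates of transition probabilities and expected rewards from observed (s, a, r, s') tuples; state-action pairs seen fewer than `m` times are treated optimistically, in the spirit of R-max (one known polynomial exploration scheme, swapped in here for illustration rather than taken from the slides). `value_iteration` then computes an approximately optimal value function and greedy policy for the estimated model. All function names and parameters are hypothetical.

```python
from collections import defaultdict

def estimate_model(transitions, n_states, n_actions, m=5, r_max=1.0):
    """Maximum-likelihood model from (s, a, r, s_next) tuples, with an
    R-max-style optimistic fallback for rarely tried state-action pairs."""
    counts = defaultdict(lambda: defaultdict(int))  # (s, a) -> s_next -> count
    reward_sum = defaultdict(float)                 # (s, a) -> summed reward
    n = defaultdict(int)                            # (s, a) -> visit count
    for s, a, r, s_next in transitions:
        counts[(s, a)][s_next] += 1
        reward_sum[(s, a)] += r
        n[(s, a)] += 1
    P_hat, R_hat = {}, {}
    for s in range(n_states):
        for a in range(n_actions):
            k = n[(s, a)]
            if k >= m:
                # Empirical frequencies and mean reward for "known" pairs.
                P_hat[(s, a)] = {s2: c / k for s2, c in counts[(s, a)].items()}
                R_hat[(s, a)] = reward_sum[(s, a)] / k
            else:
                # Optimism: pretend the pair is a self-loop paying r_max,
                # so the planner is drawn toward trying it.
                P_hat[(s, a)] = {s: 1.0}
                R_hat[(s, a)] = r_max
    return P_hat, R_hat

def value_iteration(P_hat, R_hat, n_states, n_actions, gamma=0.9, tol=1e-8):
    """Discounted value iteration on the estimated model; returns (V, greedy policy)."""
    V = [0.0] * n_states
    while True:
        Q = {
            (s, a): R_hat[(s, a)]
            + gamma * sum(p * V[s2] for s2, p in P_hat[(s, a)].items())
            for s in range(n_states)
            for a in range(n_actions)
        }
        V_new = [max(Q[(s, a)] for a in range(n_actions)) for s in range(n_states)]
        if max(abs(x - y) for x, y in zip(V, V_new)) < tol:
            policy = [max(range(n_actions), key=lambda a: Q[(s, a)])
                      for s in range(n_states)]
            return V_new, policy
        V = V_new
```

In a full learning loop one would alternate: act with the current greedy policy, append the new transitions, re-estimate, and re-plan. The optimistic fallback is what drives exploration here: an untried pair is valued as if it paid `r_max` forever, so the planner is drawn toward it (or toward states where it is available) until it has been sampled `m` times.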