Grid Computing Cluster – the Development and ... - Lim Lian Tze

Grid Computing Cluster â the Development and ... - Lim Lian Tze

Grid Computing Cluster â the Development and ... - Lim Lian Tze

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

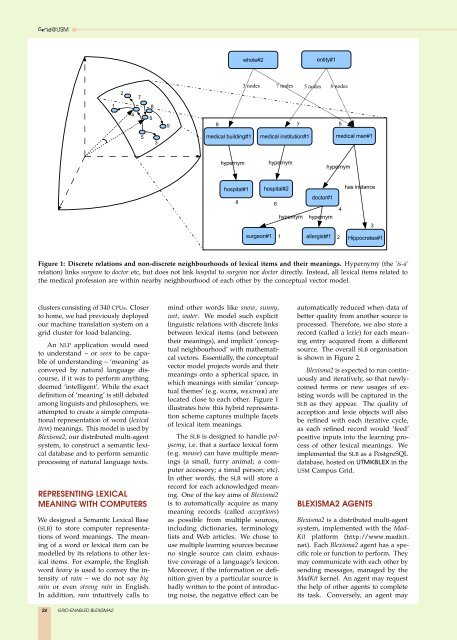

Grid@USM

Figure 1: Discrete relations and non-discrete neighbourhoods of lexical items and their meanings. Hypernymy (the ‘is-a’ relation) links surgeon to doctor etc., but does not link hospital directly to surgeon or doctor. Instead, the conceptual vector model places all lexical items related to the medical profession in each other’s neighbourhood.

clusters consisting of 340 CPUs. Closer to home, we had previously deployed our machine translation system on a grid cluster for load balancing.

An NLP application would need to understand – or be seen to be capable of understanding – ‘meaning’ as conveyed by natural language discourse, if it is to perform anything deemed ‘intelligent’. While the exact definition of ‘meaning’ is still debated among linguists and philosophers, we attempted to create a simple computational representation of word (lexical item) meanings. This model is used by Blexisma2, our distributed multi-agent system, to construct a semantic lexical database and to perform semantic processing of natural language texts.

REPRESENTING LEXICAL MEANING WITH COMPUTERS

We designed a Semantic Lexical Base (SLB) to store computer representations of word meanings. The meaning of a word or lexical item can be modelled by its relations to other lexical items.
For example, the English word heavy is used to convey the intensity of rain – we do not say big rain or even strong rain in English. In addition, rain intuitively calls to mind other words like snow, sunny, wet, water. We model such explicit linguistic relations with discrete links between lexical items (and between their meanings), and the implicit ‘conceptual neighbourhood’ with mathematical vectors. Essentially, the conceptual vector model projects words and their meanings onto a spherical space, in which meanings with similar ‘conceptual themes’ (e.g. water, weather) are located close to each other. Figure 1 illustrates how this hybrid representation scheme captures multiple facets of lexical item meanings.

The SLB is designed to handle polysemy, i.e. the fact that a surface lexical form (e.g. mouse) can have multiple meanings (a small, furry animal; a computer accessory; a timid person; etc.). In other words, the SLB will store a record for each acknowledged meaning. One of the key aims of Blexisma2 is to automatically acquire as many meaning records (called acceptions) as possible from multiple sources, including dictionaries, terminology lists and Web articles. We chose to use multiple learning sources because no single source can claim exhaustive coverage of a language’s lexicon. Moreover, if the information or definition given by a particular source is badly written to the point of introducing noise, the negative effect can be automatically reduced when data of better quality from another source is processed. Therefore, we also store a record (called a lexie) for each meaning entry acquired from a different source.
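
The two record types and the ‘spherical space’ described above can be illustrated with a minimal Python sketch. It is not Blexisma2’s code or its database schema. The class names Lexie and Acception only borrow the article’s terms, and the three toy concept axes and all numbers are invented. Measuring closeness by the angle between vectors is an assumed reading of ‘close on a sphere’. Combining lexie vectors by a normalised sum is just one plausible way in which a better source could dilute a noisy one.

```python
import math
from dataclasses import dataclass, field


def normalise(v):
    """Scale a vector to unit length so that it lies on the unit sphere."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0:
        raise ValueError("cannot normalise a zero vector")
    return [x / norm for x in v]


def angular_distance(a, b):
    """Angle in radians between two vectors (assumed closeness measure)."""
    a, b = normalise(a), normalise(b)
    cos = sum(x * y for x, y in zip(a, b))
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp floating-point drift


@dataclass
class Lexie:
    """One meaning entry as acquired from a single source (hypothetical fields)."""
    source: str
    vector: list


@dataclass
class Acception:
    """One acknowledged meaning of a lexical item (hypothetical fields)."""
    lemma: str
    gloss: str
    lexies: list = field(default_factory=list)

    def vector(self):
        """Combine lexie vectors by a normalised sum (assumed scheme)."""
        if not self.lexies:
            raise ValueError("acception has no lexies yet")
        total = [0.0] * len(self.lexies[0].vector)
        for lexie in self.lexies:
            for i, x in enumerate(normalise(lexie.vector)):
                total[i] += x
        return normalise(total)


# Toy concept axes, invented for illustration: (ANIMAL, COMPUTING, WEATHER).
mouse_animal = Acception("mouse", "a small, furry animal", [
    Lexie("dictionary A", [0.9, 0.1, 0.0]),
    Lexie("web article", [0.7, 0.3, 0.0]),  # noisier entry from another source
])
mouse_device = Acception("mouse", "a computer accessory", [
    Lexie("terminology list", [0.1, 0.9, 0.0]),
])
rat = [1.0, 0.0, 0.0]

d_animal = angular_distance(mouse_animal.vector(), rat)
d_device = angular_distance(mouse_device.vector(), rat)
print(d_animal < d_device)  # the animal sense of mouse lies nearer to rat
```

In this toy setting, the two acceptions of mouse share a surface form but sit in different regions of the space, and the animal acception stays close to rat even though one of its lexies is noisier than the other.
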
The overall SLB organisation is shown in Figure 2.

Blexisma2 is expected to run continuously and iteratively, so that newly coined terms or new usages of existing words will be captured in the SLB as they appear. The quality of acception and lexie objects will also be refined with each iterative cycle, as each refined record would ‘feed’ positive inputs into the learning process of other lexical meanings. We implemented the SLB as a PostgreSQL database, hosted on utmkblex in the USM Campus Grid.

BLEXISMA2 AGENTS

Blexisma2 is a distributed multi-agent system, implemented with the MadKit platform (http://www.madkit.net). Each Blexisma2 agent has a specific role or function to perform. They may communicate with each other by sending messages, managed by the MadKit kernel. An agent may request the help of other agents to complete its task. Conversely, an agent may