A particular Gaussian mixture model for clustering and its ...

A particular Gaussian mixture model for clustering and its ...

A particular Gaussian mixture model for clustering and its ...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

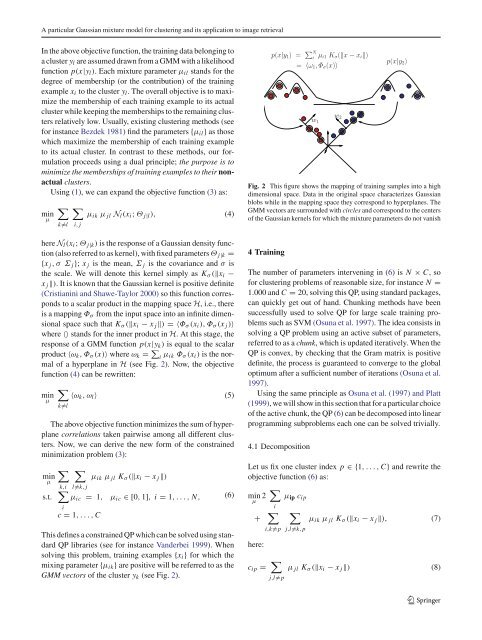

A <strong>particular</strong> <strong>Gaussian</strong> <strong>mixture</strong> <strong>model</strong> <strong>for</strong> <strong>clustering</strong> <strong>and</strong> <strong>its</strong> application to image retrievalIn the above objective function, the training data belonging toaclustery l are assumed drawn from a GMM with a likelihoodfunction p(x|y l ). Each <strong>mixture</strong> parameter µ il st<strong>and</strong>s <strong>for</strong> thedegree of membership (or the contribution) of the trainingexample x i to the cluster y l . The overall objective is to maximizethe membership of each training example to <strong>its</strong> actualcluster while keeping the memberships to the remaining clustersrelatively low. Usually, existing <strong>clustering</strong> methods (see<strong>for</strong> instance Bezdek 1981) find the parameters {µ il } as thosewhich maximize the membership of each training exampleto <strong>its</strong> actual cluster. In contrast to these methods, our <strong>for</strong>mulationproceeds using a dual principle; the purpose is tominimize the memberships of training examples to their nonactualclusters.Using (1), we can exp<strong>and</strong> the objective function (3)as:minµ∑ ∑µ ik µ jl N l (x i ; Θ j|l ), (4)k̸=li, jhere N l (x i ; Θ j|k ) is the response of a <strong>Gaussian</strong> density function(also referred to as kernel), with fixed parameters Θ j|k ={x j ,σ Σ j }; x j is the mean, Σ j is the covariance <strong>and</strong> σ isthe scale. We will denote this kernel simply as K σ (‖x i −x j ‖). It is known that the <strong>Gaussian</strong> kernel is positive definite(Cristianini <strong>and</strong> Shawe-Taylor 2000) so this function correspondsto a scalar product in the mapping space H, i.e., thereis a mapping Φ σ from the input space into an infinite dimensionalspace such that K σ (‖x i − x j ‖) =〈Φ σ (x i ), Φ σ (x j )〉where 〈〉 st<strong>and</strong>s <strong>for</strong> the inner product in H. At this stage, theresponse of a GMM function p(x|y k ) is equal to the scalarproduct 〈ω k ,Φ σ (x)〉 where ω k = ∑ i µ ik Φ σ (x i ) is the normalof a hyperplane in H (see Fig. 2). Now, the objectivefunction (4) can be rewritten:minµ∑〈ω k ,ω l 〉 (5)k̸=lThe above objective function minimizes the sum of hyperplanecorrelations taken pairwise among all different clusters.Now, we can derive the new <strong>for</strong>m of the constrainedminimization problem (3):minµs.t.∑k,i∑l̸=k, jµ ik µ jl K σ (‖x i − x j ‖)∑µ ic = 1, µ ic ∈[0, 1], i = 1,...,N,ic = 1,...,CThis defines a constrained QP which can be solved using st<strong>and</strong>ardQP libraries (see <strong>for</strong> instance V<strong>and</strong>erbei 1999). Whensolving this problem, training examples {x i } <strong>for</strong> which themixing parameter {µ ik } are positive will be referred to as theGMM vectors of the cluster y k (see Fig. 2).(6)p(x|y 1 ) = ∑ Niµ i1 K σ (‖x − x i ‖)= 〈ω 1 ,Φ σ (x)〉w 1w 2p(x|y 2 )Fig. 2 This figure shows the mapping of training samples into a highdimensional space. Data in the original space characterizes <strong>Gaussian</strong>blobs while in the mapping space they correspond to hyperplanes. TheGMM vectors are surrounded with circles <strong>and</strong> correspond to the centersof the <strong>Gaussian</strong> kernels <strong>for</strong> which the <strong>mixture</strong> parameters do not vanish4 TrainingThe number of parameters intervening in (6) isN × C, so<strong>for</strong> <strong>clustering</strong> problems of reasonable size, <strong>for</strong> instance N =1.000 <strong>and</strong> C = 20, solving this QP, using st<strong>and</strong>ard packages,can quickly get out of h<strong>and</strong>. Chunking methods have beensuccessfully used to solve QP <strong>for</strong> large scale training problemssuch as SVM (Osuna et al. 1997). The idea consists insolving a QP problem using an active subset of parameters,referred to as a chunk, which is updated iteratively. When theQP is convex, by checking that the Gram matrix is positivedefinite, the process is guaranteed to converge to the globaloptimum after a sufficient number of iterations (Osuna et al.1997).Using the same principle as Osuna et al. (1997) <strong>and</strong> Platt(1999), we will show in this section that <strong>for</strong> a <strong>particular</strong> choiceof the active chunk, the QP (6) can be decomposed into linearprogramming subproblems each one can be solved trivially.4.1 DecompositionLet us fix one cluster index p ∈{1,...,C} <strong>and</strong> rewrite theobjective function (6)as:min 2 ∑ µi+ ∑here:i,k̸=pc ip = ∑j,l̸=pµ ip c ip∑j,l̸=k,pµ ik µ jl K σ (‖x i − x j ‖), (7)µ jl K σ (‖x i − x j ‖) (8)123