View - Ecole Centrale Paris

View - Ecole Centrale Paris

View - Ecole Centrale Paris

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

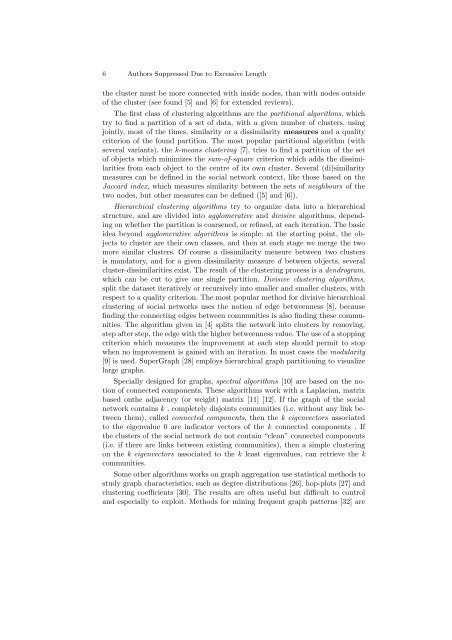

6 Authors Suppressed Due to Excessive Lengththe cluster must be more connected with inside nodes, than with nodes outsideof the cluster (see found [5] and [6] for extended reviews).The first class of clustering algorithms are the partitional algorithms, whichtry to find a partition of a set of data, with a given number of clusters, usingjointly, most of the times, similarity or a dissimilarity measures and a qualitycriterion of the found partition. The most popular partitional algorithm (withseveral variants), the k-means clustering [7], tries to find a partition of the setof objects which minimizes the sum-of-square criterion which adds the dissimilaritiesfrom each object to the centre of its own cluster. Several (di)similaritymeasures can be defined in the social network context, like those based on theJaccard index, which measures similarity between the sets of neighbours of thetwo nodes, but other measures can be defined ([5] and [6]).Hierarchical clustering algorithms try to organize data into a hierarchicalstructure, and are divided into agglomerative and divisive algorithms, dependingon whether the partition is coarsened, or refined, at each iteration. The basicidea beyond agglomerative algorithms is simple: at the starting point, the objectsto cluster are their own classes, and then at each stage we merge the twomore similar clusters. Of course a dissimilarity measure between two clustersis mandatory, and for a given dissimilarity measure d between objects, severalcluster-dissimilarities exist. The result of the clustering process is a dendrogram,which can be cut to give one single partition. Divisive clustering algorithms,split the dataset iteratively or recursively into smaller and smaller clusters, withrespect to a quality criterion. The most popular method for divisive hierarchicalclustering of social networks uses the notion of edge betweenness [8], becausefinding the connecting edges between communities is also finding these communities.The algorithm given in [4] splits the network into clusters by removing,stepafterstep,theedgewiththehigherbetweennessvalue.Theuseofastoppingcriterion which measures the improvement at each step should permit to stopwhen no improvement is gained with an iteration. In most cases the modularity[9] is used. SuperGraph [28] employs hierarchical graph partitioning to visualizelarge graphs.Specially designed for graphs, spectral algorithms [10] are based on the notionof connected components. These algorithms work with a Laplacian, matrixbased onthe adjacency (or weight) matrix [11] [12]. If the graph of the socialnetwork contains k , completely disjoints communities (i.e. without any link betweenthem), called connected components, then the k eigenvectors associatedto the eigenvalue 0 are indicator vectors of the k connected components . Ifthe clusters of the social network do not contain “clean” connected components(i.e. if there are links between existing communities), then a simple clusteringon the k eigenvectors associated to the k least eigenvalues, can retrieve the kcommunities.Some other algorithms works on graph aggregation use statistical methods tostudy graph characteristics, such as degree distributions [26], hop-plots [27] andclustering coefficients [30]. The results are often useful but difficult to controland especially to exploit. Methods for mining frequent graph patterns [32] are