- Page 1:

COMPUTATION AND NEURAL SYSTEMS SERI

- Page 4 and 5:

Library of Congress Cataloging-in-P

- Page 6 and 7:

vi Preface new professional society h

- Page 8 and 9:

viii Preface processing model, we hav

- Page 10 and 11:

x Preface There are, however, several

- Page 12 and 13:

xii Contents 4.3 The Hopfield Memory

- Page 15 and 16:

CHAPTER 1 Introduction to ANS Technology When

- Page 17 and 18:

Introduction to ANS Technology Figur

- Page 19 and 20:

Introduction to ANS Technology Outpu

- Page 21 and 22:

Introduction to ANS Technology (b) Fi

- Page 23 and 24:

1.1 Elementary Neurophysiology Cell

- Page 25 and 26:

1.1 Elementary Neurophysiology 11 Pr

- Page 27 and 28:

1.1 Elementary Neurophysiology 13 Fi

- Page 29 and 30:

1.1 Elementary Neurophysiology 15 wh

- Page 31 and 32:

1.2 From Neurons to ANS 17 Exercis

- Page 33 and 34:

1.2 From Neurons to ANS 19 Notice th

- Page 35 and 36:

1.2 From Neurons to ANS 21 units, t

- Page 38 and 39:

24 Introduction to ANS Technology a

- Page 40 and 41:

26 Introduction to ANS Technology Ou

- Page 42 and 43:

28 Introduction to ANS Technology In

- Page 44 and 45:

30 Introduction to ANS Technology le

- Page 46 and 47:

32 Introduction to ANS Technology Fi

- Page 48 and 49:

34 Introduction to ANS Technology ne

- Page 50 and 51:

36 Introduction to ANS Technology N

- Page 52 and 53:

38 Introduction to ANS Technology ou

- Page 54 and 55:

40 Introduction to ANS Technology Sy

- Page 56 and 57:

42 Introduction to ANS Technology [5

- Page 59 and 60:

CHAPTER 2 Adaline and Madaline Si

- Page 61 and 62:

2.1 Review of Signal Processing 47 1

- Page 63 and 64:

2.1 Review of Signal Processing 49 A

- Page 65 and 66:

which describes a typical square wa

- Page 67 and 68:

2.1 Review of Signal Processing 53 W

- Page 69 and 70:

2.2 Adaline and the Adaptive Linear

- Page 71 and 72:

2.2 Adaline and the Adaptive Linear

- Page 73 and 74:

2.2 Adaline and the Adaptive Linear

- Page 75 and 76:

2.2 Adaline and the Adaptive Linear

- Page 77 and 78:

2.2 Adaline and the Adaptive Linear

- Page 79 and 80:

2.2 Adaline and the Adaptive Linear

- Page 81 and 82:

2.2 Adaline and the Adaptive Linear

- Page 83 and 84:

2.3 Applications of Adaptive Signal

- Page 85 and 86:

2.3 Applications of Adaptive Signal

- Page 87 and 88:

2.4 The Madaline 73 = -1.5 Figure 2.1

- Page 89 and 90:

2.4 The Madaline 75 with the least c

- Page 91 and 92:

2.4 The Madaline 77 right of the top

- Page 93 and 94:

2.5 Simulating the Adaline 79 Retina

- Page 95 and 96:

2.5 Simulating the Adaline record la

- Page 97 and 98:

2.5 Simulating the Adaline 83 Adalin

- Page 99 and 100:

2.5 Simulating the Adaline 85 mented

- Page 101:

Bibliography 87 [8] Rodney Winter an

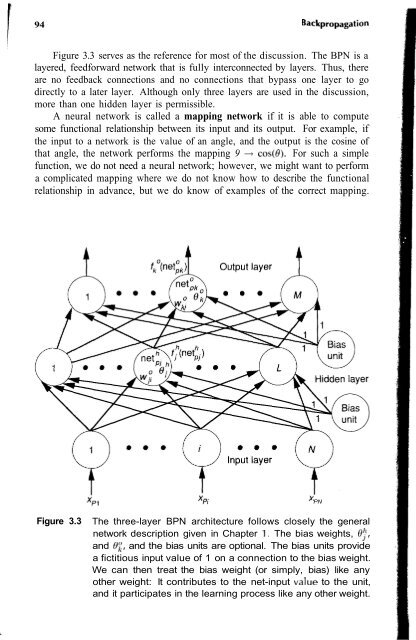

- Page 104 and 105:

90 Backpropagation Output read in pa

- Page 106 and 107:

92 Backpropagation an inordinate amo

- Page 110 and 111:

96 Backpropagation 1. Apply an input

- Page 112 and 113:

98 Backpropagation where we have use

- Page 114 and 115:

100 Backpropagation method. Moreove

- Page 116 and 117:

102 Backpropagation 1. Apply the in

- Page 118 and 119:

104 Backpropagation example, the lim

- Page 120 and 121:

106 Backpropagation E, w Figure 3.6

- Page 122 and 123:

108 Backpropagation Figure 3.7 This B

- Page 124 and 125:

110 Backpropagation Training the Net

- Page 126 and 127:

112 Backpropagation Automatic Paint

- Page 128 and 129:

114 Backpropagation for the network

- Page 130 and 131:

116 Backpropagation 3.5.2 BPN Specia

- Page 132 and 133:

118 Backpropagation of the Adaline s

- Page 134 and 135:

120 Backpropagation The final routin

- Page 136 and 137:

122 Backpropagation Here again, to i

- Page 138 and 139:

124 Backpropagation your modificatio

- Page 141 and 142:

CHAPTER 4 The BAM and the Hopfield Memory The

- Page 143 and 144:

4.1 Associative-Memory Definitions

- Page 145 and 146:

4.2 The BAM 131 All the δij terms in

- Page 147 and 148:

Although we consistently begin wi

- Page 149 and 150:

4.2 The BAM 135 For our first trial,

- Page 151 and 152:

4.2 The BAM 137 is fairly easy to ap

- Page 153 and 154:

4.2 The BAM 139 term valley to refer

- Page 155 and 156:

4.3 The Hopfield Memory 141 where a

- Page 157 and 158:

4.3 The Hopfield Memory 143 Figure 4

- Page 159 and 160:

4.3 The Hopfield Memory 145 A = 50 (a)

- Page 161 and 162:

4.3 The Hopfield Memory 147 In Eq. (

- Page 163 and 164:

4.3 The Hopfield Memory 149 The TSP

- Page 165 and 166:

4.3 The Hopfield Memory 151 2. Energ

- Page 167 and 168:

4.3 The Hopfield Memory 153 causes a

- Page 169 and 170:

4.3 The Hopfield Memory 155 where Aw

- Page 171 and 172:

4.4 Simulating the BAM 157

- Page 173 and 174:

4.4 Simulating the BAM 159 weight_pt

- Page 175 and 176:

4.4 Simulating the BAM 161 The disad

- Page 177 and 178:

4.4 Simulating the BAM 163 for refer

- Page 179 and 180:

4.4 Simulating the BAM 165 of signal

- Page 181 and 182:

Bibliography 167 Suggested Readings I

- Page 183 and 184:

CHAPTER 5 Simulated Annealing The

- Page 185 and 186:

5.1 Information Theory and Statisti

- Page 187 and 188:

5.1 Information Theory and Statisti

- Page 189 and 190:

5.1 Information Theory and Statisti

- Page 191 and 192:

5.1 Information Theory and Statisti

- Page 193 and 194:

5.2 The Boltzmann Machine 179 where

- Page 195 and 196:

5.2 The Boltzmann Machine 181 1. For

- Page 197 and 198:

5.2 The Boltzmann Machine 183 popula

- Page 199 and 200:

5.2 The Boltzmann Machine 185 Hf, on

- Page 201 and 202:

5.2 The Boltzmann Machine 187 Then an

- Page 203 and 204:

5.3 The Boltzmann Simulator 189 Fort

- Page 205 and 206:

5.3 The Boltzmann Simulator 191 (b) F

- Page 207 and 208:

5.3 The Boltzmann Simulator 193 We w

- Page 209 and 210:

5.3 The Boltzmann Simulator 195 ANNE

- Page 211 and 212:

5.3 The Boltzmann Simulator 197 appl

- Page 213 and 214:

5.3 The Boltzmann Simulator 199 Befo

- Page 215 and 216:

5.3 The Boltzmann Simulator 201 Fina

- Page 217 and 218:

5.3 The Boltzmann Simulator 203 begi

- Page 219 and 220:

5.3 The Boltzmann Simulator 205 begi

- Page 221 and 222:

5.4 Using the Boltzmann Simulator 2

- Page 223 and 224:

5.4 Using the Boltzmann Simulator 2

- Page 225 and 226:

Suggested Readings 211 All that rema

- Page 227 and 228:

CHAPTER 6 The Counterpropagation

- Page 229 and 230:

6.1 CPN Building Blocks 215 y' Outpu

- Page 231 and 232:

6.1 CPN Building Blocks 217 The vect

- Page 233 and 234:

6.1 CPN Building Blocks 219 (a) (b) Fi

- Page 235 and 236:

6.1 CPN Building Blocks 221 Initial

- Page 237 and 238:

6.1 CPN Building Blocks 223 Δw = α(

- Page 239 and 240:

6.1 CPN Building Blocks 225

- Page 241 and 242:

6.1 CPN Building Blocks 227 where x'

- Page 243 and 244:

6.1 CPN Building Blocks 229 f(w) w (a)

- Page 245 and 246:

6.1 CPN Building Blocks 231 y' Outpu

- Page 247 and 248:

6.1 CPN Building Blocks 233 UCR UCS

- Page 249 and 250:

6.2 CPN Data Processing 235 6.2 CPN

- Page 251 and 252:

6.2 CPN Data Processing 237 The comp

- Page 253 and 254:

6.2 CPN Data Processing 239 those ou

- Page 255 and 256:

6.2 CPN Data Processing 241 Region o

- Page 257 and 258:

6.2 CPN Data Processing 243 x' Outpu

- Page 259 and 260:

6.3 An Image-Classification Exampl

- Page 261 and 262:

6.4 The CPN Simulator 247 (a) (b) Figu

- Page 263 and 264:

6.4 The CPN Simulator 249 outsweight

- Page 265 and 266:

6.4 The CPN Simulator 251 our simula

- Page 267 and 268:

6.4 The CPN Simulator 253 Before we

- Page 269 and 270:

6.4 The CPN Simulator 255 learned, w

- Page 271 and 272:

6.4 The CPN Simulator 257 doj = rand

- Page 273 and 274:

6.4 The CPN Simulator 259 Figure 6.

- Page 275 and 276:

Programming Exercises 261 code for t

- Page 277 and 278:

CHAPTER 7 Self-Organizing Maps Th

- Page 279 and 280:

7.1 SOM Data Processing 265 topology

- Page 281 and 282:

7.1 SOM Data Processing 267 (a) (b) Fi

- Page 283 and 284:

7.1 SOM Data Processing 269 (0,0) (0

- Page 285 and 286:

7.1 SOM Data Processing 271 (a) (b) Fi

- Page 287 and 288:

7.1 SOM Data Processing 273 to assoc

- Page 289 and 290:

7.2 Applications of Self-Organizing

- Page 291 and 292:

7.2 Applications of Self-Organizing

- Page 293 and 294:

7.3 Simulating the SOM 279 The resul

- Page 295 and 296:

7.3 Simulating the SOM 281 model of

- Page 297 and 298:

7.3 Simulating the SOM 283 domag = p

- Page 299 and 300:

7.3 Simulating the SOM 285 row = (W-

- Page 301 and 302:

7.3 Simulating the SOM 287 Figure 7.

- Page 303 and 304:

Bibliography 289 to the number of tr

- Page 305 and 306:

CHAPTER 8 Adaptive Resonance Theory

- Page 307 and 308:

8.1 ART Network Description 293 equi

- Page 309 and 310:

8.1 ART Network Description 295

- Page 311 and 312:

8.1 ART Network Description 297 8.1.

- Page 313 and 314:

8.2 ART1 299 Exercise 8.2: Show that

- Page 315 and 316:

8.2 ART1 301 is inactive, G is not i

- Page 317 and 318:

8.2 ART1 303 To all F2 units From F1

- Page 319 and 320:

8.2 ART1 305 from the F2 layer. Sin

- Page 321 and 322:

8.2 ART1 307 on connections from tho

- Page 323 and 324:

8.2 ART1 309 Recall that |S| = |I| w

- Page 325 and 326:

8.2 ART1 311 on F1 and N be the numb

- Page 327 and 328:

8.2 ART1 313 Since L = 3 and M = 5,

- Page 329 and 330:

8.2 ART1 315 In this case, the equil

- Page 331 and 332:

8.3 ART2 317 Orienting, Attentional

- Page 333 and 334:

8.3 ART2 319 of keeping the activati

- Page 335 and 336:

8.3 ART2 321 Notice that, after the

- Page 337 and 338:

8.3 ART2 323 not want a reset in thi

- Page 339 and 340:

8.3 ART2 325 4. Propagate forward to

- Page 341 and 342:

8.4 The ART1 Simulator 327 and the t

- Page 343 and 344:

8.4 The ART1 Simulator 329 rho : flo

- Page 345 and 346:

8.4 The ART1 Simulator 331 Notice th

- Page 347 and 348:

8.4 The ART1 Simulator 333 for i = 1

- Page 349 and 350:

8.4 The ART1 Simulator 335 • We up

- Page 351 and 352:

Suggested Readings 337 Programming E

- Page 353:

Bibliography 339 [11] Stephen Grossb

- Page 356 and 357:

342 Spatiotemporal Pattern Classifi

- Page 358 and 359:

344 Spatiotemporal Pattern Classifi

- Page 360 and 361:

346 Spatiotemporal Pattern Classifi

- Page 362 and 363:

348 Spatiotemporal Pattern Classifi

- Page 364 and 365:

350 Spatiotemporal Pattern Classifi

- Page 366 and 367:

352 Spatiotemporal Pattern Classifi

- Page 368 and 369:

354 Spatiotemporal Pattern Classifi

- Page 370 and 371:

356 Spatiotemporal Pattern Classifi

- Page 372 and 373:

358 Spatiotemporal Pattern Classifi

- Page 374 and 375:

360 Spatiotemporal Pattern Classifi

- Page 376 and 377:

362 Spatiotemporal Pattern Classifi

- Page 378 and 379:

364 Spatiotemporal Pattern Classifi

- Page 380 and 381:

366 Spatiotemporal Pattern Classifi

- Page 382 and 383:

368 Spatiotemporal Pattern Classifi

- Page 384 and 385:

370 Programming Exercises the networ

- Page 387 and 388:

CHAPTER 10 The Neocognitron ANS ar

- Page 389 and 390:

The Neocognitron 375 The visual cort

- Page 391 and 392:

10.1 Neocognitron Architecture 377 S

- Page 393 and 394:

10.1 Neocognitron Architecture 379 (

- Page 395 and 396:

10.2 Neocognitron Data Processing 3

- Page 397 and 398:

10.2 Neocognitron Data Processing 3

- Page 399 and 400:

10.2 Neocognitron Data Processing 3

- Page 401 and 402:

10.2 Neocognitron Data Processing 3

- Page 403 and 404:

10.3 Performance of the Neocognitro

- Page 405 and 406:

10.4 Addition of Lateral Inhibition

- Page 407:

Bibliography 393 References at the e

- Page 410 and 411:

396 Index summary, 101, 160 training

- Page 412 and 413:

398 Index differential, 359, 361 lear

- Page 414 and 415:

400 Index associator (A) units, 22,