download LR pdf - Kabk


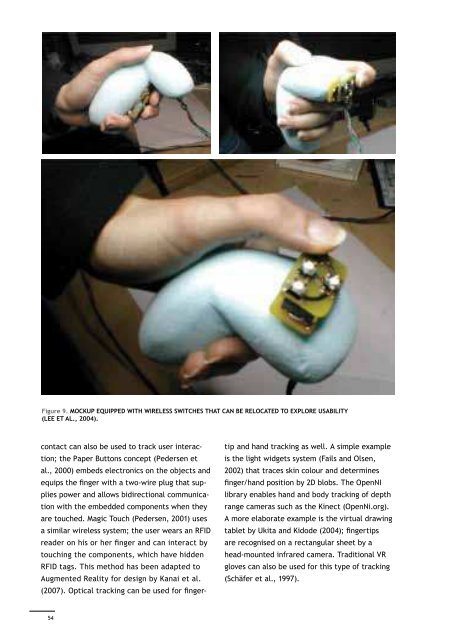

3.3 Other input modalities

Speech and gesture recognition require consideration in AR as well. In particular, pen-based interaction would be a natural extension to the expressiveness of today's designer skills. Oviatt et al. (2000) offer a comprehensive overview of the so-called Recognition-Based User Interfaces (RUIs), including the issues and Human Factors aspects of these modalities. Furthermore, speech-based interaction can also be useful to activate operations while the hands are used for selection.

4. Conclusions and Further reading

This article introduces two important hardware systems for AR: displays and input technologies. To superimpose virtual images onto physical models, head-mounted displays (HMDs), see-through boards, projection-based techniques and embedded displays have been employed. An important observation is that HMDs, though best known by the public, have serious limitations and constraints in terms of field of view and resolution, and lend themselves to a kind of isolation. For all display technologies, the current challenges include an untethered interface, the enhancement of graphics capabilities, visual coverage of the display and improvement of resolution. LED-based laser projection and OLEDs are expected to play an important role in the next generation of IAP devices because these technologies can be employed by see-through or projection-based displays.

To interactively merge the digital and physical parts of Augmented prototypes, position and orientation tracking of the physical components is needed, as well as additional user input means. For global position tracking, a variety of principles exist. Optical tracking and scanning suffer from line-of-sight and occlusion issues. Magnetic, mechanical linkage and ultrasound-based position trackers are obtrusive, and only a limited number of trackers can be used concurrently.

The resulting palette of solutions is summarized in Table 3 as a morphological chart.
In devising a solution for your AR system, you can use this as a checklist or as inspiration for display and input.

Table 3. Morphological chart of AR enabling technologies.

| Category | Aspect | Options |
| --- | --- | --- |
| Display | Imaging principle | Video mixing; Projector-based; See-through |
| Display | Display arrangement | Head-attached; Handheld/wearable; Spatial |
| Input technologies | Position tracking | Magnetic; Optical (passive markers, active markers, 3D laser scanning); Ultrasound; Mechanical |
| Input technologies | Event sensing | Physical sensors (wired connection, wireless); Virtual (surface tracking, 3D tracking) |

Figure 9. Mockup equipped with wireless switches that can be relocated to explore usability (Lee et al., 2004).

... contact can also be used to track user interaction; the Paper Buttons concept (Pedersen et al., 2000) embeds electronics on the objects and equips the finger with a two-wire plug that supplies power and allows bidirectional communication with the embedded components when they are touched. Magic Touch (Pedersen, 2001) uses a similar wireless system; the user wears an RFID reader on his or her finger and can interact by touching the components, which have hidden RFID tags. This method has been adapted to Augmented Reality for design by Kanai et al. (2007).

Optical tracking can be used for fingertip and hand tracking as well. A simple example is the light widgets system (Fails and Olsen, 2002), which traces skin colour and determines finger/hand position from 2D blobs. The OpenNI library enables hand and body tracking with depth-range cameras such as the Kinect (OpenNi.org). A more elaborate example is the virtual drawing tablet by Ukita and Kidode (2004); fingertips are recognised on a rectangular sheet by a head-mounted infrared camera. Traditional VR gloves can also be used for this type of tracking (Schäfer et al., 1997).
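To make the idea of skin-colour tracing with 2D blobs more concrete, the following is a minimal sketch and not the implementation of Fails and Olsen (2002). It assumes a simple RGB threshold rule for skin colour (a common heuristic chosen here for illustration) and a 4-connected flood fill; the function names `is_skin` and `largest_skin_blob_centroid` are hypothetical. The centroid of the largest skin-coloured blob serves as a rough estimate of the hand position in the image.

```python
from collections import deque


def is_skin(r, g, b):
    """Crude RGB skin-colour rule (an assumed heuristic, not from the cited work)."""
    return (r > 95 and g > 40 and b > 20
            and max(r, g, b) - min(r, g, b) > 15
            and abs(r - g) > 15 and r > g and r > b)


def largest_skin_blob_centroid(image):
    """Return the (row, col) centroid of the largest skin-coloured blob, or None.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    best = []
    for y in range(height):
        for x in range(width):
            if seen[y][x] or not is_skin(*image[y][x]):
                continue
            # Breadth-first flood fill collects one 4-connected blob.
            blob = []
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                blob.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    if (0 <= ny < height and 0 <= nx < width
                            and not seen[ny][nx] and is_skin(*image[ny][nx])):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) > len(best):
                best = blob
    if not best:
        return None
    count = len(best)
    return (sum(p[0] for p in best) / count, sum(p[1] for p in best) / count)


# Synthetic 5x6 frame: a 3x3 "hand" patch plus one isolated noise pixel.
SKIN = (200, 140, 120)
BACKGROUND = (30, 30, 30)
frame = [[BACKGROUND] * 6 for _ in range(5)]
for y in (1, 2, 3):
    for x in (2, 3, 4):
        frame[y][x] = SKIN
frame[0][0] = SKIN  # noise: forms a blob of size 1 and is ignored
print(largest_skin_blob_centroid(frame))  # (2.0, 3.0)
```

Keeping only the largest blob is a deliberately simple way to reject small noise regions; a practical system would probably also need lighting-robust colour models and temporal smoothing, which this sketch leaves out.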