Shared Gaussian Process Latent Variable Models - Oxford Brookes ...

2.5. NON-LINEAR

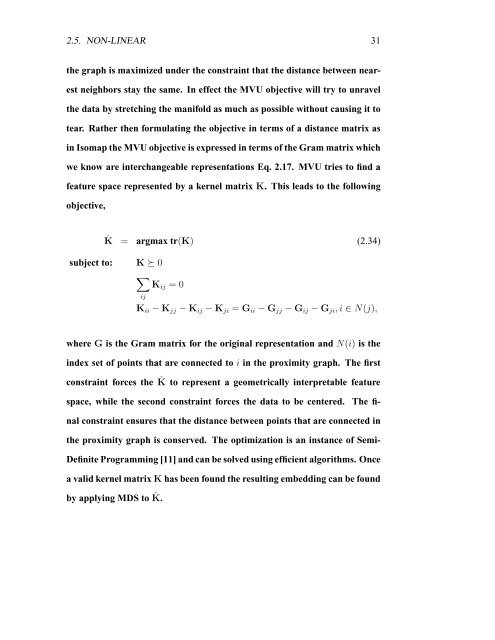

the graph is maximized under the constraint that the distance between nearest neighbors stays the same. In effect, the MVU objective tries to unravel the data by stretching the manifold as much as possible without causing it to tear. Isomap formulates its objective in terms of a distance matrix, but MVU expresses its objective in terms of the Gram matrix. Eq. 2.17 shows that the two are interchangeable representations. MVU seeks a feature space represented by a kernel matrix K. This leads to the following objective,

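$$
\begin{aligned}
\hat{K} = \arg\max_{K}\;& \operatorname{tr}(K) \\
\text{subject to: }\;& K \succeq 0, \\
& \sum_{ij} K_{ij} = 0, \\
& K_{ii} + K_{jj} - K_{ij} - K_{ji} = G_{ii} + G_{jj} - G_{ij} - G_{ji}, \quad i \in N(j),
\end{aligned}
\tag{2.34}
$$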

where G is the Gram matrix of the original representation and N(i) is the index set of points that are connected to i in the proximity graph. The first constraint forces $\hat{K}$ to be positive semi-definite. This makes it a valid Gram matrix that represents a geometrically interpretable feature space. The second constraint forces the embedded data to be centered. The final constraint ensures that the distance between points that are connected in the proximity graph is conserved. The optimization is an instance of semi-definite programming (SDP) [11]. Because SDPs are convex, the problem can be solved efficiently, for example with interior-point methods. Once a valid kernel matrix $\hat{K}$ has been found, the resulting embedding is obtained by applying MDS to $\hat{K}$.
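Solving Eq. 2.34 itself requires an SDP solver, and that step is not shown here. The NumPy sketch below illustrates the pieces around the solver. It recovers squared distances from a Gram matrix, which is the quantity preserved by the last constraint. It checks the three constraints of Eq. 2.34 for a candidate K. Finally, it performs the MDS step that turns a kernel matrix into coordinates. This is an illustration under stated assumptions, not the thesis's implementation. The helper names (`gram_to_sq_dists`, `knn_graph`, `mvu_feasible`, `mds_embed`), the choice of a symmetric k-nearest-neighbor proximity graph, and the numerical tolerance are all hypothetical choices made for this example.

```python
import numpy as np


def gram_to_sq_dists(G):
    """Squared distances implied by a Gram matrix:
    d_ij^2 = G_ii + G_jj - G_ij - G_ji (the quantity in the last MVU constraint)."""
    g = np.diag(G)
    return g[:, None] + g[None, :] - G - G.T


def knn_graph(X, k):
    """Hypothetical proximity graph: symmetric k-nearest-neighbor adjacency (boolean)."""
    D = gram_to_sq_dists(X @ X.T)
    np.fill_diagonal(D, np.inf)              # a point is not its own neighbor
    nearest = np.argsort(D, axis=1)[:, :k]   # k closest points for each row
    A = np.zeros(D.shape, dtype=bool)
    rows = np.repeat(np.arange(X.shape[0]), k)
    A[rows, nearest.ravel()] = True
    return A | A.T                           # symmetrize: i ~ j iff j ~ i


def mvu_feasible(K, G, A, tol=1e-8):
    """Check the three constraints of Eq. 2.34 for a candidate kernel matrix K.
    The tolerance `tol` is an assumed numerical threshold."""
    psd = np.linalg.eigvalsh((K + K.T) / 2).min() >= -tol   # K is positive semi-definite
    centered = abs(K.sum()) <= tol * K.size                 # sum_ij K_ij = 0
    local = np.allclose(gram_to_sq_dists(K)[A],             # neighbor distances preserved
                        gram_to_sq_dists(G)[A], atol=tol)
    return bool(psd and centered and local)


def mds_embed(K, dim):
    """Classical MDS on a centered kernel matrix:
    leading eigenvectors scaled by the square roots of their eigenvalues."""
    w, V = np.linalg.eigh((K + K.T) / 2)     # eigenvalues in ascending order
    top = np.argsort(w)[::-1][:dim]          # indices of the `dim` largest eigenvalues
    return V[:, top] * np.sqrt(np.clip(w[top], 0.0, None))


# Usage on synthetic data. The Gram matrix of the centered input is itself
# feasible (it is the point MVU starts from), and MDS on it reproduces
# the original pairwise distances.
rng = np.random.default_rng(0)
X = rng.normal(size=(20, 3))
Xc = X - X.mean(axis=0)                      # center the data
G = Xc @ Xc.T
A = knn_graph(Xc, k=4)
Y = mds_embed(G, dim=3)

# For a centered K, tr(K) = sum_ij d_ij^2 / (2n), so maximizing the trace
# maximizes the total squared spread of the embedded points.
n = G.shape[0]
spread = gram_to_sq_dists(G).sum() / (2 * n)
```

Scaling the data shows why the last constraint is needed. `2 * G` is still positive semi-definite and centered, and it has a larger trace, but it fails the neighbor-distance check. Without that constraint the trace could be increased without bound.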