Shared Gaussian Process Latent Variables Models - Oxford Brookes ...




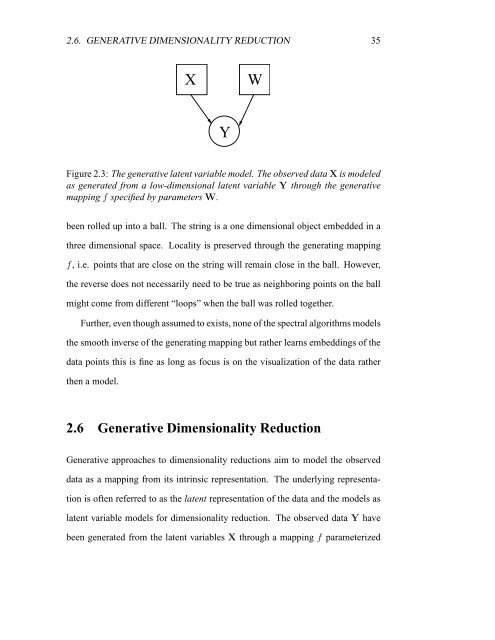

Figure 2.3: The generative latent variable model. The observed data Y is modeled as generated from a low-dimensional latent variable X through the generative mapping f specified by parameters W.

been rolled up into a ball. The string is a one dimensional object embedded in a three dimensional space. Locality is preserved through the generating mapping f, i.e. points that are close on the string will remain close in the ball. However, the reverse does not necessarily need to be true as neighboring points on the ball might come from different “loops” when the ball was rolled together.
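The string-and-ball picture can be checked numerically. The sketch below is only an illustration under stated assumptions: the ball is approximated by a spherical spiral, and the names `roll_string` and `N_LOOPS` are hypothetical, not taken from the text. It measures how far apart consecutive points on the string land in the ball, and then finds two points that are close in the ball although they lie on different loops.

```python
import numpy as np

N_LOOPS = 40  # assumed number of windings; purely illustrative


def roll_string(t, n_loops=N_LOOPS):
    """Hypothetical generating mapping f: position t in [0, 1] along the
    string -> point on a unit sphere (a spherical spiral standing in for
    the rolled-up ball)."""
    theta = np.pi * t                    # polar angle sweeps pole to pole
    phi = 2.0 * np.pi * n_loops * t      # azimuth winds n_loops times
    return np.stack(
        [np.sin(theta) * np.cos(phi),
         np.sin(theta) * np.sin(phi),
         np.cos(theta)],
        axis=-1,
    )


# Densely sample the string (latent, 1-D) and map it into the ball (3-D).
t = np.linspace(0.0, 1.0, 20001)
Y = roll_string(t)

# Forward direction: consecutive points on the string stay close in the ball.
step = np.linalg.norm(np.diff(Y, axis=0), axis=1)
max_step = step.max()

# Reverse direction: take a point near the equator and find its nearest
# neighbour in the ball among points at least half a loop away on the string.
i = len(t) // 2
d_ball = np.linalg.norm(Y - Y[i], axis=1)
other_loop = np.abs(t - t[i]) > 0.5 / N_LOOPS
j = int(np.argmin(np.where(other_loop, d_ball, np.inf)))

ball_gap = d_ball[j]                     # distance measured in the ball
lo, hi = sorted((i, j))
string_gap = step[lo:hi].sum()           # distance measured along the string

print(f"largest gap between consecutive string points: {max_step:.4f}")
print(f"ball distance between the two points:          {ball_gap:.4f}")
print(f"string distance between the same two points:   {string_gap:.4f}")
```

Under these assumptions the two selected points are near neighbours in the ball but roughly one full loop apart along the string, which is why closeness in the observed space alone cannot be used to recover closeness in the intrinsic representation.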

Further, even though such a mapping is assumed to exist, none of the spectral algorithms models the smooth inverse of the generating mapping; they instead learn embeddings of the data points. This is fine as long as the focus is on visualizing the data rather than on building a model.

2.6 Generative Dimensionality Reduction

Generative approaches to dimensionality reduction aim to model the observed data as a mapping from its intrinsic representation. The underlying representation is often referred to as the latent representation of the data, and the models as latent variable models for dimensionality reduction. The observed data Y have been generated from the latent variables X through a mapping f parameterized