Automatic Extraction of Examples for Word Sense Disambiguation

Automatic Extraction of Examples for Word Sense Disambiguation

Automatic Extraction of Examples for Word Sense Disambiguation

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

CHAPTER 2. BASIC APPROACHES TO WORD SENSE DISAMBIGUATION 14<br />

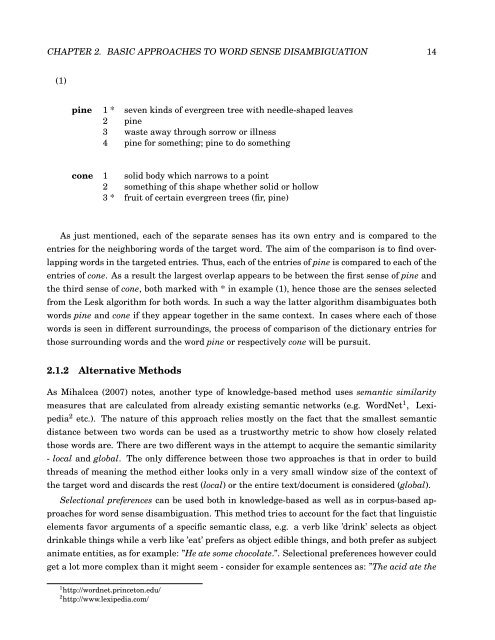

(1)<br />

pine 1 * seven kinds <strong>of</strong> evergreen tree with needle-shaped leaves<br />

2 pine<br />

3 waste away through sorrow or illness<br />

4 pine <strong>for</strong> something; pine to do something<br />

cone 1 solid body which narrows to a point<br />

2 something <strong>of</strong> this shape whether solid or hollow<br />

3 * fruit <strong>of</strong> certain evergreen trees (fir, pine)<br />

As just mentioned, each <strong>of</strong> the separate senses has its own entry and is compared to the<br />

entries <strong>for</strong> the neighboring words <strong>of</strong> the target word. The aim <strong>of</strong> the comparison is to find over-<br />

lapping words in the targeted entries. Thus, each <strong>of</strong> the entries <strong>of</strong> pine is compared to each <strong>of</strong> the<br />

entries <strong>of</strong> cone. As a result the largest overlap appears to be between the first sense <strong>of</strong> pine and<br />

the third sense <strong>of</strong> cone, both marked with * in example (1), hence those are the senses selected<br />

from the Lesk algorithm <strong>for</strong> both words. In such a way the latter algorithm disambiguates both<br />

words pine and cone if they appear together in the same context. In cases where each <strong>of</strong> those<br />

words is seen in different surroundings, the process <strong>of</strong> comparison <strong>of</strong> the dictionary entries <strong>for</strong><br />

those surrounding words and the word pine or respectively cone will be pursuit.<br />

2.1.2 Alternative Methods<br />

As Mihalcea (2007) notes, another type <strong>of</strong> knowledge-based method uses semantic similarity<br />

measures that are calculated from already existing semantic networks (e.g. <strong>Word</strong>Net 1 , Lexi-<br />

pedia 2 etc.). The nature <strong>of</strong> this approach relies mostly on the fact that the smallest semantic<br />

distance between two words can be used as a trustworthy metric to show how closely related<br />

those words are. There are two different ways in the attempt to acquire the semantic similarity<br />

- local and global. The only difference between those two approaches is that in order to build<br />

threads <strong>of</strong> meaning the method either looks only in a very small window size <strong>of</strong> the context <strong>of</strong><br />

the target word and discards the rest (local) or the entire text/document is considered (global).<br />

Selectional preferences can be used both in knowledge-based as well as in corpus-based ap-<br />

proaches <strong>for</strong> word sense disambiguation. This method tries to account <strong>for</strong> the fact that linguistic<br />

elements favor arguments <strong>of</strong> a specific semantic class, e.g. a verb like ’drink’ selects as object<br />

drinkable things while a verb like ’eat’ prefers as object edible things, and both prefer as subject<br />

animate entities, as <strong>for</strong> example: ”He ate some chocolate.”. Selectional preferences however could<br />

get a lot more complex than it might seem - consider <strong>for</strong> example sentences as: ”The acid ate the<br />

1 http://wordnet.princeton.edu/<br />

2 http://www.lexipedia.com/