Automatic Extraction of Examples for Word Sense Disambiguation


CHAPTER 4. EVALUATION OF WSD SYSTEMS

67.3%. From this result we could conclude that the expected performance of the systems in that task lay in the range between the MFS and the inter-annotator agreement (IAA). However, the best performing system was reported to have reached an accuracy of 79.3% (coarse) and 72.9% (fine). These rather unexpected results could mean either that the IAA achieved by the OMWE is not good enough to serve as an upper bound for the task, or that the systems perform considerably better than humans do on the given data.
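The size of the gap can be made explicit with a little arithmetic. The following is only an illustrative sketch using the three figures quoted above; the constant and function names are hypothetical and not part of any evaluation tool used in this work.

```python
# Figures quoted in the text for the Senseval-3 English lexical sample task.
# All names below are hypothetical and chosen only for illustration.
IAA = 67.3          # inter-annotator agreement, in percent
BEST_COARSE = 79.3  # best system, coarse-grained accuracy, in percent
BEST_FINE = 72.9    # best system, fine-grained accuracy, in percent


def margin_over_bound(score: float, upper_bound: float) -> float:
    """Return by how many percentage points `score` exceeds `upper_bound`.

    A positive value means the score lies above the supposed upper bound.
    The result is rounded to one decimal to hide floating-point noise.
    """
    return round(score - upper_bound, 1)


coarse_margin = margin_over_bound(BEST_COARSE, IAA)
fine_margin = margin_over_bound(BEST_FINE, IAA)
print(f"coarse: {coarse_margin:+.1f} points, fine: {fine_margin:+.1f} points")
```

Both margins are positive, which is why the IAA cannot be read as a strict upper bound for this data set.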

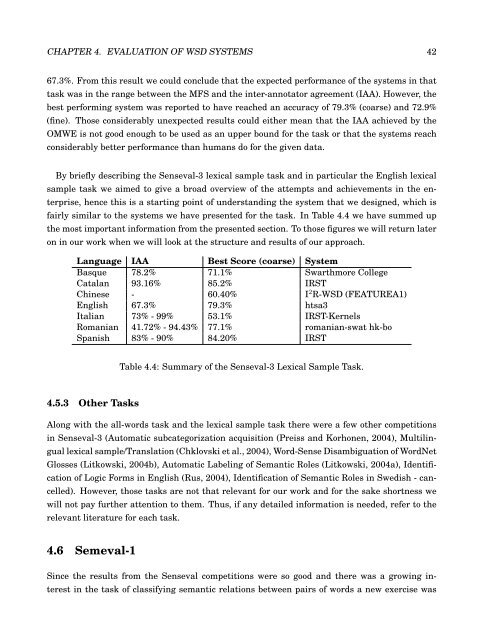

By briefly describing the Senseval-3 lexical sample task, and in particular the English lexical sample task, we aimed to give a broad overview of the attempts and achievements in the enterprise. This is a starting point for understanding the system that we designed, which is fairly similar to the systems we have presented for the task. Table 4.4 sums up the most important information from this section. We will return to those figures later in our work when we look at the structure and results of our approach.

| Language | IAA | Best Score (coarse) | System |
|---|---|---|---|
| Basque | 78.2% | 71.1% | Swarthmore College |
| Catalan | 93.16% | 85.2% | IRST |
| Chinese | - | 60.40% | I2R-WSD (FEATUREA1) |
| English | 67.3% | 79.3% | htsa3 |
| Italian | 73% - 99% | 53.1% | IRST-Kernels |
| Romanian | 41.72% - 94.43% | 77.1% | romanian-swat hk-bo |
| Spanish | 83% - 90% | 84.20% | IRST |

Table 4.4: Summary of the Senseval-3 Lexical Sample Task.

4.5.3 Other Tasks

Along with the all-words task and the lexical sample task, there were a few other competitions in Senseval-3: Automatic subcategorization acquisition (Preiss and Korhonen, 2004), Multilingual lexical sample/Translation (Chklovski et al., 2004), Word-Sense Disambiguation of WordNet Glosses (Litkowski, 2004b), Automatic Labeling of Semantic Roles (Litkowski, 2004a), Identification of Logic Forms in English (Rus, 2004), and Identification of Semantic Roles in Swedish (cancelled). However, those tasks are not directly relevant to our work, and for the sake of brevity we will not discuss them further. If detailed information is needed, refer to the relevant literature for each task.

4.6 Semeval-1<br />

Since the results from the Senseval competitions were so good and there was a growing interest in the task of classifying semantic relations between pairs of words, a new exercise was