Automatic Extraction of Examples for Word Sense Disambiguation

Automatic Extraction of Examples for Word Sense Disambiguation

Automatic Extraction of Examples for Word Sense Disambiguation

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

CHAPTER 2. BASIC APPROACHES TO WORD SENSE DISAMBIGUATION 24<br />

that represent the relevant in<strong>for</strong>mation about the example they refer to. Those attributes are<br />

predefined and picked up uni<strong>for</strong>mly <strong>for</strong> each instance so that each vector has the same number<br />

and type <strong>of</strong> features. There are three types <strong>of</strong> features from which the selection can be made<br />

- local features, global features and syntactic dependencies. Local features describe the narrow<br />

context around the target word - bigrams, trigrams (other n-grams) that are generally consti-<br />

tuted by lemmas, POS tags, word-<strong>for</strong>ms together with their positions with respect to the target<br />

word. Global features on the other hand consider not only the local context around the word<br />

that is being disambiguated, but the whole context (whole sentences, paragraphs, documents)<br />

aiming to describe best the semantic domain in which the target word is found. The third type<br />

<strong>of</strong> features that can be extracted are the syntactic dependencies. They are normally extracted<br />

at a sentence level using heuristic patterns and regular expressions in order to gain a better<br />

representation <strong>for</strong> the syntactic cues and argument-head relations such as subject, object, noun-<br />

modifier, preposition etc.<br />

Commonly, features are selected out <strong>of</strong> a predefined pool <strong>of</strong> features (PF), which consists out<br />

<strong>of</strong> the most relevant features <strong>for</strong> the approached task. We compiled such PF (see Appendix B)<br />

being mostly influenced <strong>for</strong> our choice by (Dinu and Kübler, 2007) and (Mihalcea, 2002).<br />

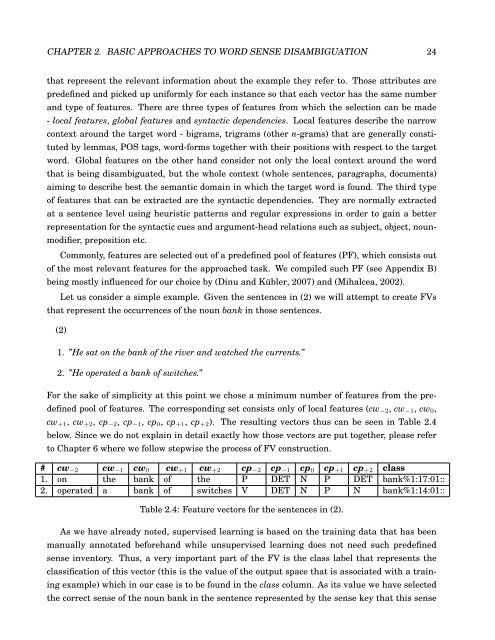

Let us consider a simple example. Given the sentences in (2) we will attempt to create FVs<br />

that represent the occurrences <strong>of</strong> the noun bank in those sentences.<br />

(2)<br />

1. ”He sat on the bank <strong>of</strong> the river and watched the currents.”<br />

2. ”He operated a bank <strong>of</strong> switches.”<br />

For the sake <strong>of</strong> simplicity at this point we chose a minimum number <strong>of</strong> features from the pre-<br />

defined pool <strong>of</strong> features. The corresponding set consists only <strong>of</strong> local features (cw−2, cw−1, cw0,<br />

cw+1, cw+2, cp−2, cp−1, cp0, cp+1, cp+2). The resulting vectors thus can be seen in Table 2.4<br />

below. Since we do not explain in detail exactly how those vectors are put together, please refer<br />

to Chapter 6 where we follow stepwise the process <strong>of</strong> FV construction.<br />

# cw−2 cw−1 cw0 cw+1 cw+2 cp−2 cp−1 cp0 cp+1 cp+2 class<br />

1. on the bank <strong>of</strong> the P DET N P DET bank%1:17:01::<br />

2. operated a bank <strong>of</strong> switches V DET N P N bank%1:14:01::<br />

Table 2.4: Feature vectors <strong>for</strong> the sentences in (2).<br />

As we have already noted, supervised learning is based on the training data that has been<br />

manually annotated be<strong>for</strong>ehand while unsupervised learning does not need such predefined<br />

sense inventory. Thus, a very important part <strong>of</strong> the FV is the class label that represents the<br />

classification <strong>of</strong> this vector (this is the value <strong>of</strong> the output space that is associated with a train-<br />

ing example) which in our case is to be found in the class column. As its value we have selected<br />

the correct sense <strong>of</strong> the noun bank in the sentence represented by the sense key that this sense