Automatic Extraction of Examples for Word Sense Disambiguation

Automatic Extraction of Examples for Word Sense Disambiguation

Automatic Extraction of Examples for Word Sense Disambiguation

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

CHAPTER 6. AUTOMATIC EXTRACTION OF EXAMPLES FOR WSD 61<br />

course, this is good especially <strong>for</strong> fully supervised approaches, since then the training data is<br />

considerably less and exceptional cases can be represented only with a few instances. A negative<br />

feature, however, is the fact that each instance is taken into account with all its features present<br />

in the FV. Since memory-based learners are extremely sensitive to irrelevant features this leads<br />

to a significant decrease in accuracy whenever such features are present.<br />

For this purpose we use a <strong>for</strong>ward-backward algorithm similar to (Dinu and Kübler, 2007)<br />

or (Mihalcea, 2002) which improves the per<strong>for</strong>mance <strong>of</strong> a word-expert by selecting a subset <strong>of</strong><br />

features from the provided FV <strong>for</strong> the targeted word. This subset has less but more relevant <strong>for</strong><br />

the disambiguation process features.<br />

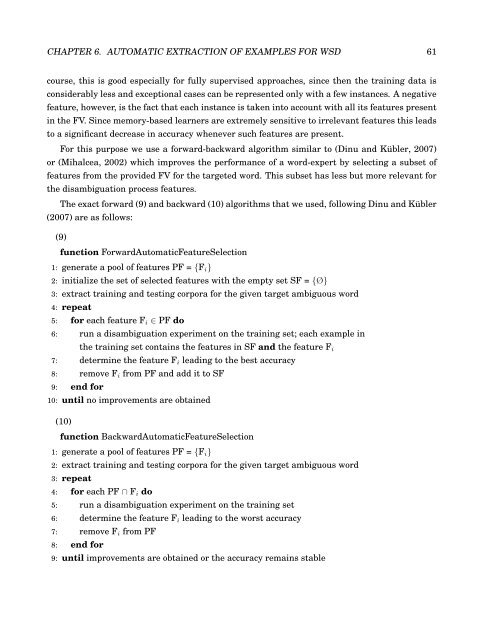

The exact <strong>for</strong>ward (9) and backward (10) algorithms that we used, following Dinu and Kübler<br />

(2007) are as follows:<br />

(9)<br />

function Forward<strong>Automatic</strong>FeatureSelection<br />

1: generate a pool <strong>of</strong> features PF = {Fi}<br />

2: initialize the set <strong>of</strong> selected features with the empty set SF = {Ø}<br />

3: extract training and testing corpora <strong>for</strong> the given target ambiguous word<br />

4: repeat<br />

5: <strong>for</strong> each feature Fi ∈ PF do<br />

6: run a disambiguation experiment on the training set; each example in<br />

the training set contains the features in SF and the feature Fi<br />

7: determine the feature Fi leading to the best accuracy<br />

8: remove Fi from PF and add it to SF<br />

9: end <strong>for</strong><br />

10: until no improvements are obtained<br />

(10)<br />

function Backward<strong>Automatic</strong>FeatureSelection<br />

1: generate a pool <strong>of</strong> features PF = {Fi}<br />

2: extract training and testing corpora <strong>for</strong> the given target ambiguous word<br />

3: repeat<br />

4: <strong>for</strong> each PF ∩ Fi do<br />

5: run a disambiguation experiment on the training set<br />

6: determine the feature Fi leading to the worst accuracy<br />

7: remove Fi from PF<br />

8: end <strong>for</strong><br />

9: until improvements are obtained or the accuracy remains stable