AD Tutorial at the TU Berlin

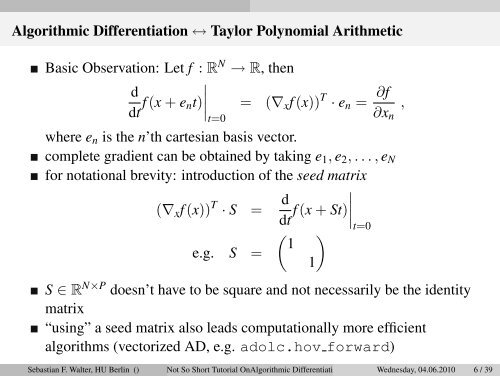

Algorithmic Differentiation ↔ Taylor Polynomial Arithmetic

Basic observation: Let $f: \mathbb{R}^N \to \mathbb{R}$. Then

$$\left.\frac{d}{dt} f(x + e_n t)\right|_{t=0} = (\nabla_x f(x))^T \cdot e_n = \frac{\partial f}{\partial x_n},$$

where $e_n$ is the $n$'th Cartesian basis vector.
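Read as Taylor polynomial arithmetic, the observation says: evaluate $f$ on the degree-1 polynomial $x + e_n t$, drop every term of order $t^2$, and the coefficient of $t$ is $\partial f / \partial x_n$. The Python sketch below illustrates this with a hand-rolled dual-number class. `Taylor1`, `sin`, `partial` and the example `f` are hypothetical names chosen for illustration, not part of any AD library, and Python indices are 0-based.

```python
import math


class Taylor1:
    """Truncated Taylor polynomial a + b*t (terms of order t**2 are dropped)."""

    def __init__(self, a, b=0.0):
        self.a = a  # value at t = 0
        self.b = b  # coefficient of t, i.e. d/dt at t = 0

    @staticmethod
    def lift(y):
        # Plain numbers are constants: their t-coefficient is zero.
        return y if isinstance(y, Taylor1) else Taylor1(y)

    def __add__(self, other):
        other = Taylor1.lift(other)
        return Taylor1(self.a + other.a, self.b + other.b)

    __radd__ = __add__

    def __mul__(self, other):
        other = Taylor1.lift(other)
        # (a + b t)(c + d t) = ac + (ad + bc) t + O(t^2)
        return Taylor1(self.a * other.a, self.a * other.b + self.b * other.a)

    __rmul__ = __mul__


def sin(u):
    # sin(a + b t) = sin(a) + cos(a) b t + O(t^2)
    return Taylor1(math.sin(u.a), math.cos(u.a) * u.b)


def f(x):
    # Hypothetical example function f: R^3 -> R
    return x[0] * x[1] + sin(x[2])


def partial(f, x, n):
    """df/dx_n at x: evaluate f(x + e_n t) and return the t-coefficient."""
    xt = [Taylor1(xi, 1.0 if i == n else 0.0) for i, xi in enumerate(x)]
    return f(xt).b


x = [2.0, 3.0, 0.5]
print(partial(f, x, 0))  # df/dx_0 = x_1 = 3.0
print(partial(f, x, 2))  # df/dx_2 = cos(x_2)
```

Only `+`, `*` and `sin` are overloaded here. A real AD tool provides the same rule for every elementary function.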

The complete gradient can be obtained by taking $e_1, e_2, \dots, e_N$ in turn.
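Taking the basis vectors one at a time requires one forward evaluation per input, i.e. $N$ sweeps for the full gradient. The following minimal sketch assumes degree-1 Taylor polynomials stored as `(value, t_coefficient)` tuples. `tp_add`, `tp_mul`, `gradient` and the example `f` are hypothetical helpers.

```python
def tp_add(u, v):
    # (a + b t) + (c + d t) = (a + c) + (b + d) t
    return (u[0] + v[0], u[1] + v[1])


def tp_mul(u, v):
    # (a + b t)(c + d t) = ac + (ad + bc) t, dropping the t^2 term
    return (u[0] * v[0], u[0] * v[1] + u[1] * v[0])


def f(x):
    # Hypothetical example: f(x) = x_0 * x_1 + x_1 * x_2
    return tp_add(tp_mul(x[0], x[1]), tp_mul(x[1], x[2]))


def gradient(f, x):
    """Full gradient via one forward sweep per Cartesian direction e_n."""
    grad = []
    for n in range(len(x)):
        # Seed x + e_n t: t-coefficient 1 in position n, 0 elsewhere.
        xt = [(xi, 1.0 if i == n else 0.0) for i, xi in enumerate(x)]
        grad.append(f(xt)[1])
    return grad


print(gradient(f, [1.0, 2.0, 3.0]))  # [2.0, 4.0, 2.0]
```

Each sweep recomputes the function values, even though only the seed changes. This redundancy is what the seed matrix below removes.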

For notational brevity, introduce the seed matrix $S$:

$$(\nabla_x f(x))^T \cdot S = \left.\frac{d}{dt} f(x + St)\right|_{t=0}, \qquad t \in \mathbb{R}^P.$$

For example, $S = \begin{pmatrix} 1 & \\ & 1 \end{pmatrix}$.

$S \in \mathbb{R}^{N \times P}$ does not have to be square, nor does it have to be the identity matrix.
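The choice of $S$ determines what is computed. The identity recovers the full gradient, while a single column yields one directional derivative. This small worked example uses the hypothetical function $f(x) = x_1 x_2$ ($N = 2$), which does not appear on the slide:

$$
\begin{aligned}
f(x) &= x_1 x_2, \qquad (\nabla_x f(x))^T = \begin{pmatrix} x_2 & x_1 \end{pmatrix},\\
S &= \begin{pmatrix} 1 & 0 \\ 0 & 1 \end{pmatrix}\ (P = 2): \quad (\nabla_x f(x))^T \cdot S = \begin{pmatrix} x_2 & x_1 \end{pmatrix},\\
S &= \begin{pmatrix} 1 \\ 1 \end{pmatrix}\ (P = 1): \quad (\nabla_x f(x))^T \cdot S = \left.\frac{d}{dt} (x_1 + t)(x_2 + t)\right|_{t=0} = x_1 + x_2.
\end{aligned}
$$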

Using a seed matrix also leads to computationally more efficient algorithms (vectorized AD, e.g. `adolc.hov_forward`).
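The efficiency gain can be illustrated by propagating all $P$ columns of $S$ in a single sweep. Each intermediate carries its value once, plus a vector of $P$ first-order coefficients, so the value part of every operation is computed once instead of $P$ times. This pure-Python sketch does not use ADOL-C. `TaylorVec`, `seeded_derivative` and the example `f` are hypothetical, and $S$ is passed as a list of $N$ rows of length $P$.

```python
class TaylorVec:
    """Value a plus P first-order coefficients b[p] (second-order terms dropped)."""

    def __init__(self, a, b):
        self.a = a        # function value, shared by all P directions
        self.b = list(b)  # one directional derivative per column of S

    def __add__(self, other):
        return TaylorVec(self.a + other.a,
                         [bi + ci for bi, ci in zip(self.b, other.b)])

    def __mul__(self, other):
        # Product rule applied to all P directions; the value product is computed once.
        return TaylorVec(self.a * other.a,
                         [self.a * ci + bi * other.a for bi, ci in zip(self.b, other.b)])


def seeded_derivative(f, x, S):
    """(grad f(x))^T S in one vectorized forward sweep; row n of S seeds x_n."""
    xt = [TaylorVec(xi, row) for xi, row in zip(x, S)]
    return f(xt).b


def f(x):
    # Hypothetical example: f(x) = x_0 * x_1 + x_1 * x_2
    return x[0] * x[1] + x[1] * x[2]


x = [1.0, 2.0, 3.0]
I3 = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
print(seeded_derivative(f, x, I3))                     # [2.0, 4.0, 2.0]
print(seeded_derivative(f, x, [[1.0], [1.0], [1.0]]))  # [8.0]
```

With the $3 \times 3$ identity as seed, this returns the full gradient in one sweep. With a single column ($P = 1$), it returns one directional derivative, here $\partial_0 f + \partial_1 f + \partial_2 f = 8$.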

Sebastian F. Walter, HU Berlin, Not So Short Tutorial On Algorithmic Differentiation, Wednesday, 04.06.2010