ISSUE 152 : Jan/Feb - 2002 - Australian Defence Force Journal

ISSUE 152 : Jan/Feb - 2002 - Australian Defence Force Journal

ISSUE 152 : Jan/Feb - 2002 - Australian Defence Force Journal

- No tags were found...

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

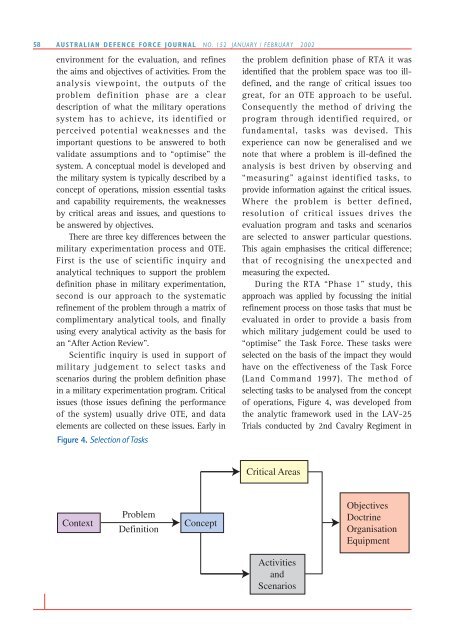

environment for the evaluation, and refines the aims and objectives of activities. From the analysis viewpoint, the outputs of the problem definition phase are a clear description of what the military operations system has to achieve, its identified or perceived potential weaknesses, and the important questions to be answered, both to validate assumptions and to “optimise” the system. A conceptual model is developed in which the military system is typically described by a concept of operations, mission essential tasks and capability requirements; its weaknesses by critical areas and issues; and the questions to be answered by objectives.

There are three key differences between the military experimentation process and OTE. The first is the use of scientific inquiry and analytical techniques to support the problem definition phase in military experimentation; the second is our approach to the systematic refinement of the problem through a matrix of complementary analytical tools; and the third is the use of every analytical activity as the basis for an “After Action Review”.

Scientific inquiry is used in support of military judgement to select tasks and scenarios during the problem definition phase of a military experimentation program. Critical issues (those issues defining the performance of the system) usually drive OTE, and data elements are collected on these issues. Early in the problem definition phase of RTA, it was identified that the problem space was too ill-defined, and the range of critical issues too great, for an OTE approach to be useful. Consequently, the method of driving the program through identified required, or fundamental, tasks was devised.

Figure 4. Selection of Tasks
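As a minimal sketch only, the Python below records the problem definition outputs described above in a hypothetical `ProblemDefinition` record and applies a hypothetical `evaluation_driver` rule: critical issues drive the evaluation, as in OTE, unless the problem is judged ill-defined or the range of critical issues is too great, in which case the program is driven through fundamental tasks. The class, the function, the `well_defined` flag and the `max_issues` threshold are illustrative assumptions; the article describes this as an assessment made early in the RTA problem definition phase and gives no numeric cut-off.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ProblemDefinition:
    """Hypothetical record of problem definition outputs (illustrative only)."""

    concept_of_operations: str
    mission_essential_tasks: List[str]
    capability_requirements: List[str]
    # Weaknesses, grouped as critical area -> critical issues
    critical_issues: Dict[str, List[str]] = field(default_factory=dict)
    # Questions to be answered, grouped by objective
    questions: Dict[str, List[str]] = field(default_factory=dict)
    # Assumed flag standing in for the judgement that the problem is well defined
    well_defined: bool = True


def evaluation_driver(problem: ProblemDefinition, max_issues: int = 10) -> str:
    """Choose what drives the evaluation program (hypothetical rule).

    Returns "critical issues" (the usual OTE approach) when the problem is
    well defined and the number of critical issues is manageable; otherwise
    returns "tasks", i.e. drive the program through fundamental tasks.
    max_issues is an assumed threshold, not a value from the source.
    """
    n_issues = sum(len(issues) for issues in problem.critical_issues.values())
    if not problem.well_defined or n_issues > max_issues:
        return "tasks"
    return "critical issues"


# Illustrative use with placeholder content (not data from the RTA trials)
example = ProblemDefinition(
    concept_of_operations="placeholder concept of operations",
    mission_essential_tasks=["task A", "task B"],
    capability_requirements=["requirement X"],
    critical_issues={"area 1": ["issue 1", "issue 2"]},
    well_defined=False,
)
print(evaluation_driver(example))  # → tasks
```

Grouping weaknesses by critical area and questions by objective mirrors the conceptual model in the text; the numeric threshold exists only to make the rule executable.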
This experience can now be generalised, and we note that where a problem is ill-defined the analysis is best driven by observing and “measuring” against identified tasks to provide information against the critical issues. Where the problem is better defined, resolution of critical issues drives the evaluation program, and tasks and scenarios are selected to answer particular questions. This again emphasises the critical difference: that of recognising the unexpected and measuring the expected.

During the RTA “Phase 1” study, this approach was applied by focussing the initial refinement process on those tasks that must be evaluated in order to provide a basis from which military judgement could be used to “optimise” the Task Force. These tasks were selected on the basis of the impact they would have on the effectiveness of the Task Force (Land Command 1997). The method of selecting tasks to be analysed from the concept of operations (Figure 4) was developed from the analytic framework used in the LAV-25 Trials conducted by 2nd Cavalry Regiment in

[Figure 4 labels: Critical Areas; Context; Problem Definition; Concept; Objectives; Doctrine; Organisation; Equipment; Activities and Scenarios]