Iraqi Kurdistan All in the Timing

GEO_ExPro_v12i6


Recent Advances in Technology

code shown in the second table. We see that the addition of only three lines turns the conventional program into an MPI parallel program. At the start of the computation, a copy of the program starts executing on each of the available processors. The first two lines are calls provided by the MPI system. They give each of the executing programs the total number of processors (the variable np) and a unique number in the range 0 to np-1 (the variable myid) identifying each processor.

Let us assume we have a system where np equals 1,000 and that the number of rectangles is one million. The program then numbers the rectangles used in the computation from 1 to 1,000,000, and processor number 0 computes the sum of the areas of rectangles 1, 1001, 2001, and so on up to 999,001. Processor number 1 does exactly the same, except that it computes the sum of the areas of rectangles 2, 1002, 2002, and so on. When each processor has finished computing its sum, a single MPI_Reduce call combines the sums from all 1,000 processors into a final sum.

Ideally, the speedup for this kind of problem is directly proportional to the number of processors used. Running the program in Table 2 with 100 million rectangles on 12 processors gives a speedup of about 11.25 (a parallel efficiency of 11.25/12, or roughly 94%), which is close to what one would expect once some time is allowed for overhead. However,

MPI_Comm_size(MPI_COMM_WORLD,&np);    /* np: total number of processes */
MPI_Comm_rank(MPI_COMM_WORLD,&myid);  /* myid: this process's rank, 0 to np-1 */

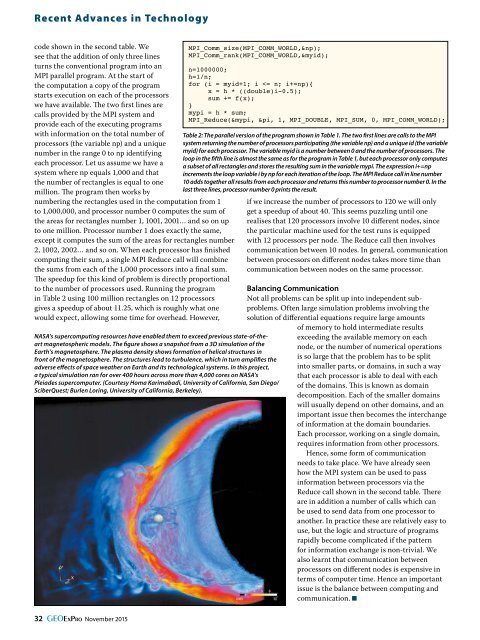

NASA’s supercomputing resources have enabled researchers to go beyond previous state-of-the-art magnetospheric models. The figure shows a snapshot from a 3D simulation of the Earth’s magnetosphere. The plasma density shows helical structures forming in front of the magnetosphere. These structures lead to turbulence, which in turn amplifies the adverse effects of space weather on Earth and its technological systems. In this project, a typical simulation ran for over 400 hours across more than 4,000 cores on NASA’s Pleiades supercomputer. (Courtesy Homa Karimabadi, University of California, San Diego/SciberQuest; Burlen Loring, University of California, Berkeley).

n=1000000;  /* number of rectangles */
h=1.0/n;    /* rectangle width; 1.0 avoids integer division */

for (i = myid+1; i