- Page 1 and 2:

Textbook of Patient Safety and Clin

- Page 3 and 4:

Liam Donaldson • Walter Ricciardi

- Page 5 and 6:

Foreword As a member of the 17th le

- Page 7 and 8:

Preface Despite the extensive atten

- Page 9 and 10:

Acknowledgements The volume editors

- Page 11 and 12:

xii Contents 11 Adverse Event Inves

- Page 13 and 14:

Part I Introduction

- Page 15 and 16: 4 explain how to prepare and update

- Page 17 and 18: 6 considered preventable) [11]. Fur

- Page 19 and 20: 8 W. Ricciardi and F. Cascini the l

- Page 21 and 22: 10 W. Ricciardi and F. Cascini inte

- Page 23 and 24: 12 maximized or perhaps even realiz

- Page 25 and 26: 14 steps in obtaining, managing, an

- Page 27 and 28: 16 quality improvement methodologie

- Page 29 and 30: 18 99. Morciano C, et al. Policies

- Page 31 and 32: 20 Reason travelled the world makin

- Page 33 and 34: 22 For each hospital unit, other do

- Page 35 and 36: 24 As head of clinical risk managem

- Page 37 and 38: 26 R. Tartaglia Table 2.1 Differenc

- Page 39 and 40: 28 R. Tartaglia Open Access This ch

- Page 41 and 42: 30 otherwise efficient brains lead

- Page 43 and 44: 32 • A routine violation is basic

- Page 45 and 46: 34 Table 3.1 Framework of contribut

- Page 47 and 48: 36 H. Higham and C. Vincent The con

- Page 49 and 50: 38 H. Higham and C. Vincent recogni

- Page 51 and 52: 40 H. Higham and C. Vincent Table 3

- Page 53 and 54: 42 assessment and prevention was no

- Page 55 and 56: 44 of human error, 2nd: 1983: Bella

- Page 57 and 58: 46 ment of theories on how to deliv

- Page 59 and 60: 48 all times, not only when there i

- Page 61 and 62: 50 healthcare, pursued by enthusias

- Page 63 and 64: 52 15. National Academies of Scienc

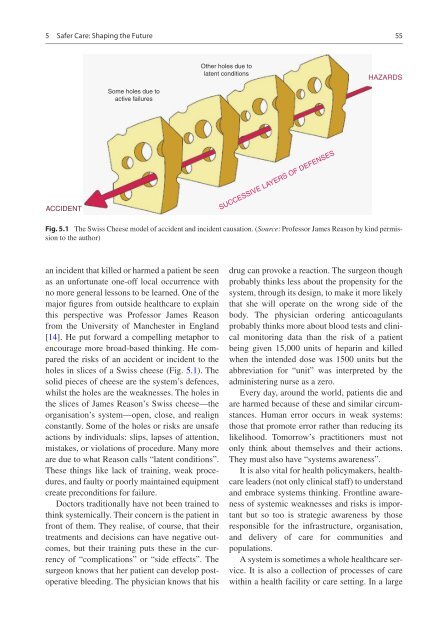

- Page 65: 54 In this chapter, I will reflect

- Page 69 and 70: 58 medicals, these are part of the

- Page 71 and 72: 60 facility and could therefore bec

- Page 73 and 74: 62 health. It is hoped that the Che

- Page 75 and 76: 64 Medication Without Harm, invites

- Page 77 and 78: 66 21. World Alliance for Patient S

- Page 79 and 80: 68 death of my late husband, Pat, f

- Page 81 and 82: 70 S. Sheridan et al. test. A separ

- Page 83 and 84: 72 enced some kind of harm due to h

- Page 85 and 86: 74 S. Sheridan et al. member of The

- Page 87 and 88: 76 6.7.1 Example: Canada 6.7.1.1 Wo

- Page 89 and 90: 78 All stakeholders must accept, va

- Page 91 and 92: Human Factors and Ergonomics in Hea

- Page 93 and 94: 7 Human Factors and Ergonomics in H

- Page 95 and 96: 7 Human Factors and Ergonomics in H

- Page 97 and 98: 7 Human Factors and Ergonomics in H

- Page 99 and 100: 7 Human Factors and Ergonomics in H

- Page 101 and 102: Patient Safety in the World Neelam

- Page 103 and 104: 8 Patient Safety in the World 95 8.

- Page 105 and 106: 8 Patient Safety in the World adopt

- Page 107 and 108: Infection Prevention and Control An

- Page 109 and 110: 9 Infection Prevention and Control

- Page 111 and 112: 9 Infection Prevention and Control

- Page 113 and 114: 9 Infection Prevention and Control

- Page 115 and 116: 9 Infection Prevention and Control

- Page 117 and 118:

9 Infection Prevention and Control

- Page 119 and 120:

9 Infection Prevention and Control

- Page 121 and 122:

9 Infection Prevention and Control

- Page 123 and 124:

9 Infection Prevention and Control

- Page 125 and 126:

The Patient Journey Elena Beleffi,

- Page 127 and 128:

10 The Patient Journey 119 End of e

- Page 129 and 130:

10 The Patient Journey 121 Fig. 10.

- Page 131 and 132:

10 The Patient Journey advocates) i

- Page 133 and 134:

10 The Patient Journey Table 10.2 T

- Page 135 and 136:

10 The Patient Journey 7. Internati

- Page 137 and 138:

130 from these interactions that pe

- Page 139 and 140:

132 Data integration is certainly t

- Page 141 and 142:

134 organizational processes, such

- Page 143 and 144:

136 Table 11.3 Scheme of contributo

- Page 145 and 146:

138 tocol requires one or more exte

- Page 147 and 148:

140 system failure. Quantification

- Page 149 and 150:

142 8. Carayon P, editor. Handbook

- Page 151 and 152:

144 similar industries, in terms of

- Page 153 and 154:

146 (2002) argue is a more effectiv

- Page 155 and 156:

148 for research in patient safety

- Page 157 and 158:

150 hospital in Kenya with focus on

- Page 159 and 160:

152 Haines et al. (2002) proposed a

- Page 161 and 162:

154 G. Dagliana et al. Fast Track -

- Page 163 and 164:

156 G. Dagliana et al. 11. Jha AK,

- Page 165 and 166:

Part III Patient Safety in the Main

- Page 167 and 168:

162 development of new receptor ant

- Page 169 and 170:

164 Physician burnout and the psych

- Page 171 and 172:

166 S. Damiani et al. Fig. 13.1 In

- Page 173 and 174:

168 mortality and has rapidly becom

- Page 175 and 176:

170 ing to patient safety and share

- Page 177 and 178:

172 ability to cope with the workin

- Page 179 and 180:

174 2. Vlayen A, Verelst S, Bekkeri

- Page 181 and 182:

Safe Surgery Saves Lives Francesco

- Page 183 and 184:

14 Safe Surgery Saves Lives manner

- Page 185 and 186:

14 Safe Surgery Saves Lives not yet

- Page 187 and 188:

14 Safe Surgery Saves Lives In a su

- Page 189 and 190:

14 Safe Surgery Saves Lives 14.15.1

- Page 191 and 192:

14 Safe Surgery Saves Lives • Tea

- Page 193 and 194:

Emergency Department Clinical Risk

- Page 195 and 196:

15 Emergency Department Clinical Ri

- Page 197 and 198:

15 Emergency Department Clinical Ri

- Page 199 and 200:

15 Emergency Department Clinical Ri

- Page 201 and 202:

15 Emergency Department Clinical Ri

- Page 203 and 204:

15 Emergency Department Clinical Ri

- Page 205 and 206:

15 Emergency Department Clinical Ri

- Page 207 and 208:

15 Emergency Department Clinical Ri

- Page 209 and 210:

206 • Pragmatic: in a healthcare

- Page 211 and 212:

208 Risks associated with pregravid

- Page 213 and 214:

210 Table 16.1 Common and Recurrent

- Page 215 and 216:

212 improved clinical surveillance

- Page 217 and 218:

214 (only IM wards or all medical w

- Page 219 and 220:

216 M. L. Regina et al. Table 17.1

- Page 221 and 222:

218 Table 17.2 Types and preventabi

- Page 223 and 224:

220 nicians were certain resulted w

- Page 225 and 226:

222 M. L. Regina et al. Table 17.5

- Page 227 and 228:

224 Table 17.6 “Debiasing questio

- Page 229 and 230:

226 in care. At a minimum, medicati

- Page 231 and 232:

228 cation rates there substantiall

- Page 233 and 234:

230 well-structured, concise, focus

- Page 235 and 236:

232 M. L. Regina et al. Fig. 17.3 W

- Page 237 and 238:

234 M. L. Regina et al. Table 17.12

- Page 239 and 240:

236 Table 17.14 Risk factors for as

- Page 241 and 242:

238 Table 17.16 Common risk factors

- Page 243 and 244:

240 In patients with kidney disease

- Page 245 and 246:

242 Table 17.19 Clinical response t

- Page 247 and 248:

244 stones. Giordano’s test posit

- Page 249 and 250:

246 in practice. Swiss Med Wkly. 20

- Page 251 and 252:

248 procedures done by general inte

- Page 253 and 254:

250 tion: a systematic review and m

- Page 255 and 256:

252 162. Improvement IfH. What is a

- Page 257 and 258:

254 and therapeutic strategies, all

- Page 259 and 260:

256 direct effect of radiotherapy o

- Page 261 and 262:

258 obtaining treatment consent and

- Page 263 and 264:

260 A. Marcolongo et al. Table 18.1

- Page 265 and 266:

262 A. Marcolongo et al. Toxicities

- Page 267 and 268:

264 nies that are aimed at building

- Page 269 and 270:

266 A. Marcolongo et al. tissues. B

- Page 271 and 272:

268 and breast cancer) and can be r

- Page 273 and 274:

270 happened during the first three

- Page 275 and 276:

272 40. World Health Organization (

- Page 277 and 278:

Patient Safety in Orthopedics and T

- Page 279 and 280:

19 Patient Safety in Orthopedics an

- Page 281 and 282:

19 Patient Safety in Orthopedics an

- Page 283 and 284:

19 Patient Safety in Orthopedics an

- Page 285 and 286:

19 Patient Safety in Orthopedics an

- Page 287 and 288:

19 Patient Safety in Orthopedics an

- Page 289 and 290:

Patient Safety and Risk Management

- Page 291 and 292:

20 Patient Safety and Risk Manageme

- Page 293 and 294:

20 Patient Safety and Risk Manageme

- Page 295 and 296:

20 Patient Safety and Risk Manageme

- Page 297 and 298:

20 Patient Safety and Risk Manageme

- Page 299 and 300:

20 Patient Safety and Risk Manageme

- Page 301 and 302:

Patient Safety in Pediatrics Sara A

- Page 303 and 304:

21 Patient Safety in Pediatrics eve

- Page 305 and 306:

21 Patient Safety in Pediatrics of

- Page 307 and 308:

21 Patient Safety in Pediatrics Cri

- Page 309 and 310:

21 Patient Safety in Pediatrics of

- Page 311 and 312:

Patient Safety in Radiology Mahdieh

- Page 313 and 314:

22 Patient Safety in Radiology The

- Page 315 and 316:

22 Patient Safety in Radiology indu

- Page 317 and 318:

22 Patient Safety in Radiology CA a

- Page 319 and 320:

22 Patient Safety in Radiology Refe

- Page 321 and 322:

Organ Donor Risk Stratification in

- Page 323 and 324:

23 Organ Donor Risk Stratification

- Page 325 and 326:

23 Organ Donor Risk Stratification

- Page 327 and 328:

326 M. Plebani et al. Fig. 24.1 The

- Page 329 and 330:

328 ical laboratory and the definit

- Page 331 and 332:

330 • Findings of external accred

- Page 333 and 334:

332 M. Plebani et al. Fig. 24.2 Mod

- Page 335 and 336:

334 laboratory medicine professiona

- Page 337 and 338:

336 (WHO) website on the incidence

- Page 339 and 340:

338 54. Magnezi R, Hemi A, Hemi R.

- Page 341 and 342:

340 In 2010 this condition represen

- Page 343 and 344:

342 which should be slightly smalle

- Page 345 and 346:

344 needed by the pulse waveform to

- Page 347 and 348:

346 washed properly prior to steril

- Page 349 and 350:

348 ocular reaction without infecti

- Page 351 and 352:

350 large retrospective cohort stud

- Page 353 and 354:

352 • Lack of appropriate skills.

- Page 355 and 356:

354 scription [126]. Furthermore, a

- Page 357 and 358:

356 time and knowledge to young doc

- Page 359 and 360:

358 48. Barry P, Gettinby G, Lees F

- Page 361 and 362:

360 implant versus intravitreal ant

- Page 363 and 364:

Part IV Healthcare Organization

- Page 365 and 366:

366 causes of safety incidents and

- Page 367 and 368:

368 • Human factors such as teamw

- Page 369 and 370:

370 access to the Emergency Room. F

- Page 371 and 372:

372 that many doctors simply choose

- Page 373 and 374:

374 E. Alti and A. Mereu Open Acces

- Page 375 and 376:

376 components (people, technology,

- Page 377 and 378:

378 J. Braithwaite et al. Hence, cl

- Page 379 and 380:

380 stakeholders to figure out how

- Page 381 and 382:

382 J. Braithwaite et al. Clinician

- Page 383 and 384:

384 27.5.3 Social Networks in a War

- Page 385 and 386:

386 J. Braithwaite et al. Fig. 27.9

- Page 387 and 388:

388 representations the essential l

- Page 389 and 390:

390 21. Pomare C, Churruca K, Ellis

- Page 391 and 392:

Measuring Clinical Workflow to Impr

- Page 393 and 394:

28 Measuring Clinical Workflow to I

- Page 395 and 396:

28 Measuring Clinical Workflow to I

- Page 397 and 398:

28 Measuring Clinical Workflow to I

- Page 399 and 400:

28 Measuring Clinical Workflow to I

- Page 401 and 402:

Shiftwork Organization Giovanni Cos

- Page 403 and 404:

29 Shiftwork Organization influenci

- Page 405 and 406:

29 Shiftwork Organization Many epid

- Page 407 and 408:

29 Shiftwork Organization quences o

- Page 409 and 410:

29 Shiftwork Organization in shift

- Page 411 and 412:

Non-technical Skills in Healthcare

- Page 413 and 414:

30 Non-technical Skills in Healthca

- Page 415 and 416:

30 Non-technical Skills in Healthca

- Page 417 and 418:

30 Non-technical Skills in Healthca

- Page 419 and 420:

30 Non-technical Skills in Healthca

- Page 421 and 422:

30 Non-technical Skills in Healthca

- Page 423 and 424:

30 Non-technical Skills in Healthca

- Page 425 and 426:

30 Non-technical Skills in Healthca

- Page 427 and 428:

30 Non-technical Skills in Healthca

- Page 429 and 430:

30 Non-technical Skills in Healthca

- Page 431 and 432:

30 Non-technical Skills in Healthca

- Page 433 and 434:

Medication Safety Hooi Cheng Soon,

- Page 435 and 436:

31 Medication Safety • Be familia

- Page 437 and 438:

31 Medication Safety 439 Table 31.1

- Page 439 and 440:

31 Medication Safety to be made bet

- Page 441 and 442:

31 Medication Safety medication reg

- Page 443 and 444:

31 Medication Safety and contexts,

- Page 445 and 446:

31 Medication Safety quently when m

- Page 447 and 448:

31 Medication Safety 31.5 Final Rec

- Page 449 and 450:

31 Medication Safety 451 ity and im

- Page 451 and 452:

31 Medication Safety 453 Open Acces

- Page 453 and 454:

456 studies in recent years have hi

- Page 455 and 456:

458 F. Ranzani and O. Parlangeli in

- Page 457 and 458:

460 Any difficulty encountered by t

- Page 459 and 460:

462 32.4.2 The Usability Assessment

- Page 461 and 462:

464 23. Parlangeli O, Mengoni G, Gu

- Page 463 and 464:

466 S. Ushiro et al. No-fault compe

- Page 465 and 466:

468 33.2 The Meaning of “No-Fault

- Page 467 and 468:

470 S. Ushiro et al. figure of 2.3

- Page 469 and 470:

472 S. Ushiro et al. Childbirth fac

- Page 471 and 472:

474 • When cerebral palsy is caus

- Page 473 and 474:

476 (c) (d) (e) (f) (g) (h) (i) (j)

- Page 475 and 476:

478 115 bpm to 80 bpm and complete

- Page 477 and 478:

480 • It is desirable that system

- Page 479 and 480:

482 cific monitoring procedures (1)

- Page 481 and 482:

484 Res. 2019;45(3):493-513. https:

- Page 483 and 484:

486 19. In the most severe cases, t

- Page 485 and 486:

488 Phases 3 and 4, strong emphasis

- Page 487 and 488:

490 Phases 5 and 6 focus is on the

- Page 489 and 490:

492 during a pandemic to promote tr

- Page 491 and 492:

494 M. Tanzini et al. the mother, p

- Page 493:

496 All four abilities are necessar