3D DISCRETE DISLOCATION DYNAMICS APPLIED TO ... - NUMODIS

3D DISCRETE DISLOCATION DYNAMICS APPLIED TO ... - NUMODIS

3D DISCRETE DISLOCATION DYNAMICS APPLIED TO ... - NUMODIS

You also want an ePaper? Increase the reach of your titles

YUMPU automatically turns print PDFs into web optimized ePapers that Google loves.

3.3 Parallelization of the serial DDD program 71<br />

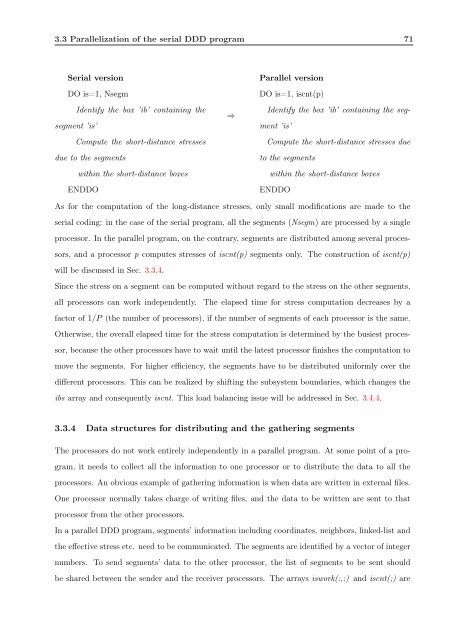

Serial version<br />

DO is=1, Nsegm<br />

segment ’is’<br />

Identify the box ’ib’ containing the<br />

Compute the short-distance stresses<br />

due to the segments<br />

within the short-distance boxes<br />

ENDDO<br />

⇒<br />

Parallel version<br />

DO is=1, iscnt(p)<br />

Identify the box ’ib’ containing the seg-<br />

ment ’is’<br />

Compute the short-distance stresses due<br />

to the segments<br />

within the short-distance boxes<br />

ENDDO<br />

As for the computation of the long-distance stresses, only small modifications are made to the<br />

serial coding: in the case of the serial program, all the segments (Nsegm) are processed by a single<br />

processor. In the parallel program, on the contrary, segments are distributed among several proces-<br />

sors, and a processor p computes stresses of iscnt(p) segments only. The construction of iscnt(p)<br />

will be discussed in Sec. 3.3.4.<br />

Since the stress on a segment can be computed without regard to the stress on the other segments,<br />

all processors can work independently. The elapsed time for stress computation decreases by a<br />

factor of 1/P (the number of processors), if the number of segments of each processor is the same.<br />

Otherwise, the overall elapsed time for the stress computation is determined by the busiest proces-<br />

sor, because the other processors have to wait until the latest processor finishes the computation to<br />

move the segments. For higher efficiency, the segments have to be distributed uniformly over the<br />

different processors. This can be realized by shifting the subsystem boundaries, which changes the<br />

ibs array and consequently iscnt. This load balancing issue will be addressed in Sec. 3.4.4.<br />

3.3.4 Data structures for distributing and the gathering segments<br />

The processors do not work entirely independently in a parallel program. At some point of a pro-<br />

gram, it needs to collect all the information to one processor or to distribute the data to all the<br />

processors. An obvious example of gathering information is when data are written in external files.<br />

One processor normally takes charge of writing files, and the data to be written are sent to that<br />

processor from the other processors.<br />

In a parallel DDD program, segments’ information including coordinates, neighbors, linked-list and<br />

the effective stress etc. need to be communicated. The segments are identified by a vector of integer<br />

numbers. To send segments’ data to the other processor, the list of segments to be sent should<br />

be shared between the sender and the receiver processors. The arrays iswork(:,:) and iscnt(;) are