Virtual Connect SAN Cookbook.pdf - Sallustio.ch


HP Virtual Connect Fibre Channel Networking Scenarios Cookbook

Part Number c01702940

Second Edition (May 2011)

© Copyright 2009 Hewlett-Packard Development Company, L.P.

The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.

Confidential computer software. Valid license from HP required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

Microsoft, Windows, and Windows Server are U.S. registered trademarks of Microsoft Corporation. Intel, Pentium, and Itanium are trademarks or registered trademarks of Intel Corporation or its subsidiaries in the United States and other countries. UNIX is a registered trademark of The Open Group.

Intended audience

This document is for the person who installs, administers, and troubleshoots HP BladeSystem c-Class products. Only persons experienced in server blade technology and configuration should attempt these procedures. HP assumes you are qualified in the servicing of computer equipment and trained in recognizing hazards in products with hazardous energy levels.

Contents<br />

About this document ...................................................................................................................... 6<br />

Introduction ................................................................................................................................................. 6<br />

Considerations and concepts ......................................................................................................... 7<br />

Key takeaways ............................................................................................................................................ 7<br />

Description of the VC <strong>SAN</strong> modules ............................................................................................................... 8<br />

<strong>Virtual</strong> <strong>Connect</strong> Fibre Channel support ........................................................................................................... 9<br />

Supported VC <strong>SAN</strong> Fabric configuration ...................................................................................................... 11<br />

Multi-enclosure VC Domain configuration ..................................................................................................... 13<br />

Multiple-fabric Support ................................................................................................................................ 15<br />

NPIV ......................................................................................................................................................... 17<br />

Port Group ................................................................................................................................................ 19<br />

Scenario 1: Simplest scenario with multipathing ............................................................................. 25<br />

Overview .................................................................................................................................................. 25<br />

Benefits ..................................................................................................................................................... 26<br />

Considerations ........................................................................................................................................... 26<br />

Requirements ............................................................................................................................................. 26<br />

Installation and configuration ...................................................................................................................... 27<br />

Swit<strong>ch</strong> configuration ......................................................................................................................... 27<br />

VC CLI commands ............................................................................................................................ 27<br />

Configuring the VC module ............................................................................................................... 27<br />

Defining a new VC <strong>SAN</strong> Fabric via GUI ............................................................................................. 27<br />

Defining a new VC <strong>SAN</strong> Fabric via CLI .............................................................................................. 30<br />

Blade Server configuration .......................................................................................................................... 31<br />

Verification ................................................................................................................................................ 31<br />

Summary ................................................................................................................................................... 31<br />

Scenario 2: VC <strong>SAN</strong> fabrics with Dynamic Login Balancing connected to the same redundant <strong>SAN</strong> fabric<br />

................................................................................................................................................ 32<br />

Overview .................................................................................................................................................. 32<br />

Benefits ..................................................................................................................................................... 35<br />

Considerations ........................................................................................................................................... 35<br />

Requirements ............................................................................................................................................. 36<br />

Installation and configuration ...................................................................................................................... 36<br />

Swit<strong>ch</strong> configuration ......................................................................................................................... 36<br />

VC CLI commands ............................................................................................................................ 36<br />

Configuring the VC module ............................................................................................................... 36<br />

Defining a new VC <strong>SAN</strong> Fabric via GUI ............................................................................................. 36<br />

Defining a new VC <strong>SAN</strong> Fabric via CLI .............................................................................................. 40<br />

Blade Server configuration .......................................................................................................................... 41<br />

Verification ................................................................................................................................................ 41<br />

Summary ................................................................................................................................................... 41<br />

Scenario 3: Multiple VC <strong>SAN</strong> fabrics with Dynamic Login Balancing connected to the same redundant<br />

<strong>SAN</strong> fabric with different priority tiers ........................................................................................... 42<br />

Overview .................................................................................................................................................. 42<br />

Benefits ..................................................................................................................................................... 43<br />

Contents 3

Considerations ........................................................................................................................................... 44<br />

Requirements ............................................................................................................................................. 44<br />

Installation and configuration ...................................................................................................................... 44<br />

Swit<strong>ch</strong> configuration ......................................................................................................................... 44<br />

VC CLI commands ............................................................................................................................ 44<br />

Configuring the VC module ............................................................................................................... 44<br />

Defining a new VC <strong>SAN</strong> Fabric via GUI ............................................................................................. 45<br />

Defining a new VC <strong>SAN</strong> Fabric via CLI .............................................................................................. 49<br />

Blade Server configuration .......................................................................................................................... 50<br />

Verification ................................................................................................................................................ 50<br />

Summary ................................................................................................................................................... 52<br />

Scenario 4: Multiple VC <strong>SAN</strong> fabrics with Dynamic Login Balancing connected to several redundant <strong>SAN</strong><br />

fabric with different priority tiers ................................................................................................... 53<br />

Overview .................................................................................................................................................. 53<br />

Benefits ..................................................................................................................................................... 54<br />

Considerations ........................................................................................................................................... 55<br />

Requirements ............................................................................................................................................. 55<br />

Installation and configuration ...................................................................................................................... 55<br />

Swit<strong>ch</strong> configuration ......................................................................................................................... 55<br />

VC CLI commands ............................................................................................................................ 55<br />

Configuring the VC module ............................................................................................................... 55<br />

Defining a new VC <strong>SAN</strong> Fabric via GUI ............................................................................................. 56<br />

Defining a new VC <strong>SAN</strong> Fabric via CLI .............................................................................................. 60<br />

Blade Server configuration .......................................................................................................................... 61<br />

Verification ................................................................................................................................................ 61<br />

Summary ................................................................................................................................................... 63<br />

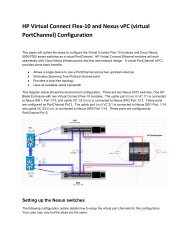

Scenario 5: <strong>SAN</strong> connectivity with HP <strong>Virtual</strong> <strong>Connect</strong> FlexFabric 10Gb/24-Port module ................... 64<br />

Overview .................................................................................................................................................. 64<br />

<strong>Virtual</strong> connect FlexFabric Uplink Port Mappings ........................................................................................... 66<br />

Requirements ............................................................................................................................................. 68<br />

Installation and configuration ...................................................................................................................... 68<br />

Swit<strong>ch</strong> configuration ......................................................................................................................... 68<br />

VC CLI commands ............................................................................................................................ 68<br />

Configuring the VC FlexFabric module ............................................................................................... 68<br />

Defining a new VC <strong>SAN</strong> Fabric via GUI ............................................................................................. 69<br />

Defining a new VC <strong>SAN</strong> Fabric via CLI .............................................................................................. 75<br />

Blade Server configuration .......................................................................................................................... 76<br />

Verification ................................................................................................................................................ 76<br />

Summary ................................................................................................................................................... 76<br />

Scenario 6: Adding VC Fabric uplink ports with Dynamic Login Balancing to an existing VC <strong>SAN</strong> fabric<br />

................................................................................................................................................ 77<br />

Overview .................................................................................................................................................. 77<br />

Benefits ..................................................................................................................................................... 77<br />

Initial configuration..................................................................................................................................... 77<br />

Adding an additional uplink port ................................................................................................................. 82<br />

Via the GUI ..................................................................................................................................... 82<br />

Via the CLI....................................................................................................................................... 83<br />

Login Redistribution .................................................................................................................................... 84<br />

Manual Login Redistribution via the GUI ............................................................................................. 86<br />

Manual Login Redistribution via the CLI .............................................................................................. 87<br />

Verification ................................................................................................................................................ 88<br />

Contents 4

Summary ................................................................................................................................................... 89<br />

Scenario 7: Cisco MDS Dynamic Port VSAN Membership

Overview
Benefits
Requirements
Installation and configuration
Summary

Appendix A: Blade Server configuration with Virtual Connect Fibre Channel Modules
Defining a Server Profile with FC Connections, via GUI
Defining a Server Profile with FC Connections, via CLI
Defining a Boot from SAN Server Profile via GUI
Defining a Boot from SAN Server Profile via CLI

Appendix B: Blade Server configuration with Virtual Connect FlexFabric Modules
Defining a Server Profile with FCoE Connections, via GUI
Defining a Server Profile with FCoE Connections, via CLI
Defining a Boot from SAN Server Profile via GUI
Defining a Boot from SAN Server Profile via CLI

Appendix C: Brocade SAN switch NPIV configuration
Enabling NPIV using the GUI
Enabling NPIV using the CLI
Recommendations

Appendix D: Cisco MDS SAN switch NPIV configuration
Enabling NPIV using the GUI
Enabling NPIV using the CLI

Appendix E: Cisco Nexus Switch NPIV configuration
Enabling NPIV using the GUI
Enabling NPIV using the CLI

Appendix F: Static Login Distribution Scenario with VC Firmware 1.24 and earlier
Overview
Benefits
Requirements
Installation and configuration
Verification
Summary

Appendix G: Connectivity verification and testing
Connectivity verification on the VC module
Connectivity verification on the upstream SAN switch
Testing the loss of uplink ports

Appendix H: Boot from SAN troubleshooting
Verification during POST
Boot from SAN not activated
Boot from SAN activated
Boot from SAN misconfigured
Troubleshooting

Acronyms and abbreviations

Reference

About this document

Introduction

This guide details the concepts and implementation steps for integrating HP Virtual Connect Fibre Channel modules and HP Virtual Connect FlexFabric modules into an existing SAN fabric.

The scenarios in this guide are deliberately simple, but they cover a range of typical building blocks to use when designing a solution.

For more information on BladeSystem and Virtual Connect, see the HP website:

http://www.hp.com/go/blades/

Considerations and concepts

Key takeaways

The most important points to remember when using the Virtual Connect Fibre Channel module or the Virtual Connect FlexFabric module are:

• The HP VC-FC module requires an HP Virtual Connect Ethernet module to also be installed in order to be managed (this does not apply to HP VC FlexFabric). This is because the VC Ethernet module contains the processor on which the Virtual Connect Manager firmware runs.

• The external links from VC-FC to the core switch lack some special features available on standard FC switches, such as ISL Trunking, QoS, and long-distance support. ISL Trunking and Port Channeling between a VC module and an upstream Fibre Channel switch are not supported because Virtual Connect Fibre Channel modules must be connected to a data center Fibre Channel switch that supports N_Port_ID Virtualization (NPIV). The NPIV standard requires the external ports to be N_Ports, not the E_Ports or F_Ports required for trunking.

• NPIV support is required in the FC switches that connect to the HP VC-FC and HP FlexFabric modules. This can be an issue if those FC switches cannot be upgraded to the latest firmware.

• Direct storage attachment is not supported; at least one external FC switch is required. Because the VC uplinks are N_Ports, they must be connected to an FC switch. VC can be connected to data center Brocade, McData, Cisco, and QLogic FC switches that support the NPIV protocol.
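The takeaways above can be read as a pre-deployment checklist. The following Python sketch encodes them as simple checks against a hand-built inventory of uplinks. The `UplinkPeer` record, its field names, and port labels such as `Bay3:1` are hypothetical and do not correspond to any Virtual Connect or switch API; collecting the real values (for example, whether NPIV is enabled on the upstream port) is assumed to happen separately.

```python
from dataclasses import dataclass


@dataclass
class UplinkPeer:
    """Hypothetical inventory record for one VC uplink and its cabled peer."""
    uplink: str               # illustrative port label, e.g. "Bay3:1"
    peer_is_fc_switch: bool   # False when a storage array is cabled directly
    peer_npiv_enabled: bool   # NPIV enabled on the upstream switch port
    trunking_requested: bool  # ISL trunking / port channel configured upstream


def check_uplink(p: UplinkPeer) -> list[str]:
    """Return the takeaway rules this uplink would break (empty list if none)."""
    problems = []
    if not p.peer_is_fc_switch:
        # VC uplinks are N_Ports, so they need an FC switch, not a storage port.
        problems.append(f"{p.uplink}: direct storage attachment is not supported")
    elif not p.peer_npiv_enabled:
        problems.append(f"{p.uplink}: upstream FC switch port must support NPIV")
    if p.trunking_requested:
        problems.append(f"{p.uplink}: ISL trunking/port channeling is not supported")
    return problems


def needs_vc_ethernet_module(module_type: str) -> bool:
    """VC-FC modules need a VC Ethernet module for management; FlexFabric does not."""
    return module_type == "VC-FC"


if __name__ == "__main__":
    inventory = [
        UplinkPeer("Bay3:1", peer_is_fc_switch=True, peer_npiv_enabled=True,
                   trunking_requested=False),
        UplinkPeer("Bay3:2", peer_is_fc_switch=False, peer_npiv_enabled=False,
                   trunking_requested=False),
    ]
    for peer in inventory:
        for problem in check_uplink(peer):
            print(problem)
    # prints: Bay3:2: direct storage attachment is not supported
```

The checks are deliberately independent so that one uplink can report several problems at once, which makes the output usable as a punch list.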


Description of the VC SAN modules

HP Virtual Connect 4Gb 20-Port Fibre Channel Module
• 4 uplink ports, 4Gb FC [1/2/4 Gb]
• 16 downlink ports [1/2/4 Gb]
• Allows up to 128 virtual machines running on the same physical server to access separate storage resources

HP Virtual Connect 8Gb 20-Port Fibre Channel Module
• 4 uplink ports, 8Gb FC [2/4/8 Gb]
• 16 downlink ports [1/2/4/8 Gb]
• Allows up to 128 virtual machines running on the same physical server to access separate storage resources

HP Virtual Connect 8Gb 24-Port Fibre Channel Module
• 8 uplink ports, 8Gb FC [2/4/8 Gb]
• 16 downlink ports [1/2/4/8 Gb]
• Allows up to 255 virtual machines running on the same physical server to access separate storage resources

HP Virtual Connect FlexFabric 10Gb/24-Port Module
• 4 uplink ports, 8Gb FC [2/4/8 Gb]: uplink ports X1–X4 are available for FC connection and can also be configured as 10Gb Ethernet
• 16 downlink ports [FlexHBA: any speed]
• Allows up to 255 virtual machines running on the same physical server to access separate storage resources
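Because every module above pairs 16 downlink ports with 4 or 8 FC uplinks, a quick calculation shows how many server ports share each uplink and the nominal aggregate uplink bandwidth. The Python sketch below only restates the port counts and maximum uplink speeds listed above; the FlexFabric figures assume all four ports X1–X4 are configured for FC, and the `VcSanModule` type is illustrative.

```python
from dataclasses import dataclass
from fractions import Fraction


@dataclass(frozen=True)
class VcSanModule:
    name: str
    fc_uplinks: int          # FC-capable uplink ports
    uplink_max_gb: int       # highest supported uplink speed, in Gb
    downlinks: int           # server-facing ports
    max_vms_per_server: int  # "up to N virtual machines" figure from the list


# Values copied from the module descriptions above.
MODULES = [
    VcSanModule("VC 4Gb 20-Port FC", 4, 4, 16, 128),
    VcSanModule("VC 8Gb 20-Port FC", 4, 8, 16, 128),
    VcSanModule("VC 8Gb 24-Port FC", 8, 8, 16, 255),
    VcSanModule("VC FlexFabric 10Gb/24-Port", 4, 8, 16, 255),  # X1-X4 used as FC
]


def port_ratio(m: VcSanModule) -> Fraction:
    """Downlink-to-uplink port ratio (how many server ports share one uplink)."""
    return Fraction(m.downlinks, m.fc_uplinks)


def aggregate_uplink_gb(m: VcSanModule) -> int:
    """Nominal aggregate FC uplink bandwidth with every uplink at maximum speed."""
    return m.fc_uplinks * m.uplink_max_gb


if __name__ == "__main__":
    for m in MODULES:
        print(f"{m.name}: {port_ratio(m)}:1 ports, {aggregate_uplink_gb(m)} Gb uplink")
```

With these values the three 4-uplink modules come out at 4:1 and the 8Gb 24-Port module at 2:1. Actual contention depends on the servers' real I/O, so the ratio is best treated as a planning hint only.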


Virtual Connect Fibre Channel support

Virtual Connect connectivity stream documents describing the different configurations supported by HP are available at www.hp.com/storage/spock (an HP Passport is required; new users can click "please register").

For the specific Fabric OS, SAN-OS, and NX-OS versions supported in SANs involving third-party equipment, consult your third-party vendor.

Virtual Connect

From the HP StorageWorks SPOCK homepage, select Virtual Connect in the 'Other Hardware' section of the bottom-left menu, or use the following link to go directly to the VC web page:

http://h20272.www2.hp.com/Pages/spock2Html.aspx?htmlFile=hw_virtual_connect.html

Three connectivity stream documents are currently available, one for each Virtual Connect model type.

FC and FCoE switches

It is also worth visiting the SPOCK Switch page for the supported configurations of the upstream switches connected to Virtual Connect. Details about firmware and OS versions are usually provided.

From the HP StorageWorks SPOCK homepage, select Switch in the 'Other Hardware' section of the bottom-left menu, or use the following link to go directly to the switch web page:

http://h20272.www2.hp.com/Pages/spock2Html.aspx?htmlFile=hw_switches.html


Supported VC SAN Fabric configuration

To form a Virtual Connect SAN fabric correctly, all participating uplinks must be connected to the same SAN fabric:

[Figure: VC SAN Fabrics defined in the VC Domain (VC SAN 1, VC SAN 2), VC-FC Modules, and External Datacenter SAN Fabrics (Fabric 1, Fabric 2)]
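The rule above lends itself to a simple consistency check before VC SAN fabrics are defined. In this Python sketch, the input maps each VC SAN fabric name to its uplinks and to an identifier of the external SAN fabric each uplink is cabled to. How that identifier is obtained (for example, from the upstream switch's fabric information) is an assumption left outside the sketch, and names such as `VC_SAN_1` and `Bay3:1` are illustrative.

```python
from collections import defaultdict


def validate_vc_san_fabrics(uplink_map: dict[str, dict[str, str]]) -> list[str]:
    """Flag VC SAN fabrics whose uplinks reach more than one external SAN fabric.

    uplink_map: VC SAN fabric name -> {uplink port: external SAN fabric id}
    """
    errors = []
    for vc_fabric, uplinks in uplink_map.items():
        # Group this VC SAN fabric's uplink ports by the external fabric they reach.
        ports_by_fabric: dict[str, list[str]] = defaultdict(list)
        for port, ext_fabric in uplinks.items():
            ports_by_fabric[ext_fabric].append(port)
        if len(ports_by_fabric) > 1:
            detail = "; ".join(
                f"{ext}: {', '.join(sorted(ports))}"
                for ext, ports in sorted(ports_by_fabric.items())
            )
            errors.append(f"{vc_fabric} mixes SAN fabrics ({detail})")
    return errors


if __name__ == "__main__":
    config = {
        "VC_SAN_1": {"Bay3:1": "Fabric 1", "Bay3:2": "Fabric 1"},
        "VC_SAN_2": {"Bay4:1": "Fabric 2", "Bay4:2": "Fabric 1"},
    }
    for error in validate_vc_san_fabrics(config):
        print(error)
    # prints: VC_SAN_2 mixes SAN fabrics (Fabric 1: Bay4:2; Fabric 2: Bay4:1)
```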

Different Virtual Connect SAN fabrics can be connected to the same SAN fabric:

[Figure: VC SAN 1 and VC SAN 2 on a VC-FC Module, both connected to Fabric 1]

This configuration gives you more granular control over which server blades use each VC-FC port, while also enabling the distribution of servers according to their I/O workloads.
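When several VC SAN fabrics share one external fabric, deciding which servers use which VC SAN fabric becomes a planning exercise. The cookbook does not prescribe a method; the Python sketch below swaps in a common greedy heuristic (largest workload first, each server placed on the currently least-loaded VC SAN fabric) using hypothetical per-server I/O estimates. The resulting plan would then be applied through the FC connections of each server profile.

```python
import heapq


def assign_servers(workloads: dict[str, float],
                   vc_fabrics: list[str]) -> dict[str, list[str]]:
    """Greedy balancing: heaviest server first, onto the least-loaded VC SAN fabric.

    workloads: server name -> estimated I/O load (any consistent unit)
    vc_fabrics: names of VC SAN fabrics attached to the same external fabric
    """
    heap = [(0.0, name) for name in vc_fabrics]  # (current load, fabric name)
    heapq.heapify(heap)
    plan: dict[str, list[str]] = {name: [] for name in vc_fabrics}
    # Sort by descending load; ties broken by server name for repeatable output.
    for server, load in sorted(workloads.items(), key=lambda kv: (-kv[1], kv[0])):
        total, fabric = heapq.heappop(heap)
        plan[fabric].append(server)
        heapq.heappush(heap, (total + load, fabric))
    return plan


if __name__ == "__main__":
    estimates = {"Blade1": 400, "Blade2": 300, "Blade3": 200, "Blade4": 100}
    print(assign_servers(estimates, ["VC_SAN_1", "VC_SAN_2"]))
    # prints: {'VC_SAN_1': ['Blade1', 'Blade4'], 'VC_SAN_2': ['Blade2', 'Blade3']}
```

Greedy placement is not guaranteed to be optimal, but it is simple to reason about and usually close enough when the estimates themselves are approximate.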


[Figure: c-Class enclosure with HP VC Flex-10 Enet Modules and HP 4Gb VC-FC Modules, connected to two HP StorageWorks 4/32B SAN Switches and two H3C S3600 Series Ethernet switches]

Console<br />

1000Base-X<br />

52<br />

51<br />

50<br />

49<br />

Unit<br />

20%<br />

40%<br />

60%<br />

80%<br />

100%<br />

Mode<br />

RPS<br />

PWR<br />

Flashing=PoE<br />

Yellow=Duplex<br />

Green=Speed<br />

The following diagrams show two typical supported configurations, one with Virtual Connect Fibre Channel 8Gb 20-port modules and one with Virtual Connect FlexFabric modules:<br />

Figure 1: Typical FC configuration with VC-FC 8Gb 20-port modules - Redundant paths - server-to-Fabric uplink ratio 4:1<br />

[Figure: HP BladeSystem c7000 with VC-FC 8Gb 20-port modules; SAN uplink connections to SAN Switch A (Fabric-1) and SAN Switch B (Fabric-2), which connect to the two storage controllers of the storage array; Ethernet uplink connections (SUS-1, SUS-2) to LAN Switch A and LAN Switch B]<br />


Figure 2: Typical FC configuration with VC FlexFabric modules - Redundant paths - server-to-Fabric uplink ratio 4:1<br />

[Figure: HP BladeSystem c7000 with VC FlexFabric 10Gb/24-port modules; SAN uplink connections to SAN Switch A (Fabric-1) and SAN Switch B (Fabric-2), which connect to the two storage controllers of the storage array; Ethernet uplink connections (SUS-1, SUS-2) to LAN Switch A and LAN Switch B]<br />

For more information about enclosure support and configuration guidelines, see the Virtual Connect Setup and Installation Guide:<br />

http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c01732252/c01732252.pdf<br />

Multi-enclosure VC Domain configuration<br />

Virtual Connect version 2.10 and later supports the connection of up to four c7000 enclosures, which can reduce the number of network connections per rack and enables a single VC Manager to control multiple enclosures.<br />

A single set of cables can carry all external Ethernet traffic from a single rack. However, Fibre Channel traffic is not transmitted between modules, so each Virtual Connect Fibre Channel module must be connected to the SAN fabrics.<br />

In addition, when VC-FC is implemented in a multi-enclosure domain, all enclosures must have identical VC-FC module placement and cabling. This maintains profile mobility: when a profile is moved from one enclosure to another within the stacked VC Domain, SAN connectivity is preserved.<br />
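The "identical placement and cabling" requirement can be checked mechanically before stacking enclosures. The sketch below uses a hypothetical inventory format: one dictionary per enclosure, mapping interconnect bay to a (module type, cabled uplink ports) pair. It compares every enclosure against the first one and reports differences. It does not query VC or the Onboard Administrator.

```python
def placement_mismatches(enclosures):
    """Compare VC-FC module placement and cabling across the enclosures of one VC Domain.

    enclosures: dict mapping an enclosure name to {bay: (module_type, cabled_ports)}.
    The first enclosure in sorted order is used as the reference layout.
    Returns (enclosure, bay, expected, found) tuples; empty means layouts are identical.
    """
    names = sorted(enclosures)
    reference = enclosures[names[0]]
    mismatches = []
    for name in names[1:]:
        layout = enclosures[name]
        for bay in sorted(set(reference) | set(layout)):
            expected, found = reference.get(bay), layout.get(bay)
            if expected != found:
                mismatches.append((name, bay, expected, found))
    return mismatches


# Hypothetical inventory: in enc2, port 2 of the bay 6 module is not cabled.
enc1 = {5: ("VC-FC 8Gb 20-port", (1, 2)), 6: ("VC-FC 8Gb 20-port", (1, 2))}
enc2 = {5: ("VC-FC 8Gb 20-port", (1, 2)), 6: ("VC-FC 8Gb 20-port", (1,))}
print(placement_mismatches({"enc1": enc1, "enc2": enc2}))
```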


Figure 3: Multi-Enclosure Stacking requires all VC-FC modules to be connected to the SAN.<br />

[Figure: stacked enclosures joined by 10Gb stack links; the VC-FC modules in every enclosure connect to SAN Switch A (Fabric-1) and SAN Switch B (Fabric-2) in front of the storage array controllers; Ethernet uplinks (SUS-1, SUS-2) connect to LAN Switch A and LAN Switch B]<br />

For more information, see the Virtual Connect Multi-Enclosure Stacking Reference Guide:<br />

http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c02102153/c02102153.pdf<br />


Multiple-fabric Support<br />

Support for multiple SAN fabrics per VC-FC module is provided in VC firmware 1.31 and later. This feature allows the storage administrator to assign any of the available VC-FC uplinks to a different SAN fabric and to dynamically assign server HBAs to the desired SAN fabric.<br />

[Figure: Servers 1 to 4 (HBA1 and HBA2 each) in the VC Domain, assigned to VC SAN 1 to VC SAN 4 on one VC-FC module, each VC SAN connected to a different external fabric (Fabric 1 to Fabric 4)]<br />

The Virtual Connect 4Gb and 8Gb 20-Port Fibre Channel modules support up to 4 SAN fabrics:<br />

[Figure: VC-FC 20-port module with uplinks to Fabric 1 through Fabric 4]<br />


The Virtual Connect 8Gb 24-Port Fibre Channel module supports up to 8 SAN fabrics:<br />

[Figure: VC-FC 8Gb 24-port module with uplinks to Fabric 1 through Fabric 8]<br />

The Virtual Connect FlexFabric module supports up to 4 SAN fabrics:<br />

[Figure: VC FlexFabric module with uplinks to Fabric 1 through Fabric 4]<br />
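The per-module limits above can be captured in a small lookup table when planning which uplinks go to which SAN fabric. In the sketch below, the dictionary keys are descriptive labels chosen for the example, not identifiers used by VC. Only the limits themselves come from this section.

```python
# Maximum number of VC SAN fabrics per module, as stated in this section.
# The keys are descriptive labels for this sketch, not VC identifiers.
MAX_SAN_FABRICS = {
    "VC-FC 4Gb 20-port": 4,
    "VC-FC 8Gb 20-port": 4,
    "VC-FC 8Gb 24-port": 8,
    "VC FlexFabric 10Gb/24-port": 4,
}


def check_fabric_count(module_type, uplink_to_fabric):
    """uplink_to_fabric: {uplink_port: vc_san_fabric_name} for one module.

    Returns the number of distinct VC SAN fabrics, or raises ValueError
    if the documented limit for the module type is exceeded.
    """
    limit = MAX_SAN_FABRICS[module_type]
    count = len(set(uplink_to_fabric.values()))
    if count > limit:
        raise ValueError(f"{module_type} supports up to {limit} SAN fabrics, got {count}")
    return count


# Two uplinks grouped into fabric "A", one uplink in fabric "B": 2 fabrics.
print(check_fabric_count("VC-FC 8Gb 20-port", {1: "A", 2: "A", 3: "B"}))
```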


NPIV<br />

For Fibre Channel connections, the uplinks of the HP VC 4Gb FC module, HP VC 8Gb 24-Port FC module, HP VC 8Gb 20-Port FC module, or HP VC FlexFabric 10Gb/24-port module can be connected only to Fibre Channel switch ports that support N_Port ID Virtualization (NPIV).<br />

NPIV allows the VC-FC modules to be connected to any switch that supports the NPIV protocol, such as Brocade, McDATA, Cisco, and QLogic data center FC switches.<br />

To verify that NPIV support is provided, and for instructions on enabling it, see the firmware documentation that ships with the Fibre Channel switch.<br />

Depending on the switch, the SAN switch ports connected to the VC Fabric uplink ports may need to be configured to accept NPIV logins. See "Appendix C: Brocade SAN switch NPIV configuration", "Appendix D: Cisco SAN switch NPIV configuration", or "Appendix E: Cisco NEXUS SAN switch NPIV configuration", depending on your switch model.<br />
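The toy model below shows why NPIV is required. A switch F_Port without NPIV accepts a single fabric login (FLOGI). With NPIV it also accepts FDISC requests, and each extra WWPN receives its own N_Port ID. The model makes a simplifying assumption: the VC uplink performs the first login and every server HBA login behind it arrives as an FDISC. The class name, the per-port login limit, and the N_Port ID numbering are all hypothetical. This is not how any particular switch or VC firmware is implemented.

```python
class FPort:
    """Toy model of a fabric switch F_Port (not any vendor's implementation)."""

    def __init__(self, npiv_enabled, max_logins=8, base_id=0x010200):
        self.npiv_enabled = npiv_enabled
        self.max_logins = max_logins  # hypothetical per-port login limit
        self.base_id = base_id        # hypothetical first 24-bit N_Port ID
        self.logins = {}              # WWPN -> assigned N_Port ID

    def _assign(self, wwpn):
        nport_id = self.base_id + len(self.logins)
        self.logins[wwpn] = nport_id
        return nport_id

    def flogi(self, wwpn):
        # This toy model accepts one FLOGI per link.
        if self.logins:
            return None
        return self._assign(wwpn)

    def fdisc(self, wwpn):
        # Additional logins on the same link need NPIV and a prior FLOGI.
        if not self.npiv_enabled or not self.logins:
            return None
        if len(self.logins) >= self.max_logins:
            return None
        return self._assign(wwpn)


def bring_up_uplink(port, uplink_wwpn, server_wwpns):
    """Log the uplink in, then try to log each server HBA in behind it."""
    if port.flogi(uplink_wwpn) is None:
        return []
    return [w for w in server_wwpns if port.fdisc(w) is not None]


servers = ["server1-hba1", "server2-hba1"]  # hypothetical WWPN labels
print(bring_up_uplink(FPort(npiv_enabled=False), "uplink", servers))
print(bring_up_uplink(FPort(npiv_enabled=True), "uplink", servers))
```

With NPIV disabled, only the uplink itself logs in and no server HBA can reach the fabric. This matches the requirement stated above.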

Figure 4: NPIV requires a SAN switch between the VC Fabric uplink ports and the Storage Disk Array<br />

[Figure: blade server (HBA 1, HBA 2) connected through the VC-FC modules and the Fabric-1 and Fabric-2 SAN switches to the storage array]<br />


Figure 5: VC Fabric uplink ports cannot be connected directly to a Storage Array (no NPIV support)<br />

[Figure: blade server (HBA 1, HBA 2) with VC-FC uplinks cabled directly to the storage array, an unsupported configuration]<br />

The VC-FC and VC FlexFabric modules are FC standards-based and are compatible with all other NPIV standard-compliant switch products.<br />

Because of the use of NPIV, special features available on standard FC switches, such as ISL Trunking, QoS, and extended distances, are not supported with VC-FC and VC FlexFabric.<br />


Port Group<br />

Beginning with version 1.31 of Virtual Connect Manager, users can logically group multiple VC Fabric uplinks into a Virtual Connect fabric when they are attached to the same Fibre Channel SAN fabric.<br />

[Figure: VC Domain with VC SAN 1 and VC SAN 2, each grouping uplinks from a VC-FC module and connected to Fabric 1 and Fabric 2 respectively]<br />

Fabric port grouping has several benefits: bandwidth is increased, the server-to-uplink ratio is improved, and automatic port failover provides better redundancy.<br />

Increased bandwidth<br />

Depending on the VC Fibre Channel module and the number of uplinks used, the server-to-uplink ratio (that is, the oversubscription ratio) is adjustable to 2:1, 4:1, 8:1, or 16:1. On a fully populated enclosure with 16 servers, as few as two or as many as 16 servers therefore share one physical link.<br />

Using multiple uplinks reduces the risk of congestion.<br />

2:1 oversubscription with 24-port VC-FC modules:<br />

[Figure: 16 servers sharing 8 uplinks on a 24-port VC-FC module (2:1)]<br />


4:1 oversubscription with 20-port VC-FC modules and with VC FlexFabric modules:<br />

[Figure: 16 servers sharing 4 uplinks on a 20-port VC-FC module (4:1)]<br />
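The ratios above are the number of server HBA ports logging in to one VC SAN fabric divided by the number of uplinks in that fabric. The helper below (a hypothetical name) reduces that quotient to lowest terms. For a fully populated enclosure it reproduces the 2:1, 4:1, 8:1 and 16:1 figures quoted in this document.

```python
from math import gcd


def oversubscription(servers, uplinks):
    """Server-to-uplink ratio for one VC SAN fabric, reduced to lowest terms."""
    if servers < 1 or uplinks < 1:
        raise ValueError("need at least one server and one uplink")
    g = gcd(servers, uplinks)
    return f"{servers // g}:{uplinks // g}"


# Fully populated c7000 enclosure: 16 servers, each HBA port in one VC SAN fabric.
for uplinks in (8, 4, 2, 1):
    print(uplinks, "uplinks ->", oversubscription(16, uplinks))
```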

Dynamic Login Load Balancing and increased redundancy<br />

When VC Fabric uplinks are grouped into a single fabric, the module uses dynamic login distribution to<br />

load balance the server connections across all available uplink ports.<br />

VC uses the port with the fewest logins across the VC SAN fabric; when several ports have the same number of logins, VC makes a round-robin decision.<br />

Static Uplink Login Distribution was removed in VC 3.00.<br />

[Figure: HBA logins from Servers 1 to 4 distributed across the uplinks of VC SAN 1 on the VC-FC module, all connected to Fabric 1]<br />
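The placement rule described above can be sketched in a few lines: pick the uplink with the fewest logins, and break ties in round-robin order. The class below models only that documented rule, not VC firmware internals. Port and server names are illustrative.

```python
class DynamicLoginDistributor:
    """Place each new server login on the uplink with the fewest logins,
    breaking ties in round-robin order (a model of the documented rule)."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.logins = {port: [] for port in self.ports}
        self._next = 0  # round-robin start position used to break ties

    def login(self, hba):
        fewest = min(len(self.logins[p]) for p in self.ports)
        count = len(self.ports)
        for offset in range(count):
            index = (self._next + offset) % count
            port = self.ports[index]
            if len(self.logins[port]) == fewest:
                self.logins[port].append(hba)
                self._next = (index + 1) % count
                return port
        raise RuntimeError("unreachable: some port always has the fewest logins")


dist = DynamicLoginDistributor(["Port 1", "Port 2", "Port 3", "Port 4"])
placed = [dist.login(f"Server {n} HBA1") for n in range(1, 9)]
print(placed)
```

With eight logins over four uplinks, each uplink ends up carrying two logins.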


Uplink port path failover:<br />

The module uses dynamic login distribution to provide uplink port path failover, which enables server connections to fail over within the Virtual Connect fabric.<br />

If a fabric uplink port in the group becomes unavailable, hosts logged in through that uplink are automatically reconnected to the fabric through the remaining uplink(s) in the group, resulting in auto-failover.<br />

[Figure: Servers 1 to 4 logged in through the remaining uplinks of VC SAN 1 (VC-FC module, Fabric 1) after an uplink failure]<br />

This automatic failover saves time and effort whenever a link fails between a VC uplink port and an external fabric, and allows a smooth transition with little disruption to traffic. However, the hosts must log in again before resuming their I/O operations.<br />
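The failover behavior can be modeled as reassigning every login of the failed uplink to the remaining uplink with the fewest logins. This is a simplified sketch with a hypothetical function name. Ties go to the earlier port instead of VC's round-robin choice. Each reassignment stands for a host that must log in again. The failed port is simply dropped, because it does not get its logins back automatically.

```python
def fail_uplink(assignments, failed_port):
    """Reassign the logins of a failed uplink to the remaining uplinks.

    assignments: {port: [hba, ...]} for one VC SAN fabric.
    Each affected login moves to the remaining port with the fewest logins;
    ties go to the earlier port (a simplification of VC's round-robin).
    """
    remaining = {p: list(h) for p, h in assignments.items() if p != failed_port}
    if not remaining:
        raise RuntimeError("no uplink left in this VC SAN fabric: server I/O is disrupted")
    for hba in assignments.get(failed_port, []):
        target = min(remaining, key=lambda p: len(remaining[p]))
        remaining[target].append(hba)  # the host re-logs in through target
    return remaining


before = {"Port 1": ["S1", "S2"], "Port 2": ["S3"], "Port 3": ["S4"]}
after = fail_uplink(before, "Port 1")
print(after)
```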


Login Redistribution<br />

When a failed port becomes available again, login redistribution (login failback) is not automatic unless the Automatic Login Redistribution available with the VC FlexFabric modules is used.<br />

[Figure: Servers 1 to 4 logged in through the uplinks of VC SAN 1 (VC-FC module, Fabric 1) after a failed port returns]<br />

There are two login redistribution modes:<br />

• Manual Login Re-Distribution: when configured, the user is expected to initiate a Login Re-Distribution request through the VC GUI or CLI.<br />

• Automatic Login Re-Distribution: when configured, the VC FlexFabric module initiates Login Re-Distribution automatically when the specified time interval expires.<br />

Table 1: Manual and Automatic Logins Redistribution support<br />

| Login Re-Distribution mode | Auto-failover* | Auto-failback** | VC-FC support | FlexFabric support |
|---|---|---|---|---|
| MANUAL | YES | NO | YES | YES (default) |
| AUTOMATIC | YES | YES, after link stability delay | NO | YES |

*: when a port in the SAN Fabric group becomes unavailable<br />

**: when a failed port returns to a good working condition<br />
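Redistribution (failback) can be sketched as moving logins from the busiest uplink to the idlest one until per-port counts differ by at most one. This assumes an even spread is the goal. The exact choice of which logins VC moves is not documented here, and the function name is hypothetical. Each move stands for a host that must log in again through its new uplink.

```python
def redistribute(assignments):
    """Rebalance logins across the uplinks of one VC SAN fabric.

    assignments: {port: [hba, ...]}, e.g. after a failed port came back empty.
    Returns (new_assignments, moves), where moves lists (hba, from_port, to_port).
    """
    result = {port: list(hbas) for port, hbas in assignments.items()}
    moves = []
    while result:
        busiest = max(result, key=lambda p: len(result[p]))
        idlest = min(result, key=lambda p: len(result[p]))
        if len(result[busiest]) - len(result[idlest]) <= 1:
            break  # balanced: counts differ by at most one
        hba = result[busiest].pop()
        result[idlest].append(hba)
        moves.append((hba, busiest, idlest))
    return result, moves


# Port 1 has just recovered and carries no logins yet.
recovered = {"Port 1": [], "Port 2": ["S3", "S1"], "Port 3": ["S4", "S2"]}
new_layout, moves = redistribute(recovered)
print(new_layout, moves)
```

In Manual mode this step would run only when the administrator requests it. In Automatic mode, per Table 1, it would run after the link stability delay.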


Link stability<br />

This interval defines the number of seconds that the VC fabric uplink(s) have to stabilize before the VC FC<br />

module attempts to load-balance the logins.<br />

The administrator can configure the link stability interval parameter on a VC domain basis.<br />

Automatic Login Redistribution can be enabled for VC FlexFabric modules. For all legacy VC-FC modules, login redistribution is manual only.<br />
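The link stability interval acts as a gate. A recovered uplink becomes eligible for automatic redistribution only after it has stayed up for the configured number of seconds, and a link flap restarts the wait. The tracker below illustrates that rule. Times are plain numbers in seconds, the class name is hypothetical, and the 30-second interval is an arbitrary example value, not a VC default.

```python
class LinkStabilityTracker:
    """A recovered uplink qualifies for automatic login redistribution only
    after it has stayed up for interval_s seconds."""

    def __init__(self, interval_s):
        self.interval_s = interval_s
        self.up_since = {}  # port -> time the link last came up

    def link_up(self, port, now):
        self.up_since[port] = now

    def link_down(self, port):
        # A flap resets the timer: the port must stabilize again from scratch.
        self.up_since.pop(port, None)

    def stable_ports(self, now):
        return sorted(p for p, t in self.up_since.items() if now - t >= self.interval_s)


tracker = LinkStabilityTracker(interval_s=30)  # hypothetical interval value
tracker.link_up("Port 1", now=100)
print(tracker.stable_ports(now=120))  # [] - still inside the stability interval
tracker.link_down("Port 1")           # the link flaps
tracker.link_up("Port 1", now=125)
print(tracker.stable_ports(now=155))
```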


Manual login redistribution<br />

To manually redistribute logins on a VC SAN fabric, select the VC SAN fabric, open the 'Server connections' tab, and then click 'Redistribute Logins'.<br />

'Redistribute Logins' is only valid for a VC SAN fabric configured with Manual Login Re-Distribution.<br />


Scenario 1: Simplest scenario with multipathing<br />

Overview<br />

This scenario covers the setup and configuration of two VC SAN fabrics, each using a single uplink connected to a redundant fabric.<br />

Figure 6: Logical view on a fully populated enclosure with 16 servers<br />

[Figure: Servers 1 to 16 (HBA1, HBA2) in the VC Domain; VC SAN fabrics Fabric-1 and Fabric-2 on the VC-FC 8Gb 20-port modules, each with one uplink to external Fabric 1 and Fabric 2]<br />

Scenario 1: Simplest scenario with multipathing 25


Figure 7: Physical view<br />

[Figure: blade server (HBA 1, HBA 2) connected through the VC-FC modules to the Fabric-1 and Fabric-2 SAN switches and the storage array]<br />

Benefits<br />

This configuration offers the simplicity of managing only one redundant fabric with a single uplink.<br />

Transparent failover is managed by a multipathing I/O driver running in the server operating system.<br />

This scenario maximizes the use of the VC Fabric uplink ports, reduces the total number of switch ports needed in the datacenter, and saves money, as Fabric ports can be expensive.<br />

Considerations<br />

In a fully populated c7000 enclosure, the server-to-uplink ratio is 16:1; this configuration may result in poor response times and may require close performance monitoring.<br />

If a properly configured multipathing I/O driver is not running in the server operating system, a failure anywhere between VC and the external fabric can disrupt all server I/O operations.<br />
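The role of the multipathing driver in this scenario can be illustrated with a failover-only toy model. It keeps two paths to the same LUN, one per HBA and VC SAN fabric, and uses the first healthy one. This is not the behavior of any specific MPIO driver: Windows MPIO, Linux dm-multipath, and others have their own path policies and often load-balance as well. The class name is hypothetical.

```python
class MultipathDevice:
    """Failover-only multipath model: paths are tried in preference order."""

    def __init__(self, paths):
        self.paths = list(paths)  # ordered by preference
        self.failed = set()

    def fail(self, path):
        self.failed.add(path)

    def restore(self, path):
        self.failed.discard(path)

    def active_path(self):
        for path in self.paths:
            if path not in self.failed:
                return path
        raise RuntimeError("all paths down: I/O to the LUN is disrupted")


lun = MultipathDevice([("HBA1", "Fabric_1"), ("HBA2", "Fabric_2")])
lun.fail(("HBA1", "Fabric_1"))  # e.g. the Fabric_1 uplink goes down
print(lun.active_path())
```

With only one path, which corresponds to no multipathing, the same failure leaves no usable path. That is the disruption described above.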

The SAN switch ports connecting to the VC-FC modules must be configured to accept NPIV logins.<br />

Requirements<br />

This configuration requires two VC SAN fabrics with two SAN switches that support NPIV, at least two VC-FC modules, and at least two VC fabric uplink ports connected to the redundant SAN fabric.<br />


For more information about configuring FC switches for NPIV, see "Appendix C: Brocade SAN switch NPIV configuration", "Appendix D: Cisco SAN switch NPIV configuration", or "Appendix E: Cisco NEXUS SAN switch NPIV configuration", depending on your switch model.<br />

Installation and configuration<br />

Switch configuration<br />

Appendices C, D, and E provide the steps required to configure NPIV on the upstream SAN switch in a Brocade, Cisco MDS, or Cisco Nexus Fibre Channel infrastructure.<br />

VC CLI commands<br />

In addition to the GUI, many of the configuration settings within VC can also be accomplished through a CLI command set. To connect to the VC CLI, open an SSH connection to the IP address of the active VCM. Once you are logged in, VC provides a CLI with help menus. The Virtual Connect CLI guide also provides many useful examples. Throughout this scenario, the CLI commands to configure VC for each setting are provided.<br />

Configuring the VC module<br />

Physically connect Port 1 on the first VC-FC module to a switch port in SAN Fabric 1.<br />

Physically connect Port 1 on the second VC-FC module to a switch port in SAN Fabric 2.<br />

Defining a new VC SAN Fabric via GUI<br />

To configure the VC-FC modules from the HP <strong>Virtual</strong> <strong>Connect</strong> Manager GUI:<br />


1. Create a VC SAN Fabric by selecting Define SAN Fabric from the VC Home page.<br />

The Define Fabric screen appears.<br />

2. Enter the VC Fabric name, in this case Fabric_1, and add Fabric uplink Port 1 from the first VC-FC module.<br />

3. On the SAN Fabrics screen, right-click a row to open the context menu, and then select Add.<br />


4. Create a new VC Fabric named Fabric_2. Under Enclosure Uplink Ports, add Port 1 from the second<br />

VC-FC module, and then click Apply.<br />

Two VC SAN fabrics have been created, each with one uplink port allocated from one VC module.<br />


Defining a new VC SAN Fabric via CLI<br />

To configure the VC-FC modules from the CLI:<br />

1. Log in to the Virtual Connect Manager CLI using your preferred SSH client.<br />

2. Enter the following commands to create the fabrics and assign the uplink ports:<br />

add fabric Fabric_1 Bay=5 Ports=1<br />

add fabric Fabric_2 Bay=6 Ports=1<br />

3. When complete, run the show fabric command.<br />
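When the same layout is applied to several domains, it can help to generate the command text rather than type it. The hypothetical helper below only builds strings in the `add fabric <name> Bay=<bay> Ports=<port>` form shown above, with one uplink port per fabric as in this scenario. It appends `show fabric` for verification. It does not connect to VC, and the output is meant to be reviewed before it is pasted into the SSH session.

```python
def fabric_commands(fabrics):
    """Build VC CLI lines in the 'add fabric <name> Bay=<bay> Ports=<port>' form.

    fabrics: list of (fabric_name, bay, port) tuples, one uplink port per fabric.
    A final 'show fabric' line is appended to verify the result.
    """
    lines = [f"add fabric {name} Bay={bay} Ports={port}" for name, bay, port in fabrics]
    lines.append("show fabric")
    return "\n".join(lines)


print(fabric_commands([("Fabric_1", 5, 1), ("Fabric_2", 6, 1)]))
```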


Blade Server configuration<br />

Server profile configuration steps can be found in Appendix A.<br />

Verification<br />

Verification and troubleshooting steps are covered in Appendix G.<br />

Summary<br />

In this scenario, we created two FC SAN fabrics, each using a single uplink. This is the simplest scenario and can be used to maximize the use of the VC-FC uplink ports and reduce the number of datacenter SAN ports. A multipathing driver is required for transparent failover between the two server HBA ports.<br />

Adding uplinks to the SAN fabrics can increase performance and/or availability. This is covered in the following scenario.<br />


Scenario 2: VC SAN fabrics with Dynamic Login Balancing connected to the same redundant SAN fabric<br />

Overview<br />

This scenario covers the setup and configuration of two VC SAN fabrics with Dynamic Login Balancing Distribution, each using two to eight uplink ports connected to a redundant fabric.<br />

Figure 8: 8:1 oversubscription with VC-FC 8Gb 20-Port modules using 4 uplink ports on a fully populated enclosure with 16 servers<br />

[Figure: Servers 1 to 16 (HBA1, HBA2) in the VC Domain; VC SAN fabrics Fabric-1 and Fabric-2 on the VC-FC 8Gb 20-port modules, connected to external Fabric 1 and Fabric 2]<br />

Scenario 2: VC <strong>SAN</strong> fabrics with Dynamic Login Balancing connected to the same redundant <strong>SAN</strong> fabric 32

NOTE: Static Login Distribution was removed in VC firmware 3.00, but it is the only method available in VC firmware 1.24 and earlier. Dynamic Login Balancing capabilities are included in VC firmware 1.3x and later.<br />

Figure 9: 4:1 oversubscription with VC-FC 8Gb 20-Port modules using 8 uplink ports on a fully populated enclosure with 16 servers<br />

[Figure: Servers 1 to 16 (HBA1, HBA2) in the VC Domain; VC SAN fabrics Fabric-1 and Fabric-2 on the VC-FC 8Gb 20-port modules, connected to external Fabric 1 and Fabric 2]<br />


Figure 10: 2:1 oversubscription with VC-FC 8Gb 24-Port modules using 16 uplink ports on a fully populated enclosure with 16 servers<br />

[Figure: Servers 1 to 16 (HBA1, HBA2) in the VC Domain; VC SAN fabrics Fabric-1 and Fabric-2 on the VC-FC 8Gb 24-port modules, connected to external Fabric 1 and Fabric 2]<br />

Scenario 2: VC <strong>SAN</strong> fabrics with Dynamic Login Balancing connected to the same redundant <strong>SAN</strong> fabric 34

Figure 11: Physical view (HP ProLiant BL460c blade server with HBA 1 and HBA 2, HP 4Gb VC-FC modules, HP StorageWorks 4/32B SAN switches in Fabric-1 and Fabric-2, and a storage array)

Benefits

The use of multiple uplink ports in each VC SAN Fabric allows server logins to be distributed dynamically across those ports in a round-robin manner. Dynamic Login Distribution also performs automatic failover of server logins if the corresponding uplink port becomes unavailable: servers that were logged in to the failed port are reconnected to one of the remaining ports in the same VC SAN fabric.
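The login distribution and failover behaviour described above can be modelled in a few lines of Python. This is a simplified sketch, not the VC firmware's actual algorithm: it assumes logins are assigned strictly in round-robin order and that logins from a failed uplink are spread round-robin over the surviving uplinks of the same VC SAN fabric. The server and uplink labels (`Server1`, `Bay5:1`, and so on) are hypothetical.

```python
from collections import Counter


def distribute_logins(hbas, uplinks):
    """Assign each server HBA login to an uplink in round-robin order."""
    if not uplinks:
        raise ValueError("a VC SAN fabric needs at least one available uplink")
    return {hba: uplinks[i % len(uplinks)] for i, hba in enumerate(hbas)}


def fail_uplink(assignment, failed, uplinks):
    """Re-home logins from a failed uplink onto the remaining uplinks.

    Logins on healthy uplinks are left untouched; only the affected
    HBAs are moved, round-robin over the surviving ports.
    """
    remaining = [u for u in uplinks if u != failed]
    if not remaining:
        raise RuntimeError("no uplinks left in this VC SAN fabric")
    moved = [hba for hba, up in assignment.items() if up == failed]
    new_assignment = dict(assignment)
    for i, hba in enumerate(moved):
        new_assignment[hba] = remaining[i % len(remaining)]
    return new_assignment


def load(assignment):
    """Number of logins carried by each uplink."""
    return Counter(assignment.values())


# Hypothetical example: 16 servers (one HBA each on this fabric), 4 uplinks in Bay 5.
hbas = [f"Server{n}" for n in range(1, 17)]
uplinks = ["Bay5:1", "Bay5:2", "Bay5:3", "Bay5:4"]
before = distribute_logins(hbas, uplinks)
after = fail_uplink(before, "Bay5:2", uplinks)
print(load(before))
print(load(after))
```

Moving only the affected logins reflects the behaviour described in the text: servers on healthy ports keep their login, while those on the failed port log in again through another uplink of the same fabric.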

This configuration offers increased performance and better availability. The server-to-uplink ratio is adjustable, as low as 2:1 with the VC-FC 8Gb 24-port module (as few as two servers share one physical Fabric uplink) and as low as 4:1 with the VC-FC 8Gb 20-port and FlexFabric modules.
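The oversubscription ratios in Figures 8 to 10 follow from simple division: in each VC SAN fabric every server contributes one HBA port, so the ratio is the number of servers divided by the uplinks used on that fabric's module. The sketch below assumes 16 servers and infers the per-module uplink counts (8 for the 24-port module, 4 for the 20-port and FlexFabric modules) from the best-case ratios stated in this section; `MAX_UPLINKS` and `oversubscription` are illustrative names.

```python
from fractions import Fraction

# Uplink ports per module, inferred from the best-case ratios quoted in the
# text for a fully populated 16-server enclosure (16 / uplinks = ratio).
MAX_UPLINKS = {
    "VC-FC 8Gb 24-port": 8,
    "VC-FC 8Gb 20-port": 4,
    "FlexFabric": 4,
}


def oversubscription(servers: int, uplinks_per_module: int, module: str) -> str:
    """Return the server-to-uplink ratio for one VC SAN fabric as 'N:M'.

    Assumes HBA1 of every server lands on the Fabric_1 module and HBA2 on
    the Fabric_2 module, so each module sees one login per server.
    """
    limit = MAX_UPLINKS[module]
    if not 1 <= uplinks_per_module <= limit:
        raise ValueError(f"{module} supports 1 to {limit} uplinks per module")
    ratio = Fraction(servers, uplinks_per_module)
    return f"{ratio.numerator}:{ratio.denominator}"


# Figures 8, 9 and 10: 4, 8 and 16 uplinks in total, split across two modules.
print(oversubscription(16, 2, "VC-FC 8Gb 20-port"))  # 8:1
print(oversubscription(16, 4, "VC-FC 8Gb 20-port"))  # 4:1
print(oversubscription(16, 8, "VC-FC 8Gb 24-port"))  # 2:1
```

Using `Fraction` keeps uneven layouts exact (for example, 16 servers on 3 uplinks reports 16:3 rather than a rounded value).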

Considerations

The SAN switch ports connecting to the VC-FC modules must be configured to accept NPIV logins.

Because VC-FC relies on NPIV, features available on standard FC switches, such as ISL Trunking, QoS, and extended distances, are not supported with VC-FC and VC FlexFabric.


The automatic failover allows a smooth transition with minimal disruption to traffic. However, the affected hosts must log in again before resuming their I/O operations. Only a redundant SAN fabric with a multipathing I/O driver running in the server operating system can provide a completely transparent transition.

Requirements

This configuration requires two VC SAN fabrics with two SAN switches that support NPIV, at least two VC-FC modules, and at least four VC fabric uplink ports connected to the redundant SAN fabric.
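As a pre-flight check, these requirements can be encoded as a small validation function. This is an illustrative sketch only: `VcSanFabric` and `check_requirements` are hypothetical names, and the `switch_npiv` flag stands in for whatever method you use to confirm that the upstream switch ports accept NPIV logins.

```python
from dataclasses import dataclass, field


@dataclass
class VcSanFabric:
    """Hypothetical description of one VC SAN fabric and its upstream switch."""
    name: str
    bay: int                                      # bay of the VC-FC module providing the uplinks
    uplinks: list = field(default_factory=list)   # uplink port numbers on that module
    switch_npiv: bool = False                     # upstream SAN switch ports accept NPIV logins


def check_requirements(fabrics):
    """Return a list of unmet requirements (empty when all are satisfied)."""
    problems = []
    if len(fabrics) < 2:
        problems.append("two VC SAN fabrics are required")
    if len({f.bay for f in fabrics}) < 2:
        problems.append("at least two VC-FC modules are required")
    if sum(len(f.uplinks) for f in fabrics) < 4:
        problems.append("at least four VC fabric uplink ports are required")
    for f in fabrics:
        if not f.switch_npiv:
            problems.append(f"{f.name}: upstream SAN switch must support NPIV")
    return problems


scenario = [
    VcSanFabric("Fabric_1", bay=5, uplinks=[1, 2, 3, 4], switch_npiv=True),
    VcSanFabric("Fabric_2", bay=6, uplinks=[1, 2, 3, 4], switch_npiv=True),
]
print(check_requirements(scenario))  # []
```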

For more information about configuring FC switches for NPIV, see "Appendix C: Brocade SAN switch NPIV configuration", "Appendix D: Cisco SAN switch NPIV configuration", or "Appendix E: Cisco NEXUS SAN switch NPIV configuration", depending on your switch model.

Installation and configuration

Switch configuration

Appendices C, D and E provide the steps required to configure NPIV on the upstream SAN switch in a Brocade, Cisco MDS, or Cisco Nexus Fibre Channel infrastructure.

VC CLI commands

In addition to the GUI, many of the configuration settings within VC can also be made through a CLI command set. To connect to the VC CLI, open an SSH connection to the IP address of the active VCM. Once you are logged in, VC provides a CLI with help menus. The Virtual Connect CLI guide also provides many useful examples. Throughout this scenario, the CLI commands to configure VC for each setting are provided.
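If you prefer to script these steps, one option is to call the system OpenSSH client from Python. This is a sketch under two assumptions: that an `ssh` client is installed on the workstation, and that the VCM accepts a single CLI command appended to the `ssh` command line (non-interactive use); if it does not, use an interactive session instead. The helper names, the address `192.0.2.10`, and the `Administrator` account are placeholders.

```python
import subprocess


def vc_cli_argv(vcm_ip: str, user: str, command: str) -> list:
    """Build an OpenSSH command line that runs one VC CLI command on the active VCM."""
    return ["ssh", f"{user}@{vcm_ip}", command]


def run_vc_cli(vcm_ip: str, user: str, command: str) -> str:
    """Run a single VC CLI command over SSH and return its text output.

    Raises subprocess.CalledProcessError if ssh exits with a non-zero status.
    """
    result = subprocess.run(
        vc_cli_argv(vcm_ip, user, command),
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout
```

For example, `run_vc_cli("192.0.2.10", "Administrator", "show fabric")` would return the text that `show fabric` prints, assuming the placeholder address is replaced by the active VCM's IP address.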

Configuring the VC module

Physically connect the uplink ports on the first VC-FC module to switch ports in SAN Fabric 1.

Physically connect the uplink ports on the second VC-FC module to switch ports in SAN Fabric 2.
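Before defining the fabrics, it can help to confirm that every uplink of a given VC SAN fabric lands in one and only one SAN fabric, as these two cabling steps intend. The sketch below is illustrative: `miscabled_fabrics`, the cabling map, its `(bay, port)` keys, and the SAN fabric labels are hypothetical inputs you would fill in from your own cabling records.

```python
def miscabled_fabrics(vc_fabrics, cabling):
    """Return the VC SAN fabrics whose uplinks do not all reach one SAN fabric.

    vc_fabrics: VC fabric name -> list of (bay, port) uplinks
    cabling:    (bay, port) -> SAN fabric the cable is plugged into
    """
    bad = []
    for name, uplinks in vc_fabrics.items():
        targets = {cabling.get(uplink) for uplink in uplinks}
        # Exactly one target, and no uplink left uncabled (None).
        if len(targets) != 1 or None in targets:
            bad.append(name)
    return bad


vc_fabrics = {
    "Fabric_1": [(5, p) for p in (1, 2, 3, 4)],
    "Fabric_2": [(6, p) for p in (1, 2, 3, 4)],
}
cabling = {(5, p): "SAN Fabric 1" for p in (1, 2, 3, 4)}
cabling.update({(6, p): "SAN Fabric 2" for p in (1, 2, 3, 4)})
print(miscabled_fabrics(vc_fabrics, cabling))  # []

cabling[(6, 4)] = "SAN Fabric 1"  # one crossed cable
print(miscabled_fabrics(vc_fabrics, cabling))  # ['Fabric_2']
```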

Defining a new VC SAN Fabric via GUI

To configure the VC-FC modules from the HP Virtual Connect Manager home screen:


1. Create a VC SAN Fabric by selecting Define SAN Fabric from the VC Home page.

The Define Fabric screen appears.


2. Provide the VC Fabric Name, in this case Fabric_1, add the uplink ports that will be connected to this fabric, and then click Apply. The following example uses Port 1, Port 2, Port 3, and Port 4 from the first VC-FC module (Bay 5).

3. On the SAN Fabrics screen, select a row, right-click to open the context menu, and select Add.


4. Create a new VC Fabric named Fabric_2, add the uplink ports that will be connected to this fabric, and then click Apply. The following example uses Port 1, Port 2, Port 3, and Port 4 from the second VC-FC module (Bay 6).

5. Two VC SAN fabrics have now been created, each with four uplink ports allocated from a VC module in Bay 5 and a VC module in Bay 6, respectively.


Defining a new VC SAN Fabric via CLI

To configure the VC-FC modules from the CLI:

1. Log in to the Virtual Connect Manager CLI using your favorite tool.

2. Enter the following commands to create the fabrics and assign the uplink ports:

add fabric Fabric_1 Bay=5 Ports=1,2,3,4

add fabric Fabric_2 Bay=6 Ports=1,2,3,4

3. When complete, run the show fabric command to display the new fabrics.
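When the same layout is repeated across several enclosures, the `add fabric` lines can be generated rather than typed. This Python sketch only renders text in the syntax used in step 2; it does not connect to the VCM, and `add_fabric_commands` is a hypothetical helper name.

```python
def add_fabric_commands(fabrics):
    """Render one `add fabric` line per VC SAN fabric.

    fabrics: VC fabric name -> (module bay, list of uplink port numbers)
    """
    return [
        f"add fabric {name} Bay={bay} Ports={','.join(str(p) for p in ports)}"
        for name, (bay, ports) in fabrics.items()
    ]


commands = add_fabric_commands({
    "Fabric_1": (5, [1, 2, 3, 4]),
    "Fabric_2": (6, [1, 2, 3, 4]),
})
commands.append("show fabric")  # step 3: confirm the result
print("\n".join(commands))
```

The printed lines match the two `add fabric` commands in step 2 followed by `show fabric`, and can be pasted into a CLI session.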


Blade Server configuration

Server profile configuration steps can be found in Appendix A.

Verification

Verification and troubleshooting steps are covered in Appendix G.

Summary

In this scenario we have created two FC SAN Fabrics with multiple uplink ports utilizing Dynamic Login Distribution, which allows for login balancing and automatic failover of host connectivity. This configuration enables increased performance and improved availability. Host login connections to the VC Fabric uplink ports are handled dynamically, and the load is balanced across all available ports in the group.

A multipathing driver is required for transparent failover between the two server HBA ports.


Scenario 3: Multiple VC SAN fabrics with Dynamic Login Balancing connected to the same redundant SAN fabric with different priority tiers

Overview