Improving TCP Performance in High Bandwidth High RTT Links ...


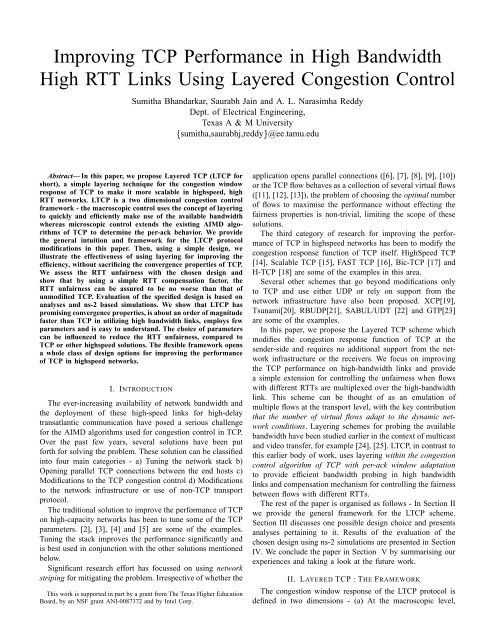

Improving TCP Performance in High Bandwidth High RTT Links Using Layered Congestion Control<br />

Sumitha Bhandarkar, Saurabh Jain and A. L. Narasimha Reddy<br />

Dept. of Electrical Engineering,<br />

Texas A & M University<br />

{sumitha,saurabhj,reddy}@ee.tamu.edu<br />

Abstract - In this paper, we propose Layered TCP (LTCP for short), a simple layering technique for the congestion window response of TCP to make it more scalable in highspeed, high RTT networks. LTCP is a two dimensional congestion control framework - the macroscopic control uses the concept of layering to quickly and efficiently make use of the available bandwidth whereas microscopic control extends the existing AIMD algorithms of TCP to determine the per-ack behavior. We provide the general intuition and framework for the LTCP protocol modifications in this paper. Then, using a simple design, we illustrate the effectiveness of using layering for improving the efficiency, without sacrificing the convergence properties of TCP. We assess the RTT unfairness with the chosen design and show that by using a simple RTT compensation factor, the RTT unfairness can be assured to be no worse than that of unmodified TCP. Evaluation of the specified design is based on analyses and ns-2 based simulations. We show that LTCP has promising convergence properties, is about an order of magnitude faster than TCP in utilizing high bandwidth links, employs few parameters and is easy to understand. The choice of parameters can be influenced to reduce the RTT unfairness, compared to TCP or other highspeed solutions. The flexible framework opens a whole class of design options for improving the performance of TCP in highspeed networks.<br />

I. INTRODUCTION<br />

The ever-increasing availability of network bandwidth and the deployment of these high-speed links for high-delay transatlantic communication have posed a serious challenge for the AIMD algorithms used for congestion control in TCP. Over the past few years, several solutions have been put forth for solving the problem. These solutions can be classified into four main categories - a) Tuning the network stack b) Opening parallel TCP connections between the end hosts c) Modifications to the TCP congestion control d) Modifications to the network infrastructure or use of a non-TCP transport protocol.<br />

The traditional solution to improve the performance of TCP on high-capacity networks has been to tune some of the TCP parameters. [2], [3], [4] and [5] are some of the examples. Tuning the stack improves the performance significantly and is best used in conjunction with the other solutions mentioned below.<br />

Significant research effort has focussed on using network striping for mitigating the problem. Irrespective of whether the application opens parallel connections ([6], [7], [8], [9], [10]) or the TCP flow behaves as a collection of several virtual flows ([11], [12], [13]), the problem of choosing the optimal number of flows to maximise the performance without affecting the fairness properties is non-trivial, limiting the scope of these solutions.<br />

This work is supported in part by a grant from The Texas Higher Education Board, by an NSF grant ANI-0087372 and by Intel Corp.<br />

The third category of research for improving the performance of TCP in highspeed networks has been to modify the congestion response function of TCP itself. HighSpeed TCP [14], Scalable TCP [15], FAST TCP [16], Bic-TCP [17] and H-TCP [18] are some of the examples in this area.<br />

Several other schemes that go beyond modifications only to TCP and use either UDP or rely on support from the network infrastructure have also been proposed. XCP [19], Tsunami [20], RBUDP [21], SABUL/UDT [22] and GTP [23] are some of the examples.<br />

In this paper, we propose the Layered TCP scheme which modifies the congestion response function of TCP at the sender-side and requires no additional support from the network infrastructure or the receivers. We focus on improving the TCP performance on high-bandwidth links and provide a simple extension for controlling the unfairness when flows with different RTTs are multiplexed over the high-bandwidth link. This scheme can be thought of as an emulation of multiple flows at the transport level, with the key contribution that the number of virtual flows adapts to the dynamic network conditions. Layering schemes for probing the available bandwidth have been studied earlier in the context of multicast and video transfer, for example [24], [25]. LTCP, in contrast to this earlier body of work, uses layering within the congestion control algorithm of TCP with per-ack window adaptation to provide efficient bandwidth probing in high bandwidth links and a compensation mechanism for controlling the fairness between flows with different RTTs.<br />

The rest of the paper is organised as follows - In Section II we provide the general framework for the LTCP scheme. Section III discusses one possible design choice and presents analyses pertaining to it. Results of the evaluation of the chosen design using ns-2 simulations are presented in Section IV. We conclude the paper in Section V by summarising our experiences and taking a look at the future work.<br />

II. LAYERED TCP: THE FRAMEWORK<br />

The congestion window response of the LTCP protocol is defined in two dimensions - (a) At the macroscopic level, LTCP uses the concept of layering. If congestion is not observed over a period of time, then the number of layers is increased. (b) At the microscopic level, it extends the existing AIMD algorithms of TCP to determine the per-ack behavior. When operating at a higher layer, a flow increases its congestion window faster than when operating at a lower layer. In this section, we present the intuition for the layering framework.<br />

The primary goal behind designing the LTCP protocol is to make the congestion response function scale in highspeed networks under the following constraints: (a) LTCP flows should be fair to each other when they have the same RTT, (b) the unfairness of LTCP flows with different RTTs should be no worse than the unfairness between unmodified TCP flows with similar RTTs, (c) LTCP flows should be fair to TCP flows when the window is below a predefined threshold $W_T$. The threshold $W_T$ defines the regime in which LTCP is friendly to standard implementations of TCP. We choose a value of 50 packets for $W_T$. This value has been chosen to maintain proportional fairness between LTCP and unmodified TCP flows in slow networks which do not require the window scale option [26] to be turned on. Arguments aimed at keeping new protocols fair to TCP below a predefined window threshold, while allowing more aggressive behavior beyond the threshold, have been put forth in [14], [15] as well.<br />

All new LTCP connections start with one layer and behave in all respects the same as TCP. The congestion window response is modified only if the congestion window increases beyond the threshold $W_T$. Just like the standard implementations of TCP, the LTCP protocol is ack-clocked and the congestion window of an LTCP flow changes with each incoming ack. However, an LTCP flow increases the congestion window more aggressively than the standard implementation of TCP depending on the layer at which it is operating. When operating at layer $K$, the LTCP protocol increases the congestion window as if it were emulating $K$ virtual flows. That is, the congestion window is increased by $K/W$ for each incoming ack, where $W$ is the current congestion window, or equivalently, it is increased by $K$ on the successful receipt of one window of acknowledgements. This is similar to the increase behaviour explored in [11].<br />
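The per-ack rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `on_ack` and `one_rtt` are hypothetical, the window is counted in packets, and one ack is assumed to arrive for every packet of the window at the start of the RTT.

```python
def on_ack(cwnd: float, layers: int) -> float:
    """Return the congestion window after one ack when operating at `layers` layers."""
    return cwnd + layers / cwnd


def one_rtt(cwnd: float, layers: int) -> float:
    """Apply the per-ack rule once for each packet of the window held at the start of the RTT."""
    for _ in range(int(cwnd)):
        cwnd = on_ack(cwnd, layers)
    return cwnd


start = 100.0
for k in (1, 2, 4):
    growth = one_rtt(start, k) - start
    # Growth is slightly below k because the window grows while acks are processed.
    print(f"layers={k}: window grew by {growth:.2f} packets in one RTT")
```

With one layer the rule reduces to the familiar TCP congestion avoidance increase of roughly one packet per RTT; with $K$ layers the growth is roughly $K$ packets per RTT.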

Layers, on the other hand, are added if congestion is not observed over an extended period of time. To do this, a simple layering scheme is used. Suppose each layer $K$ is associated with a step-size $\delta_K$. When the current congestion window exceeds the window corresponding to the last addition of a layer ($W_K$) by the step-size $\delta_K$, a new layer is added. Thus,<br />

$$W_1 = 0, \quad W_2 = W_1 + \delta_1, \quad \ldots, \quad W_K = W_{K-1} + \delta_{K-1} \qquad (1)$$

and the number of layers is $K$ when $W_K \le W \lt W_{K+1}$.<br />

Fig. 1. Graphical Perspective of Layers in LTCP<br />

Figure 1 shows this graphically. The step size $\delta_K$ associated with layer $K$ should be chosen such that convergence is possible when several flows (with similar RTT) share the bandwidth. Consider the simple case when the link is to be shared by two LTCP flows with the same RTT. Say, the flow that started earlier operates at a higher layer $K_1$ (with a larger window) compared to the later-starting flow operating at a smaller layer $K_2$ (with the smaller window). In the absence of network congestion, the first flow increases the congestion window by $K_1$ packets per RTT, whereas the second flow increases by $K_2$ packets per RTT. In order to ensure that the first flow does not continue to increase at a rate faster than the second flow, it is essential that the first flow adds layers slower than the second flow. Thus, if $\delta_{K_1}$ is the stepsize associated with layer $K_1$ and $\delta_{K_2}$ is the stepsize associated with layer $K_2$, then<br />

$$\frac{\delta_{K_1}}{K_1} \gt \frac{\delta_{K_2}}{K_2} \qquad (2)$$

when $K_1 \gt K_2$, for all values of $K_1, K_2 \ge 2$.<br />

The design of the decrease behavior is guided by the following reasoning - in order for two flows with the same RTT, starting at different times, to converge, the time taken by the larger flow to regain the bandwidth it gave up after a congestion event should be larger than the recovery time of the smaller flow. Suppose the two flows are operating at layers $K_1$ and $K_2$ ($K_1 \gt K_2$), and $WR_1$ and $WR_2$ are the window reductions of each flow upon a packet loss. After the packet drop, suppose the flows operate at layers $K_1'$ and $K_2'$ respectively. Then, the flows take $WR_1 / K_1'$ and $WR_2 / K_2'$ RTTs respectively to regain the lost bandwidth. From the above reasoning, this gives us -<br />

$$\frac{WR_1}{K_1'} \gt \frac{WR_2}{K_2'} \qquad (3)$$

The window reduction can be chosen proportional to the current window size or be based on the layer at which the flow operates. If the latter is chosen, then care must be taken to ensure convergence when two flows operate at the same layer but at different window sizes.<br />

This framework provides a simple, yet scalable design for the congestion response function of TCP for the congestion avoidance phase in highspeed networks. The congestion window response in slow start is not modified, allowing the architecture to evolve with experimental slowstart algorithms such as [27]. At the end of slowstart the number of layers to operate at can easily be determined based on the window size. The key factor for the framework is to determine appropriate relationships for the step size $\delta_K$ (or equivalently, the window size $W_K$ at which the layer transitions occur) and the window reduction that satisfy the conditions in Equations 2 and 3.<br />
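The two convergence conditions of the framework (Equations 2 and 3) can be checked mechanically for any candidate design. The sketch below is illustrative only: the function names are hypothetical, and the step-size rules and window values in the usage section are made-up inputs, not values taken from the paper.

```python
from typing import Callable


def increase_condition_holds(step: Callable[[int], float], max_layer: int) -> bool:
    """Eq. 2: step(K) / K must be strictly larger at higher layers (checked for 2 <= K <= max_layer)."""
    ratios = [step(k) / k for k in range(2, max_layer + 1)]
    return all(low < high for low, high in zip(ratios, ratios[1:]))


def recovery_rtts(window_reduction: float, layer_after_drop: int) -> float:
    """RTTs needed to regain the lost window when growing by `layer_after_drop` packets per RTT."""
    return window_reduction / layer_after_drop


def decrease_condition_holds(wr_large: float, k_large_after: int,
                             wr_small: float, k_small_after: int) -> bool:
    """Eq. 3: the larger flow must take longer to recover than the smaller flow."""
    return recovery_rtts(wr_large, k_large_after) > recovery_rtts(wr_small, k_small_after)


if __name__ == "__main__":
    # Hypothetical step-size rules: quadratic steps satisfy Eq. 2, linear steps do not.
    print(increase_condition_holds(lambda k: 50.0 * k * k, 20))
    print(increase_condition_holds(lambda k: 100.0 * k, 20))
    # Hypothetical flows: 1500 packets lost at layer 9 vs. 300 packets lost at layer 4.
    print(decrease_condition_holds(1500.0, 9, 300.0, 4))
```

A linear step size fails Eq. 2 because $\delta_K / K$ is then the same at every layer, so a flow at a higher layer would add layers just as quickly as one at a lower layer.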

III. A DESIGN CHOICE<br />

Several different design options are possible for choosing the appropriate relationship for the stepsize $\delta$ and the window reduction $WR$. In this section, we present one possible design. We support this design with extensive analysis to understand the protocol behavior.<br />

For our design, we first choose the decrease behavior. Since we want to keep the design as close to that of TCP as possible, we choose a multiplicative decrease. However, the window reduction is based on a factor of $\beta$ such that -<br />

$$WR = \beta \cdot W \qquad (4)$$

Based on this choice for the decrease behavior we determine the appropriate increase behavior such that the conditions in Equation 2 and Equation 3 are satisfied. To provide an intuition for the choice of the increase behavior, we start by considering Eq. 3. Also, in order to allow smooth layer transitions, we stipulate that after a window reduction due to a packet loss, at most one layer can be dropped, i.e., a flow operating at layer $K$ before the packet loss should operate at layer $K$ or $(K-1)$ after the window reduction. Based on this stipulation, there are four<br />

ensuring convergence between multiple flows with similar RTT sharing the same link. While it is essential to choose the relationship between $W_K$ and $W_{K-1}$ such that the condition in Equation 7 is satisfied to ensure convergence, a very conservative choice would make the protocol slow in increasing the layers and hence less efficient in utilizing the bandwidth.<br />

With this choice, since layering starts at $W_2 = W_T$, we have -<br />

$$W_K = \frac{K(K+1)(K-1)}{6} W_T$$

By definition, $\delta_K = W_{K+1} - W_K$ and hence we have,<br />

$$\delta_K = \frac{K(K+1)}{2} W_T$$

The time for an LTCP flow to increase its window to $W_K$ is<br />

$$T_{slowstart} + \sum_{i=2}^{K-1} T_i$$

where $T_i$ is the time (in RTTs) for increasing the window from layer $i$ to $(i+1)$. When the flow operates at layer $K$, to reach the next layer, it has to increase the window by $\delta_K$ and the rate of increase is $K$ per RTT. Thus $T_K$ is given by $\delta_K / K$ RTTs. Substituting this in the above equation and doing the summation we find that the time to reach a window size of $W_K$ is<br />

$$T_{slowstart} + \frac{(K-2)(K+3)}{4} W_T \qquad (13)$$
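The summation behind Eq. 13 can be written out step by step. The derivation below is a worked sketch that assumes the step size of this design is $\delta_i = \frac{i(i+1)}{2} W_T$ and counts only the congestion avoidance time after layering starts at $W_2 = W_T$ (the slow start time is left out).

```latex
% Assumption: \delta_i = \frac{i(i+1)}{2} W_T, and a flow at layer i grows by i packets per RTT.
\begin{align*}
T_i &= \frac{\delta_i}{i} = \frac{i+1}{2}\, W_T \\
\sum_{i=2}^{K-1} T_i
  &= \frac{W_T}{2} \sum_{i=2}^{K-1} (i+1)
   = \frac{W_T}{2} \left( \frac{K(K+1)}{2} - 3 \right)
   = \frac{(K-2)(K+3)}{4}\, W_T
\end{align*}
```

For example, with $W_T = 50$ and $K = 15$ this gives $\frac{13 \cdot 18}{4} \cdot 50 = 2925$ RTTs.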

Note that the above analysis assumes that slowstart is terminated before layering starts.<br />

The table in Fig. 2 shows the number of layers corresponding to the window size at layer transitions ($W_K$) with $W_T = 50$. For a 2.4Gbps link with an RTT of 150ms and packet size of 1500 bytes, the window size can grow to 30,000. The number of layers required to maintain full link utilization in this case is at least $K = 15$. Based on this we choose $\beta = 0.15$ (corresponding to $K = 19$).<br />

The table also shows the speedup in claiming bandwidth compared to TCP, for an LTCP flow with $W_T = 50$, with the assumption that slowstart is terminated when the window equals $W_T$. This column gives an idea of the number of virtual TCP flows emulated by an LTCP flow. For instance, a flow that evolves to layer 15 behaves similarly to establishing 10 parallel flows at the beginning of the connection.<br />
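The numbers quoted for the 2.4Gbps example can be reproduced with a short Python sketch. It assumes the layer transition windows of this design are $W_K = \frac{K(K+1)(K-1)}{6} W_T$ and that the time to reach $W_K$ after slow start is $\frac{(K-2)(K+3)}{4} W_T$ RTTs; the function names are hypothetical and the TCP comparison assumes standard TCP grows by one packet per RTT from $W_T$.

```python
W_T = 50  # window threshold in packets, the value used in the text


def layer_window(k: int, w_t: int = W_T) -> float:
    """Window W_K at which layer K starts (assumed design: K(K+1)(K-1)/6 * W_T)."""
    return k * (k + 1) * (k - 1) / 6 * w_t


def layer_for_window(w: float, w_t: int = W_T) -> int:
    """Largest layer K >= 1 whose transition window W_K does not exceed w."""
    k = 1
    while layer_window(k + 1, w_t) <= w:
        k += 1
    return k


def ltcp_rtts_to_layer(k: int, w_t: int = W_T) -> float:
    """RTTs of congestion avoidance needed to grow from W_T (layer 2) to W_K."""
    return (k - 2) * (k + 3) / 4 * w_t


def tcp_rtts_to_window(w: float, w_t: int = W_T) -> float:
    """Standard TCP adds one packet per RTT, so it needs (w - W_T) RTTs."""
    return w - w_t


# Bandwidth-delay product of the example link, in packets.
bdp = 2.4e9 * 0.150 / (1500 * 8)
k = layer_for_window(bdp)
speedup = tcp_rtts_to_window(layer_window(k)) / ltcp_rtts_to_layer(k)
print(f"window={bdp:.0f} packets, layer={k}, speedup over TCP={speedup:.1f}x")
```

Under these assumptions the link needs a window of 30,000 packets, which falls in layer 15, and reaching that layer is roughly ten times faster than with standard TCP, in line with the figures quoted above.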

An LTCP flow with window size $W$ will reduce the congestion window by $\beta W$. It then starts to increase the congestion window at the rate of at least $(K-1)$ packets per RTT (since we stipulate that a packet drop results in the reduction of at most one layer). The upper bound on the packet loss recovery time for LTCP then, is $\frac{\beta W}{K-1}$. In case of TCP, upon a packet drop, the window is reduced by half, and after the drop the rate of increase is 1 per RTT. Thus, the packet recovery time is $W/2$. The last column of the table in Fig. 2 shows the speedup in packet recovery time for LTCP with $\beta = 0.15$ compared to TCP. Based on the conservative estimate that a layer reduction occurs after a packet drop, the speedup in the packet recovery time of LTCP compared to TCP is a factor of $\frac{K-1}{2\beta}$.<br />

Fig. 2. Comparison of LTCP (with $W_T$ = 50 and $\beta$ = 0.15) to TCP<br />

2) Throughput Analysis: In order to understand the relationship between throughput of an LTCP flow and the drop probability $p$ of the link, we present the following analysis. Fig. 3 shows the steady state behavior of the congestion window of an LTCP flow with a uniform loss probability model. Suppose the number of layers at steady state is $K$ and the link drop probability is $p$. Let $W''$ and $W'$ represent the congestion window just before and just after a packet drop respectively. On a packet loss the congestion window is reduced by $\beta W''$. Suppose the flow operates at layer $K'$ after the packet drop. Then, for each RTT after the loss, the congestion window is increased at the rate of $K'$ until the window reaches the value $W''$, when the next packet drop occurs. The window behavior of the LTCP flow, in general, will look like Fig. 3 at steady state.<br />

Fig. 3. Analysis of Steady State Behavior<br />

With this model, the time between two successive losses, say $T_D$, will be $\frac{\beta W''}{K'}$ RTTs or $\frac{\beta W''}{K'} \cdot RTT$ seconds. The number of packets sent between two successive losses, say $N_D$, is given by the area of the shaded region in Fig. 3. This can be shown to be -<br />

$$N_D = \frac{\beta W''^2}{K'} \left(1 - \frac{\beta}{2}\right) \qquad (14)$$

The throughput of such an LTCP flow can be computed as $N_D / T_D$. That is,<br />

$$BW = \frac{N_D}{T_D} = \frac{W''}{RTT} \left(1 - \frac{\beta}{2}\right) \qquad (15)$$

The number of packets sent between two losses is nothing but $1/p$. By substituting this in Eq. 14, and solving for $W''$ we have,<br />

$$W'' = \sqrt{\frac{K'}{\beta \left(1 - \frac{\beta}{2}\right) p}} \qquad (16)$$

Substituting in Eq. 15 we have<br />

$$BW = \frac{1}{RTT} \sqrt{\frac{K' \left(1 - \frac{\beta}{2}\right)}{\beta\, p}} \qquad (17)$$

Again if we consider the example above of the 2.4Gbps link with an RTT of 150ms and packet size of 1500 bytes, the window size can grow to 30,000. From Fig. 2 we see that this window size corresponds to a layer size of $K = 15$. Substituting this value in the above equation, we notice that for the 2.4Gbps link mentioned above with $\beta = 0.15$, LTCP offers an improvement of a factor of about 8 for the achievable single-flow throughput compared to TCP.<br />
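The factor of about 8 can be checked numerically. The sketch below assumes the steady state throughput has the form $BW = \frac{1}{RTT}\sqrt{K'(1 - \beta/2) / (\beta p)}$ and models standard TCP as the special case $K' = 1$, $\beta = 0.5$, which gives the familiar $\sqrt{1.5/p}\,/\,RTT$ expression. The function names and the loss probability used in the example are hypothetical.

```python
import math


def ltcp_throughput(layer_after_drop: int, beta: float, p: float, rtt: float) -> float:
    """Steady state throughput in packets per second for drop probability p and RTT in seconds."""
    return math.sqrt(layer_after_drop * (1 - beta / 2) / (beta * p)) / rtt


def tcp_throughput(p: float, rtt: float) -> float:
    """Standard TCP treated as one layer with a window reduction factor of 0.5."""
    return ltcp_throughput(1, 0.5, p, rtt)


p = 1e-7     # hypothetical drop probability; the ratio below does not depend on it
rtt = 0.150  # RTT of the example link, in seconds
ratio = ltcp_throughput(15, 0.15, p, rtt) / tcp_throughput(p, rtt)
print(f"LTCP / TCP throughput ratio at layer 15: {ratio:.2f}")
```

Because both expressions share the same dependence on $p$ and RTT, the ratio depends only on $K'$ and $\beta$, and it comes out just under 8 for $K' = 15$ and $\beta = 0.15$.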

3) RTT Unfairness: In this section we assess the RTT unfairness of LTCP under the assumptions of random loss model as well as synchronized loss models. In [29], for a random loss model the probability of the packet loss is given by -<br />

$$p = \frac{A(w, RTT)}{A(w, RTT) + B(w, RTT)} \qquad (18)$$

where $A(w, RTT)$ and $B(w, RTT)$ are the window increase and decrease functions respectively. For LTCP $A(w, RTT) = K/w$ and $B(w, RTT) = \beta w$. Substituting these values in the above equation and approximating $K \propto w^{1/3}$, we can calculate the loss rate as -<br />

$$p \propto \frac{1}{1 + \beta\, w_s^{5/3}} \qquad (19)$$

where $w_s$ is the statistical equilibrium window.<br />

It is clear from the above equation that two LTCP flows experiencing the same loss probability will have the same equilibrium window size, regardless of the round trip time. However, throughput at the equilibrium point becomes inversely proportional to its round trip time since the average transmission rate $r_s$ is given by $w_s / RTT$.<br />

The loss probability for TCP with similar assumptions is given by:<br />

$$p = \frac{1}{1 + 0.5\, w_s^2} \qquad (20)$$

The equilibrium window size of the TCP flow does not depend on the RTT either. Therefore, for random losses the RTT dependence of the window of an LTCP flow is the same as TCP. Thus LTCP has window-oriented fairness similar to TCP and will not perform worse than TCP in case of random losses.<br />

Because of the nature of current deployment of high bandwidth<br />

networks, it is likely that the degree of multiplex<strong>in</strong>g<br />

will be small and as such, an assumption of synchronized loss<br />

model may be more appropriate. So we present here the details<br />

of the analysis with the synchronised loss model as well.<br />

Follow<strong>in</strong>g a similar analysis <strong>in</strong> [17], for synchronized losses,<br />

suppose the time between two drops ¨ is . For a © flow<br />

ss,ª<br />

with<br />

round trip C time<br />

w<strong>in</strong>dow size is<br />

and probability of loss †<br />

•¬« <br />

<br />

, the average<br />

ss,ª<br />

ª (21)<br />

¨{†<br />

ª¢® ¥¤E<br />

ª +¯±°¦©° ® <br />

R<br />

J<br />

N“RŽP<br />

J<br />

A<br />

T (22)<br />

¢<br />

By substitut<strong>in</strong>g the above <strong>in</strong> Eq. 21 and simplify<strong>in</strong>g we get,<br />

E ª ®<br />

ss,ª (23)<br />

¨²¥<br />

From Eq. 9 we see that the number of ¥ layers is related to<br />

the w<strong>in</strong>dow ¢ size based on the ¥³ž\¢ ´<br />

relationship . By<br />

substitut<strong>in</strong>g this relationship <strong>in</strong> the above equation we have,<br />

´:(24)<br />

When the <strong>RTT</strong> unfairness is def<strong>in</strong>ed as the throughput ratio<br />

of two flows <strong>in</strong> terms of their <strong>RTT</strong> ratios, the <strong>RTT</strong> unfairness<br />

for L<strong>TCP</strong> is -<br />

ž\N<br />

®<br />

ssµªT<br />

¨<br />

ss<br />

ž\N ss Th:<br />

(25)<br />

NxRŽP§† £u£ : £u£ :¢„ T<br />

G G<br />

(s<strong>in</strong>ce †b1^1)R ).<br />

This is slightly worse than the <strong>RTT</strong> unfairness of unmodified<br />

<strong>TCP</strong> shown to be proportional NG £u£ :<br />

G £u£ 8 T <br />

to <strong>in</strong> [17].<br />

In case of L<strong>TCP</strong>, a flow with larger w<strong>in</strong>dow size operates at a<br />

higher layer and <strong>in</strong>creases the w<strong>in</strong>dow at the rate correspond<strong>in</strong>g<br />

to that layer. Therefore, it is relatively more aggressive<br />

than a flow with a smaller w<strong>in</strong>dow size which operates at a<br />

lower layer and hence <strong>in</strong>creases the w<strong>in</strong>dow at a lower rate.<br />

This, coupled with the difference <strong>in</strong> <strong>RTT</strong>s could make the <strong>RTT</strong><br />

unfairness of L<strong>TCP</strong> worse than that of <strong>TCP</strong>. When the losses<br />

are random, the larger flow experiences more packet drops<br />

than the smaller flow. The result<strong>in</strong>g difference <strong>in</strong> the number<br />

of loss events reduces the effect of <strong>RTT</strong> unfairness of L<strong>TCP</strong>.<br />

However, with synchronous loss model, when equal number<br />

of loss events may be observed by different flows, the <strong>RTT</strong><br />

unfairness could be worse than that of <strong>TCP</strong>.<br />

In order to compensate for this dependence of aggressiveness on RTT, we introduce the RTT compensation factor $K_R$ and modify the per-ack behavior such that an LTCP flow at layer $K$ will increase the congestion window at the rate of $K_R \cdot K$ for the successful receipt of one window of acknowledgements. The RTT compensation factor is set to 1 for the base RTT of 10ms. For larger RTTs, $K_R$ is made proportional to the ratio of the flow's RTT to the base RTT. This uniformly scales up the rate at which the higher RTT flow increases its window. Since the RTT compensation factor $K_R$ is constant for a given RTT and multiplicative in nature, it does not alter the analyses in the earlier discussion for flows with the same RTT. However, the throughput equation for LTCP and the RTT unfairness can now be re-written as -

s<strong>in</strong>ce the flow will send •¬« packets between two consecutive<br />

drop events and the number of <strong>RTT</strong>s between the two consecutive<br />

loss events<br />

<br />

£u£ « .<br />

From Eq. 17, we have the bandwidth of an L<strong>TCP</strong> flow to<br />

G<br />

be<br />

G £u£ «<br />

E<br />

¢˜i»<br />

ss<br />

E<br />

ª Ll¥ G<br />

« L ®<br />

ss,ª š<br />

¥<br />

G 8 ¥<br />

G : ¥<br />

´:(26)<br />

žZN ss T : N<br />

G £u£#:<br />

The above equation shows that by choosing $K_R$ appropriately, the RTT unfairness of LTCP flows can be controlled. For instance, choosing $K_R \propto RTT^{1/3}$, the RTT unfairness of the LTCP protocol will be similar to the AIMD scheme used in TCP. By choosing $K_R \propto RTT$, the effect of RTT on the scheme can be entirely eliminated and the LTCP protocol behaves like a rate controlled scheme independent of the RTT. By choosing an intermediate value such as $K_R \propto RTT^{1/2}$, we can reduce the RTT unfairness of LTCP in comparison to TCP.

it does not interfere with the simulations. For the LTCP flows, the parameter $W_T$ is set to 50 packets and the parameter $\beta$ was set to 0.15. The traffic consists of FTP transfers between the senders and receivers.

In general, suppose we choose $K_R$ proportional to $RTT^{\alpha}$, where $\alpha$ is a constant. After a window reduction $WR$, suppose a flow operates at layer $K'$. When RTT compensation is used, it takes $\frac{WR}{K_R K'}$ RTTs, or $\frac{WR \cdot RTT}{K_R K'}$ secs, to regain the lost bandwidth. Suppose two flows with different RTTs are competing for the available bandwidth. Equation 3 can then be re-written as

ss 8<br />

<br />

Substituting the value of $WR$ and solving further, we have,

G ÁLl¥ E ; ¥<br />

ss<br />

ss<br />

¥ G 9Ll¥ E<br />

½ *, 1<br />

BR#T NO¥¤EÂ<br />

E ¥<br />

¾]P

larger than that of TCP, which, owing to under-utilization of the link, sees lower congestion losses.

Fig. 8. Fairness Among LTCP Flows

Fig. 6. Link Utilization and Packet Loss Rate for LTCP and TCP

2) Dynamic Link Sharing: In this experiment, we evaluate how LTCP flows respond to dynamically changing traffic conditions created by the multiplexing of several flows starting and stopping at different times. One LTCP flow is started at time 0 and allowed to reach steady state. A new LTCP flow is then added at 150 seconds and lasts for 1250 seconds. A third LTCP flow is added at 350 seconds and lasts for 850 seconds. Finally, the fourth flow starts its transfer at 550 seconds and sends data for 450 seconds. All these flows share the same bottleneck link. Fig. 7 shows the throughput of each flow. From the graph we see that when a new flow is started and the available bandwidth on the bottleneck link decreases, the existing LTCP flows quickly give up bandwidth until all flows reach the fair utilization level. The per-flow link utilizations remain stable at this value until some of the flows stop sending traffic. When that happens, the remaining LTCP flows quickly ramp up their congestion windows to reach the new fair sharing level, such that the link is fully utilized.
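The flow schedule described above determines how many flows are active in each interval, and hence the ideal equal share each flow would converge to. The sketch below works this out. The 1000 Mbps bottleneck capacity and the 1800 second duration of the first flow are assumptions made for illustration (the text states neither), and the names `FLOWS`, `LINK_MBPS` and `fair_share_schedule` are hypothetical.

```python
# (start time, duration) in seconds, as given in the experiment description.
# The duration of LTCP1 is not stated in the text; 1800 s is an assumption.
FLOWS = {
    "LTCP1": (0, 1800),
    "LTCP2": (150, 1250),
    "LTCP3": (350, 850),
    "LTCP4": (550, 450),
}
LINK_MBPS = 1000.0  # assumed bottleneck capacity, not stated in the text


def fair_share_schedule(flows, link_mbps):
    """Return (begin, end, active_flows, ideal_share_mbps) for each interval."""
    # Every start or stop time is a point where the set of active flows changes.
    events = sorted({t for start, dur in flows.values() for t in (start, start + dur)})
    schedule = []
    for begin, end in zip(events, events[1:]):
        active = sum(1 for start, dur in flows.values() if start <= begin < start + dur)
        if active:
            schedule.append((begin, end, active, link_mbps / active))
    return schedule


if __name__ == "__main__":
    for begin, end, active, share in fair_share_schedule(FLOWS, LINK_MBPS):
        print(f"{begin:5d}-{end:5d} s: {active} flow(s), ideal share {share:7.2f} Mbps")
```

Under these assumptions the number of active flows goes 1, 2, 3, 4, 3, 2, 1 over the run, which is the staircase pattern the throughput curves are expected to follow.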


4) Interaction with TCP: In this section, we study the effect of LTCP on regular TCP flows. It must be noted that the window response function of LTCP is designed to be more aggressive than TCP in high speed networks. A single TCP flow therefore cannot compete with a single LTCP flow, and so for this simulation we compare the aggregate throughput of ten TCP flows to that of one LTCP flow at different link bandwidths. Also, to verify that an established LTCP flow gives up a share of its bandwidth to TCP flows, we first start the LTCP transfer and let it run for 300 seconds, so that it utilizes the link fully. At this point the TCP flows are started. The throughput of the flows is calculated after another 300 seconds. Fig. 9 shows the results. As the link bandwidth increases, TCP flows become more inefficient in utilizing the available bandwidth, and as such, the percentage of the bandwidth used by TCP decreases. But the aggregate throughput of the TCP flows increases with higher link capacity, showing that in spite of the aggressive nature of LTCP congestion control, it still gives up a share of the bandwidth to competing TCP flows. For instance, on the 500Mbps link, the aggregate throughput of the TCP flows is 101.25Mbps, but on the 1Gbps link it is 185.64Mbps.
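The two data points quoted above make the trend concrete: the aggregate TCP throughput grows with link capacity while its percentage share shrinks. A minimal sketch of the arithmetic follows; the names `RESULTS` and `tcp_share_percent` are illustrative only.

```python
# (link capacity in Mbps, aggregate throughput of the ten TCP flows in Mbps),
# taken from the two examples quoted in the text.
RESULTS = [(500.0, 101.25), (1000.0, 185.64)]


def tcp_share_percent(link_mbps, tcp_mbps):
    """Percentage of the link capacity used by the aggregate of the TCP flows."""
    return 100.0 * tcp_mbps / link_mbps


if __name__ == "__main__":
    for link, tcp in RESULTS:
        print(f"{link:6.0f} Mbps link: TCP aggregate {tcp:7.2f} Mbps "
              f"({tcp_share_percent(link, tcp):.2f}% of capacity)")
```

The share works out to roughly 20% on the 500Mbps link and roughly 19% on the 1Gbps link.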

[Figure 7: throughput (Mbps) versus time (seconds) for the flows LTCP1, LTCP2, LTCP3 and LTCP4]

Fig. 7. Dynamic Link Sharing

3) Fairness Among Multiple LTCP Flows: In this experiment, we evaluate the fairness of LTCP flows to each other. Different numbers of LTCP flows are started at the same time and the average per-flow bandwidth is noted. The table in Fig. 8 shows that when the number of flows is varied, the maximum and the minimum per-flow throughputs remain close to the average, indicating that the throughput of each flow is close to its fair proportional share. This is verified by calculating the Fairness Index proposed by Jain et al. in [28]. The Fairness Index being close to 1 shows that the LTCP flows share the available network bandwidth equitably.
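The Fairness Index referred to above is commonly written as $\left(\sum_i x_i\right)^2 / \left(n \sum_i x_i^2\right)$ for per-flow throughputs $x_1, \dots, x_n$; it equals 1 when all flows receive the same throughput and $1/n$ when a single flow takes everything. The sketch below computes it. The sample throughput values are made up for illustration and are not results from the paper, and the function name `jain_fairness_index` is hypothetical.

```python
def jain_fairness_index(throughputs):
    """Fairness index: (sum x)^2 / (n * sum x^2), a value in (0, 1]."""
    xs = list(throughputs)
    if not xs:
        raise ValueError("at least one throughput value is required")
    total = sum(xs)
    squares = sum(x * x for x in xs)
    if squares == 0:
        raise ValueError("at least one throughput must be non-zero")
    return (total * total) / (len(xs) * squares)


if __name__ == "__main__":
    # Illustrative per-flow throughputs in Mbps (not measured data).
    nearly_equal = [248.0, 251.0, 250.5, 249.5]
    one_sided = [970.0, 10.0, 10.0, 10.0]
    print(f"nearly equal shares: {jain_fairness_index(nearly_equal):.4f}")
    print(f"one dominant flow:   {jain_fairness_index(one_sided):.4f}")
```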

Fig. 9. Interaction of LTCP with TCP

5) RTT Unfairness: The table in Fig. 10 shows the ratio of throughput of two flows with different RTTs sharing the same bottleneck link. The ratio of RTT of the two flows is set to 1, 2 and 3 for the three runs of the experiment, with the RTT of the shorter-delay flow being 40ms. For the computation of the RTT compensation factor $K_R$, a base RTT of 10ms is used. It can be seen from the table that the use of the RTT compensation factor reduces the RTT unfairness of LTCP. With an RTT compensation factor of $K_R \propto RTT^{1/3}$, LTCP shows RTT unfairness comparable to TCP, while with an RTT compensation factor of $K_R \propto RTT^{1/2}$ the RTT unfairness of LTCP in comparison to that of TCP is reduced.

Fig. 10. RTT Unfairness for Different Values of $K_R$

Several other experiments were conducted verifying the effect of the RTT compensation factor on the link utilization, flow throughput, fairness and loss rates. However, due to lack of space, those results have not been included here. They will be made available in a technical report.
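To give a feel for the magnitudes involved in the RTT unfairness experiment, the following sketch tabulates a compensation factor of the form $K_R = (RTT / RTT_{base})^{\alpha}$ for RTTs of 40, 80 and 120ms (RTT ratios of 1, 2 and 3 relative to the 40ms flow) and a base RTT of 10ms. The function name `rtt_compensation`, the exponent name `alpha`, the exponent values 1/3 and 1/2, and the normalization that makes the factor exactly 1 at the base RTT are assumptions made for this example; the paper's implementation may compute the factor differently.

```python
def rtt_compensation(rtt_ms, alpha, base_rtt_ms=10.0):
    """Hypothetical K_R = (RTT / base RTT) ** alpha, equal to 1 at the base RTT."""
    if rtt_ms <= 0 or base_rtt_ms <= 0:
        raise ValueError("RTT values must be positive")
    return (rtt_ms / base_rtt_ms) ** alpha


if __name__ == "__main__":
    rtts_ms = (40.0, 80.0, 120.0)  # shorter-delay flow at 40 ms, RTT ratios 1, 2, 3
    for alpha in (1.0 / 3.0, 1.0 / 2.0):  # assumed exponents
        row = ", ".join(f"{rtt:.0f} ms -> {rtt_compensation(rtt, alpha):.2f}"
                        for rtt in rtts_ms)
        print(f"alpha = {alpha:.2f}: {row}")
```

A larger exponent gives the longer-RTT flow a proportionally larger boost per window of acknowledgements, which is the lever the text uses to trade off RTT unfairness.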

V. CONCLUSIONS AND FUTURE WORK

In this paper we have proposed LTCP, a layered approach for modifying TCP for high-speed high-delay links. LTCP employs two dimensional control for adapting to available bandwidth. At the macroscopic level, LTCP uses the concept of layering to increase the congestion window when congestion is not observed over an extended period of time. Within a layer $K$, LTCP uses modified additive increase (by $K$ per RTT) and remains ack-clocked. The layered architecture provides flexibility in choosing the sizes of the layers for achieving different goals. This paper explored one design option. For this design, the window reduction on a packet loss is chosen to be multiplicative.

We have shown through analysis and simulations that a single LTCP flow can adapt to nearly fully utilize the link bandwidth. Other significant features of the chosen design are: (a) it provides a significant speedup in claiming bandwidth and in packet loss recovery times compared to unmodified TCP; (b) multiple flows share the available link capacity equitably; (c) an RTT compensation factor can be used to ensure that the RTT unfairness is no worse than that of unmodified TCP; (d) it requires simple modifications to TCP's congestion response mechanisms and is controlled only by simple parameters - $W_T$, $\beta$ and $K_R$.

We have also implemented LTCP in the Linux kernel and are currently comparing its performance with other schemes. We plan to characterize the traffic in high speed links to understand the level of multiplexing and the nature of the losses. Comparative third party evaluation of LTCP against other proposals is currently underway at SLAC, Stanford.

Our design hinges on an early decision to use multiplicative decrease, and on the stipulation that at most one layer is dropped after a congestion event. A number of other possibilities exist for alternate designs of the general LTCP approach. We plan to pursue these options in the future.

REFERENCES

[1] M. Mathis, J. Semke, J. Mahdavi, and T. Ott, “The macroscopic behavior of the TCP congestion avoidance algorithms,” ACM Computer Communication Review, vol. 27(3), July 1997.

[2] Jeffrey Semke, Jamshid Mahdavi, and Matthew Mathis, “Automatic TCP Buffer Tuning”, Proceedings of ACM SIGCOMM, October 1998.

[3] Eric Weigle and Wu-chun Feng, “Dynamic Right-Sizing: a Simulation Study”, Proceedings of IEEE International Conference on Computer Communications and Networks (ICCCN), October 2001.

[4] Brian L. Tierney, Dan Gunter, Jason Lee, Martin Stoufer and Joseph B. Evans, “Enabling Network-Aware Applications”, 10th IEEE International Symposium on High Performance Distributed Computing (HPDC), August 2001.

[5] Tom Dunigan, Matt Mathis and Brian Tierney, “A TCP Tuning Daemon”, SuperComputing (SC), November 2002.

[6] S. Ostermann, M. Allman, and H. Kruse, “An Application-Level solution to TCP’s Satellite Inefficiencies”, Workshop on Satellite-based Information Services (WOSBIS), November 1996.

[7] J. Lee, D. Gunter, B. Tierney, B. Allcock, J. Bester, J. Bresnahan and S. Tuecke, “Applied Techniques for High Bandwidth Data Transfers Across Wide Area Networks”, Proceedings of International Conference on Computing in High Energy and Nuclear Physics, September 2001.

[8] C. Baru, R. Moore, A. Rajasekar, and M. Wan, “The SDSC storage resource broker”, In Proc. CASCON’98 Conference, Dec 1998.

[9] R. Long, L. E. Berman, L. Neve, G. Roy, and G. R. Thoma, “An application-level technique for faster transmission of large images on the Internet”, Proceedings of the SPIE: Multimedia Computing and Networking 1995, Feb 1995.

[10] H. Sivakumar, S. Bailey and R. Grossman, “PSockets: The Case for Application-level Network Striping for Data Intensive Applications using High Speed Wide Area Networks”, Proceedings of Super Computing, November 2000.

[11] Jon Crowcroft and Philippe Oechslin, “Differentiated End-to-End Internet Services using a Weighted Proportional Fair Sharing TCP”, ACM CCR, vol. 28, no. 3, July 1998.

[12] Thomas Hacker, Brian Noble and Brian Athey, “Improving Throughput and Maintaining Fairness using Parallel TCP”, Proceedings of IEEE Infocom 2004, March 2004.

[13] Hung-Yun Hsieh and Raghupathy Sivakumar, “pTCP: An End-to-End Transport Layer Protocol for Striped Connections”, Proceedings of 10th IEEE International Conference on Network Protocols, November 2002.

[14] Sally Floyd, “HighSpeed TCP for Large Congestion Windows”, RFC 3649, December 2003.

[15] Tom Kelly, “Scalable TCP: Improving Performance in HighSpeed Wide Area Networks”, ACM Computer Communications Review, April 2003.

[16] Cheng Jin, David X. Wei and Steven H. Low, “FAST TCP: motivation, architecture, algorithms, performance”, IEEE Infocom, March 2004.

[17] Lisong Xu, Khaled Harfoush, and Injong Rhee, “Binary Increase Congestion Control for Fast Long-Distance Networks”, To appear in Proceedings of IEEE Infocom 2004, March 2004.

[18] R. N. Shorten, D. J. Leith, J. Foy, and R. Kilduff, “Analysis and design of congestion control in synchronized communication networks”, June 2003, submitted for publication.

[19] Dina Katabi, Mark Handley, and Charlie Rohrs, “Congestion Control for High Bandwidth-Delay Product Networks”, Proceedings of ACM SIGCOMM 2002, August 2002.

[20] README file of tsunami-2002-12-02 release. http://www.indiana.edu/ anml/anmlresearch.html

[21] Eric He, Jason Leigh, Oliver Yu and Thomas A. DeFanti, “Reliable Blast UDP: Predictable High Performance Bulk Data Transfer”, Proceedings of IEEE Cluster Computing, September 2002.

[22] H. Sivakumar, R. Grossman, M. Mazzucco, Y. Pan, and Q. Zhang, “Simple Available Bandwidth Utilization Library for High-Speed Wide Area Networks”, to appear in Journal of Supercomputing, 2004.

[23] R.X. Wu and A.A. Chien, “GTP: Group Transport Protocol for Lambda-Grids”, 4th IEEE/ACM International Symposium on Cluster Computing and the Grid, April 2004.

[24] S. McCanne, V. Jacobson, and M. Vetterli, “Receiver-driven layered multicast”, Proceedings of ACM SIGCOMM ’96, August 1996.

[25] L. Vicisano, L. Rizzo, and J. Crowcroft, “TCP-like congestion control for layered multicast data transfer”, Proceedings of IEEE Infocom ’98, March 1998.

[26] V. Jacobson, R. Braden and D. Borman, “TCP Extensions for High Performance”, RFC 1323, May 1992.

[27] S. Floyd, “Limited Slow-Start for TCP with Large Congestion Windows”, RFC 3742, March 2004.

[28] Dah-Ming Chiu and Raj Jain, “Analysis of the Increase and Decrease Algorithms for Congestion Avoidance in Computer Networks”, Computer Networks and ISDN Systems, 17, June 1989.

[29] S. J. Golestani and K. K. Sabnani, “Fundamental observations on multicast congestion control in the Internet”, In Proceedings of IEEE INFOCOM’99, New York, NY, March 1999.