Bivariate or joint probability distributions

Bivariate or joint probability distributions

Bivariate or joint probability distributions

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

1<br />

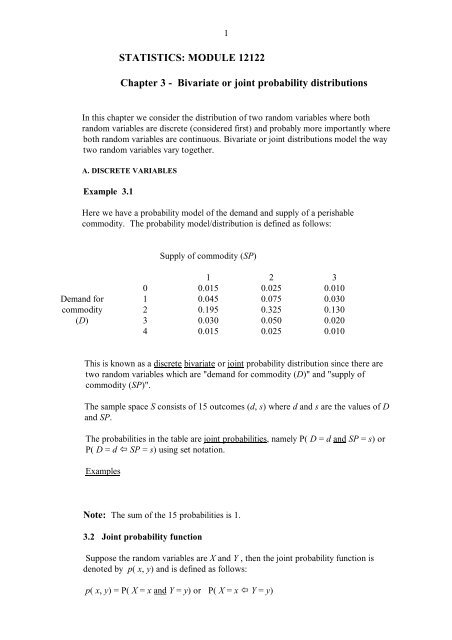

STATISTICS: MODULE 12122

Chapter 3 - Bivariate or joint probability distributions

In this chapter we consider the distribution of two random variables where both random variables are discrete (considered first) and, probably more importantly, where both random variables are continuous. Bivariate or joint distributions model the way two random variables vary together.

A. DISCRETE VARIABLES

Example 3.1

Here we have a probability model of the demand and supply of a perishable commodity. The probability model/distribution is defined as follows:

| Demand for commodity (D) \ Supply of commodity (SP) | 1 | 2 | 3 |
|---|---|---|---|
| 0 | 0.015 | 0.025 | 0.010 |
| 1 | 0.045 | 0.075 | 0.030 |
| 2 | 0.195 | 0.325 | 0.130 |
| 3 | 0.030 | 0.050 | 0.020 |
| 4 | 0.015 | 0.025 | 0.010 |

This is known as a discrete bivariate or joint probability distribution since there are two random variables, which are "demand for commodity (D)" and "supply of commodity (SP)".

The sample space S consists of 15 outcomes (d, s), where d and s are the values of D and SP.

The probabilities in the table are joint probabilities, namely $P(D = d \text{ and } SP = s)$, or $P(D = d \cap SP = s)$ using set notation.


Note: The sum of the 15 probabilities is 1.
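As a quick check of this note, here is a minimal Python sketch (standard library only) that stores the Example 3.1 table as exact fractions and confirms that there are 15 outcomes whose probabilities sum to 1. The names `rows`, `supply_values` and `joint` are illustrative choices, not notation from the notes.

```python
from fractions import Fraction

# Example 3.1: each row lists P(D = d and SP = s) for supply s = 1, 2, 3.
# Fraction("0.015") stores the decimal exactly, so the total is exact.
rows = {
    0: ["0.015", "0.025", "0.010"],
    1: ["0.045", "0.075", "0.030"],
    2: ["0.195", "0.325", "0.130"],
    3: ["0.030", "0.050", "0.020"],
    4: ["0.015", "0.025", "0.010"],
}
supply_values = [1, 2, 3]

# joint[(d, s)] = p(d, s)
joint = {
    (d, s): Fraction(p)
    for d, probs in rows.items()
    for s, p in zip(supply_values, probs)
}

print(len(joint))           # 15 outcomes (d, s)
print(sum(joint.values()))  # 1
```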

3.2 Joint probability function

Suppose the random variables are X and Y; then the joint probability function is denoted by p(x, y) and is defined as follows:

$$p(x, y) = P(X = x \text{ and } Y = y) \quad\text{or}\quad P(X = x \cap Y = y).$$

Also $\sum_x \sum_y p(x, y) = 1$.

3.3 Marginal probability distributions

The marginal distributions are the distributions of X and Y considered separately and model how X and Y vary separately from each other. Suppose the probability functions of X and Y are $p_X(x)$ and $p_Y(y)$ respectively, so that

$$p_X(x) = P(X = x) \quad\text{and}\quad p_Y(y) = P(Y = y).$$

Also $\sum_x p_X(x) = 1$ and $\sum_y p_Y(y) = 1$.

It is quite straightforward to obtain these from the joint probability distribution since

$$p_X(x) = \sum_y p(x, y) \quad\text{and}\quad p_Y(y) = \sum_x p(x, y).$$
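The marginal formulas can be sketched in Python for the Example 3.1 table. The hypothetical helper `marginals` adds each joint probability into a running total for its x value and one for its y value, which is exactly $p_X(x) = \sum_y p(x, y)$ and $p_Y(y) = \sum_x p(x, y)$. The table is re-entered so that the block runs on its own.

```python
from collections import defaultdict
from fractions import Fraction

# Example 3.1 joint table: rows are demand d = 0..4, columns supply s = 1, 2, 3.
rows = {
    0: ["0.015", "0.025", "0.010"],
    1: ["0.045", "0.075", "0.030"],
    2: ["0.195", "0.325", "0.130"],
    3: ["0.030", "0.050", "0.020"],
    4: ["0.015", "0.025", "0.010"],
}
joint = {(d, s): Fraction(p) for d, probs in rows.items() for s, p in zip([1, 2, 3], probs)}


def marginals(joint):
    """Return (p_X, p_Y) with p_X(x) = sum_y p(x, y) and p_Y(y) = sum_x p(x, y)."""
    p_x = defaultdict(Fraction)  # Fraction() == 0, so missing keys start at zero
    p_y = defaultdict(Fraction)
    for (x, y), p in joint.items():
        p_x[x] += p
        p_y[y] += p
    return dict(p_x), dict(p_y)


p_D, p_SP = marginals(joint)
print({d: float(p) for d, p in p_D.items()})   # {0: 0.05, 1: 0.15, 2: 0.65, 3: 0.1, 4: 0.05}
print({s: float(p) for s, p in p_SP.items()})  # {1: 0.3, 2: 0.5, 3: 0.2}
```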

In regression problems we are very interested in conditional probability distributions, such as the conditional distribution of X given Y = y and the conditional distribution of Y given X = x.

3.4 Conditional probability distributions

The conditional probability function of X given Y = y is denoted by $p(x \mid y)$ and is defined (provided $p_Y(y) > 0$) as

$$p(x \mid y) = P(X = x \mid Y = y) = \frac{P(X = x \text{ and } Y = y)}{P(Y = y)} = \frac{p(x, y)}{p_Y(y)},$$

whereas the conditional probability function of Y given X = x is denoted by $p(y \mid x)$ and is defined (provided $p_X(x) > 0$) as

$$p(y \mid x) = P(Y = y \mid X = x) = \frac{P(Y = y \text{ and } X = x)}{P(X = x)} = \frac{p(x, y)}{p_X(x)}.$$
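A minimal sketch of $p(x \mid y) = p(x, y)/p_Y(y)$, applied to the Example 3.1 table with X = D and Y = SP. The helper `conditional_x_given_y` is an illustrative name; for SP = 2 it divides that column of the table by the column total (0.5).

```python
from fractions import Fraction

# Example 3.1 joint table, keyed by (d, s).
rows = {
    0: ["0.015", "0.025", "0.010"],
    1: ["0.045", "0.075", "0.030"],
    2: ["0.195", "0.325", "0.130"],
    3: ["0.030", "0.050", "0.020"],
    4: ["0.015", "0.025", "0.010"],
}
joint = {(d, s): Fraction(p) for d, probs in rows.items() for s, p in zip([1, 2, 3], probs)}


def conditional_x_given_y(joint, y):
    """p(x | y) = p(x, y) / p_Y(y) for every x; requires p_Y(y) > 0."""
    p_y = sum((p for (_, v), p in joint.items() if v == y), Fraction(0))
    if p_y == 0:
        raise ValueError("p_Y(y) must be positive")
    return {x: p / p_y for (x, v), p in joint.items() if v == y}


cond = conditional_x_given_y(joint, 2)  # distribution of D given SP = 2
print({d: float(p) for d, p in cond.items()})  # {0: 0.05, 1: 0.15, 2: 0.65, 3: 0.1, 4: 0.05}
```

Each conditional probability equals the corresponding row total of the table (0.05, 0.15, 0.65, 0.10, 0.05), so conditioning on SP = 2 leaves the distribution of D unchanged here. That is consistent with independence, but one column alone does not prove it; the test in 3.6 needs the product condition in every cell.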

3.5 Joint probability distribution function

The joint (cumulative) probability distribution function (c.d.f.) is denoted by F(x, y) and is defined as

$$F(x, y) = P(X \le x \text{ and } Y \le y), \qquad 0 \le F(x, y) \le 1.$$

The marginal c.d.f.'s are denoted by $F_X(x)$ and $F_Y(y)$ and are defined as follows (see Chapter 1, section 1.12):

$$F_X(x) = P(X \le x) \quad\text{and}\quad F_Y(y) = P(Y \le y).$$
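For discrete variables, F(x, y) is a sum over the "lower-left corner" of the table. The sketch below uses the Example 3.1 table again, with a hypothetical helper `joint_cdf`, to compute $F(2, 2) = P(D \le 2 \text{ and } SP \le 2)$. Setting one argument to its largest value gives a marginal c.d.f., e.g. $F_D(2) = F(2, 3)$.

```python
from fractions import Fraction

# Example 3.1 joint table, keyed by (d, s).
rows = {
    0: ["0.015", "0.025", "0.010"],
    1: ["0.045", "0.075", "0.030"],
    2: ["0.195", "0.325", "0.130"],
    3: ["0.030", "0.050", "0.020"],
    4: ["0.015", "0.025", "0.010"],
}
joint = {(d, s): Fraction(p) for d, probs in rows.items() for s, p in zip([1, 2, 3], probs)}


def joint_cdf(joint, x, y):
    """F(x, y) = P(X <= x and Y <= y): add p(u, v) over all u <= x and v <= y."""
    return sum((p for (u, v), p in joint.items() if u <= x and v <= y), Fraction(0))


print(joint_cdf(joint, 2, 2))  # 17/25, i.e. 0.68
print(joint_cdf(joint, 4, 3))  # 1, since (4, 3) is the largest (d, s)
# One argument at its largest value gives a marginal c.d.f.: F_D(2) and F_SP(2).
print(joint_cdf(joint, 2, 3), joint_cdf(joint, 4, 2))  # 17/20 4/5
```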

3.6 Are X and Y independent?

If either (a) $F(x, y) = F_X(x)\,F_Y(y)$ or (b) $p(x, y) = p_X(x)\,p_Y(y)$ for all x and y, then X and Y are independent random variables.

Example 3.2 The joint distribution of X and Y is

| Y \ X | -2 | -1 | 0 | 1 | 2 |
|---|---|---|---|---|---|
| 10 | 0.09 | 0.15 | 0.27 | 0.25 | 0.04 |
| 20 | 0.01 | 0.05 | 0.08 | 0.05 | 0.01 |

(a) Find the marginal distributions of X and Y.

(b) Find the conditional distribution of X given Y = 20.

(c) Are X and Y independent?
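One way to check your answers to Example 3.2 is to let Python do the arithmetic with exact fractions. The sketch below follows 3.3, 3.4 and 3.6 directly; the variable names are illustrative.

```python
from fractions import Fraction

xs = [-2, -1, 0, 1, 2]
rows = {
    10: ["0.09", "0.15", "0.27", "0.25", "0.04"],
    20: ["0.01", "0.05", "0.08", "0.05", "0.01"],
}
ys = list(rows)
joint = {(x, y): Fraction(p) for y, probs in rows.items() for x, p in zip(xs, probs)}

# (a) Marginal distributions: sum over the other variable.
p_X = {x: sum(joint[(x, y)] for y in ys) for x in xs}
p_Y = {y: sum(joint[(x, y)] for x in xs) for y in ys}

# (b) Conditional distribution of X given Y = 20: p(x | 20) = p(x, 20) / p_Y(20).
cond_X_given_20 = {x: joint[(x, 20)] / p_Y[20] for x in xs}

# (c) Independence needs p(x, y) = p_X(x) p_Y(y) in every one of the 10 cells.
independent = all(joint[(x, y)] == p_X[x] * p_Y[y] for x in xs for y in ys)

print({x: float(p) for x, p in p_X.items()})
print({y: float(p) for y, p in p_Y.items()})
print({x: float(p) for x, p in cond_X_given_20.items()})
print(independent)  # False
```

The check fails in the very first cell: p(-2, 10) = 0.09, whereas p_X(-2) p_Y(10) = 0.10 × 0.80 = 0.08.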

B. CONTINUOUS VARIABLES

3.7 Joint probability density function

The joint p.d.f. is denoted by f(x, y) (where $f(x, y) \ge 0$ for all x and y) and defines a probability surface in 3 dimensions. Probability is a volume under this surface, and the total volume under the p.d.f. surface is 1 as the total probability is 1, i.e.

$$\int_{-\infty}^{\infty}\int_{-\infty}^{\infty} f(x, y)\,dx\,dy = 1$$

and

$$P(a \le X \le b \text{ and } c \le Y \le d) = \int_{y=c}^{d}\int_{x=a}^{b} f(x, y)\,dx\,dy.$$

As before with discrete variables, the marginal distributions are the distributions of X and Y considered separately and model how X and Y vary separately from each other. Whereas with discrete random variables we speak of marginal probability functions, with continuous random variables we speak of marginal probability density functions.

Example 3.3

An electronics system has one of each of two different types of components in joint operation. Let X and Y denote the random lengths of life of the components of type 1 and 2, respectively. Their joint density function is given by

$$f(x, y) = \frac{1}{8}\,x\,e^{-(x + y)/2}, \qquad x > 0,\ y > 0,$$
$$= 0 \quad \text{otherwise.}$$
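The rectangle-probability formula in 3.7 can be approximated numerically. The sketch below applies a simple midpoint rule (a swapped-in numerical technique, not something the notes use) to the Example 3.3 density: first over the large square [0, 60] × [0, 60] to confirm that the total volume is close to 1, then over [0, 2] × [0, 2]. The cut-off 60 and the grid sizes are arbitrary assumptions.

```python
import math


def f(x, y):
    """Joint p.d.f. of Example 3.3: f(x, y) = (1/8) x exp(-(x + y)/2) for x > 0, y > 0."""
    if x <= 0 or y <= 0:
        return 0.0
    return x * math.exp(-(x + y) / 2) / 8


def rectangle_prob(f, a, b, c, d, n=400):
    """Midpoint-rule estimate of P(a <= X <= b and c <= Y <= d), the volume under f."""
    hx, hy = (b - a) / n, (d - c) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * hx
        for j in range(n):
            total += f(x, c + (j + 0.5) * hy)
    return total * hx * hy


# Total volume: the infinite range is cut off at 60, where the tail is negligible.
total_volume = rectangle_prob(f, 0, 60, 0, 60, n=600)
# Probability that both lifetimes are at most 2.
p_both_within_2 = rectangle_prob(f, 0, 2, 0, 2)
print(total_volume, p_both_within_2)
```

Because this density factorises, integrating each factor by hand gives $(1 - 2e^{-1})(1 - e^{-1}) \approx 0.167$ for the second value, which the midpoint estimate should match closely.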

Example 3.4

The random variables X and Y have a bivariate normal distribution if

$$f(x, y) = a e^{-b},$$

where

$$a = \frac{1}{2\pi\sigma_X\sigma_Y\sqrt{1 - \rho^2}}$$

and

$$b = \frac{1}{2(1 - \rho^2)}\left[\left(\frac{x - \mu_X}{\sigma_X}\right)^2 - 2\rho\left(\frac{x - \mu_X}{\sigma_X}\right)\left(\frac{y - \mu_Y}{\sigma_Y}\right) + \left(\frac{y - \mu_Y}{\sigma_Y}\right)^2\right],$$

where $-\infty < x < \infty$ and $-\infty < y < \infty$.
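A direct transcription of the Example 3.4 density into Python, as a sketch: `bivariate_normal_pdf` builds a and b exactly as defined above. The parameter values in the usage lines are hypothetical. The block checks two things numerically: the volume under the surface is close to 1 (midpoint rule over ±8 standard deviations, an assumed cut-off), and with ρ = 0 the joint p.d.f. equals the product of the two Normal marginal densities.

```python
import math


def bivariate_normal_pdf(x, y, mu_x, mu_y, sigma_x, sigma_y, rho):
    """f(x, y) = a * exp(-b) with a and b as defined in Example 3.4 (requires |rho| < 1)."""
    if not -1 < rho < 1:
        raise ValueError("rho must lie strictly between -1 and 1")
    zx = (x - mu_x) / sigma_x
    zy = (y - mu_y) / sigma_y
    a = 1 / (2 * math.pi * sigma_x * sigma_y * math.sqrt(1 - rho ** 2))
    b = (zx ** 2 - 2 * rho * zx * zy + zy ** 2) / (2 * (1 - rho ** 2))
    return a * math.exp(-b)


def normal_pdf(x, mu, sigma):
    """Univariate N(mu, sigma^2) density, used for the rho = 0 comparison."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2 * math.pi))


def total_volume(mu_x, mu_y, sigma_x, sigma_y, rho, n=400):
    """Midpoint-rule volume under f over mu +/- 8 sigma in each direction."""
    ax, ay = mu_x - 8 * sigma_x, mu_y - 8 * sigma_y
    hx, hy = 16 * sigma_x / n, 16 * sigma_y / n
    s = 0.0
    for i in range(n):
        x = ax + (i + 0.5) * hx
        for j in range(n):
            s += bivariate_normal_pdf(x, ay + (j + 0.5) * hy, mu_x, mu_y, sigma_x, sigma_y, rho)
    return s * hx * hy


# Hypothetical parameter values, chosen only for illustration.
vol = total_volume(1.0, -1.0, 1.0, 2.0, 0.6)
joint_at_point = bivariate_normal_pdf(0.5, 0.0, 1.0, -1.0, 1.0, 2.0, 0.0)
product_at_point = normal_pdf(0.5, 1.0, 1.0) * normal_pdf(0.0, -1.0, 2.0)
print(vol)                               # close to 1
print(joint_at_point, product_at_point)  # equal (up to rounding) when rho = 0
```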

3.9 Conditional probability density functions

The conditional p.d.f. of X given Y = y is denoted by $f(x \mid y)$ and defined as

$$f(x \mid y) = f(x \mid Y = y) = \frac{f(x, y)}{f_Y(y)},$$

whereas the conditional p.d.f. of Y given X = x is denoted by $f(y \mid x)$ and defined as

$$f(y \mid x) = f(y \mid X = x) = \frac{f(x, y)}{f_X(x)}.$$

3.10 Joint probability distribution function

As in 3.5, the joint (cumulative) probability distribution function (c.d.f.) is denoted by F(x, y) and is defined as $F(x, y) = P(X \le x \text{ and } Y \le y)$, but F(x, y) in the continuous case is the volume under the p.d.f. surface from X = −∞ to X = x and from Y = −∞ to Y = y, so that

$$F(x, y) = \int_{v=-\infty}^{y}\int_{u=-\infty}^{x} f(u, v)\,du\,dv.$$

The marginal c.d.f.'s are defined as in 3.5 and can be obtained from the joint distribution function F(x, y) as follows:

$F_X(x) = F(x, y_{MAX})$, where $y_{MAX}$ is the largest value of y, and

$F_Y(y) = F(x_{MAX}, y)$, where $x_{MAX}$ is the largest value of x.

3.11 Important connections between the p.d.f.'s and the joint c.d.f.'s

(i) The joint p.d.f. $f(x, y) = \dfrac{\partial^2 F(x, y)}{\partial x\,\partial y}$.

(ii) The marginal p.d.f.'s can be obtained from the marginal c.d.f.'s as follows:

the marginal p.d.f. of X = $f_X(x) = \dfrac{dF_X(x)}{dx}$ or $F_X'(x)$,

the marginal p.d.f. of Y = $f_Y(y) = \dfrac{dF_Y(y)}{dy}$ or $F_Y'(y)$.

3.12 Are X and Y independent?

X and Y are independent random variables if either

(a) $F(x, y) = F_X(x)\,F_Y(y)$; or

(b) $f(x, y) = f_X(x)\,f_Y(y)$; or

(c) $f(x \mid y)$ = function of x only, or equivalently $f(y \mid x)$ = function of y only.

Example 3.5 The joint distribution function of X and Y is given by

$$F(x, y) = \frac{2y}{3}\left(\frac{x^2}{2} + x\right), \qquad 0 \le x \le 1,\ 0 \le y \le 1,$$
$$= 0 \quad \text{otherwise.}$$

(i) Find the marginal distribution and density functions.

(ii) Find the joint density function.

(iii) Are X and Y independent random variables?

Example 3.6

X and Y have the joint probability density function

$$f(x, y) = \frac{8x^2}{7y^3}, \qquad 1 \le x \le 2,\ 1 \le y \le 2.$$

(a) Derive the marginal distribution function of X.

(b) Derive the conditional density function of X given Y = y.

(c) Are X and Y independent?

Given: joint density fn. f(x, y) → integrate w.r.t. x and y → joint distribution fn.

$$F(x, y) = \int_{v=-\infty}^{y}\int_{u=-\infty}^{x} f(u, v)\,du\,dv.$$

Given: joint distribution fn. F(x, y) → differentiate (partially) w.r.t. x and y → joint density fn.

$$f(x, y) = \frac{\partial^2 F}{\partial x\,\partial y}.$$
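The two routes in this summary can be sketched numerically for Example 3.6: integrating f gives F, and a finite-difference mixed partial of F gives f back. `F_closed` uses the closed form $F(x, y) = \frac{4}{21}(x^3 - 1)(1 - 1/y^2)$ quoted in Method 2 of the solution that follows. The midpoint rule, step size, grid size and test point (1.5, 1.3) are arbitrary assumptions.

```python
def f(x, y):
    """Joint p.d.f. of Example 3.6: 8x^2 / (7y^3) for 1 <= x <= 2, 1 <= y <= 2, else 0."""
    if 1 <= x <= 2 and 1 <= y <= 2:
        return 8 * x ** 2 / (7 * y ** 3)
    return 0.0


def F_by_integration(x, y, n=200):
    """Integrate f -> F by the midpoint rule, for 1 <= x, y <= 2.
    f is 0 below 1, so the lower limits -infinity can be replaced by 1."""
    if x <= 1 or y <= 1:
        return 0.0
    hu, hv = (x - 1) / n, (y - 1) / n
    s = sum(f(1 + (i + 0.5) * hu, 1 + (j + 0.5) * hv) for i in range(n) for j in range(n))
    return s * hu * hv


def F_closed(x, y):
    """Closed-form joint c.d.f. from Method 2, valid for 1 <= x, y <= 2."""
    return 4 / 21 * (x ** 3 - 1) * (1 - 1 / y ** 2)


def mixed_partial(F, x, y, h=1e-3):
    """Differentiate F -> f: central-difference estimate of d^2 F / (dx dy)."""
    return (F(x + h, y + h) - F(x + h, y - h) - F(x - h, y + h) + F(x - h, y - h)) / (4 * h * h)


x0, y0 = 1.5, 1.3
print(F_by_integration(x0, y0), F_closed(x0, y0))  # integrate f -> F
print(mixed_partial(F_closed, x0, y0), f(x0, y0))  # differentiate F -> f
```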

Example 3.6(b) and (c)

Solution From 3.9, the conditional p.d.f. of X given Y = y is denoted by $f(x \mid y)$ and defined as

$$f(x \mid y) = f(x \mid Y = y) = \frac{f(x, y)}{f_Y(y)},$$

where $f(x, y)$ is the joint p.d.f. of X and Y and $f_Y(y)$ is the marginal p.d.f. of Y. We know $f(x, y) = \dfrac{8x^2}{7y^3}$, so we need to find $f_Y(y)$.

There are two ways you can find $f_Y(y)$. The first way involves integration and the second way involves differentiation. I will do both ways to show you how to use the different results we have here, but you should always choose the way you find easiest, i.e. you would not be expected to find $f_Y(y)$ both ways in any assessed work.

Method 1

From 3.8, $f_Y(y) = \int_{-\infty}^{\infty} f(x, y)\,dx$, so

$$f_Y(y) = \int_1^2 \frac{8x^2}{7y^3}\,dx = \frac{8}{7y^3}\int_1^2 x^2\,dx = \frac{8}{7y^3}\left[\frac{x^3}{3}\right]_1^2 = \frac{8}{7y^3}\left[\frac{2^3}{3} - \frac{1}{3}\right] = \frac{8}{7y^3}\left[\frac{7}{3}\right] = \frac{8}{3y^3}.$$

Method 2

From 3.11, $f_Y(y) = \dfrac{dF_Y(y)}{dy}$, where $F_Y(y)$ is the marginal c.d.f. of Y. From 3.10, $F_Y(y) = F(x_{MAX}, y)$, where $x_{MAX}$ is the largest value of x, so $F_Y(y) = F(2, y)$, and from part (a),

$$F(x, y) = \frac{4}{21}(x^3 - 1)\left(1 - \frac{1}{y^2}\right),$$

hence

$$F_Y(y) = F(2, y) = \frac{4}{21}(2^3 - 1)\left(1 - \frac{1}{y^2}\right) = \frac{4}{3}\left(1 - \frac{1}{y^2}\right),$$

so

$$f_Y(y) = \frac{d}{dy}\left(\frac{4}{3}\left(1 - \frac{1}{y^2}\right)\right) = \frac{4}{3}\left(\frac{2}{y^3}\right) = \frac{8}{3y^3},$$

as with Method 1.

Therefore the conditional density function of X given Y = y, $f(x \mid y)$, is given by

$$f(x \mid y) = \frac{f(x, y)}{f_Y(y)} = \frac{8x^2/(7y^3)}{8/(3y^3)} = \frac{3x^2}{7}.$$

So

$$f(x \mid y) = \frac{3x^2}{7}, \qquad 1 \le x \le 2 \text{ and } 1 \le y \le 2,$$
$$= 0 \quad \text{otherwise.}$$

(c) Now $f(x \mid y)$ is a function of x only, so using result 3.12(c), X and Y are independent. Notice also that $f(x \mid y) = f_X(x)$, which you would expect if X and Y are independent.
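A numerical cross-check of Method 1 and of part (b), as a sketch: `f_Y` integrates f(x, y) over x with the midpoint rule (an assumed numerical method, not the notes' approach), and `f_X_given_Y` divides by it. If the algebra is right, f_Y(y) matches 8/(3y³) and f(x | y) at a fixed x does not change with y.

```python
def f(x, y):
    """Joint p.d.f. of Example 3.6: 8x^2 / (7y^3) for 1 <= x <= 2, 1 <= y <= 2, else 0."""
    if 1 <= x <= 2 and 1 <= y <= 2:
        return 8 * x ** 2 / (7 * y ** 3)
    return 0.0


def f_Y(y, n=2000):
    """Method 1: marginal p.d.f. of Y, integrating f(x, y) over 1 <= x <= 2 (midpoint rule)."""
    h = 1 / n
    return h * sum(f(1 + (i + 0.5) * h, y) for i in range(n))


def f_X_given_Y(x, y):
    """Conditional p.d.f. f(x | y) = f(x, y) / f_Y(y)."""
    return f(x, y) / f_Y(y)


for y in (1.0, 1.5, 2.0):
    # f_Y(y) should match 8 / (3y^3); f(1.2 | y) should equal 3(1.2)^2 / 7 for every y.
    print(y, f_Y(y), 8 / (3 * y ** 3), f_X_given_Y(1.2, y), 3 * 1.2 ** 2 / 7)
```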

3.13 Expectations and variances

Discrete random variables

$$E(X^r) = \sum_x \sum_y x^r p(x, y) = \sum_x x^r \sum_y p(x, y) = \sum_x x^r p_X(x), \qquad r = 1, 2, \ldots$$

$$E(Y^r) = \sum_x \sum_y y^r p(x, y) = \sum_y y^r \sum_x p(x, y) = \sum_y y^r p_Y(y), \qquad r = 1, 2, \ldots$$

Hence $\mathrm{Var}(X) = E(X^2) - (E(X))^2$, $\mathrm{Var}(Y) = E(Y^2) - (E(Y))^2$, etc.

Continuous random variables

$$E(X^r) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} x^r f(x, y)\,dx\,dy = \int_{-\infty}^{\infty} x^r f_X(x)\,dx, \qquad r = 1, 2, \ldots$$

$$E(Y^r) = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} y^r f(x, y)\,dx\,dy = \int_{-\infty}^{\infty} y^r f_Y(y)\,dy, \qquad r = 1, 2, \ldots$$
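The discrete double sums can be evaluated exactly for the Example 3.2 table. This sketch sums $x^r p(x, y)$ over every cell, as in the first form of each formula, rather than going through the marginals; the helper names are illustrative.

```python
from fractions import Fraction

xs = [-2, -1, 0, 1, 2]
rows = {
    10: ["0.09", "0.15", "0.27", "0.25", "0.04"],
    20: ["0.01", "0.05", "0.08", "0.05", "0.01"],
}
joint = {(x, y): Fraction(p) for y, probs in rows.items() for x, p in zip(xs, probs)}


def moment_X(r):
    """E(X^r) = sum_x sum_y x^r p(x, y)."""
    return sum((x ** r * p for (x, _), p in joint.items()), Fraction(0))


def moment_Y(r):
    """E(Y^r) = sum_x sum_y y^r p(x, y)."""
    return sum((y ** r * p for (_, y), p in joint.items()), Fraction(0))


E_X, E_Y = moment_X(1), moment_Y(1)
var_X = moment_X(2) - E_X ** 2  # Var(X) = E(X^2) - (E(X))^2
var_Y = moment_Y(2) - E_Y ** 2
print(E_X, var_X, E_Y, var_Y)   # 0 11/10 12 16
```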

3.14 Expectation of a function of the r.v.'s X and Y

Continuous X and Y

$$E[g(X, Y)] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} g(x, y)\,f(x, y)\,dx\,dy,$$

e.g.

$$E\left[\frac{X}{Y}\right] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} \frac{x}{y}\,f(x, y)\,dx\,dy, \qquad E[XY] = \int_{-\infty}^{\infty}\int_{-\infty}^{\infty} xy\,f(x, y)\,dx\,dy.$$

Discrete X and Y

$$E[g(X, Y)] = \sum_x \sum_y g(x, y)\,p(x, y).$$
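For continuous variables, E[g(X, Y)] is a double integral. The sketch below approximates it with a midpoint rule for the Example 3.6 density (the numerical method and grid size are assumptions, not part of the notes) and reproduces the two examples given above, E[XY] and E[X/Y]. Integrating each factor by hand gives 15/7 and 5/4 respectively.

```python
def f(x, y):
    """Joint p.d.f. of Example 3.6: 8x^2 / (7y^3) on 1 <= x, y <= 2."""
    return 8 * x ** 2 / (7 * y ** 3)


def expect(g, n=400):
    """E[g(X, Y)]: midpoint-rule double integral of g(x, y) f(x, y) over [1, 2] x [1, 2].
    Outside this square f is 0, so the infinite limits reduce to 1 and 2."""
    h = 1 / n
    total = 0.0
    for i in range(n):
        x = 1 + (i + 0.5) * h
        for j in range(n):
            y = 1 + (j + 0.5) * h
            total += g(x, y) * f(x, y)
    return total * h * h


E_XY = expect(lambda x, y: x * y)        # exact value 15/7
E_X_over_Y = expect(lambda x, y: x / y)  # exact value 5/4
print(E_XY, E_X_over_Y)
```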

3.15 Covariance and correlation

The covariance of X and Y is defined as follows: $\mathrm{Cov}(X, Y) = \sigma_{XY} = E(XY) - E(X)E(Y)$.

Notes

(a) If the random variables tend to increase together or decrease together, then the covariance will be positive, whereas if one random variable tends to increase as the other decreases, then the covariance will be negative.

(b) If X and Y are independent r.v.'s, then E(XY) = E(X)E(Y), so cov(X, Y) = 0. However, if cov(X, Y) = 0, it does not follow that X and Y are independent, unless X and Y are jointly (bivariate) Normal r.v.'s.

Correlation coefficient $= \rho = \mathrm{corr}(X, Y) = \dfrac{\mathrm{Cov}(X, Y)}{\sigma_X\,\sigma_Y}$.

Note

(a) The correlation coefficient is a number between -1 and 1, i.e. $-1 \le \rho \le 1$.

(b) If the random variables tend to increase together or decrease together, then ρ will be positive, whereas if one random variable tends to increase as the other decreases, then ρ will be negative.

(c) It measures the degree of linear relationship between the two random variables X and Y. If X and Y are independent random variables, then ρ = 0; ρ can also be 0 when X and Y are related in a purely non-linear way, so ρ = 0 does not by itself mean there is no relationship.

You will study correlation in more detail in the Econometric part of the course with David Winter.

Example 3.7 In Example 3.2, are X and Y correlated?

Solution Below is the joint or bivariate probability distribution of X and Y:

| Y \ X | -2 | -1 | 0 | 1 | 2 |
|---|---|---|---|---|---|
| 10 | 0.09 | 0.15 | 0.27 | 0.25 | 0.04 |
| 20 | 0.01 | 0.05 | 0.08 | 0.05 | 0.01 |

The marginal distributions of X and Y are

| x | -2 | -1 | 0 | 1 | 2 | Total |
|---|---|---|---|---|---|---|
| P(X = x) or $p_X(x)$ | 0.10 | 0.20 | 0.35 | 0.30 | 0.05 | 1.00 |

and

| y | 10 | 20 | Total |
|---|---|---|---|
| P(Y = y) or $p_Y(y)$ | 0.80 | 0.20 | 1.00 |
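A sketch of the covariance and correlation calculation for Example 3.7, using exact fractions for the Example 3.2 table. `E` is a hypothetical helper implementing $E[g(X, Y)] = \sum_x \sum_y g(x, y)\,p(x, y)$ from 3.14.

```python
import math
from fractions import Fraction

xs = [-2, -1, 0, 1, 2]
rows = {
    10: ["0.09", "0.15", "0.27", "0.25", "0.04"],
    20: ["0.01", "0.05", "0.08", "0.05", "0.01"],
}
joint = {(x, y): Fraction(p) for y, probs in rows.items() for x, p in zip(xs, probs)}


def E(g):
    """E[g(X, Y)] = sum over all cells of g(x, y) p(x, y)."""
    return sum((g(x, y) * p for (x, y), p in joint.items()), Fraction(0))


E_X, E_Y = E(lambda x, y: x), E(lambda x, y: y)
cov = E(lambda x, y: x * y) - E_X * E_Y  # Cov(X, Y) = E(XY) - E(X)E(Y)
var_X = E(lambda x, y: x * x) - E_X ** 2
var_Y = E(lambda x, y: y * y) - E_Y ** 2
rho = float(cov) / math.sqrt(var_X * var_Y)
print(cov, rho)  # 0 0.0

# Independence check for one cell: p(-2, 10) against p_X(-2) p_Y(10).
p_X_minus2 = joint[(-2, 10)] + joint[(-2, 20)]
p_Y10 = sum(joint[(x, 10)] for x in xs)
print(joint[(-2, 10)], p_X_minus2 * p_Y10)  # 9/100 2/25
```

The covariance comes out as exactly 0, so X and Y are uncorrelated, yet p(-2, 10) = 0.09 differs from p_X(-2) p_Y(10) = 0.08, so they are not independent. This is note (b) of 3.15 in action.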

Example 3.8 In Example 3.6

(i) Calculate E(X), Var(X), E(Y) and cov(X, Y).

(ii) Are X and Y independent?

3.14 Useful results on expectations and variances

(i) $E(aX + bY) = aE(X) + bE(Y)$, where a and b are constants.

(ii) $\mathrm{Var}(aX + bY) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y) + 2ab\,\mathrm{cov}(X, Y)$.

Result (i) can be extended to any n random variables $X_1, X_2, \ldots, X_n$:

$$E(a_1X_1 + a_2X_2 + \cdots + a_nX_n) = a_1E(X_1) + a_2E(X_2) + \cdots + a_nE(X_n).$$

When X and Y are independent, then

(iii) $\mathrm{Var}(aX + bY) = a^2\,\mathrm{Var}(X) + b^2\,\mathrm{Var}(Y)$

(iv) $E(XY) = E(X)E(Y)$, so $\mathrm{cov}(X, Y) = 0$.

Results (iii) and (iv) can be extended to any n independent random variables $X_1, X_2, \ldots, X_n$:

(iii)* $\mathrm{Var}(a_1X_1 + a_2X_2 + \cdots + a_nX_n) = a_1^2\,\mathrm{Var}(X_1) + a_2^2\,\mathrm{Var}(X_2) + \cdots + a_n^2\,\mathrm{Var}(X_n)$

(iv)* $E(X_1X_2\cdots X_n) = E(X_1)\,E(X_2)\cdots E(X_n)$.
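Results (i) and (ii) can be verified exactly on any small joint table. The table in this sketch is hypothetical, chosen so that cov(X, Y) ≠ 0 and the 2ab cov(X, Y) term actually matters; a = 2 and b = -3 are arbitrary.

```python
from fractions import Fraction

# Hypothetical joint distribution of (X, Y), chosen so that Cov(X, Y) is non-zero.
joint = {
    (0, 0): Fraction(4, 10), (1, 1): Fraction(4, 10),
    (0, 1): Fraction(1, 10), (1, 0): Fraction(1, 10),
}


def E(g):
    """E[g(X, Y)] = sum over all cells of g(x, y) p(x, y)."""
    return sum((g(x, y) * p for (x, y), p in joint.items()), Fraction(0))


def var(g):
    """Var[g(X, Y)] = E[g^2] - (E[g])^2."""
    return E(lambda x, y: g(x, y) ** 2) - E(g) ** 2


a, b = 2, -3
cov = E(lambda x, y: x * y) - E(lambda x, y: x) * E(lambda x, y: y)

# Result (i): compute E(aX + bY) directly and from the formula.
lhs_mean = E(lambda x, y: a * x + b * y)
rhs_mean = a * E(lambda x, y: x) + b * E(lambda x, y: y)

# Result (ii): compute Var(aX + bY) directly and from the formula.
lhs_var = var(lambda x, y: a * x + b * y)
rhs_var = a ** 2 * var(lambda x, y: x) + b ** 2 * var(lambda x, y: y) + 2 * a * b * cov
print(lhs_mean, rhs_mean)  # -1/2 -1/2
print(lhs_var, rhs_var)    # 29/20 29/20
```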

3.15 Combinations of independent Normal random variables

Suppose $X_1 \sim N(\mu_1, \sigma_1^2)$, $X_2 \sim N(\mu_2, \sigma_2^2)$, $X_3 \sim N(\mu_3, \sigma_3^2)$, ..., and $X_n \sim N(\mu_n, \sigma_n^2)$, and $X_1, X_2, \ldots, X_n$ are independent random variables. Then if

$$Y = a_1X_1 + a_2X_2 + a_3X_3 + \cdots + a_nX_n,$$

where $a_1, a_2, \ldots, a_n$ are constants,

$$Y \sim N\left(a_1\mu_1 + a_2\mu_2 + a_3\mu_3 + \cdots + a_n\mu_n,\ a_1^2\sigma_1^2 + a_2^2\sigma_2^2 + \cdots + a_n^2\sigma_n^2\right),$$

i.e. $Y \sim N\left(\sum a_i\mu_i,\ \sum a_i^2\sigma_i^2\right)$.
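A sketch of this result in Python: `combination_params` (a hypothetical helper) returns $\sum a_i\mu_i$ and $\sum a_i^2\sigma_i^2$, and a seeded simulation compares those values, and one Normal probability from `statistics.NormalDist`, with draws of Y built directly from independent Normal $X_i$. All numbers are made up for illustration, and Monte Carlo agreement is only approximate.

```python
import random
import statistics


def combination_params(a, mu, sigma2):
    """Mean and variance of Y = sum a_i X_i for independent X_i ~ N(mu_i, sigma2_i)."""
    mean = sum(ai * mi for ai, mi in zip(a, mu))
    variance = sum(ai ** 2 * s2 for ai, s2 in zip(a, sigma2))
    return mean, variance


# Hypothetical constants and parameters, for illustration only.
a = [2.0, -1.0, 0.5]
mu = [1.0, 3.0, -2.0]
sigma2 = [1.0, 4.0, 9.0]
mean, variance = combination_params(a, mu, sigma2)
print(mean, variance)  # -2.0 10.25

# Simulation check: draw the X_i independently and form Y directly.
rng = random.Random(12122)
ys = [sum(ai * rng.gauss(mi, s2 ** 0.5) for ai, mi, s2 in zip(a, mu, sigma2))
      for _ in range(100_000)]
target = statistics.NormalDist(mean, variance ** 0.5)
print(statistics.fmean(ys), statistics.variance(ys))
print(sum(y <= 0 for y in ys) / len(ys), target.cdf(0))  # simulated vs Normal P(Y <= 0)
```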

In particular, suppose $X_1, X_2, \ldots, X_n$ form a random sample from a Normal population with mean µ and variance σ², so that $\mu_1 = \mu_2 = \mu_3 = \cdots = \mu_n = \mu$ and $\sigma_1^2 = \sigma_2^2 = \cdots = \sigma_n^2 = \sigma^2$. Then

$$Y \sim N\left(\mu\sum a_i,\ \sigma^2\sum a_i^2\right).$$

Further, suppose that $a_1 = a_2 = a_3 = \cdots = a_n = \frac{1}{n}$; then

$$Y = \frac{X_1 + X_2 + \cdots + X_n}{n} = \bar{X} \quad\text{and}\quad \bar{X} \sim N\left(\mu, \frac{\sigma^2}{n}\right).$$
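The sample-mean result can be checked by simulation in the same way. This sketch assumes a hypothetical population with µ = 50, σ = 10 and samples of size n = 25, so the theory above predicts $\bar{X} \sim N(50, 4)$; the seed and the number of repetitions are arbitrary.

```python
import random
import statistics

# Hypothetical population and sample size, for illustration only.
mu, sigma, n = 50.0, 10.0, 25
reps = 20_000
rng = random.Random(3)

# Each X-bar is the mean of n independent N(mu, sigma^2) draws, i.e. a_i = 1/n.
xbars = [statistics.fmean(rng.gauss(mu, sigma) for _ in range(n)) for _ in range(reps)]

theory = statistics.NormalDist(mu, sigma / n ** 0.5)  # N(mu, sigma^2 / n)
print(statistics.fmean(xbars), theory.mean)           # both close to 50
print(statistics.variance(xbars), theory.variance)    # both close to 4
print(sum(x <= 52 for x in xbars) / reps, theory.cdf(52))
```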