Multivariate Gaussianization for Data Processing

Multivariate Gaussianization for Data Processing

Multivariate Gaussianization for Data Processing

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

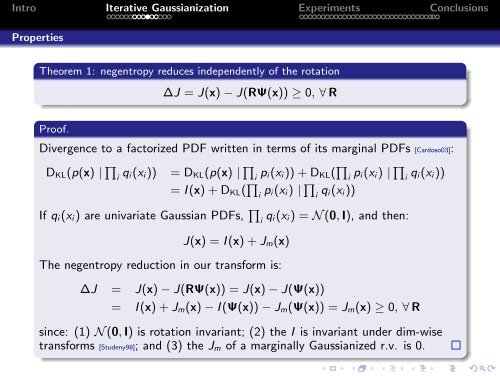

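Properties

Theorem 1: the negentropy reduction does not depend on the rotation:

$$\Delta J = J(\mathbf{x}) - J(\mathbf{R}\Psi(\mathbf{x})) \geq 0, \quad \forall \mathbf{R}$$

Proof. The divergence to a factorized PDF can be written in terms of the marginal PDFs $p_i(x_i)$ of $p(\mathbf{x})$ [Cardoso03]:

$$
\begin{aligned}
D_{KL}\Big(p(\mathbf{x}) \,\Big\|\, \prod_i q_i(x_i)\Big)
&= D_{KL}\Big(p(\mathbf{x}) \,\Big\|\, \prod_i p_i(x_i)\Big) + D_{KL}\Big(\prod_i p_i(x_i) \,\Big\|\, \prod_i q_i(x_i)\Big) \\
&= I(\mathbf{x}) + D_{KL}\Big(\prod_i p_i(x_i) \,\Big\|\, \prod_i q_i(x_i)\Big)
\end{aligned}
$$

where $I(\mathbf{x})$ is the multi-information of $\mathbf{x}$. If the $q_i(x_i)$ are standard univariate Gaussian PDFs, then $\prod_i q_i(x_i) = \mathcal{N}(\mathbf{0}, \mathbf{I})$. The negentropy $J(\mathbf{x}) = D_{KL}(p(\mathbf{x}) \,\|\, \mathcal{N}(\mathbf{0}, \mathbf{I}))$ then splits into the multi-information plus the marginal negentropy $J_m(\mathbf{x}) = D_{KL}(\prod_i p_i(x_i) \,\|\, \mathcal{N}(\mathbf{0}, \mathbf{I}))$:

$$J(\mathbf{x}) = I(\mathbf{x}) + J_m(\mathbf{x})$$

The negentropy reduction in our transform is:

$$
\begin{aligned}
\Delta J &= J(\mathbf{x}) - J(\mathbf{R}\Psi(\mathbf{x})) = J(\mathbf{x}) - J(\Psi(\mathbf{x})) \\
&= I(\mathbf{x}) + J_m(\mathbf{x}) - I(\Psi(\mathbf{x})) - J_m(\Psi(\mathbf{x})) = J_m(\mathbf{x}) \geq 0, \quad \forall \mathbf{R}
\end{aligned}
$$

since: (1) $\mathcal{N}(\mathbf{0}, \mathbf{I})$ is rotation invariant, and the KL divergence does not change when the same rotation is applied to both of its arguments; (2) $I$ is invariant under dimension-wise transforms [Studeny98]; and (3) the $J_m$ of a marginally Gaussianized random variable is 0.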
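As a sanity check of Theorem 1, the sketch below evaluates every term in closed form for a zero-mean Gaussian $\mathbf{x} \sim \mathcal{N}(\mathbf{0}, \mathbf{S})$. Gaussian data is an assumption made only for illustration: it makes all divergences analytic, and the marginal Gaussianization $\Psi$ reduces to dividing each dimension by its standard deviation, since $\Phi^{-1}(F_i(x_i)) = x_i / \sqrt{S_{ii}}$. The covariance `S`, the helper names, and the product-of-Givens rotation are hypothetical choices, not part of the original method.

```python
import math
import random

# Illustration only (assumption): x ~ N(0, S), so J, I and J_m are closed-form KLs.
# "Negentropy" follows the slide's definition: KL(p(x) || N(0, I)).

def det(m):
    """Determinant by Gaussian elimination with partial pivoting."""
    a = [row[:] for row in m]
    n, d = len(a), 1.0
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(a[r][k]))
        if a[p][k] == 0.0:
            return 0.0
        if p != k:
            a[k], a[p] = a[p], a[k]
            d = -d
        d *= a[k][k]
        for r in range(k + 1, n):
            f = a[r][k] / a[k][k]
            for c in range(k, n):
                a[r][c] -= f * a[k][c]
    return d

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def transpose(a):
    return [list(row) for row in zip(*a)]

def negentropy(s):
    """J = KL(N(0, S) || N(0, I)) = 0.5 * (tr S - d - ln det S)."""
    d = len(s)
    return 0.5 * (sum(s[i][i] for i in range(d)) - d - math.log(det(s)))

def multi_information(s):
    """I = KL(N(0, S) || prod_i N(0, S_ii)) = 0.5 * (sum_i ln S_ii - ln det S)."""
    return 0.5 * (sum(math.log(s[i][i]) for i in range(len(s))) - math.log(det(s)))

def marginal_negentropy(s):
    """J_m = sum_i KL(N(0, S_ii) || N(0, 1))."""
    return sum(0.5 * (s[i][i] - 1.0 - math.log(s[i][i])) for i in range(len(s)))

def marginal_gaussianization(s):
    """Psi for zero-mean Gaussian marginals: x_i -> x_i / sqrt(S_ii).
    Returns Cov(Psi(x)), i.e. the correlation matrix of x."""
    d = len(s)
    return [[s[i][j] / math.sqrt(s[i][i] * s[j][j]) for j in range(d)] for i in range(d)]

def givens(d, i, j, theta):
    """Rotation by theta in the (i, j) coordinate plane."""
    g = [[float(r == c) for c in range(d)] for r in range(d)]
    g[i][i] = g[j][j] = math.cos(theta)
    g[i][j], g[j][i] = -math.sin(theta), math.sin(theta)
    return g

def random_rotation(d, rng):
    """Product of Givens rotations over every plane: orthogonal, det +1
    (not uniformly distributed over rotations, which this check does not need)."""
    r = [[float(a == b) for b in range(d)] for a in range(d)]
    for i in range(d):
        for j in range(i + 1, d):
            r = matmul(givens(d, i, j, rng.uniform(0.0, 2.0 * math.pi)), r)
    return r

# Hypothetical positive-definite covariance with non-unit variances.
S = [[4.0, 1.2, 0.5],
     [1.2, 0.5, 0.1],
     [0.5, 0.1, 2.0]]

J, I, Jm = negentropy(S), multi_information(S), marginal_negentropy(S)
print(f"J(x) = {J:.6f}   I(x) + J_m(x) = {I + Jm:.6f}")

C = marginal_gaussianization(S)  # Cov(Psi(x)), unit diagonal
rng = random.Random(0)
for _ in range(3):
    R = random_rotation(3, rng)
    RC = matmul(matmul(R, C), transpose(R))  # Cov(R Psi(x))
    print(f"Delta J = {J - negentropy(RC):.6f}   J_m(x) = {Jm:.6f}")
```

Every rotation should print the same $\Delta J$, equal to $J_m(\mathbf{x})$ up to rounding. For non-Gaussian data the same quantities would have to be estimated (e.g. with entropy or density estimators), so this check only illustrates the identities rather than the full iterative method.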