- Page 1 and 2:

TEAMFLY

- Page 6:

Data Mining Techniques For Marketin

- Page 10:

To Stephanie, Sasha, and Nathaniel.

- Page 16:

xx Acknowledgments And, of course,

- Page 24:

xxiv Introduction Even if the techn

- Page 30:

Contents Acknowledgments About the

- Page 34:

Contents vii Learning Things That A

- Page 38:

Contents ix Different Kinds of Chur

- Page 42:

Contents xi Chapter 8 How Does a Ne

- Page 46:

Contents xiii Case Study: Who Is Us

- Page 50:

Contents xv Chapter 14 Data Mining

- Page 54:

Contents xvii Availability of Train

- Page 58:

CHAPTER 1 Why and What Is Data Mini

- Page 62:

Why and What Is Data Mining? 3 In t

- Page 66:

Why and What Is Data Mining? 5 many

- Page 70:

Why and What Is Data Mining? 7 DATA

- Page 74:

Why and What Is Data Mining? 9 Clas

- Page 78:

Why and What Is Data Mining? 11 cho

- Page 82:

Why and What Is Data Mining? 13 man

- Page 86:

Why and What Is Data Mining? 15 Com

- Page 90:

Why and What Is Data Mining? 17 sit

- Page 94:

Why and What Is Data Mining? 19 And

- Page 100:

22 Chapter 2 Data is at the heart o

- Page 104:

24 Chapter 2 Marketing literature f

- Page 108:

26 Chapter 2 What Is the Virtuous C

- Page 112:

28 Chapter 2 that lurking inside th

- Page 116:

30 Chapter 2 possible to identify t

- Page 120:

32 Chapter 2 All of these measureme

- Page 124:

34 Chapter 2 Data mining results ch

- Page 128:

36 Chapter 2 Quota Savings Randomiz

- Page 132:

38 Chapter 2 Some of these fields r

- Page 136:

40 Chapter 2 How Data Mining Was Ap

- Page 140:

42 Chapter 2 smaller group of likel

- Page 144:

44 Chapter 3 years, the authors hav

- Page 148:

46 Chapter 3 Ford is the only one w

- Page 152:

48 Chapter 3 Figure 3.2 shows anoth

- Page 156:

50 Chapter 3 The data mining method

- Page 160:

52 Chapter 3 In the most general se

- Page 164:

54 Chapter 3 of maleness. It seems

- Page 168:

56 Chapter 3 Step One: Translate th

- Page 172:

58 Chapter 3 ■■ ■■ ■■ C

- Page 176:

60 Chapter 3 Data mining is often p

- Page 180:

62 Chapter 3 These operational syst

- Page 184:

64 Chapter 3 Often, variables that

- Page 188:

66 Chapter 3 90% 80% 70% 60% 50% 40

- Page 192:

68 Chapter 3 advantage as smarter p

- Page 196:

70 Chapter 3 Including Multiple Tim

- Page 200:

72 Chapter 3 People often find it h

- Page 204:

74 Chapter 3 When missing values mu

- Page 208:

76 Chapter 3 category, such as bake

- Page 212:

78 Chapter 3 Step Eight: Assess Mod

- Page 216:

80 Chapter 3 Percent of Row Frequen

- Page 220:

82 Chapter 3 An example helps to ex

- Page 224:

84 Chapter 3 Lift Value 1.5 1.4 1.3

- Page 228:

86 Chapter 3 before. The newly disc

- Page 232:

88 Chapter 4 comes from traditional

- Page 236:

90 Chapter 4 based on price will no

- Page 240:

92 Chapter 4 The problem with this

- Page 244:

94 Chapter 4 DATA BY CENSUS TRACT T

- Page 248:

96 Chapter 4 Actually, the first le

- Page 252:

98 Chapter 4 ROC CURVES Models are

- Page 256:

100 Chapter 4 The upper, curved lin

- Page 260:

102 Chapter 4 BENEFIT (continued) A

- Page 264:

104 Chapter 4 A smaller, better-tar

- Page 268:

106 Chapter 4 Reaching the People M

- Page 272:

108 Chapter 4 Difference in respons

- Page 276:

110 Chapter 4 Among the most useful

- Page 280:

112 Chapter 4 More typically, a bus

- Page 284:

114 Chapter 4 Nonrepayment of debt

- Page 288:

116 Chapter 4 Making Recommendation

- Page 292:

118 Chapter 4 Retention campaigns c

- Page 296:

120 Chapter 4 information than simp

- Page 300:

122 Chapter 4 From a data mining pe

- Page 304:

124 Chapter 5 What is remarkable an

- Page 308:

126 Chapter 5 TIP The simplest expl

- Page 312:

128 Chapter 5 Time Series Histogram

- Page 316:

130 Chapter 5 The Central Limit The

- Page 320:

132 Chapter 5 A QUESTION OF TERMINO

- Page 324:

134 Chapter 5 small probability. Pr

- Page 328:

136 Chapter 5 Cross-Tabulations Tim

- Page 332:

138 Chapter 5 In addition, various

- Page 336:

140 Chapter 5 the challenger offer.

- Page 340:

142 Chapter 5 Table 5.2 The 95 Perc

- Page 344:

144 Chapter 5 Table 5.3 The 95 Perc

- Page 348:

146 Chapter 5 What the Confidence I

- Page 352:

148 Chapter 5 says that with contro

- Page 356:

150 Chapter 5 The appeal of the chi

- Page 360:

152 Chapter 5 distribution depends

- Page 364:

154 Chapter 5 Table 5.7 Chi-Square

- Page 368:

156 Chapter 5 Table 5.8 Chi-Square

- Page 372:

158 Chapter 5 100% 80% 60% 40% 20%

- Page 376:

160 Chapter 5 There Is a Lot of Dat

- Page 380:

162 Chapter 5 Figure 5.11 shows ano

- Page 386:

CHAPTER 6 Decision Trees Decision t

- Page 390:

Decision Trees 167 thinks of a part

- Page 394:

Decision Trees 169 Scoring Figure 6

- Page 398:

Decision Trees 171 50% tot units de

- Page 402:

Decision Trees 173 The first split

- Page 406:

Decision Trees 175 the best splits,

- Page 410:

Decision Trees 177 Purity and Diver

- Page 414:

Decision Trees 179 Entropy Reductio

- Page 418:

Decision Trees 181 COMPARING TWO SP

- Page 422:

Decision Trees 183 statistical rela

- Page 426:

Decision Trees 185 The CART Pruning

- Page 430:

Decision Trees 187 COMPARING MISCLA

- Page 434:

Decision Trees 189 Picking the Best

- Page 438:

Decision Trees 191 The trees grown

- Page 442:

Decision Trees 193 WARNING Small no

- Page 446:

Decision Trees 195 Taking Cost into

- Page 450:

Decision Trees 197 Voter #1 and Vot

- Page 454:

Decision Trees 199 Neural Trees One

- Page 458:

Decision Trees 201 part of the targ

- Page 462:

Decision Trees 203 Decision Trees i

- Page 466:

Decision Trees 205 Applying Decisio

- Page 470:

Decision Trees 207 USING DECISION T

- Page 474:

Decision Trees 209 enjoyed using th

- Page 480:

212 Chapter 7 probing neural networ

- Page 484:

214 Chapter 7 of the value of the p

- Page 488:

216 Chapter 7 Table 7.1 Common Feat

- Page 492:

218 Chapter 7 Year_Built (1923), su

- Page 496:

220 Chapter 7 The solution is to in

- Page 500:

222 Chapter 7 Feed-forward networks

- Page 504:

224 Chapter 7 magnitude of the weig

- Page 508:

226 Chapter 7 Feed-Forward Neural N

- Page 512:

228 Chapter 7 last purchase age gen

- Page 516:

230 Chapter 7 TRAINING AS OPTIMIZAT

- Page 520:

232 Chapter 7 networks now takes se

- Page 524:

234 Chapter 7 Size of Training Set

- Page 528:

236 Chapter 7 This transformation (

- Page 532:

238 Chapter 7 Features with Ordered

- Page 536:

240 Chapter 7 be mapped to -1.0, -0

- Page 540:

242 Chapter 7 pattern the network f

- Page 544:

244 Chapter 7 1.0 B B B B A A B 0.0

- Page 548:

246 Chapter 7 Notice that the time-

- Page 552:

248 Chapter 7 2. Measure the output

- Page 556:

250 Chapter 7 The output units comp

- Page 560:

252 Chapter 7 unknown instance is f

- Page 564:

254 Chapter 7 The story continues w

- Page 570:

CHAPTER 8 Nearest Neighbor Approach

- Page 574:

Memory-Based Reasoning and Collabor

- Page 578:

Memory-Based Reasoning and Collabor

- Page 582:

Memory-Based Reasoning and Collabor

- Page 586:

Memory-Based Reasoning and Collabor

- Page 590:

Memory-Based Reasoning and Collabor

- Page 594:

Memory-Based Reasoning and Collabor

- Page 598:

Memory-Based Reasoning and Collabor

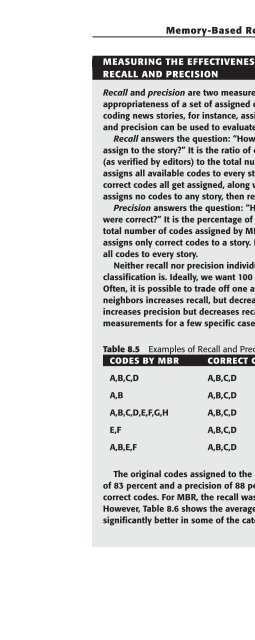

- Page 604:

274 Chapter 8 MEASURING THE EFFECTI

- Page 608:

276 Chapter 8 Gender is an example

- Page 612:

278 Chapter 8 Furthermore, there is

- Page 616:

280 Chapter 8 In Table 8.12, the fi

- Page 620:

282 Chapter 8 Table 8.16 Confidence

- Page 624:

284 Chapter 8 Comparing Profiles On

- Page 628:

286 Chapter 8 produces better resul

- Page 632:

288 Chapter 9 In this shopping bask

- Page 636:

290 Chapter 9 The order is the fund

- Page 640:

292 Chapter 9 Order Characteristics

- Page 644:

294 Chapter 9 450 400 Mail Drop 350

- Page 648:

296 Chapter 9 Association Rules One

- Page 652:

298 Chapter 9 explanation: Is the d

- Page 656:

300 Chapter 9 This simple co-occurr

- Page 660:

302 Chapter 9 Detergent 1 0 0 1 1 S

- Page 664:

304 Chapter 9 Table 9.3 Transaction

- Page 668:

306 Chapter 9 The number of combina

- Page 672:

308 Chapter 9 Data Quality The data

- Page 676:

310 Chapter 9 Table 9.6 Confidence

- Page 680:

312 Chapter 9 For instance, in the

- Page 684:

314 Chapter 9 A pizza restaurant ha

- Page 688:

316 Chapter 9 TIP Adding virtual tr

- Page 692:

318 Chapter 9 Sequential Analysis U

- Page 696:

320 Chapter 9 Market basket analysi

- Page 700:

322 Chapter 10 often yields very in

- Page 704:

324 Chapter 10 Oops! These edges in

- Page 708:

326 Chapter 10 A C D Pregel River N

- Page 712:

328 Chapter 10 leaves the car in th

- Page 716:

330 Chapter 10 Directed Graphs The

- Page 720:

332 Chapter 10 The Kleinberg Algori

- Page 724:

334 Chapter 10 Identifying the Cand

- Page 728:

336 Chapter 10 Hubs and Authorities

- Page 732:

338 Chapter 10 353 3658 00:00:41

- Page 736:

340 Chapter 10 The proces

- Page 740:

342 Chapter 10 USING SQL TO COLOR A

- Page 744:

344 Chapter 10 customer behav

- Page 748:

346 Chapter 10 Second, link analysi

- Page 754:

CHAPTER 11 Automatic Cluster Detect

- Page 758:

Automatic Cluster Detection 351 the

- Page 762:

Automatic Cluster Detection 353 The

- Page 766:

Automatic Cluster Detection 355 the

- Page 770:

Automatic Cluster Detection 357 X 2

- Page 774:

Automatic Cluster Detection 359 thi

- Page 778:

Automatic Cluster Detection 361 DIS

- Page 782:

Automatic Cluster Detection 363 Man

- Page 786:

Automatic Cluster Detection 365 Use

- Page 790:

Automatic Cluster Detection 367 The

- Page 794:

Automatic Cluster Detection 369 sub

- Page 798:

Automatic Cluster Detection 371 Dis

- Page 802:

Automatic Cluster Detection 373 is

- Page 806:

Automatic Cluster Detection 375 sig

- Page 810:

Automatic Cluster Detection 377 Cre

- Page 814:

Automatic Cluster Detection 379 Pop

- Page 818:

Automatic Cluster Detection 381 Les

- Page 822:

CHAPTER 12 Knowing When to Worry: H

- Page 826:

Hazard Functions and Survival Analy

- Page 830:

Hazard Functions and Survival Analy

- Page 834:

Hazard Functions and Survival Analy

- Page 838:

Hazard Functions and Survival Analy

- Page 842:

Hazard Functions and Survival Analy

- Page 846:

Hazard Functions and Survival Analy

- Page 850:

Hazard Functions and Survival Analy

- Page 854:

Hazard Functions and Survival Analy

- Page 858:

Hazard Functions and Survival Ana

- Page 862:

Hazard Functions and Survival Analy

- Page 866:

Hazard Functions and Survival Analy

- Page 870:

Hazard Functions and Survival Analy

- Page 874:

Hazard Functions and Survival Analy

- Page 878:

Hazard Functions and Survival Analy

- Page 882:

Hazard Functions and Survival Analy

- Page 886:

Hazard Functions and Survival Analy

- Page 890:

Hazard Functions and Survival Analy

- Page 894:

Hazard Functions and Survival Analy

- Page 900:

422 Chapter 13 problems involving c

- Page 904:

424 Chapter 13 template for the hum

- Page 908:

426 Chapter 13 generation n generat

- Page 912:

428 Chapter 13 SIMPLE OVERVIEW OF G

- Page 916:

430 Chapter 13 Table 13.3 The Popul

- Page 920:

432 Chapter 13 Table 13.5 The Popul

- Page 924:

434 Chapter 13 So far, this problem

- Page 928:

436 Chapter 13 schema match the cor

- Page 932:

438 Chapter 13 The Schema Theorem e

- Page 936:

440 Chapter 13 The first problem fa

- Page 940:

442 Chapter 13 trained to fill in a

- Page 944:

444 Chapter 13 Figure 13.7 The Gena

- Page 948:

446 Chapter 13 Lessons Learned Gene

- Page 952:

448 Chapter 14 has largely replaced

- Page 956:

450 Chapter 14 NO CUSTOMER RELATION

- Page 960:

452 Chapter 14 ■■ ■■ Automa

- Page 964:

454 Chapter 14 Such agent relations

- Page 968:

456 Chapter 14 Larger businesses, o

- Page 972:

458 Chapter 14 Subscription Relatio

- Page 976:

Respond from Some Channel Not Pay 4

- Page 980:

462 Chapter 14 Who Are the Prospect

- Page 984:

464 Chapter 14 What Is the Role of

- Page 988:

466 Chapter 14 New sales come in th

- Page 992:

468 Chapter 14 AN ENGINE FOR CHURN

- Page 996:

470 Chapter 14 Winback Once custome

- Page 1004:

474 Chapter 15 believe that, over t

- Page 1008:

476 Chapter 15 The level of abstrac

- Page 1012:

478 Chapter 15 effort. One of the g

- Page 1016:

480 Chapter 15 WHAT IS A RELATIONAL

- Page 1020:

482 Chapter 15 WHAT IS A RELATIONAL

- Page 1024:

484 Chapter 15 warehouse must be re

- Page 1028:

486 Chapter 15 One or more of these

- Page 1032:

488 Chapter 15 Central Repository T

- Page 1036:

490 Chapter 15 BACKGROUND ON PARALL

- Page 1040:

492 Chapter 15 important type of da

- Page 1044:

494 Chapter 15 The data warehouse i

- Page 1048:

496 Chapter 15 In the middle are of

- Page 1052:

498 Chapter 15 Shop Date Product sh

- Page 1056:

500 Chapter 15 The third type of cu

- Page 1060:

502 Chapter 15 ranges of customer v

- Page 1064:

504 Chapter 15 Conformed Dimensions

- Page 1068:

506 Chapter 15 In diagrams, the dim

- Page 1072:

508 Chapter 15 One of the problems

- Page 1076:

510 Chapter 15 graph. Neural networ

- Page 1080:

512 Chapter 15 A typical data wareh

- Page 1084:

514 Chapter 16 A Customer-Centric O

- Page 1088:

516 Chapter 16 data is not readily

- Page 1092:

518 Chapter 16 Operational Data (bi

- Page 1096:

520 Chapter 16 Collecting the Right

- Page 1100:

522 Chapter 16 devising new product

- Page 1104:

524 Chapter 16 direct mail decrease

- Page 1108:

526 Chapter 16 A new data mining gr

- Page 1112:

528 Chapter 16 Scoring is not compl

- Page 1116:

530 Chapter 16 three major modules,

- Page 1120:

532 Chapter 16 What is appealing ab

- Page 1124:

534 Chapter 16 account future growt

- Page 1128:

536 Chapter 16 Comprehensible Outpu

- Page 1132:

538 Chapter 16 step is to create a

- Page 1136:

540 Chapter 17 budget for buying ha

- Page 1140:

542 Chapter 17 It is perhaps unfort

- Page 1144:

544 Chapter 17 The distribution of

- Page 1148:

546 Chapter 17 Before ignoring a co

- Page 1152:

548 Chapter 17 Figure 17.4 Angoss K

- Page 1156:

550 Chapter 17 ■■ True numeric

- Page 1160:

552 Chapter 17 Dates and Times Date

- Page 1164:

554 Chapter 17 Neural networks and

- Page 1168:

556 Chapter 17 One of the most impo

- Page 1172:

558 Chapter 17 Constructing the Cus

- Page 1176:

560 Chapter 17 Identifying the Cust

- Page 1180:

562 Chapter 17 business customers o

- Page 1184:

564 Chapter 17 Making Progress Alth

- Page 1188:

566 Chapter 17 Changes over Time Pe

- Page 1192:

568 Chapter 17 DM TM WEB Credit Car

- Page 1196:

570 Chapter 17 When the lookup tabl

- Page 1200:

572 Chapter 17 Pivoting Regular Tim

- Page 1204:

574 Chapter 17 Summarizing Transact

- Page 1208:

576 Chapter 17 One method of calcul

- Page 1212:

578 Chapter 17 TIP When many differ

- Page 1216:

580 Chapter 17 Revolvers, Transacto

- Page 1220:

582 Chapter 17 Table 17.5 Six Credi

- Page 1224:

584 Chapter 17 Table 17.6 Potential

- Page 1228:

586 Chapter 17 $2,000 $1,500 $1,000

- Page 1232:

588 Chapter 17 120 Payment as Multi

- Page 1236:

590 Chapter 17 The Dark Side of Dat

- Page 1240:

592 Chapter 17 Dirty Data Dirty dat

- Page 1244:

594 Chapter 17 and so on. However,

- Page 1248:

596 Chapter 17 varies from tool to

- Page 1252:

598 Chapter 18 Getting Started The

- Page 1256:

600 Chapter 18 These are areas wher

- Page 1260:

602 Chapter 18 proof-of-concept pro

- Page 1264:

604 Chapter 18 Although the details

- Page 1268:

606 Chapter 18 less likely to churn

- Page 1272:

608 Chapter 18 from one record to a

- Page 1276:

610 Chapter 18 are appropriate for

- Page 1280:

612 Chapter 18 serial number and ph

- Page 1284:

614 Chapter 18 plan allows. Since t

- Page 1288:

616 Index analysis differential res

- Page 1292:

618 Index auxiliary information, 56

- Page 1296:

620 Index champion-challenger appro

- Page 1300:

622 Index creative process, data mi

- Page 1304:

624 Index data (continued) missing

- Page 1308:

626 Index discrete outcomes, classi

- Page 1312:

628 Index genetic algorithms case s

- Page 1316:

630 Index intuition, data explorati

- Page 1320:

632 Index memory-based reasoning (M

- Page 1324:

634 Index new customer information

- Page 1328:

636 Index proof-of-concept projects

- Page 1332:

638 Index response, survey response

- Page 1336:

640 Index SQL data, time series ana

- Page 1340:

642 Index testing (continued) KS (K