cloudera-spark


Developing Spark Applications

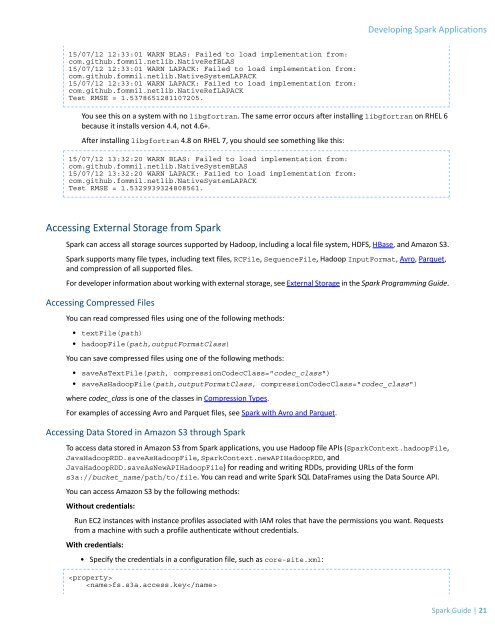

15/07/12 12:33:01 WARN BLAS: Failed to load implementation from: com.github.fommil.netlib.NativeRefBLAS
15/07/12 12:33:01 WARN LAPACK: Failed to load implementation from: com.github.fommil.netlib.NativeSystemLAPACK
15/07/12 12:33:01 WARN LAPACK: Failed to load implementation from: com.github.fommil.netlib.NativeRefLAPACK
Test RMSE = 1.5378651281107205.

You see this output on a system with no libgfortran. The same warnings appear after installing libgfortran on RHEL 6, because that installs version 4.4, not 4.6+.

After installing libgfortran 4.8 on RHEL 7, you should see something like this:

15/07/12 13:32:20 WARN BLAS: Failed to load implementation from: com.github.fommil.netlib.NativeSystemBLAS
15/07/12 13:32:20 WARN LAPACK: Failed to load implementation from: com.github.fommil.netlib.NativeSystemLAPACK
Test RMSE = 1.5329939324808561.
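
To check quickly which implementations failed to load, you can scan the driver output for these warnings. The following is a minimal sketch, not part of Spark or netlib-java; the function name failed_netlib_implementations is hypothetical, and the sketch assumes that each warning reports the class name right after "Failed to load implementation from:".

```python
import re

# Matches netlib-java load warnings in Spark driver output.
# \s* tolerates the class name being wrapped onto the next line.
_NETLIB_WARNING = re.compile(
    r"WARN (BLAS|LAPACK): Failed to load implementation from:\s*"
    r"(com\.github\.fommil\.netlib\.\w+)"
)


def failed_netlib_implementations(log_text):
    """Return the short class names that failed to load, grouped by library."""
    failed = {"BLAS": [], "LAPACK": []}
    for kind, class_name in _NETLIB_WARNING.findall(log_text):
        short_name = class_name.rsplit(".", 1)[-1]
        if short_name not in failed[kind]:
            failed[kind].append(short_name)
    return failed


# Sample input copied from the RHEL 7 output above.
sample = (
    "15/07/12 13:32:20 WARN BLAS: Failed to load implementation from: "
    "com.github.fommil.netlib.NativeSystemBLAS\n"
    "15/07/12 13:32:20 WARN LAPACK: Failed to load implementation from: "
    "com.github.fommil.netlib.NativeSystemLAPACK\n"
    "Test RMSE = 1.5329939324808561.\n"
)
print(failed_netlib_implementations(sample))
```

If only the NativeSystem classes are listed and the NativeRef classes are absent, that suggests the NativeRef implementations loaded.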

Accessing External Storage from Spark

Spark can access all storage sources supported by Hadoop, including a local file system, HDFS, HBase, and Amazon S3.

Spark supports many file types, including text files, RCFile, SequenceFile, Hadoop InputFormat, Avro, Parquet, and compression of all supported files.

For developer information about working with external storage, see External Storage in the Spark Programming Guide.

Accessing Compressed Files

You can read compressed files using one of the following methods:

• textFile(path)

• hadoopFile(path, inputFormatClass)

You can save compressed files using one of the following methods:

• saveAsTextFile(path, compressionCodecClass="codec_class")

• saveAsHadoopFile(path, outputFormatClass, compressionCodecClass="codec_class")

where codec_class is one of the classes in Compression Types.
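
As an illustration of the read and save methods above, the following PySpark sketch reads a text file and writes it back out with a chosen codec. The helper name copy_compressed and the paths in the usage note are hypothetical. The sketch assumes that sc is a pyspark.SparkContext and that the Hadoop codec classes named here are available on the cluster.

```python
# Hadoop compression codec class names (assumed available on the cluster).
GZIP = "org.apache.hadoop.io.compress.GzipCodec"
BZIP2 = "org.apache.hadoop.io.compress.BZip2Codec"


def copy_compressed(sc, src_path, dst_path, codec_class=GZIP):
    """Read a text file (compressed or not) and save it using codec_class.

    sc is expected to be a pyspark.SparkContext.
    """
    # textFile picks the decompression codec from the file extension.
    lines = sc.textFile(src_path)
    # compressionCodecClass takes the fully qualified codec class name.
    lines.saveAsTextFile(dst_path, compressionCodecClass=codec_class)
    return lines
```

With a running SparkContext, a call might look like copy_compressed(sc, "hdfs:///tmp/input.txt.gz", "hdfs:///tmp/output_bz2", BZIP2).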

For examples of accessing Avro and Parquet files, see Spark with Avro and Parquet.

Accessing Data Stored in Amazon S3 through Spark

To access data stored in Amazon S3 from Spark applications, you use Hadoop file APIs (SparkContext.hadoopFile, JavaHadoopRDD.saveAsHadoopFile, SparkContext.newAPIHadoopRDD, and JavaHadoopRDD.saveAsNewAPIHadoopFile) for reading and writing RDDs, providing URLs of the form s3a://bucket_name/path/to/file. You can read and write Spark SQL DataFrames using the Data Source API.
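
The URL form can be sketched in PySpark as follows. The helper names s3a_url and read_lines_from_s3 are hypothetical, and the sketch uses SparkContext.textFile as the simplest reader rather than the hadoopFile variants listed above. It assumes that sc is a pyspark.SparkContext and that S3 access is already configured.

```python
def s3a_url(bucket_name, key):
    """Build a URL of the form s3a://bucket_name/path/to/file."""
    if not bucket_name or "/" in bucket_name:
        raise ValueError("bucket_name must be non-empty and must not contain '/'")
    # Drop leading slashes from the key so the URL has a single separator.
    return "s3a://{}/{}".format(bucket_name, key.lstrip("/"))


def read_lines_from_s3(sc, bucket_name, key):
    """Return an RDD of text lines; sc is expected to be a pyspark.SparkContext."""
    return sc.textFile(s3a_url(bucket_name, key))
```

For example, s3a_url("bucket_name", "path/to/file") returns "s3a://bucket_name/path/to/file".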

You can access Amazon S3 by the following methods:

Without credentials:

Run EC2 instances with instance profiles associated with IAM roles that have the permissions you want. Requests from a machine with such a profile authenticate without credentials.

With credentials:

• Specify the credentials in a configuration file, such as core-site.xml:

fs.s3a.access.key
