cloudera-spark


Developing Spark Applications

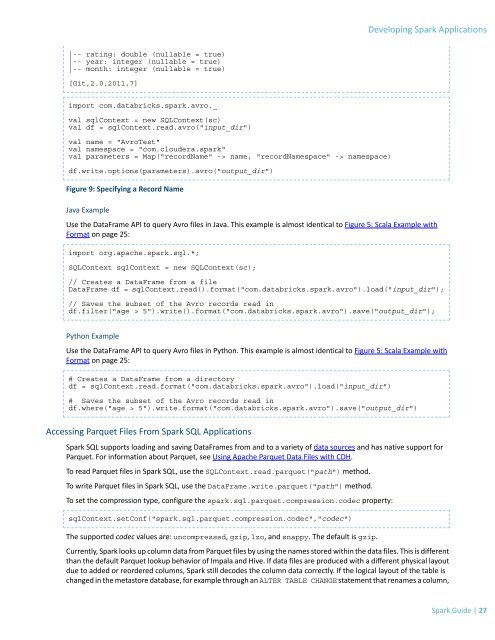

|-- rating: double (nullable = true)
|-- year: integer (nullable = true)
|-- month: integer (nullable = true)

[Git,2.0,2011,7]

import com.databricks.spark.avro._
import org.apache.spark.sql.SQLContext

val sqlContext = new SQLContext(sc)
val df = sqlContext.read.avro("input_dir")

// Record name and namespace to use in the output Avro schema
val name = "AvroTest"
val namespace = "com.cloudera.spark"
val parameters = Map("recordName" -> name, "recordNamespace" -> namespace)

df.write.options(parameters).avro("output_dir")

Figure 9: Specifying a Record Name

Java Example

Use the DataFrame API to query Avro files in Java. This example is almost identical to Figure 5: Scala Example with Format on page 25:

import org.apache.spark.sql.*;

SQLContext sqlContext = new SQLContext(sc);

// Creates a DataFrame from a file
DataFrame df = sqlContext.read().format("com.databricks.spark.avro").load("input_dir");

// Saves the subset of the Avro records read in
df.filter("age > 5").write().format("com.databricks.spark.avro").save("output_dir");

Python Example

Use the DataFrame API to query Avro files in Python. This example is almost identical to Figure 5: Scala Example with Format on page 25:

# Creates a DataFrame from a directory
df = sqlContext.read.format("com.databricks.spark.avro").load("input_dir")

# Saves the subset of the Avro records read in
df.where("age > 5").write.format("com.databricks.spark.avro").save("output_dir")

Accessing Parquet Files From Spark SQL Applications

Spark SQL supports loading and saving DataFrames from and to a variety of data sources and has native support for Parquet. For information about Parquet, see Using Apache Parquet Data Files with CDH.

To read Parquet files in Spark SQL, use the SQLContext.read.parquet("path") method.

To write Parquet files in Spark SQL, use the DataFrame.write.parquet("path") method.
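As a minimal sketch of the two methods above in Python, the helper below reads a Parquet data set, optionally filters it, and writes the result back as Parquet. The function name copy_parquet, its parameters, and the paths in the usage note are hypothetical and not part of the Spark API. The helper assumes an existing SQLContext, such as the sqlContext used in the earlier examples.

```python
def copy_parquet(sql_context, input_path, output_path, condition=None):
    """Read Parquet data, optionally filter it, and write it back as Parquet.

    Hypothetical helper for illustration; sql_context is assumed to be an
    existing pyspark.sql.SQLContext.
    """
    # SQLContext.read.parquet(path) returns a DataFrame
    df = sql_context.read.parquet(input_path)
    if condition is not None:
        # Keep only the rows matching a SQL expression such as "age > 5"
        df = df.where(condition)
    # DataFrame.write.parquet(path) saves the DataFrame in Parquet format
    df.write.parquet(output_path)
    return df
```

For example, copy_parquet(sqlContext, "input_dir", "output_dir", "age > 5") would mirror the Avro examples above, assuming the data has an age column.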

To set the compression type, configure the spark.sql.parquet.compression.codec property:

sqlContext.setConf("spark.sql.parquet.compression.codec","codec")

The supported codec values are: uncompressed, gzip, lzo, and snappy. The default is gzip.
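The codec setting can be wrapped in a small check so that an unsupported value fails early. This is a sketch under assumptions: set_parquet_codec and SUPPORTED_CODECS are hypothetical names, the tuple simply mirrors the values listed above, and sql_context is an existing SQLContext.

```python
# Codec values listed above as supported
SUPPORTED_CODECS = ("uncompressed", "gzip", "lzo", "snappy")


def set_parquet_codec(sql_context, codec):
    """Set the Parquet compression codec on an existing SQLContext.

    Hypothetical helper; raises ValueError for a codec not in the list above.
    """
    if codec not in SUPPORTED_CODECS:
        raise ValueError("Unsupported Parquet codec: %r" % (codec,))
    sql_context.setConf("spark.sql.parquet.compression.codec", codec)
```

Calling set_parquet_codec(sqlContext, "snappy") before df.write.parquet("output_dir") should apply the codec to Parquet files written afterward through that context.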

Currently, Spark looks up column data from Parquet files by using the names stored within the data files. This differs from the default Parquet lookup behavior of Impala and Hive. If data files are produced with a different physical layout due to added or reordered columns, Spark still decodes the column data correctly. If the logical layout of the table is changed in the metastore database, for example through an ALTER TABLE CHANGE statement that renames a column,
