cloudera-spark

Create successful ePaper yourself

Turn your PDF publications into a flip-book with our unique Google optimized e-Paper software.

Running Spark Applications<br />

resources to pools and run the applications in those pools and enable applications running in pools to be preempted.<br />

See Dynamic Resource Pools.<br />

If you are using Spark Streaming, see the recommendation in Spark Streaming and Dynamic Allocation on page 13.<br />

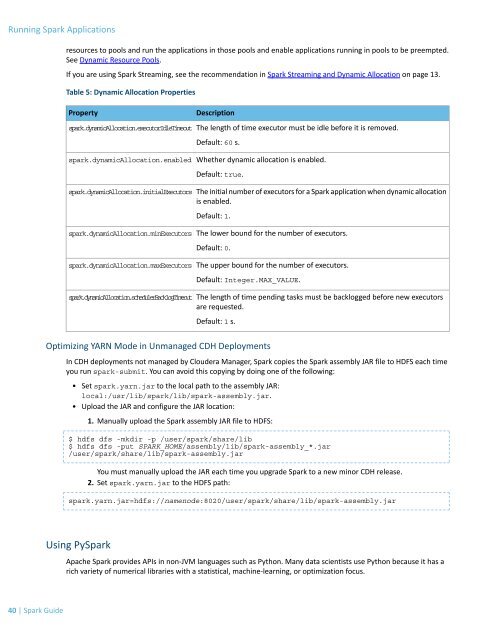

Table 5: Dynamic Allocation Properties<br />

Property<br />

<strong>spark</strong>.dynamicAllocation.executorIdleTimeout<br />

<strong>spark</strong>.dynamicAllocation.enabled<br />

Description<br />

The length of time executor must be idle before it is removed.<br />

Default: 60 s.<br />

Whether dynamic allocation is enabled.<br />

Default: true.<br />

<strong>spark</strong>.dynamicAllocation.initialExecutors<br />

The initial number of executors for a Spark application when dynamic allocation<br />

is enabled.<br />

Default: 1.<br />

<strong>spark</strong>.dynamicAllocation.minExecutors<br />

<strong>spark</strong>.dynamicAllocation.maxExecutors<br />

The lower bound for the number of executors.<br />

Default: 0.<br />

The upper bound for the number of executors.<br />

Default: Integer.MAX_VALUE.<br />

<strong>spark</strong>.dynamicAllocation.schedulerBacklogTimeout<br />

The length of time pending tasks must be backlogged before new executors<br />

are requested.<br />

Default: 1 s.<br />

Optimizing YARN Mode in Unmanaged CDH Deployments<br />

In CDH deployments not managed by Cloudera Manager, Spark copies the Spark assembly JAR file to HDFS each time<br />

you run <strong>spark</strong>-submit. You can avoid this copying by doing one of the following:<br />

• Set <strong>spark</strong>.yarn.jar to the local path to the assembly JAR:<br />

local:/usr/lib/<strong>spark</strong>/lib/<strong>spark</strong>-assembly.jar.<br />

• Upload the JAR and configure the JAR location:<br />

1. Manually upload the Spark assembly JAR file to HDFS:<br />

$ hdfs dfs -mkdir -p /user/<strong>spark</strong>/share/lib<br />

$ hdfs dfs -put SPARK_HOME/assembly/lib/<strong>spark</strong>-assembly_*.jar<br />

/user/<strong>spark</strong>/share/lib/<strong>spark</strong>-assembly.jar<br />

You must manually upload the JAR each time you upgrade Spark to a new minor CDH release.<br />

2. Set <strong>spark</strong>.yarn.jar to the HDFS path:<br />

<strong>spark</strong>.yarn.jar=hdfs://namenode:8020/user/<strong>spark</strong>/share/lib/<strong>spark</strong>-assembly.jar<br />

Using PySpark<br />

Apache Spark provides APIs in non-JVM languages such as Python. Many data scientists use Python because it has a<br />

rich variety of numerical libraries with a statistical, machine-learning, or optimization focus.<br />

40 | Spark Guide