cloudera-spark


Developing Spark Applications

# 'false' makes the Avro input format also read files that do not end in .avro
conf.set('spark.hadoop.avro.mapred.ignore.inputs.without.extension', 'false')
sc = SparkContext(conf=conf)
sqlContext = SQLContext(sc)

Configuration properties take precedence in the following order, highest first:

1. Properties set on SparkConf in the application code.
2. Arguments passed to spark-submit, spark-shell, or pyspark.
3. Properties set in spark-defaults.conf.

For more information, see Spark Configuration.
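To illustrate the precedence order above, the following Python sketch merges three dictionaries, one for each configuration source. It applies them from lowest to highest precedence, so a later source overwrites an earlier one. The function name effective_conf and the dictionary inputs are assumptions made for this example. The sketch models only the outcome, not how Spark resolves properties internally.

```python
# Illustrative model only: not Spark's implementation. It shows which value
# wins when the same property is set in more than one place.
from typing import Dict


def effective_conf(
    defaults_file: Dict[str, str],  # from spark-defaults.conf (lowest precedence)
    submit_args: Dict[str, str],    # from spark-submit/spark-shell/pyspark arguments
    spark_conf: Dict[str, str],     # set on SparkConf in code (highest precedence)
) -> Dict[str, str]:
    """Merge the sources so that higher-precedence values override lower ones."""
    merged: Dict[str, str] = {}
    # Apply sources from lowest to highest precedence; update() overwrites keys.
    for source in (defaults_file, submit_args, spark_conf):
        merged.update(source)
    return merged


if __name__ == "__main__":
    conf = effective_conf(
        defaults_file={"spark.executor.memory": "2g", "spark.eventLog.enabled": "true"},
        submit_args={"spark.executor.memory": "4g"},
        spark_conf={"spark.executor.memory": "8g"},
    )
    print(conf["spark.executor.memory"])   # 8g: the SparkConf value wins
    print(conf["spark.eventLog.enabled"])  # true: set only in spark-defaults.conf
```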

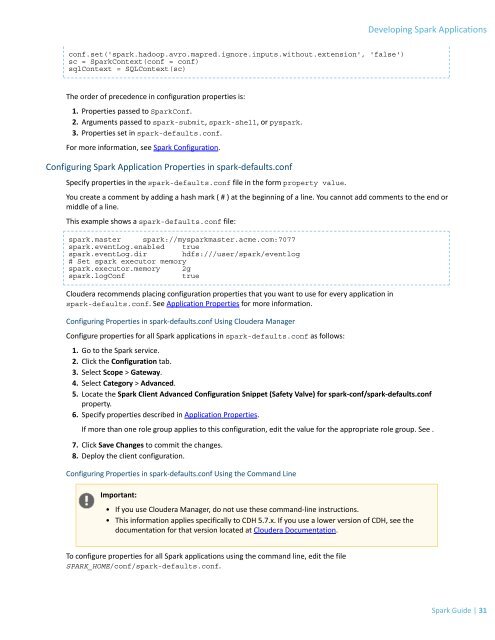

Configuring Spark Application Properties in spark-defaults.conf

Specify each property in spark-defaults.conf on its own line, in the form: property value (the property name, whitespace, then the value).

To create a comment, add a hash mark (#) at the beginning of a line. You cannot add comments at the end or in the middle of a line.

This example shows a spark-defaults.conf file:

spark.master spark://mysparkmaster.acme.com:7077
spark.eventLog.enabled true
spark.eventLog.dir hdfs:///user/spark/eventlog
# Set spark executor memory
spark.executor.memory 2g
spark.logConf true

Cloudera recommends placing configuration properties that you want to use for every application in spark-defaults.conf. See Application Properties for more information.
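The following simplified Python sketch shows a reader for spark-defaults.conf that follows the rules described above. Each line holds one property and its value, separated by whitespace, and a line is a comment only when it starts with a hash mark. The function parse_spark_defaults is hypothetical. Spark's own loader is based on java.util.Properties, which accepts more syntax (for example, = or : as separators), so treat this sketch as an approximation.

```python
# Simplified sketch, assuming the whitespace-separated "property value" format.
# Not Spark's parser: Spark loads this file via java.util.Properties.
from typing import Dict


def parse_spark_defaults(text: str) -> Dict[str, str]:
    """Parse spark-defaults.conf text into a {property: value} dict."""
    props: Dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and whole-line comments (hash mark at line start).
        if not line or line.startswith("#"):
            continue
        # Split on the first run of whitespace: property name, then value.
        parts = line.split(None, 1)
        key = parts[0]
        value = parts[1].strip() if len(parts) > 1 else ""
        # A "#" later in the line is not a comment; it stays in the value.
        props[key] = value
    return props


if __name__ == "__main__":
    sample = """\
spark.master spark://mysparkmaster.acme.com:7077
spark.eventLog.enabled true
# Set spark executor memory
spark.executor.memory 2g
"""
    props = parse_spark_defaults(sample)
    print(props["spark.executor.memory"])  # 2g
    print(len(props))                      # 3
```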

Configuring Properties in spark-defaults.conf Using Cloudera Manager

Configure properties for all Spark applications in spark-defaults.conf as follows:

1. Go to the Spark service.
2. Click the Configuration tab.
3. Select Scope > Gateway.
4. Select Category > Advanced.
5. Locate the Spark Client Advanced Configuration Snippet (Safety Valve) for spark-conf/spark-defaults.conf property.
6. Specify properties described in Application Properties.

   If more than one role group applies to this configuration, edit the value for the appropriate role group. See .

7. Click Save Changes to commit the changes.
8. Deploy the client configuration.

Configuring Properties in spark-defaults.conf Using the Command Line

Important:

• If you use Cloudera Manager, do not use these command-line instructions.
• This information applies specifically to CDH 5.7.x. If you use an earlier version of CDH, see the documentation for that version located at Cloudera Documentation.

To configure properties for all Spark applications using the command line, edit the file SPARK_HOME/conf/spark-defaults.conf.
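If you script this edit, a minimal sketch might look like the following. The helpers defaults_path and set_spark_default are hypothetical and are not part of Spark or CDH. The sketch assumes that the SPARK_HOME environment variable points at the Spark installation and that entries use the whitespace-separated property value form. It replaces an existing entry for the property, or appends a new line if none exists, and leaves comments and other properties untouched.

```python
# Hypothetical helpers (not part of Spark or CDH): a sketch of editing
# SPARK_HOME/conf/spark-defaults.conf from a script.
import os
import tempfile
from typing import List, Optional


def defaults_path(spark_home: Optional[str] = None) -> str:
    """Return the path of spark-defaults.conf under SPARK_HOME."""
    home = spark_home or os.environ["SPARK_HOME"]
    return os.path.join(home, "conf", "spark-defaults.conf")


def set_spark_default(path: str, key: str, value: str) -> None:
    """Set key to value, replacing existing entries or appending a new line."""
    lines: List[str] = []
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            lines = f.read().splitlines()

    new_line = f"{key} {value}"
    replaced = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # keep blank lines and comments as they are
        if stripped.split(None, 1)[0] == key:
            lines[i] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)

    with open(path, "w", encoding="utf-8") as f:
        f.write("\n".join(lines) + "\n")


if __name__ == "__main__":
    # Demo against a temporary directory standing in for SPARK_HOME.
    with tempfile.TemporaryDirectory() as spark_home:
        os.makedirs(os.path.join(spark_home, "conf"))
        path = defaults_path(spark_home)
        set_spark_default(path, "spark.executor.memory", "2g")
        set_spark_default(path, "spark.executor.memory", "4g")
        with open(path, encoding="utf-8") as f:
            print(f.read(), end="")  # spark.executor.memory 4g
```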

Spark Guide | 31