FIAS Scientific Report 2011 - Frankfurt Institute for Advanced Studies ...

Neurally plausible and statistically optimal approaches to learning

Collaborators: J. Lücke¹, J. A. Shelton¹, A. S. Sheikh¹, J. Bornschein¹, Z. Dai¹, C. Savin², P. Berkes³

¹ Frankfurt Institute for Advanced Studies, ² Computational and Biological Learning Lab, Dept of Engineering, University of Cambridge, UK, ³ Visual Information Processing and Learning Lab, Life Science, Brandeis University, USA

The brain's ability to recognize and interpret images, sounds and other sensory data is so far unmatched by any artificial system. Neural circuits acquire much, perhaps most, of this ability through a long process of small improvements while being exposed to sensory stimuli. We call this process 'learning', and it has been recognized as the key to building intelligent systems. In our studies we bring together the information-theoretic foundations of learning and learning in neural circuits. Our results help to improve the understanding of brain functions and to build functional approaches for scientific data analysis and computer vision.

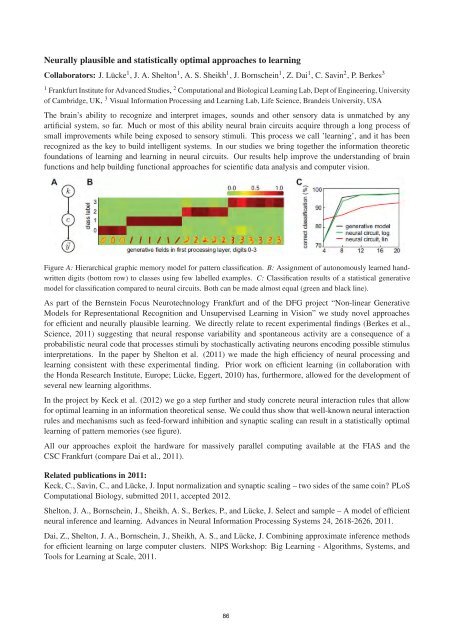

Figure. A: Hierarchical graphic memory model for pattern classification. B: Assignment of autonomously learned handwritten digits (bottom row) to classes using few labelled examples. C: Classification results of a statistical generative model compared to those of neural circuits. The two can be made almost equal (green and black lines).

As part of the Bernstein Focus Neurotechnology Frankfurt and of the DFG project “Non-linear Generative Models for Representational Recognition and Unsupervised Learning in Vision” we study novel approaches for efficient and neurally plausible learning. Our work relates directly to recent experimental findings (Berkes et al., Science, 2011) suggesting that neural response variability and spontaneous activity are a consequence of a probabilistic neural code that processes stimuli by stochastically activating neurons encoding possible stimulus interpretations. In Shelton et al. (2011) we showed that the high efficiency of neural processing and learning can be made consistent with these experimental findings. Prior work on efficient learning (in collaboration with the Honda Research Institute Europe; Lücke and Eggert, 2010) has furthermore allowed for the development of several new learning algorithms.
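
The idea of stochastically activating neurons that encode possible stimulus interpretations, combined with the efficiency requirement, can be illustrated with a small sketch. It is not the model of Shelton et al. (2011). It assumes a toy binary sparse coding model (input = sum of active units' weight vectors plus Gaussian noise), a hypothetical `select` function that preselects the best-matching hidden units, and Gibbs sampling restricted to those units. All function names and parameters (`select`, `gibbs_sample`, `prior`, `sigma`) are illustrative assumptions.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def select(W, y, n_select):
    """Return indices of the n_select units whose weights best match y.

    W holds one weight vector per hidden unit (assumed layout); the
    score is the normalized dot product between weights and input.
    """
    def score(w):
        norm = math.sqrt(sum(v * v for v in w))
        return sum(a * b for a, b in zip(w, y)) / norm

    ranked = sorted(range(len(W)), key=lambda h: score(W[h]), reverse=True)
    return ranked[:n_select]


def gibbs_sample(W, y, selected, prior, sigma, n_sweeps, rng):
    """Gibbs-sample binary states of the selected units only.

    Assumed model: y = sum_h s_h * W[h] + Gaussian noise (std sigma),
    with independent Bernoulli(prior) units. Unselected units stay 0.
    """
    s = [0] * len(W)
    samples = []
    for _ in range(n_sweeps):
        for h in selected:
            # Residual of the input with unit h switched off.
            r = [yd - sum(s[k] * W[k][d] for k in range(len(W)) if k != h)
                 for d, yd in enumerate(y)]
            # Change in squared error caused by switching unit h on.
            delta = sum((rd - wd) ** 2 - rd ** 2 for rd, wd in zip(r, W[h]))
            log_odds = math.log(prior / (1.0 - prior)) - delta / (2.0 * sigma ** 2)
            s[h] = 1 if rng.random() < sigmoid(log_odds) else 0
        samples.append(list(s))
    return samples
```

In this sketch the cost of one sampling sweep grows with the number of selected units rather than with the total number of hidden units, which is the efficiency argument in miniature.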

In the project by Keck et al. (2012) we go a step further and study concrete neural interaction rules that allow for learning that is optimal in an information-theoretic sense. We show that well-known neural interaction rules and mechanisms such as feed-forward inhibition and synaptic scaling can result in statistically optimal learning of pattern memories (see figure).

All our approaches exploit the hardware for massively parallel computing available at FIAS and the CSC Frankfurt (compare Dai et al., 2011).

Related publications in 2011:

Keck, C., Savin, C., and Lücke, J. Input normalization and synaptic scaling – two sides of the same coin? PLoS Computational Biology, submitted 2011, accepted 2012.

Shelton, J. A., Bornschein, J., Sheikh, A. S., Berkes, P., and Lücke, J. Select and sample – A model of efficient neural inference and learning. Advances in Neural Information Processing Systems 24, 2618-2626, 2011.

Dai, Z., Shelton, J. A., Bornschein, J., Sheikh, A. S., and Lücke, J. Combining approximate inference methods for efficient learning on large computer clusters. NIPS Workshop: Big Learning - Algorithms, Systems, and Tools for Learning at Scale, 2011.
